Storage and High Availability in Windows Server 2012 R2

Hello everyone, dear colleagues and fans of IT technology!

Today I would like to tell you about what is new in managing disk subsystems and data storage in Windows Server 2012 R2. Windows Server 2012 already brought many improvements to data storage and to working with disk arrays and SANs, yet the R2 release has an impressive list of its own: brand-new features on the one hand, and evolutionary improvements to the features introduced by its predecessor on the other.

So let's try to figure out what is fundamentally new in Windows Server 2012 R2, and what continues to evolve rapidly in the new incarnation of our wonderful server product.


I want storage that is big, fast, cool... and fairly inexpensive!

As a rule, things like high-performance disk storage and smooth, on-the-fly scaling are associated first of all with big, expensive hardware that costs about as much as a cast-iron bridge.

On the other hand, not every company can afford such a storage system, and building a SAN is, frankly, a difficult, resource-intensive, and expensive business.

If we briefly sum up the requirements that everyone wants to satisfy for as little money as possible, we get the following picture:

Figure 1. Capabilities of the storage platform based on Windows Server 2012 R2.

The left side of the figure lists the challenges companies usually face when implementing storage systems. The right side lists the features built into Windows Server 2012 R2 that are designed to address them. To be more specific, let's look at what is usually required of a modern, high-performance, "smart" storage system:

1) The storage system must tolerate component failures. The components in question are typically the disk array controllers, the disk shelves attached directly to them, and the disks themselves, or more precisely the arrays and aggregates built from those disks. The connection interfaces, usually FC or iSCSI adapters, should also be redundant, and disk access should use multipathing, that is, MPIO (Multipath I/O). In practice you will also meet CNAs (Converged Network Adapters), a newer trend in data center and storage networking: the adapter uses Ethernet as the medium for both LAN and SAN traffic, and its operating mode can be switched dynamically from LAN to SAN and back. The DCB (Data Center Bridging) standard was developed to support the CNA approach and make converged networks easier to manage; DCB, by the way, has been supported in Windows Server since the 2012 release.

2) Modern storage should be "smart", which shows in features such as data deduplication, thin provisioning, and virtualization of the disk subsystem. Intelligent tiering, which distributes load across disk types, does not hurt either.

3) A modern storage system should be manageable with any convenient tool, preferably one built into the OS. In real life the IT environment is heterogeneous, which means administrators manage storage systems from different vendors, and that complicates the task. It would be nice to rely on vendor-neutral management standards. Examples are SMI-S (Storage Management Initiative Specification) and SMP (Storage Management Provider).

Now let's look at this picture in terms of what Windows Server 2012 R2 can do. Multipath support for building what are, in effect, storage clusters has been in Windows Server since time immemorial; it is implemented by the adapter driver together with an OS feature, so there are no problems there. As for convergence, I have already mentioned support for CNA and DCB.
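To make the multipath idea more tangible, here is a minimal Python sketch of round-robin path selection with failover. It is only an illustration of the principle, not how the Windows MPIO driver is implemented; the class name `MultipathDevice` and the path names `fc0` and `fc1` are made up for the example.

```python
from itertools import cycle


class MultipathDevice:
    """Hypothetical model: round-robin over healthy paths, skipping failed ones."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.healthy = set(self.paths)
        self._ring = cycle(self.paths)

    def fail(self, path):
        """Mark a path (adapter, cable, switch port) as unavailable."""
        self.healthy.discard(path)

    def next_path(self):
        """Return the next healthy path, or raise if none is left."""
        if not self.healthy:
            raise RuntimeError("all paths to the storage are down")
        for path in self._ring:
            if path in self.healthy:
                return path


dev = MultipathDevice(["fc0", "fc1"])
before = [dev.next_path() for _ in range(4)]   # load is spread over both paths
dev.fail("fc0")                                # one adapter dies
after = [dev.next_path() for _ in range(3)]    # I/O continues over the survivor
print(before, after)
```

While both paths are alive the requests alternate between them; after `fc0` fails, everything goes through `fc1` and the caller notices nothing.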

The second part, however, is where there is plenty to gain (that is, plenty to talk about).

So, let's start with data deduplication. This functionality first appeared in Windows Server 2012, and in WS2012/2012 R2 it works at the block level. Let me remind you, colleagues, that deduplication can work at three levels, and the level determines both how effective it is and how many resources it consumes.

The "lightest" option is file-level deduplication. One example is SIS (Single Instance Storage), a technology that has already sunk into oblivion. As you might guess, it works on whole files and replaces exact duplicates with links to the original. Replacing real data with links is the general principle behind all deduplication. But as soon as we change a deduplicated file, it becomes unique and starts consuming real space again, so this scenario is not the most attractive.

Block-level deduplication therefore looks like the better solution: even if the original file changes, only the changed blocks consume space, not the whole file. This is how data deduplication works in WS2012/2012 R2.

For completeness, there is also bit-level deduplication, which, as the name suggests, operates on bits. It achieves the highest deduplication ratio, BUT it is hellishly resource-intensive. As a rule, it is used in traffic optimization systems for geographically distributed organizations whose links between offices are either very expensive to operate or have very low throughput. In essence, such devices cache the traffic that passes through them and transmit only the unique bits. Solutions of this class come both as hardware and as virtual appliances (virtual machines with software that implements the corresponding functionality).
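The difference between file-level and block-level deduplication is easy to see on a toy example. The Python sketch below is an illustration under simplifying assumptions: the block size is fixed and tiny (4 bytes), the "files" are short byte strings, and the function names are mine. It says nothing about the internal format that Windows Server actually uses.

```python
import hashlib

BLOCK_SIZE = 4  # assumption: tiny fixed-size blocks, chosen only to keep the example readable


def file_level_size(files):
    """Bytes stored when only fully identical files are collapsed into one copy."""
    unique = {hashlib.sha256(data).hexdigest(): len(data) for data in files.values()}
    return sum(unique.values())


def block_level_size(files, block_size=BLOCK_SIZE):
    """Bytes stored when every distinct block is kept exactly once."""
    store = {}
    for data in files.values():
        for offset in range(0, len(data), block_size):
            block = data[offset:offset + block_size]
            store[hashlib.sha256(block).hexdigest()] = len(block)
    return sum(store.values())


files = {
    "a.vhd": b"AAAABBBBCCCCDDDD",
    "b.vhd": b"AAAABBBBCCCCDDDD",   # exact copy of a.vhd
    "c.vhd": b"AAAABBBBXXXXDDDD",   # same file with one block changed
}

raw = sum(len(data) for data in files.values())
print(raw, file_level_size(files), block_level_size(files))  # prints: 48 32 20
```

File-level deduplication saves only the exact copy, while the block-level variant also reuses the three unchanged blocks of the modified file, which is exactly the effect described above.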

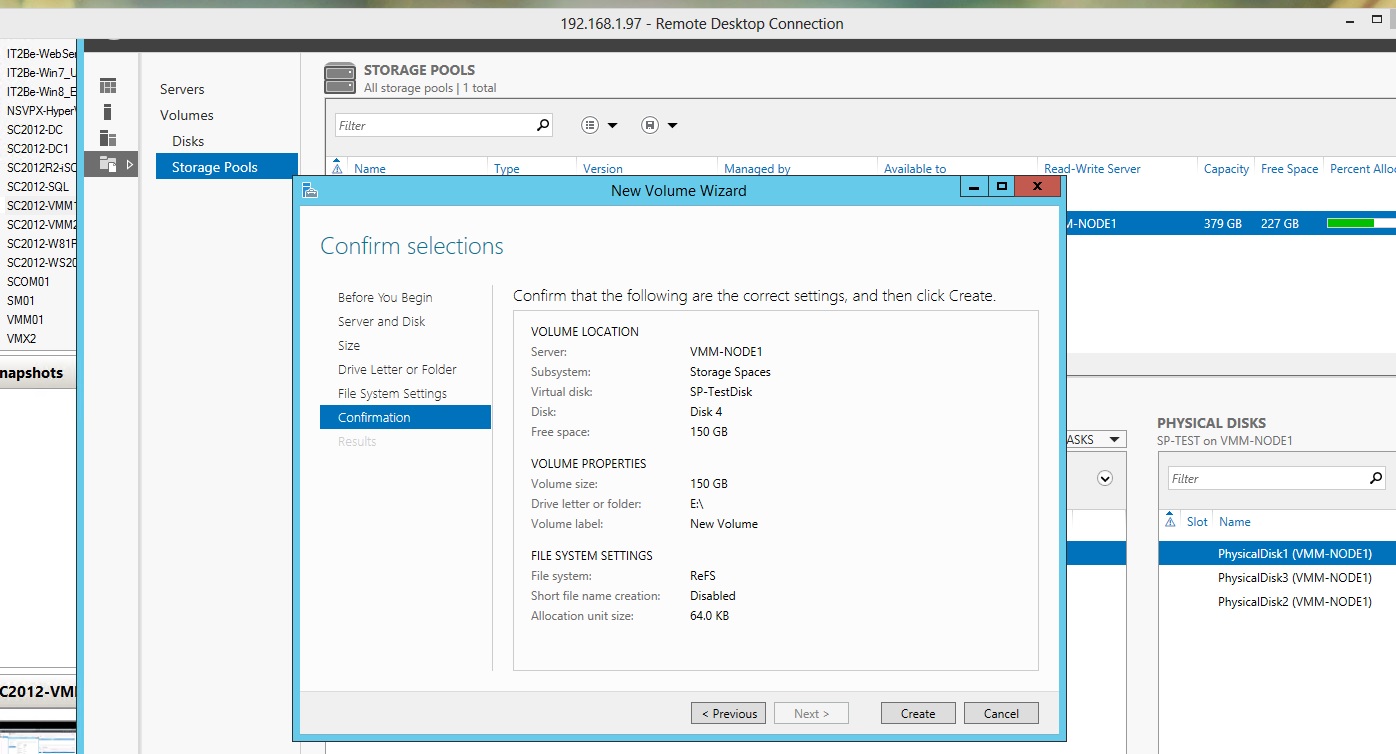

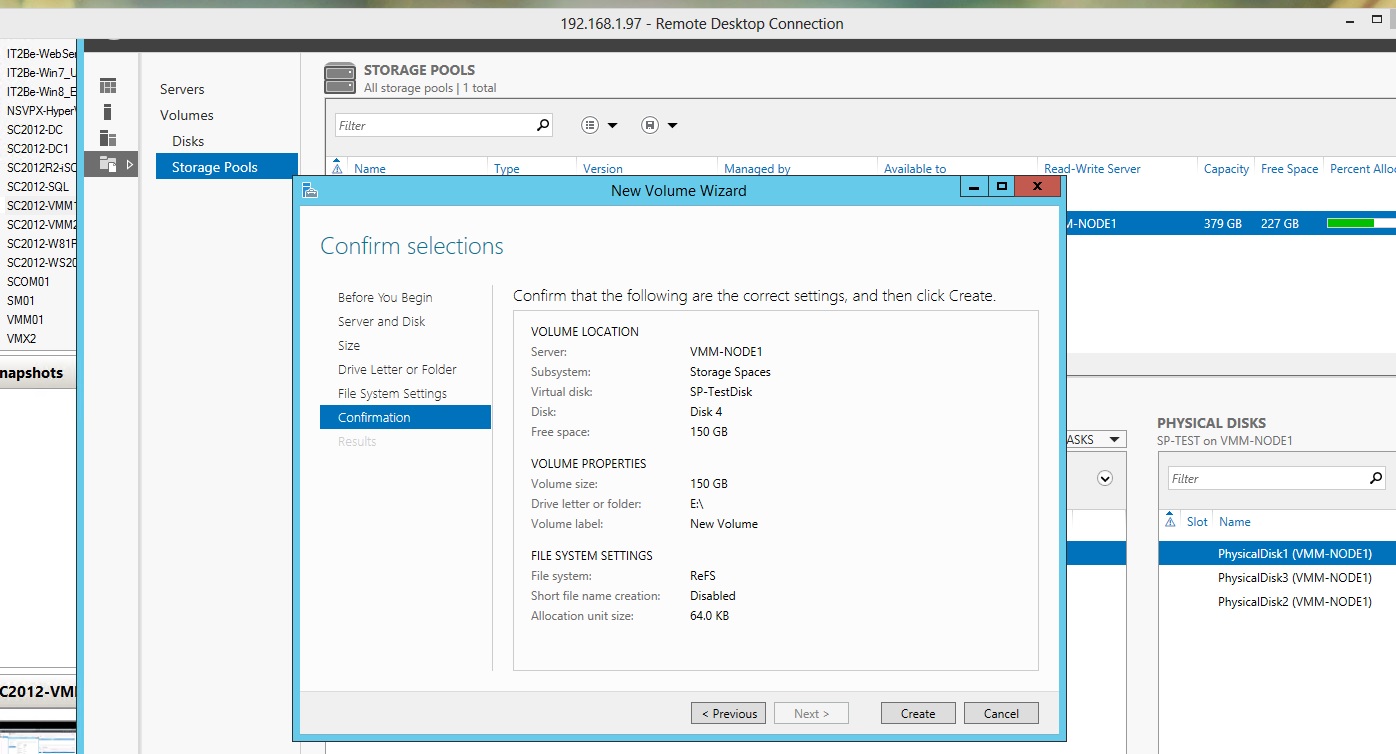

Everything would have been great with deduplication, if not for one small "but". In WS2012, deduplication could not be applied to online data, that is, data in use by some process. That made it impossible to deduplicate running VMs, which killed much of the appeal of the approach. The improvements in WS2012 R2, however, allow deduplication of active VHD/VHDX files, and it is also effective for VHD/VHDX libraries, shares with software distributions, and file shares in general. Below are the deduplication efficiency figures for my home server, which holds a bunch of VMs and VHD/VHDX disks.

Figure 2. Deduplication efficiency in Windows Server 2012 R2.

Thin provisioning can also be applied to VMs. If we create one template, the parent disk, and build differencing disks from it, then on the one hand we keep the guest OS consistent and uniform across VMs, and on the other we reduce the space taken by VM disks. The one subtle point in this scenario is that the parent virtual hard disk should sit on fast storage, because parallel access to the same disk from many VMs puts a heavy load on the same sectors and data blocks. That is, the choice is either an SSD or virtualized storage. Intelligent tiering comes in handy here as well.
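The parent-plus-differencing-disk scheme boils down to copy-on-write, and a few lines of Python are enough to show it. This is a conceptual sketch, not the VHD/VHDX format: the classes `ParentDisk` and `DifferencingDisk` and the block contents are invented for the illustration.

```python
class ParentDisk:
    """Hypothetical read-only base image shared by many VMs."""

    def __init__(self, blocks):
        self._blocks = dict(blocks)

    def read(self, index):
        return self._blocks.get(index, b"\x00")


class DifferencingDisk:
    """Stores only the blocks that differ from the parent (copy-on-write)."""

    def __init__(self, parent):
        self.parent = parent
        self.changed = {}

    def read(self, index):
        # A changed block shadows the parent; everything else falls through to it.
        if index in self.changed:
            return self.changed[index]
        return self.parent.read(index)

    def write(self, index, data):
        self.changed[index] = data

    def blocks_used(self):
        return len(self.changed)


parent = ParentDisk({0: b"boot", 1: b"os", 2: b"apps"})
vm1 = DifferencingDisk(parent)
vm2 = DifferencingDisk(parent)
vm1.write(2, b"apps-v2")   # only vm1 pays for the block it changed

print(vm1.read(2), vm2.read(2), vm1.blocks_used(), vm2.blocks_used())
```

Notice that every unchanged read from every VM lands on the same parent object, which is why the parent disk is the one that deserves the fastest storage.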

Virtualization... and what is that like?

But let's take things in order.

Let's go back to the virtualization of the disk subsystem.

Virtualization of the disk subsystem is, historically, a very old topic. If we remember that virtualization is an abstraction from the physical layer, that is, hiding the underlying level, then even the RAID controller on a motherboard performs storage virtualization: it virtualizes the disks themselves.

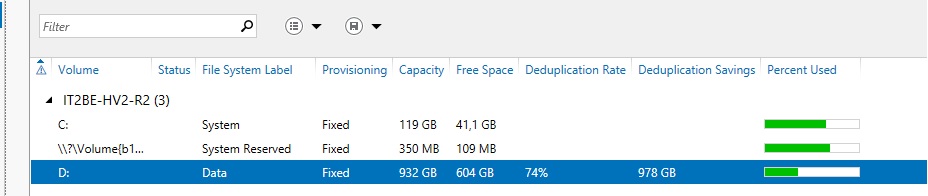

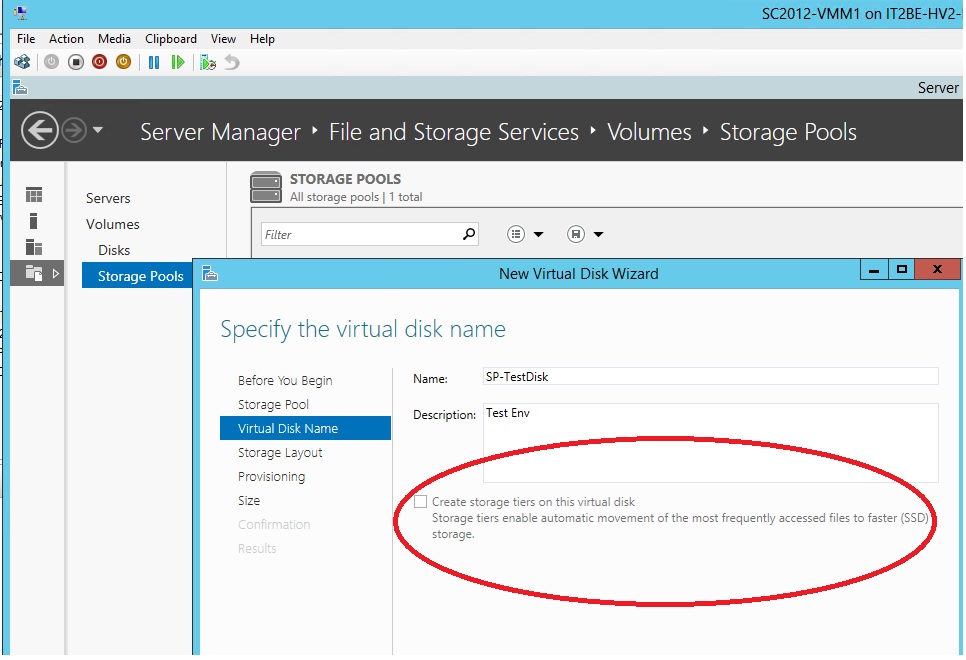

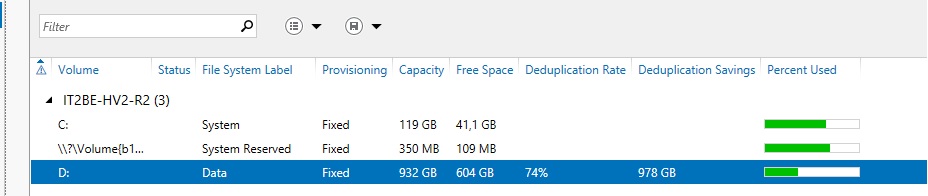

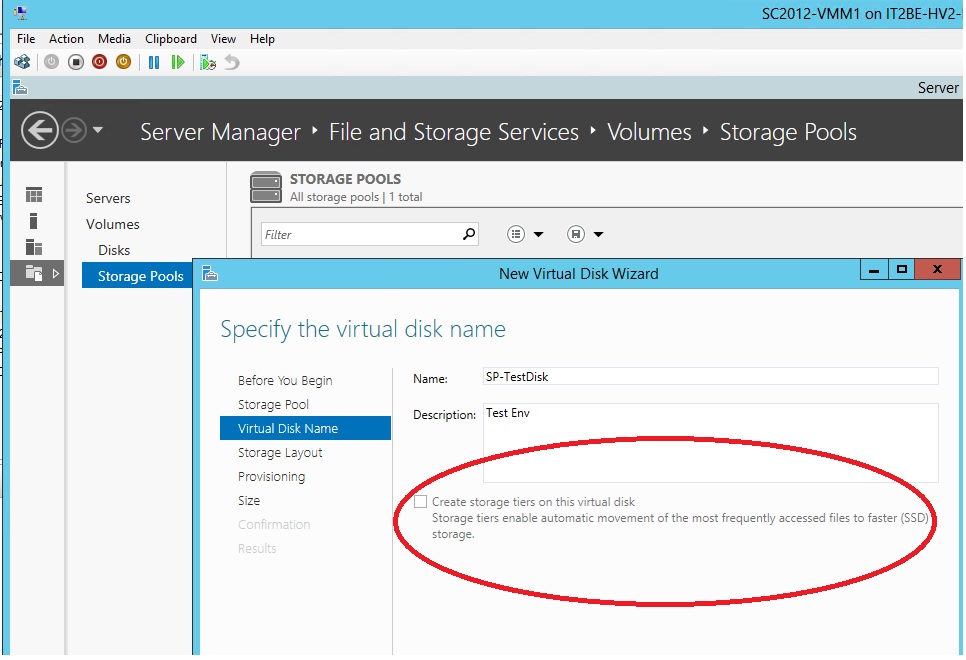

WS2012, however, introduced Storage Spaces, essentially an analogue of a RAID controller implemented at the level of a dedicated I/O driver in the OS. The types of logical disks it creates closely resemble RAID levels: Simple (RAID 0), Mirror (RAID 1), and Parity (RAID 5). WS2012 R2 adds intelligent storage tiering to this mechanism. In other words, you can now build a pool from different types of disks (SATA, SAS, and SSD) combined into one unit, and WS2012 R2 will distribute the load across them intelligently, depending on the type and intensity of that load.
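For those who have forgotten why a parity layout survives a disk failure, here is a small Python sketch of the RAID 5 idea: parity is the XOR of the data blocks, so any single lost block can be rebuilt from the rest. The function names are mine, the capacity figures assume a two-way mirror and single parity, and the sketch ignores details such as parity rotation across disks, so treat it as an illustration of the principle rather than a description of Storage Spaces internals.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def usable_fraction(layout, disks):
    """Rough usable share of raw capacity (assumes two-way mirror, single parity)."""
    if layout == "simple":
        return 1.0
    if layout == "mirror":
        return 0.5
    if layout == "parity":
        return (disks - 1) / disks
    raise ValueError(f"unknown layout: {layout}")


data = [b"disk", b"zero", b"four"]   # one stripe spread over three data disks
parity = xor_blocks(data)            # kept on a fourth disk

# Simulate losing the second disk and rebuilding it from the survivors plus parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt, usable_fraction("parity", 4))  # prints: b'zero' 0.75
```

The price of that protection is visible in the second number: with four disks in a parity layout, a quarter of the raw capacity goes to parity.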

Figure 3. Enabling intelligent tiering when creating a logical disk with Storage Spaces.
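The idea behind tiering can be reduced to a very simple rule: count how often each chunk of data is touched and keep the hottest chunks on the SSDs. The Python sketch below shows that rule on an access log of made-up chunk ("slab") numbers. The function `plan_tiers` and its parameters are hypothetical, and the real placement logic in Windows Server is certainly more involved than a plain top-N selection.

```python
from collections import Counter


def plan_tiers(access_log, ssd_slots):
    """Return (ssd, hdd) sets of slab ids: the most-accessed slabs go to the SSD tier."""
    heat = Counter(access_log)
    # Sort by descending heat, then by slab id so that ties are resolved deterministically.
    ranked = sorted(heat, key=lambda slab: (-heat[slab], slab))
    ssd = set(ranked[:ssd_slots])
    hdd = set(ranked[ssd_slots:])
    return ssd, hdd


log = [1, 2, 1, 3, 1, 2, 4, 1, 2, 5]   # slab ids in the order they were accessed
ssd, hdd = plan_tiers(log, ssd_slots=2)
print(sorted(ssd), sorted(hdd))        # prints: [1, 2] [3, 4, 5]
```

With room for two slabs on the fast tier, slabs 1 and 2 (four and three accesses) are promoted, while the rarely used ones stay on the slower disks.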

Reliability, reliability, and reliability again

Since we are talking about storage, we cannot ignore data reliability, or rather the reliability of data placement. There are several important points.

With the arrival of WS2012 we gained the ability to place workloads on file shares accessed over SMB 3.0. Here, of course, we remember to duplicate storage components: network adapters can be made redundant with the built-in NIC Teaming feature (aggregation of network interfaces), and SMB Multichannel plays the role of MPIO, but at the SMB protocol level. That protects us from a failed link; just don't forget to duplicate the switches too. For continuity of VM hosting, and of file access in general, we can deploy a Scale-Out File Server (SOFS) and place critical data on it, which gives us not just high availability but continuous access to data. Add to this the ability of WS2012 R2 to use the ReFS file system for CSV volumes when building a cluster, and there you have it: high reliability and file system error correction almost on the fly, without stopping work! I should add that ReFS had some teething problems in its first version in WS2012, but it has since recovered and is now a truly resilient file system!

SMB encryption is another useful feature for those who care about storing data securely and controlling access to it.

Another interesting feature is Storage QoS, a mechanism for controlling the throughput and performance of the disk subsystem. It is both interesting and important: it lets you apply policies to the workloads placed on the storage and pass this data on to System Center Virtual Machine Manager 2012/2012 R2.
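To show what "controlling throughput" means in practice, here is a sketch of the classic token bucket technique that caps the number of I/O operations per second. I am not claiming that Storage QoS is built this way; the class `IopsLimiter`, its `max_iops` parameter, and the explicit `now` timestamps are assumptions that keep the example deterministic.

```python
class IopsLimiter:
    """Token bucket: at most `max_iops` operations are admitted per second."""

    def __init__(self, max_iops):
        self.max_iops = max_iops
        self.tokens = float(max_iops)   # start with a full bucket
        self.last = 0.0

    def allow(self, now):
        """Return True if an I/O arriving at time `now` (in seconds) may proceed."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to the elapsed time, never above the bucket size.
        self.tokens = min(self.max_iops, self.tokens + elapsed * self.max_iops)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


limiter = IopsLimiter(max_iops=3)
burst = [limiter.allow(now=0.0) for _ in range(5)]   # five requests at the same instant
later = limiter.allow(now=1.0)                       # a second later the bucket is full again
print(burst, later)
```

A noisy VM that fires five requests at once gets only three of them through immediately, and its neighbours on the same storage keep their share of the disk.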

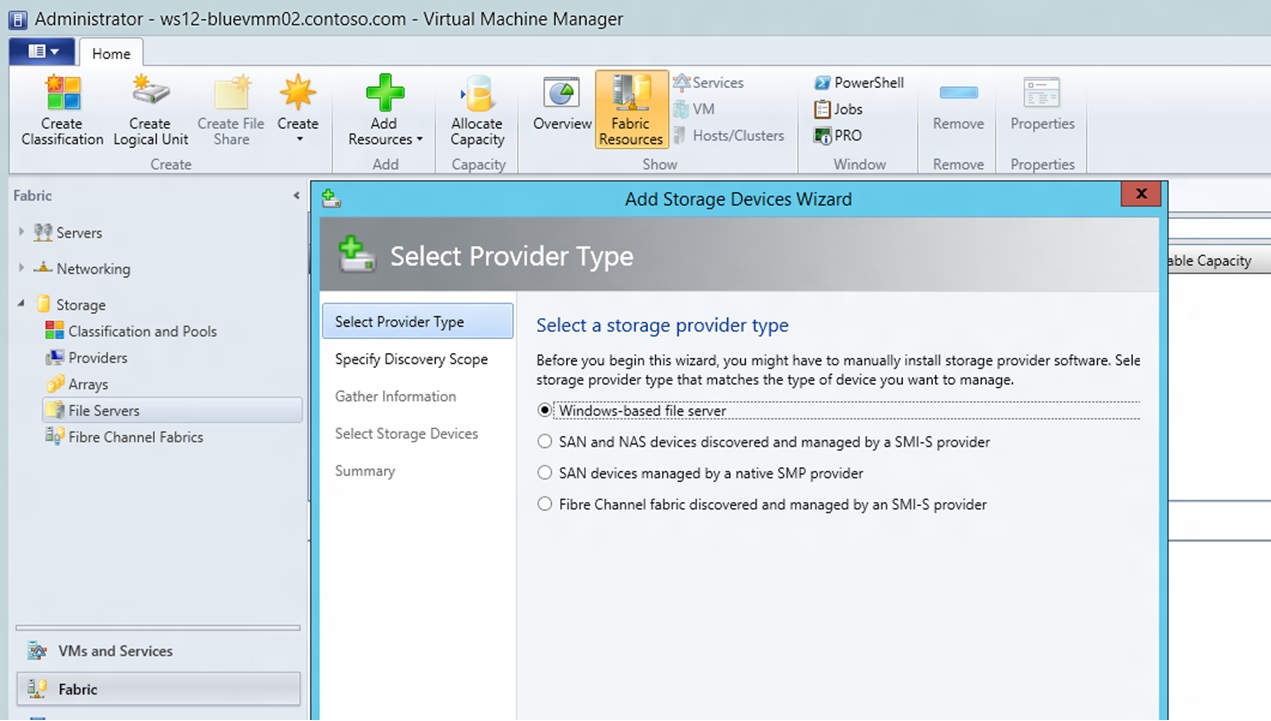

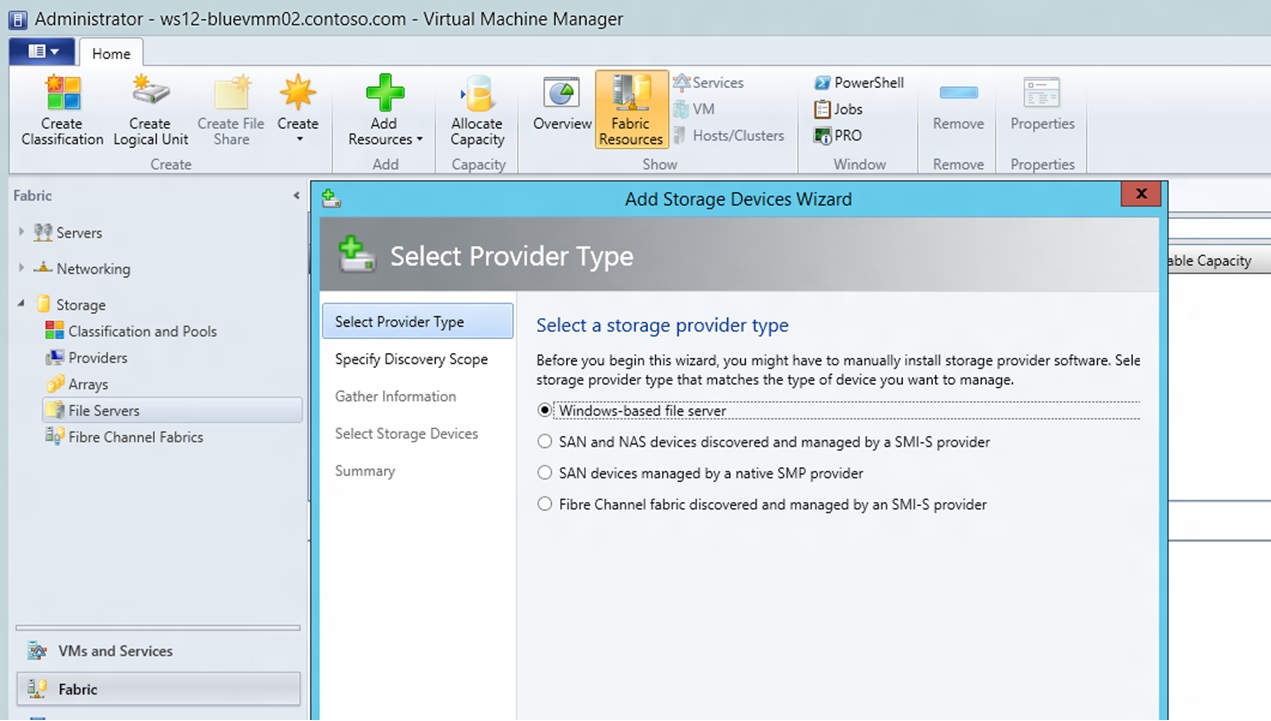

And finally, on the management side, both Windows Server 2012 R2 and System Center 2012 R2 support a range of vendor-neutral management mechanisms: SMI-S, SMP, and WMI.

Figure 5. Storage management capabilities and how they are exposed in System Center 2012 R2 Virtual Machine Manager.

Is this the end? No, it is just the beginning!

I can only add that the R2 products are currently available as preview releases, which you can download here:

1) Windows Server 2012 R2 Preview - technet.microsoft.com/en-US/evalcenter/dn205286

2) System Center 2012 R2 Preview - technet.microsoft.com/en-US/evalcenter/dn205295

I hope this was useful and interesting. Until next time, and have a good weekend!

P.S. I forgot to mention that on September 10 Alexander Shapoval and I will hold a free online seminar, open to everyone, on what is new in Windows Server 2012 R2. Join us, it will be fiery! Sign up here: technet.microsoft.com/ru-ru/dn320171

With respect and a fiery motor in the heart,

Fireman,

concurrently,

Information Infrastructure Expert

Microsoft Corporation

George A. Gadzhiev


Source: https://habr.com/ru/post/191962/
