XAP (Shitty Architecture Ruins)

Yesterday I wrote my first article on Habr without knowing the local subtleties.

I'm fixing that! Now in plain language and with humor!

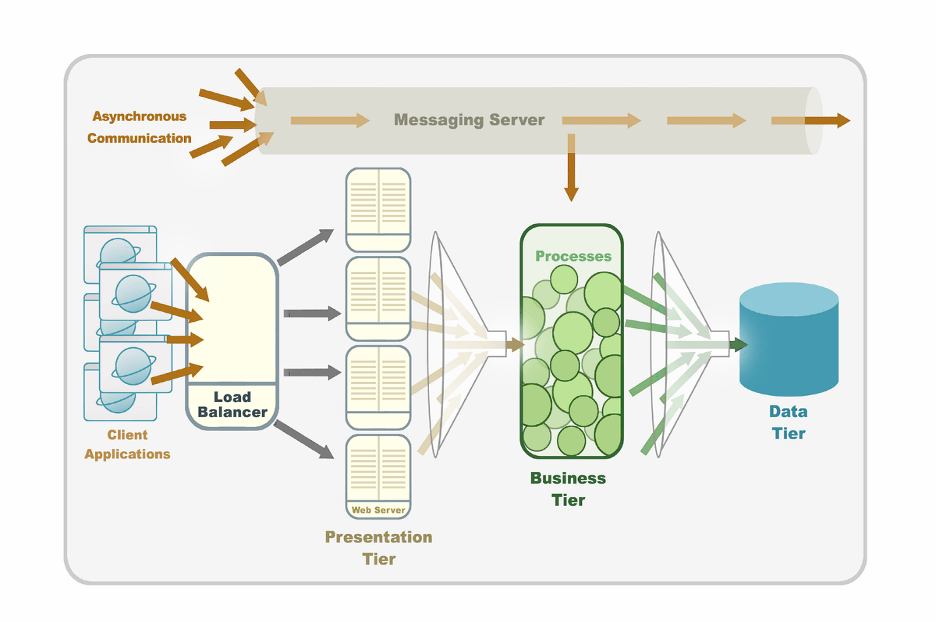

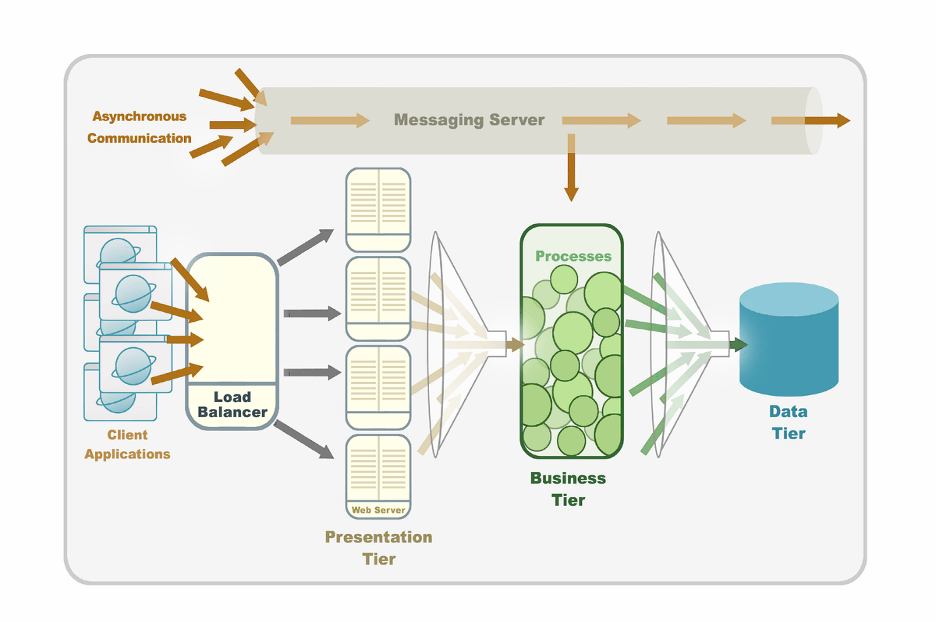

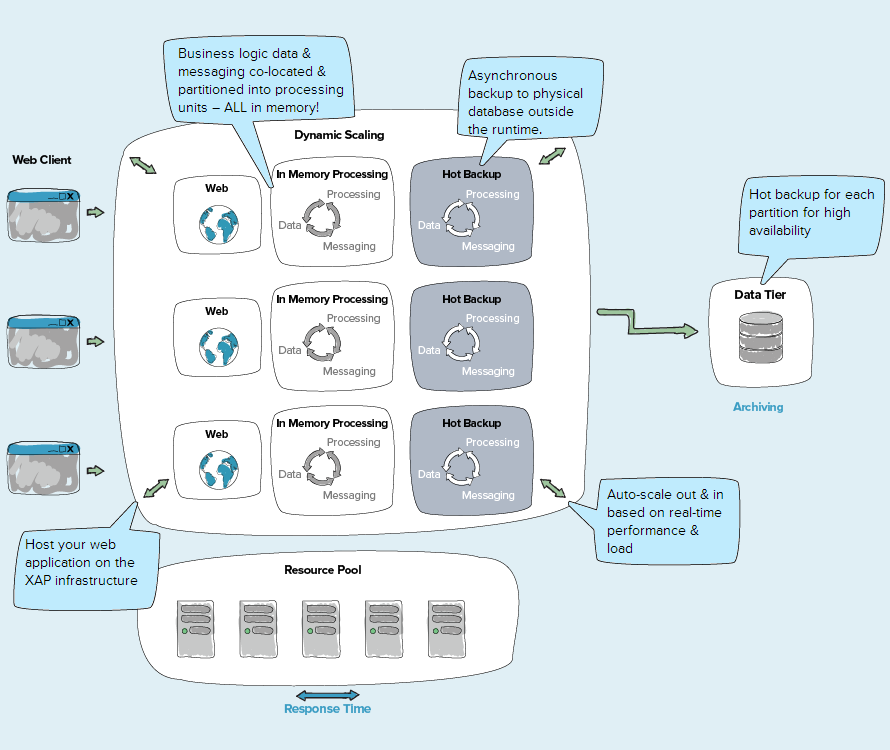

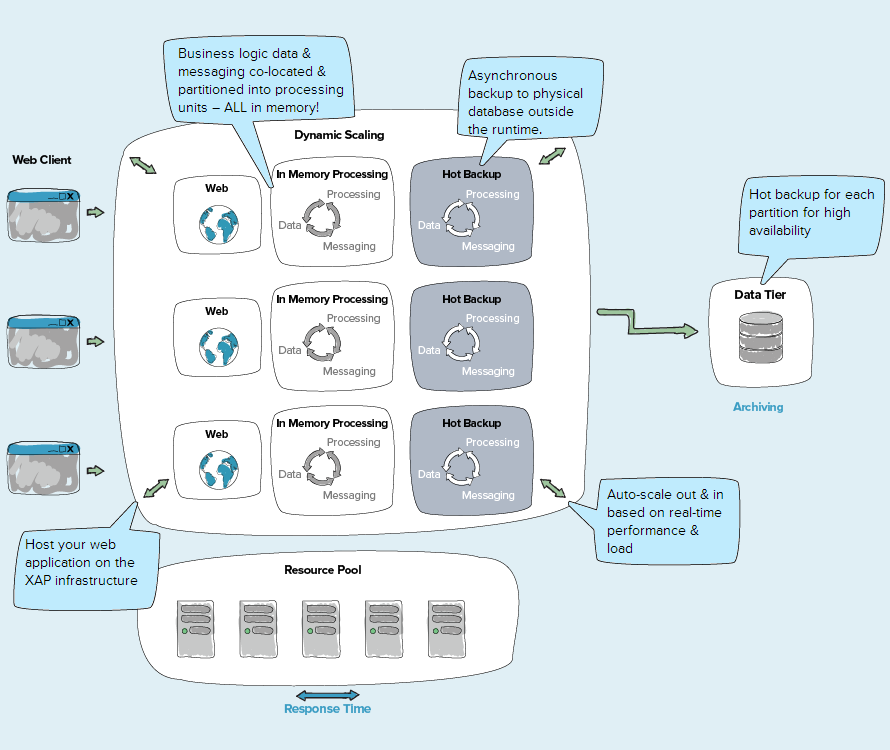

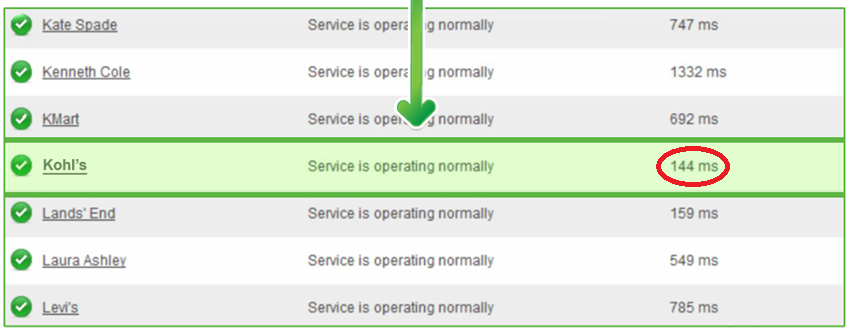

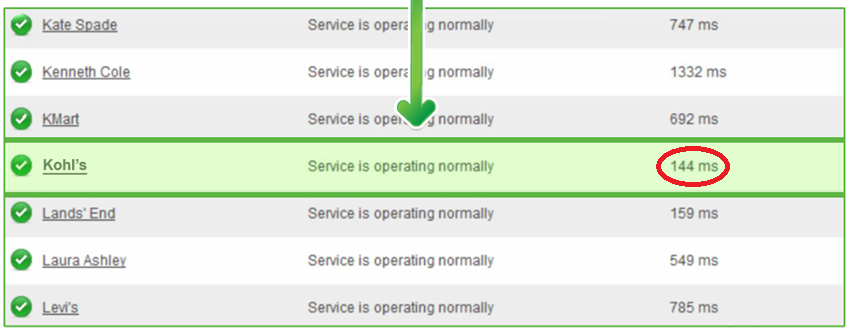

Come to JUG on Saturday the 31st, and I will tell you about XAP in a far more colorful and lively way and demonstrate it in action.

Black Friday turned out to be truly black for Kohl's, an American online store. On the day the Christmas sales kicked off, all of its servers went belly up. The usual 20% of annual revenue earned on that single day shrank to a pitiful trifle, all because the system simply could not carry such a load.

The traditional Tomcat + WebLogic + DB architecture failed completely! System administrators ran around the floors in vain, lead programmers fussed in panic, and the architects tore out what was left of their hair... The bottleneck was too narrow for all the potential customers to squeeze through and not elastic enough to widen in a short time. The bottle shattered to hell, and the wounds left by its shards bled for a long time...

The problems of three-tier architecture

- Son, if it works, don't touch it!

- Dad, it doesn't work, it's SLOW!!!

You all know the good old (though not always so good) three-tier architecture.

three-tier architecture

The first layer holds the data, mostly stored in a relational database; for large volumes, the choice will most likely fall on Oracle. Here the data is saved, updated, retrieved, and passed on to the next logical layer. The second layer is home to the business logic: all sorts of EJB, Spring, and Hibernate. Where will all this code live? In an application server, of course, and there are plenty of options: JBoss, WebLogic, WebSphere. Next comes the third layer, the web tier. Here you cannot do without Tomcat instances, and possibly even a load balancer.

What did I forget? Oh yes, messaging: it provides reliable asynchronous communication between application components. Event-driven architecture is our everything! And, of course, clusters in every layer for reliability.

Analyzing this solution, we can easily spot several obvious problems.

Management difficulties

Each tier has its own clustering model, and managing such a system requires knowledge of and experience with all of them. This entails:

- High cost: companies have to buy separate licenses for every component and hire experts to install and maintain each tier. In addition, clustering some components is not always simple and is often full of unforeseen difficulties, even for the most experienced specialists.

- Difficult monitoring: tracking and monitoring so many components in a real production system again requires extra resources. In most cases, you have to buy additional software for this.

- Difficult troubleshooting: when the system fails, it is hard to determine what happened and at which tier.

- Difficult implementation: integrating and configuring the modules can be another source of costs. Getting all the modules to “communicate” with one another correctly usually takes time and extra resources.

Binding to static resources

The system is bound to static resources such as hard drives and server names. This makes it hard to deploy such applications in the cloud, which is highly dynamic by nature.

Performance

Almost every request passes through all three tiers of the system and may involve many network hops between and within tiers, which hurts the average response time. In addition, sooner or later the data has to be flushed to disk. Network and disk I/O significantly limit scalability and, again, slow the system down.
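To make the cost of hops concrete, here is a minimal latency-budget sketch. The tier names and millisecond figures are hypothetical placeholders, not measurements from Kohl's or any real system. The point is only that every hop and every disk flush adds another term to the response time of a single request.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Hop:
    name: str
    network_ms: float  # network round trip for this hop (hypothetical)
    work_ms: float     # processing time on the receiving side (hypothetical)


def response_time(hops: list[Hop], disk_flush_ms: float = 0.0) -> float:
    """Total latency of one request: every hop it makes plus an optional disk flush."""
    return sum(h.network_ms + h.work_ms for h in hops) + disk_flush_ms


# Illustrative numbers only: browser -> web tier -> app server -> database.
three_tier = [
    Hop("client -> web", network_ms=1.0, work_ms=0.5),
    Hop("web -> app", network_ms=0.5, work_ms=2.0),
    Hop("app -> db", network_ms=0.5, work_ms=3.0),
]
print(response_time(three_tier, disk_flush_ms=5.0))  # 12.5
```

Each additional tier, or each extra round trip inside a tier, adds one more entry to the list. This is why the article counts hops, and not just raw server speed, as a performance cost.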

As a result, a three-tier architecture cannot be scaled predictably. A growing load requires more resources for data processing, but simply throwing hardware at the problem will not work. Moreover, adding resources to one of the tiers (for example, database servers) often not only fails to help but, on the contrary, increases latency and lowers the throughput of the system as a whole because of the synchronization overhead between cluster nodes.
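The article does not quantify this effect. One common model for it, swapped in here as an illustration rather than taken from the source, is Neil Gunther's Universal Scalability Law. In that model, throughput on N nodes is X(N) = λN / (1 + σ(N-1) + κN(N-1)). The σ term models contention, and the κ term models the pairwise synchronization (coherency) cost between nodes. Once κ > 0, throughput peaks and then falls as more nodes are added. The parameter values below are hypothetical.

```python
import math


def usl_throughput(n: int, lam: float, sigma: float, kappa: float) -> float:
    """Universal Scalability Law: throughput of a cluster of n nodes.

    lam   -- throughput of a single node
    sigma -- contention: fraction of work that is serialized
    kappa -- coherency: cost of keeping every pair of nodes in sync
    """
    return lam * n / (1 + sigma * (n - 1) + kappa * n * (n - 1))


def peak_nodes(sigma: float, kappa: float) -> float:
    """Node count at which USL throughput peaks (only defined for kappa > 0)."""
    return math.sqrt((1 - sigma) / kappa)


# Hypothetical parameters for a database tier with noticeable sync overhead.
LAM, SIGMA, KAPPA = 1000.0, 0.05, 0.001
print(round(peak_nodes(SIGMA, KAPPA), 1))  # 30.8
for n in (1, 10, 30, 60):
    print(n, round(usl_throughput(n, LAM, SIGMA, KAPPA)))
```

With these made-up parameters, a 60-node cluster delivers less throughput than a 30-node one. This is the "more hardware, slower system" effect described above.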

Why caches and data grids do not solve the problem

To address latency and scalability, an in-memory data grid is usually placed in front of the relational database. This is undoubtedly a step in the right direction: it partially offloads the system, although it is mainly suited to caching. Note that most data grids can only retrieve data by a unique ID. Although this approach works in some cases, it is far from ideal, for the following reasons:

- It adds yet another tier that needs its own licenses. Like all the others, the new tier has to be integrated, configured, monitored, and troubleshot when problems arise. This increases the overall complexity of managing the architecture and the cost of installing, supporting, and maintaining it.

- As mentioned above, such solutions help read-heavy systems but are useless for write-heavy ones. Moreover, once a cache is present, there is the extra cost of keeping the cache and the database in sync.
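Here is a minimal sketch of this read/write asymmetry. It assumes a simple write-through cache, keyed only by ID, sitting in front of a database stand-in. Both classes are hypothetical and only count how often the database is touched. A read-heavy load mostly stays in the cache. Every write, however, still reaches the database and additionally has to update the cache.

```python
class Database:
    """Stand-in for the relational database that counts every access."""

    def __init__(self) -> None:
        self.rows: dict = {}
        self.reads = 0
        self.writes = 0

    def get(self, key):
        self.reads += 1
        return self.rows.get(key)

    def put(self, key, value) -> None:
        self.writes += 1
        self.rows[key] = value


class WriteThroughCache:
    """Cache in front of the database; lookups work only by unique key."""

    def __init__(self, db: Database) -> None:
        self.db = db
        self.entries: dict = {}

    def get(self, key):
        if key not in self.entries:               # miss: fall through to the database
            self.entries[key] = self.db.get(key)  # (a missing row is cached as None)
        return self.entries[key]

    def put(self, key, value) -> None:
        self.db.put(key, value)    # the write always reaches the database...
        self.entries[key] = value  # ...and the cache must be updated as well


db = Database()
cache = WriteThroughCache(db)
db.rows["sku-42"] = 7          # a row that already exists

for _ in range(1000):          # read-heavy load
    cache.get("sku-42")
print(db.reads)                # 1: only the first read reached the database

for i in range(1000):          # write-heavy load
    cache.put("sku-42", i)
print(db.writes)               # 1000: the cache saved nothing here
```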

Real world example

Consider the real multi-tier architecture of the Kohl's online store.

It is immediately apparent that any part of this system can become the bottleneck. Clearly, adding resources anywhere other than the narrowest point does nothing to remove the slowdown.
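The bottleneck argument reduces to taking a minimum. Every request has to pass through every tier, so the slowest tier caps the whole system. Here is a minimal sketch with hypothetical per-tier capacities in requests per second. These are not Kohl's actual figures.

```python
from __future__ import annotations


def system_throughput(capacities: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck tier and the throughput it allows for the whole chain."""
    bottleneck = min(capacities, key=capacities.__getitem__)
    return bottleneck, capacities[bottleneck]


tiers = {"apache": 5000, "weblogic": 3000, "oracle": 1200}  # hypothetical req/s
print(system_throughput(tiers))  # ('oracle', 1200)

# Doubling the application servers changes nothing: Oracle is still the limit.
print(system_throughput({**tiers, "weblogic": 6000}))  # ('oracle', 1200)

# Widening the narrowest tier raises the ceiling until another tier becomes the limit.
print(system_throughput({**tiers, "oracle": 4000}))  # ('weblogic', 3000)
```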

In Kohl's case, WebLogic, Apache, and the Oracle database handled their job perfectly well on 50 physical servers: 30,000 concurrent users consistently got responses to all their requests. The setup would have kept working if, say, the company only ever had to serve a fixed number of transactions per second and its system requirements never changed abruptly.

However, that very Black Friday of 2009 (the day when millions of Americans rush to the stores and retailers make 20, and sometimes 30, percent of their annual revenue) required the system to handle 500,000 concurrent users. The three-tier architecture was not ready for such a blow...
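Here is a back-of-the-envelope check of these numbers. It rests on a deliberately optimistic assumption: that the 50 servers form one pool whose capacity grows perfectly linearly, which, as the previous sections argue, a three-tier stack does not do. The baseline is 30,000 / 50 = 600 users per server. At that rate, 500,000 users would need about 834 servers to handle roughly 16.7 times the load.

```python
import math


def servers_needed(users: int, baseline_users: int, baseline_servers: int) -> int:
    """Servers required if capacity scaled perfectly linearly (an optimistic assumption)."""
    users_per_server = baseline_users / baseline_servers
    return math.ceil(users / users_per_server)


print(round(500_000 / 30_000, 1))           # 16.7: growth in concurrent users
print(servers_needed(500_000, 30_000, 50))  # 834
```

Even this figure is a lower bound, because it treats every extra server as adding the same capacity. With synchronization overhead between nodes, the real number would be higher, if it could be reached at all.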

The result: a loss of tens of millions of dollars. Hence the question:

Isn't it time to retire the old heroes?

So, let's formulate the key requirements for a modern platform:

- Data operations must be fast.

- The separation into abstraction layers must not hurt performance.

- Adding new resources should increase throughput linearly (or almost linearly) without hitting any hard limits.

- Deployment must be both flexible and simple.

- The system must be "unkillable" and warn of potential problems in advance.

Roughly these requirements guided the Gigaspaces engineers who, 10 years ago, began developing a new platform, XAP (eXtreme Application Platform).

Here is what they came up with:

- All data is stored in RAM, not on disk. By the way, RAM is very cheap today, unlike Oracle Database, even with the most basic support.

- The application logic lives in the same container as the data. University teachers and other fans of classical architecture may not like this, of course. Some Habr user might even decide that it encourages an unhealthy mixing of data and logic. However, experience shows that no layers will stop true craftsmen: with the right skills, you can make a hodgepodge of any kind of “architecture”!

- All data is split into an unlimited number of partitions, each of which can live on a separate machine.

- Deployment is described declaratively! “Split all the data into 100,500 pieces, give each piece 2 backups, never keep two copies in the same data center, and do not deploy to machines loaded above 146 percent.”

- Special dispatchers write data to several places at once and, in case of a failure, automatically redirect requests to other machines. On top of that, there is a convenient monitoring system that warns about all potential problems, such as too few nodes in the cluster, system failures, or overloaded machines.
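To make the ideas in this list more tangible, here is a toy, single-process model of a partitioned in-memory store. It is not the XAP API, and every class and method name in it is hypothetical. The model works as follows:

- Keys are routed to partitions by a stable hash.
- Each partition has a primary and a backup placed in different data centers (the only piece of the declarative rule above that is modeled).
- Writes go to both copies.
- Logic can run next to the data.
- A failed primary is replaced by its backup.

```python
from __future__ import annotations

import zlib
from dataclasses import dataclass, field


@dataclass
class Replica:
    machine: str
    data_center: str
    data: dict = field(default_factory=dict)
    alive: bool = True


@dataclass
class Partition:
    primary: Replica
    backups: list[Replica]

    def write(self, key: str, value) -> None:
        # Synchronous replication: every live copy receives the write.
        for replica in [self.primary, *self.backups]:
            if replica.alive:
                replica.data[key] = value

    def fail_over(self) -> None:
        # If the primary is dead, promote the first live backup.
        if self.primary.alive:
            return
        live = [b for b in self.backups if b.alive]
        if not live:
            raise RuntimeError("all copies of this partition are lost")
        self.primary = live[0]
        self.backups = live[1:]


def place(partitions: int, copies: int, machines: list[tuple[str, str]]) -> list[Partition]:
    """Deploy the copies of each partition to distinct data centers (hypothetical rule)."""
    by_dc: dict[str, list[str]] = {}
    for name, dc in machines:
        by_dc.setdefault(dc, []).append(name)
    dcs = sorted(by_dc)
    if copies > len(dcs):
        raise ValueError("need at least as many data centers as copies per partition")
    plan = []
    for p in range(partitions):
        copies_of_p = []
        for c in range(copies):
            dc = dcs[(p + c) % len(dcs)]  # rotate so copies never share a data center
            hosts = by_dc[dc]
            copies_of_p.append(Replica(hosts[p % len(hosts)], dc))
        plan.append(Partition(copies_of_p[0], copies_of_p[1:]))
    return plan


class PartitionedSpace:
    def __init__(self, partitions: int, backups: int, machines: list[tuple[str, str]]):
        self.partitions = place(partitions, backups + 1, machines)

    def owner(self, key: str) -> Partition:
        # crc32 gives the same routing in every process (built-in hash() is salted).
        part = self.partitions[zlib.crc32(key.encode()) % len(self.partitions)]
        part.fail_over()
        return part

    def write(self, key: str, value) -> None:
        self.owner(key).write(key, value)

    def read(self, key: str):
        return self.owner(key).primary.data.get(key)

    def execute(self, key: str, task):
        # Co-located logic: ship the function to the data, not the data to the function.
        return task(self.owner(key).primary.data)

    def kill(self, machine: str) -> None:
        # Simulate a machine crash: every copy hosted on it becomes unavailable.
        for part in self.partitions:
            for replica in [part.primary, *part.backups]:
                if replica.machine == machine:
                    replica.alive = False


machines = [("m1", "dc-east"), ("m2", "dc-east"), ("m3", "dc-west"), ("m4", "dc-west")]
space = PartitionedSpace(partitions=4, backups=1, machines=machines)
space.write("order-1", {"total": 99})
failed = space.owner("order-1").primary.machine
space.kill(failed)
print(space.read("order-1"))  # {'total': 99}, now served by the promoted backup
print(space.execute("order-1", lambda data: data["order-1"]["total"] * 2))  # 198
```

A real platform would also re-create the lost backup, rebalance partitions, and take machine load into account when placing them. None of that is modeled here; the sketch only shows why routing, replication, and co-location fit together.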

Here is a video:

Kohl's has risen from the ashes

After the 2009 crisis, Kohl's replaced its old architecture with XAP. As a result, according to Google, their site is now one of the fastest in the world at serving pages.

Today, Kohl's is not the only company using XAP. Its customers include leading Swiss banks (for example, UBS), the New York Stock Exchange, Avaya, and hundreds of other companies.

Epilogue

Someone will say: "I use a multi-tier architecture and I'm very happy with it!" Good for you. But in a world where data volumes grow exponentially, even if we do not need an in-memory computing platform today, we should at least know that such platforms exist and what benefits they bring. The amount of data your application processes may grow in the near future, and then in-memory computing platforms can undoubtedly help. That is not even counting real-time applications that work with huge volumes of data, for which such platforms are indispensable.

P.S.

And here is the video:

Source: https://habr.com/ru/post/191946/
