Reed-Solomon codes. Simple example

Thanks to the Reed-Solomon codes, you can read a CD with many scratches, or transmit information in connection with a lot of interference. On average, for a compact disc, code redundancy (i.e., the number of additional characters that can be used to recover information) is approximately 25%. At the same time, you can restore the amount of data equal to half of the excess. If the disk capacity is 700 MB, then, it turns out, it is theoretically possible to restore up to 87.5 MB out of 700. At the same time, we don’t have to know exactly which character was transmitted with an error. It is also worth noting that interleaving is used with coding, when the bytes of different blocks are mixed in a certain order, which as a result allows reading discs with extensive damage localized close to each other (for example, deep scratches), because after the reverse operation of interleaving, extensive damage results in single errors in a variety of code blocks that can be recovered.

Thanks to the Reed-Solomon codes, you can read a CD with many scratches, or transmit information in connection with a lot of interference. On average, for a compact disc, code redundancy (i.e., the number of additional characters that can be used to recover information) is approximately 25%. At the same time, you can restore the amount of data equal to half of the excess. If the disk capacity is 700 MB, then, it turns out, it is theoretically possible to restore up to 87.5 MB out of 700. At the same time, we don’t have to know exactly which character was transmitted with an error. It is also worth noting that interleaving is used with coding, when the bytes of different blocks are mixed in a certain order, which as a result allows reading discs with extensive damage localized close to each other (for example, deep scratches), because after the reverse operation of interleaving, extensive damage results in single errors in a variety of code blocks that can be recovered.Let's take a simple example and try to go all the way - from coding to getting the source data on the receiver. Suppose we need to transmit the code word C, consisting of two numbers - 3 and 1 in exactly this sequence, i.e. we need to pass the vector C = (3,1). Suppose we want to correct a maximum of two errors, not knowing exactly where they may appear. For this you need to take 2 * 2 = 4 redundant characters. We write them with zeros in our word, i.e. C is now equal to (3,1,0,0,0,0). Next, you need a little bit to deal with mathematical features.

Paul Galois

Many people know the romantic story of a young man who lived only 20 years old and wrote his mathematical theory one night, and was killed in a duel in the morning. This is Evariste Galois . He also tried several times to go to university, but the examiners did not understand his decisions, and he failed the exams. He had to learn on his own. Neither Gauss nor Poisson, to whom he sent his works, also did not understand them, but his theory was very useful in the 60s of the 20th century, and is actively used in our time both for theoretical calculations in new branches of mathematics and in practice.

We will use fairly simple conclusions that follow from his group theory. The basic idea is a finite (and not infinite) number of numbers, called a field, with which you can perform all known mathematical operations. The number of numbers in a field must be a prime number in any natural degree, however, in the case of simple Reed-Solomon codes considered here, the field dimension is a prime number in degree 1. In the extended Reed-Solomon codes, the degree is more than 1.

For example, for a Galois field of dimension 7, i.e. GF (7), all mathematical operations will occur with numbers 0,1,2,3,4,5,6.

Example of addition: 1 + 2 = 3; 4 + 5 = 9 mod 7 = 2. Addition in the Galois fields is addition modulo. Subtraction and multiplication is also done modulo.

Example of division: 5/6 = 30/36 = 30 / (36 mod 7) = 30/1 = 30 = 30 mod 7 = 2.

The erection degree is analogous to multiplication.

A useful property is found in the Galois fields during exponentiation. As you can see, if you raise to the power of the number 3 or 5 in the selected Galois field GF (7), the line contains all the elements of the current Galois field except 0. Such numbers are called primitive elements. Reed-Solomon codes typically use the largest primitive element of a selected Galois field. For GF (7) it is equal to 5.

It can be noted that the numbers in the Galois fields are at the same time abstractions, which are more closely related to each other than the numbers we are used to.

')

Interpolation

As many know from the school course, interpolation is the finding of a minimal degree polynomial in a given set of points. For example, there are three points and three values of some function at these points. You can find a function that satisfies this input. For example, it is very easy to do using the Lagrange transform. Once the function is found, you can build a few more points, and these points will be associated with the three original points. The formation of redundant characters during encoding is an operation similar to interpolation.

Inverse Discrete Fourier Transform (IDFT)

The Fourier transform , along with the theory of Galois groups, is another vertex of mathematical thought, which in today's time is used in many fields. Some even believe that the Fourier transform describes one of the fundamental laws of the universe. The main point: any non-periodic signal of finite length can be represented as a sum of sinusoids of different frequencies and phases, then the original signal can be re-constructed from them. However, this is not the only description. The numbers in the Galois fields resemble the different frequencies of the sinusoids mentioned, so the Fourier transform can be used for them.

The discrete Fourier transform is a Fourier transform formula for discrete values. The transformation has two directions - direct and reverse. The inverse transformation is easier mathematically, so let's encode the considered word C = (3,1,0,0,0,0) with it. The first two characters are information, the last four characters are redundant and are always 0.

We write the code word C in the form of a polynomial: C = 3 * x 0 + 1 * x 1 + 0 * x 2 + ... = 3 + x. As a Galois field, we take the aforementioned GF (7), where the primitive element is = 5. Making the IDFT, the values of the polynomial C are found for the primitive element z of different degrees. IDFT formula: c j = C (z j ). That is, we find the values of the function C (z j ), where j are elements of the Galois field GF (7). We count to j = N-2 = 7-2 = 5 degrees. Looking at the table of degrees, you can guess why: in the sixth degree, the value is again 1, i.e. repeats, as a result of which it would then be impossible to determine to what extent they erected - at the 6th or 0th.

0 = (z 0 ) = 3 + 1 * z 0 = 3 + 1 * 5 0 = 4

with 1 = C (z 1 ) = 3 + 1 * z 1 = 8 = 1

2 = (z 2 ) = 3 + 1 * z 2 = 0

...

Thus C (3,1,0,0,0,0) => s (4,1,0,2,5,6).

We pass the word with (4,1,0,2,5,6).

Mistake

Error is another word that is added to the transmitted one. For example, the error f = (0,0,0,2,0,6). If done with + f, we get with f (4,1,0,4,5,5).

Direct Fourier Transform (DFT). Decoding. Syndrome

At the receiver, we received the word c + f = c f (4,1,0,4,5,5). How to check if there were any transmission errors? It is known that we encoded information using IDFT in GF (7). DFT (Discrete Fourier Transform) is the inverse of the IDFT. Having done it, you can get the initial information and four zeros (i.e. C (3,1,0,0,0,0)), in case there were no errors. If there were errors, then instead of these four zeros there will be other numbers. Do the DFT for with f and check if there are any errors. DFT formula: k = N -1 * c (z -kj ). The primitive element z = 5 of the field GF (7) is still used.

C 0 = c ( 5-0 * j ) / 6 = (4 * 5-0 * 0 + 1 * 5 -1 * 0 + 0 * 5 -2 * 0 + 4 * 5 -3 * 0 + 5 * 5 -4 * 0 + 5 * 5 -5 * 0 ) / 6 = (4 + 1 + 4 + 0 + 5 + 5) / 6 = 19/6 = 5/6 = 30/36 = 30 = 2;

C 1 = c (5 -1 * j ) / 6 = (4 * 5 -0 * 1 + 1 * 5 -1 * 1 + 0 * 5 -2 * 1 + 4 * 5 -3 * 1 + 5 * 5 -4 * 1 + 5 * 5 -5 * 1 ) / 6 = (4 + 3/15 + 24/750 + 20/2500 + 25/15625) / 6 = (4 + 3 + 24 + 20 + 25) / 6 = 76/6 = 456/36 = 456 = 1;

C 2 = c (5 -2 * j ) / 6 = (4 * 5 -0 * 2 + 1 * 5 -1 * 2 + 0 * 5 -2 * 2 + 4 * 5 -3 * 2 + 5 * 5 -4 * 2 + 5 * 5 -5 * 2 ) / 6 = (4 + 2 + 4 + 10 + 20) / 6 = 40/6 = 240/36 = 240 = 2;

...

with f (4,1,0,4,5,5) => C f (2,1, 2,1,0,5 ). The highlighted characters would be zeros if there was no error. Now it is clear that the error was. In this case, the symbols 2,1,0,5 are called error syndrome.

Berlekamp-Messi algorithm for calculating the error position

To correct the error, you need to know exactly which characters were transmitted with an error. At this stage, it is calculated where the error symbols are located, how many errors there were, and whether it is possible to correct such a number of errors.

The Berlekampa-Messi algorithm is engaged in the search for a polynomial, which, when multiplied by a special matrix prepared from the syndrome numbers (example below), will return the zero vector. The proof of the algorithm shows that the roots of this polynomial contain information about the position of characters with errors in the resulting codeword.

Since the maximum number of errors for the considered case can be 2, we write the formula of the desired polynomial in the matrix form for two errors (a polynomial of degree 2): T = [1 T1 T2].

Now we will write down the syndrome (2,1,0,5) in the Toeplitz matrix format. If you look at the syndrome and the resulting matrix, you will immediately notice the principle of creating such a matrix. The dimension of the matrix is due to the polynomial G, indicated above.

The equation to be solved is:

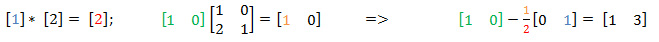

Items under question marks do not affect the result. It is necessary to find G1 and G2, they are responsible for the position of errors. We will gradually increase the dimension with which we work. We start at 1 and at the lower left edge of the matrix (marked in green) with a minimum dimension of 1x1.

We increase the dimension. Suppose that we do not have an error in position G1. Then r1 = 0.

We should get [0 0] on the right, or at least one 0 in the first place to increase the dimension of calculations, but in the first place it is 1. We can choose a value for G1 (which is now 0) in order to get the required 0 on the right The process of selecting the value of G1 in the algorithm is optimized. We will write down two equations considered by yours, once again, and also we will deduce the third equation, which calculates 1. The colors are shown to go where.

Those. G1 = 3. I remind you that the calculation goes to GF (7). It is also worth noting that the value of G1 is temporary. During the calculations of G2 it may change. Consider again the right side:

Received 0 on the right, now we increase the dimension. We assume by analogy that G2 = 0. We use the found G1 = 3.

First of all, instead of the three right you need to get 0. Actions correspond to the previous ones. Further selection of the value of T2.

We write the new value G2 = 2 into the basic equation and again try to find the values on the right:

The task at this step was to get only the first zero, but by chance the second element of the matrix also turned out to be zero, i.e. problem solved. If it were not zero, we would once again make a selection of values (this time for G1 and G2), increasing the dimension of the selection. If you are interested in this algorithm, here are two more examples.

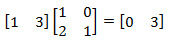

So, G1 = 3, G2 = 2. Non-zero values for G1 and G2 show that there were 2 errors. We write the matrix Γ in the form of a polynomial: Γ (z) = 1 + 3x + 2x 2 . Roots taking into account the degrees of the primitive element, in which the result is 0:

G (5 3 ) = 1 + 3 * 6 + 2 * 6 2 = 91 = 13 * 7 = 0.

G (5 5 ) = 1 + 3 * 3 + 2 * 3 2 = 28 = 0.

This means that errors in positions 3 and 5.

Similarly, you can find the remaining values to make sure that they do not give zeros:

=> (z j ) = (3,5,5,0,4,0). As you can see, IDFT is again used in GF (7).

Forni error correction

At the previous stage, the error positions were calculated, it now remains to find the correct values. A polynomial describing the positions of errors: (z) = 1 + 3x + 2x 2 . We write it in normalized form, multiplying by 4 and using the properties of GF (7): G (z) = x 2 + 5x + 4.

The Forney method is based on the Lagrange interpolation and uses the polynomials we used in the Berlekamp-Messi algorithm.

The method additionally calculates those characters that stand on the ground, not related to the syndrome. These are the positions that correspond to real values, however, other values are calculated for them, which are obtained from the convolution of the error syndrome and the polynomial G. These calculated new values together with the syndrome form the error mask. Next, the IDFT is executed and the result is directly an error, which was previously summed up with the transmitted codeword. It is subtracted from the received word and we get the original transmitted word. Then we execute DFT for the transmitted codeword and finally we get the information. Further, as it happens in the context of the considered example.

We write the error vector F (the last 4 characters are the syndrome that we constantly use, two question marks - in the field of information symbols, this is the error mask) and we denote each symbol with a letter:

The symbols of the error locator polynomial (z) = x2 + 5x + 4 are denoted as:

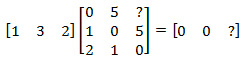

The multiplication of the polynomial Γ on the Toeplitz matrix in the previous section was, in fact, a cyclic convolution operation: if we write linear equations that are derived from the matrix, we can see that the values that are taken from the syndrome (the values of the Toeplitz matrix) simply change from equation to equation in some places, moving consistently in a certain direction, and this is called convolution. I specifically placed the polynomials F and G on top of each other at the beginning of this paragraph so that you can do convolutions (multiply element by element in a certain order), moving the polynomials visually. Expanding the matrix equation from the previous section and using the notation for the polynomials F and G, just introduced:

0 * F4 + 1 * F3 + 2 * F2 = 0

0 * F5 + 1 * F4 + 2 * F3 = 0

Previously, the convolution was performed only for the syndrome; in the Forni method, it is necessary to do convolutions for F0 and F1, and then find their values:

0 * F3 + 1 * F2 + 2 * F1 = 0

0 * F2 + 1 * F1 + 2 * F0 = 0

F0 = -G0 * F3 - G1 * F2 = 0

F1 = -G0 * F2 - G1 * F1 = 6

That is, F = (6,0,2,1,0,5). We perform IDFT, since the error was summed up with the word that was encoded in IDFT: f = (0,0,0,2,0,6).

Subtract error f from the received code word cf: (4,1,0,4,5,5) - (0,0,0,2,0,6) = s (4,1,0,2,5,6 )

Let's make DFT for this word: c (4,1,0,2,5,6) => C = (3,1,0,0,0,0). And here are our symbols 3 and 1, which had to be transmitted.

Conclusion

Usually, extended Reed-Solomon codes are used, that is, the Galois field is a power of two (GF (2 m )), for example, encoding information bytes. The work algorithms are similar to the ones that are discussed in this article.

There are many variations of the algorithm, depending on the application, as well as depending on the age of each particular variety and on the company-developer. The younger the algorithm, the more difficult it is.

Also, many devices use predefined error tables. Under the conditions of using Galois arithmetic, a finite number of possible errors is obtained. This property is used to reduce the number of calculations. Here, if the syndrome is non-zero, it is simply compared with a table of possible erroneous syndromes.

A coding theory course for many is often one of the most difficult. I would be glad if this article will help someone to understand this topic faster.

Source: https://habr.com/ru/post/191418/

All Articles