Inside Python. Process structures

1. Introduction
2. Objects. Head
3. Objects. Tail
4. Process structures

We continue translating a series of articles on Python internals. If you have ever wondered how things work under the hood, be sure to read it. The author sheds light on many interesting and important aspects of how the language is built.

In previous installments, we talked about the Python object system. The topic is not exhausted yet, but let's go further.


When I think about the implementation of Python, I imagine a huge conveyor belt carrying opcodes into a giant factory bristling with cooling towers and tower cranes, and I am overwhelmed by the desire to take a closer look. In this part we will talk about the interpreter state and thread state structures (./Python/pystate.c). For now we need to lay the groundwork that will make it easier to understand how bytecode is executed. Very soon we will find out how frames, namespaces and code objects are organized. But first, let's talk about the data structures that tie everything together.

Note that I assume at least a superficial understanding of how operating systems are structured, and at least familiarity with terms such as kernel, process and thread. In many operating systems, user code runs in threads that live inside processes (this is true of most *nix systems and of "modern" versions of Windows). The kernel is responsible for creating and destroying processes and threads. It also decides which thread runs on which logical CPU.

When a process calls the Py_Initialize function, another abstraction, the interpreter, enters the scene. Any Python code running in the process is bound to an interpreter. The interpreter can be thought of as the foundation for all the other concepts we will discuss. Python supports initializing two (or more) interpreters in one process. Although this capability is rarely used in practice, I will take it into account.

As mentioned, code runs in a thread (or threads), and the Python virtual machine (VM) is no exception. At the same time, the VM itself supports threads, i.e. Python has its own abstraction for representing them. The implementation of this abstraction relies entirely on kernel mechanisms. Thus both the kernel and Python know about each Python thread. These threads are managed by the kernel and run as separate threads, in parallel with all other threads in the system. Well... almost in parallel.

Until now we have ignored the elephant in our china shop. The elephant's name is the GIL (Global Interpreter Lock). For various reasons, many parts of CPython are not thread-safe. This has advantages, such as a simpler implementation and guaranteed atomicity of many Python operations. It also has disadvantages, the main one being the need for a mechanism that prevents Python threads from executing in parallel, since without it data could be corrupted.

The GIL is a process-wide lock that a thread must acquire before it can execute Python code. This limits the number of threads executing Python code at the same time to one per process, regardless of how many logical CPUs are available. Python threads multitask cooperatively: they voluntarily release the GIL so that other threads can run. This is built into the execution loop, so when writing ordinary scripts and some extensions you do not need to think about it (from their point of view, they run continuously).
Note that as long as a thread is not using the Python API (which is often the case), it can run in parallel with other Python threads. We will come back to the GIL later; those who cannot wait can read David Beazley's presentation.
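Here is a small experiment illustrating the point above. It is a sketch that is not part of the original article, and the helper names are hypothetical. A blocking call such as `time.sleep()` releases the GIL while it waits, so several threads can wait at the same time. `sys.getswitchinterval()` exists in Python 3.2 and later, where the GIL was reworked. It reports the interval after which a thread running Python code is asked to give up the GIL.

```python
import sys
import threading
import time


def blocking_call(seconds=0.2):
    # time.sleep() releases the GIL while it waits, so other threads can run meanwhile
    time.sleep(seconds)


def run_in_threads(n_threads, target):
    """Start n_threads threads running target; return wall-clock seconds until all finish."""
    threads = [threading.Thread(target=target) for _ in range(n_threads)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    # How often (in seconds) a thread executing bytecode is asked to release the GIL
    print("switch interval:", sys.getswitchinterval())
    print(f"4 sleeping threads: {run_in_threads(4, blocking_call):.2f}s")
```

On a typical machine the four sleeping threads should finish in roughly 0.2 s rather than 0.8 s. On a standard (GIL) build, replacing the sleep with a pure-Python CPU-bound loop would show no such speed-up, because only the thread holding the GIL executes bytecode.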

So we now have the concepts of process (an OS abstraction), interpreter (a Python abstraction) and thread (both an OS and a Python abstraction). Now we will work from the bottom up: we will start with a single operation and end with the whole process.

Let's take another look at the bytecode generated by the expression

spam = eggs - 1 ( what is diss ): >>> diss("spam = eggs - 1") 1 0 LOAD_NAME 0 (eggs) 3 LOAD_CONST 0 (1) 6 BINARY_SUBTRACT 7 STORE_NAME 1 (spam) 10 LOAD_CONST 1 (None) 13 RETURN_VALUE >>> In addition to the

BINARY_SUBTRACT operation, which performs all the work, we see the LOAD_NAME (eggs) and STORE_NAME (spam) operations. Obviously, to perform these operations, you need a place: you need to pull eggs from somewhere, and you need to remove the spam somewhere. This place is referred to by the internal data structures in which the code is executed — frame objects and code objects . When you run the Python code, frames are actually executed (remember ceval.c : PyEval_EvalFrameEx ). Now we are confusing the concepts of frame objects and code objects, so far easier. The difference between these structures will understand later. Now we are most interested in the f_back field of the frame object. In frame n this field points to frame n-1 , i.e. the frame that caused the current frame (the first frame in the stream indicates NULL ).The frame stack is unique for each thread and is associated with a thread-specific structure

./Include.h/pystate.h : PyThreadState , which contains a pointer to the current frame being executed (the most recently called frame, the top of the stack). The PyThreadState structure PyThreadState allocated and initialized for each Python stream in the process by the _PyThreadState_Prealloc function right before the created thread is requested from the OS ( ./Modules/_threadmodule.c : thread_PyThread_start_new_thread and >>> from _thread import start_new_thread ). In the process, such threads can be created that are not controlled by the interpreter; they do not have a PyThreadState structure, and they should not access the Python API. This happens mostly in embedded applications. But such threads can be “pythonized” in order to be able to execute Python code in them, while creating a new PyThreadState structure. In case one interpreter is running, you can use the API for such a stream migration. If there are several interpreters, you will have to do it manually. Finally, approximately in the same way as each frame is connected via a pointer to the previous one, the states of the threads are combined by a linked list of PyThreadState *next pointers.The list of thread structures is associated with the interpreter structure in which the threads are located. The interpreter structure is defined in

./Include.h/pystate.h : PyInterpreterState . It is created by calling the Py_Initialize function, which initializes the Python virtual machine in the process, or by calling the Py_NewInterpreter function, in which a new interpreter structure is created (if there is more than one interpreter in the process). For better understanding, Py_NewInterpreter remind you that Py_NewInterpreter does not return the interpreter structure, but the PyThreadState structure of the newly created thread for the new interpreter. Creating a new interpreter without a single thread in it does not make much sense, just as there is no point in processes without threads in them. The interpreter structures in the process are related to each other in the same way as the thread structures in the interpreter.In general, our journey from a single operation to a whole process is completed: operations are in executing code objects (while “non-executing” objects lie somewhere nearby, like normal data), code objects are in executing frames that are in Python -flows, and flows in turn belong to the interpreter. The static variable

./Python/pystate.c : interp_head refers to the root of this whole structure. It indicates the structure of the first interpreter (through it all other interpreters, streams, etc. are available). The head_mutex mutex protects against the damage of these structures by competing changes from different streams (I clarify that this is not a GIL, but an ordinary mutex for interpreter structures and streams). This lock is controlled by the HEAD_LOCK and HEAD_UNLOCK . As a rule, the interp_head variable interp_head accessed in case you need to add a new or delete an existing interpreter or stream. If there is not one interpreter in the process, then by this variable the structure is not necessarily the interpreter in which the stream is currently executing.It is safer to use the variable

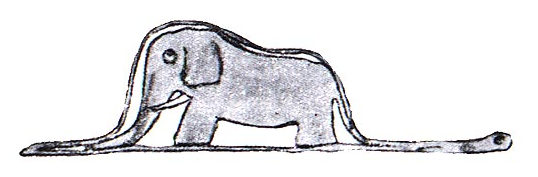

./Python/pystate.c : _PyThreadState_Current , which indicates the structure of the executable stream (with some conditions taken into account). That is, to get to its interpreter, the code needs the structure of its stream, from which it is already possible to pull the interpreter. To access this variable (take the current value or change it, keeping the old one) there are functions that require GIL to work. This is important, and this is one of the problems that arise from the lack of thread safety in CPython. The value of the _PyThreadState_Current variable _PyThreadState_Current set in the structure of a new thread during Python initialization or during the creation of a new thread. When the Python thread first starts after the initial load, it relies on the fact that: a) it holds the GIL and b) the value of the _PyThreadState_Current variable _PyThreadState_Current correct. At this point, the stream should not give up GIL. First, it must save _PyThreadState_Current , so that the next time you capture the GIL, you can restore the desired value of the variable and continue working. Due to this behavior, _PyThreadState_Current always points to the currently executing thread. To implement this behavior, there are macros Py_BEGIN_ALLOW_THREADS and Py_END_ALLOW_THREADS . You can talk for hours about GIL and the API for working with it , and it would be interesting to compare CPython with other implementations (for example, Jython or IronPython, in which threads run simultaneously). But let's postpone this topic for now.In the diagram, I have indicated the connections between the structures of one process, in which two interpreters are running with two threads each, which refer to their frame stacks.
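Part of this structure is visible from Python code. The sketch below is CPython-specific: `sys._current_frames()` and `sys._getframe()` are implementation details, not part of the language. It asks for the top frame of a worker thread, i.e. the frame pointer held in that thread's state. It then follows `f_back` pointers down the thread's frame stack until it reaches `None` (NULL at the C level). The function names `frame_chain`, `inner`, `worker` and `snapshot` are hypothetical, invented for illustration.

```python
import sys
import threading


def frame_chain(frame):
    """Follow f_back pointers from a frame down to the bottom of its thread's stack."""
    names = []
    while frame is not None:  # the bottom frame's f_back is None (NULL in C)
        names.append(frame.f_code.co_name)
        frame = frame.f_back
    return names


def inner(ready, release):
    ready.set()      # tell the main thread we are inside inner()
    release.wait()   # park here so this thread's stack stays in place


def worker(ready, release):
    inner(ready, release)


def snapshot():
    ready, release = threading.Event(), threading.Event()
    t = threading.Thread(target=worker, args=(ready, release))
    t.start()
    ready.wait()
    try:
        # CPython-specific: maps each thread's ident to its current (topmost) frame
        frames = sys._current_frames()
        return frame_chain(frames[t.ident])
    finally:
        release.set()
        t.join()


if __name__ == "__main__":
    # Topmost frame first; inner comes before worker, and threading's bootstrap frames come last
    print(snapshot())
```

Each thread has its own chain, and the dictionary returned by `sys._current_frames()` contains one entry per live thread of the interpreter.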

Nice, isn't it? So, we have gone through everything, but it is still unclear what these structures actually mean. What are they for? What is interesting about them? I don't want to complicate things, so I will only briefly mention some of their functions. The interpreter structure, for example, contains fields for working with imported modules, pointers needed for working with Unicode, a field with the dynamic linker flags, and a TSC field for profiling (see the penultimate item here).
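One of those fields is easy to observe from Python. The interpreter keeps a table of already imported modules, which in CPython is exposed as `sys.modules`. As a result, a module is executed only once per interpreter, and later imports return the cached object. Here is a minimal sketch; the helper name `imported_once` is hypothetical.

```python
import importlib
import sys


def imported_once(name):
    """Import a module twice and check that both imports return the same cached object."""
    first = importlib.import_module(name)
    # sys.modules is the interpreter's table of already imported modules
    cached = sys.modules[name]
    second = importlib.import_module(name)
    return first is cached and cached is second


if __name__ == "__main__":
    print(imported_once("json"))  # True
```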

Some fields of the thread structure relate to the details of how that thread executes. For example, the recursion_depth, overflowed and recursion_critical fields are used to catch excessively deep recursion. They make it possible to raise a RuntimeError before the stack of the underlying platform overflows and the whole process crashes. There are also fields related to profiling, tracing and exception handling, and a dictionary for storing arbitrary data.

I think this concludes the story of how a Python process is structured. I hope everything is clear. In the next posts we will get to the real hardcore and talk about frame objects, namespaces and code objects. Get ready.
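Returning briefly to the recursion fields mentioned above, their effect can be observed from Python. This sketch temporarily lowers the limit and catches the resulting error; the helper names are hypothetical. In the Python version the article describes, the exception was a plain RuntimeError. Since Python 3.5 it is RecursionError, which is a subclass of RuntimeError.

```python
import sys


def recurse(n):
    """Recurse without bound; the per-thread recursion counter stops us."""
    return recurse(n + 1)


def hit_recursion_limit(limit=200):
    """Lower the recursion limit temporarily and return the exception raised."""
    old = sys.getrecursionlimit()
    sys.setrecursionlimit(limit)
    try:
        recurse(0)
    except RecursionError as exc:
        return exc
    finally:
        sys.setrecursionlimit(old)  # always restore the previous limit
    return None


if __name__ == "__main__":
    err = hit_recursion_limit()
    print(type(err).__name__, "is a RuntimeError:", isinstance(err, RuntimeError))
```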

Source: https://habr.com/ru/post/191032/
