Giving away a library to a good home

Long ago, in 2008, while working on my dissertation, I became interested in using convolutional neural networks for image recognition. Back then they were not nearly as popular as they are now, and my search for ready-made libraries turned up nothing: the only implementation was in Lush (the language created by the author of convolutional networks, Yann LeCun). I then thought I could implement them in MATLAB using the Neural Network Toolbox, but it turned out that shared weights could not be implemented within that toolbox. So I decided to write my own implementation.

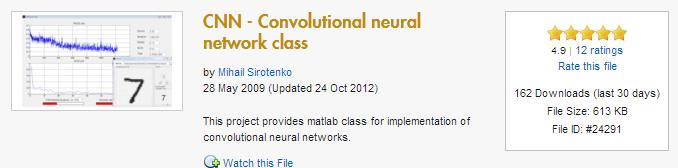

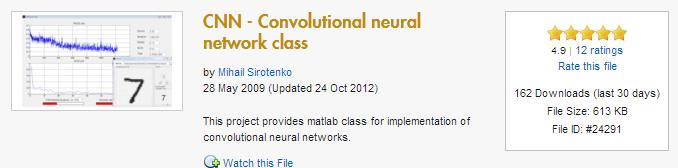
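The shared-weights problem is easy to see in code. In a convolutional layer, one small kernel is applied at every position of the input, so each kernel weight serves many connections. During backpropagation, the gradient of that weight is the sum of its contributions from all of those positions. Below is a minimal pure-Python sketch of the forward pass and the kernel gradient for a single-channel convolution ("valid" cross-correlation, no bias). The function names are illustrative and are not CudaCnn's API.

```python
def conv2d_valid(image, kernel):
    """Forward pass: slide one shared kernel over the image ('valid' cross-correlation)."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    # The same kernel[i][j] is reused at every output position (y, x).
                    s += image[y + i][x + j] * kernel[i][j]
            out[y][x] = s
    return out


def kernel_gradient(image, grad_out, kh, kw):
    """Backward pass for the kernel. Because the weights are shared, the gradient of
    each kernel weight is the sum of its contributions over all output positions."""
    oh, ow = len(grad_out), len(grad_out[0])
    grad_k = [[0.0] * kw for _ in range(kh)]
    for i in range(kh):
        for j in range(kw):
            for y in range(oh):
                for x in range(ow):
                    grad_k[i][j] += grad_out[y][x] * image[y + i][x + j]
    return grad_k
```

Take the 3×3 image `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]` and the kernel `[[1, 0], [0, -1]]`. Every output value is -4. With an upstream gradient of all ones, the kernel gradient is `[[12, 16], [24, 28]]`, because each entry sums four image values, one per output position.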

The first version was written in pure MATLAB. A little later, I implemented the forward and backward propagation functions in CUDA. This version is available on MATLAB Central and still gets 162 downloads a month.

This, incidentally, was the first CUDA implementation of convolutional networks on the Internet.

Based on this implementation, I wrote the post "Using neural networks in image recognition".

Seeing how much interest there was in the topic, I decided to rewrite the library entirely in C++/CUDA, keeping MATLAB support as a frontend. That is how CudaCnn came about. I did all of this development in my spare time. Later I changed jobs, and my capacity for open-source work became very limited.


Since I started working on the library, convolutional neural networks and deep learning have become very popular. Popularity surged after Geoffrey Hinton's team won the ImageNet Large Scale Visual Recognition Challenge by a wide margin. The team used its own CUDA implementation of convolutional networks, written by Alex Krizhevsky. Google now uses this technology for image search.

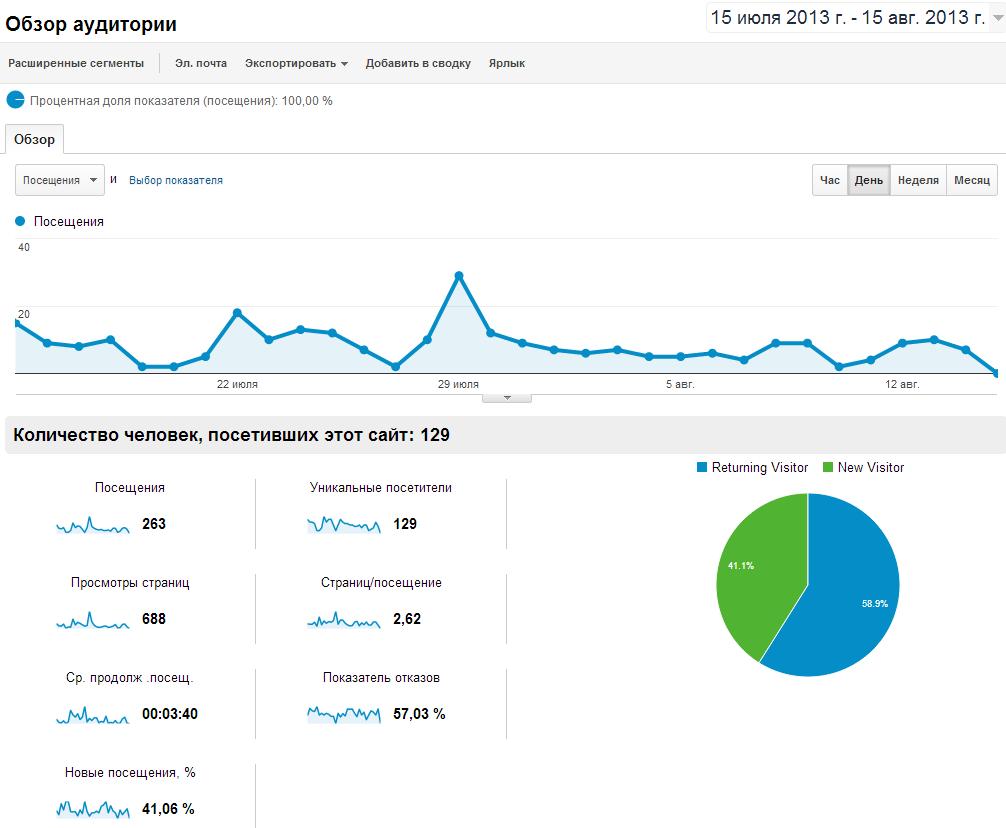

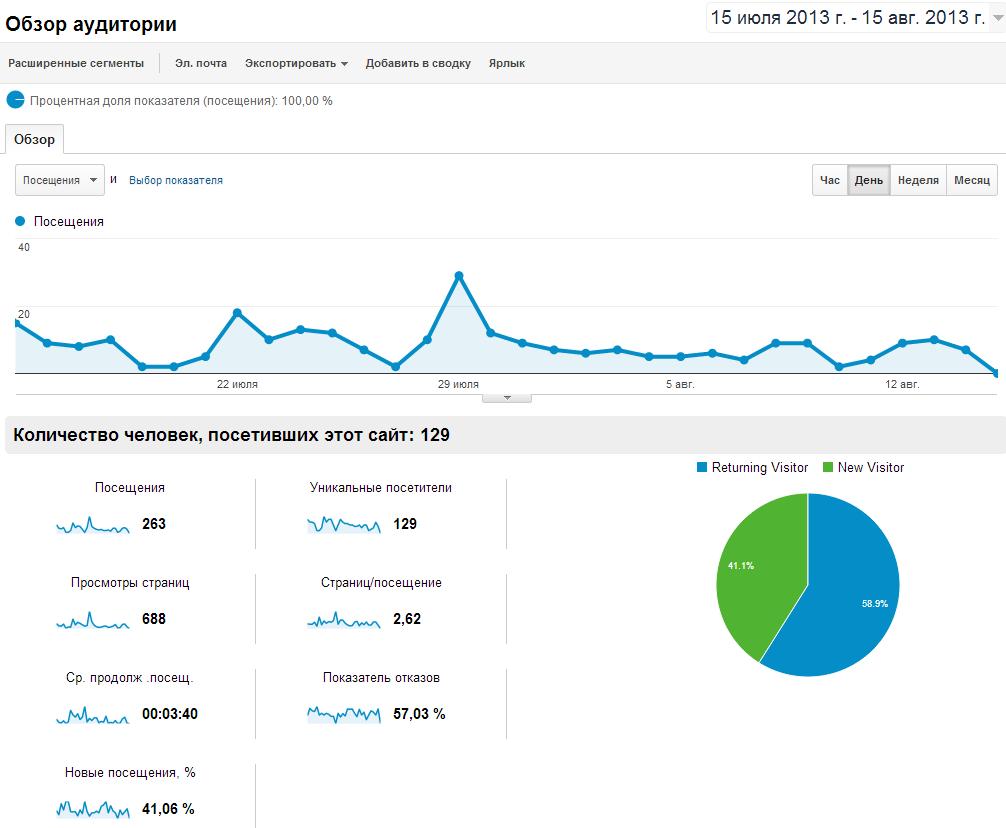

The library is now hosted on Bitbucket, and its description page gets about 130 visitors a month.

That is, of course, not much for a blog, but for a highly specialized library aimed at a narrow circle of researchers it is quite a lot. Most visits come from the United States and China. Users also write to me from time to time asking me to add or fix something, and I don't even have time to answer them.

So here is where things stand: there is a library for training convolutional (and fully connected) neural networks with the following features:

- Runs on both CPU and GPU (CUDA)

- Cross-platform (CMake-based), including builds for ARM with some limitations

- Training methods: stochastic gradient descent, stochastic Levenberg-Marquardt

- Layers: convolutional, pooling, fully connected

- Activation functions: hyperbolic tangent, linear, hyperbolic tangent with normalized variance

- Arbitrary convolution kernel sizes and shapes (other implementations have restrictions here)

- A MATLAB frontend
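The following minimal pure-Python sketch illustrates the activation functions and training methods in the list. It is not CudaCnn code, and every function name is hypothetical. It rests on two assumptions. First, "hyperbolic tangent with normalized variance" is taken to mean the scaled tanh 1.7159 · tanh(2x/3) recommended by LeCun et al. Second, "stochastic Levenberg-Marquardt" is taken to be the stochastic diagonal Levenberg-Marquardt method from LeCun et al. (1998), in which each weight gets its own learning rate. The per-weight second derivatives are passed in as inputs. Computing them requires a separate Gauss-Newton backward pass, which is not shown.

```python
import math

# Activation functions and their derivatives (the derivatives are used in backpropagation).
def tanh_act(x):
    return math.tanh(x)

def tanh_deriv(x):
    t = math.tanh(x)
    return 1.0 - t * t

def linear_act(x):
    return x

def linear_deriv(x):
    return 1.0

# Assumption: "tanh with normalized variance" = LeCun's scaled tanh f(x) = A * tanh(S * x),
# with constants chosen so that f(1) is approximately 1 and f(-1) approximately -1.
A, S = 1.7159, 2.0 / 3.0

def scaled_tanh_act(x):
    return A * math.tanh(S * x)

def scaled_tanh_deriv(x):
    t = math.tanh(S * x)
    return A * S * (1.0 - t * t)

# Plain stochastic gradient descent: one global learning rate for all weights.
def sgd_step(weights, grads, lr):
    return [w - lr * g for w, g in zip(weights, grads)]

# Assumption: stochastic diagonal Levenberg-Marquardt. h_diag is a running estimate of
# the diagonal of the Gauss-Newton Hessian, updated from per-sample second derivatives.
def update_hessian_estimate(h_diag, second_derivs, gamma):
    return [(1.0 - gamma) * h + gamma * d for h, d in zip(h_diag, second_derivs)]

# Each weight moves with its own step size eta / (mu + h_kk).
def sdlm_step(weights, grads, h_diag, eta, mu):
    return [w - (eta / (mu + h)) * g for w, g, h in zip(weights, grads, h_diag)]
```

As an example, take eta = 0.5, mu = 1.0, and a Hessian estimate of 3.0. A weight with gradient 2.0 then moves by 0.5 / (1.0 + 3.0) × 2.0 = 0.25. The mu term keeps the step bounded when the curvature estimate is close to zero.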

There are also things that still need work. In particular, the CPU code is not optimized for speed, the CUDA code also has room for improvement, and Doxygen documentation would not hurt.

As I noted above, I currently have neither the time nor the capacity to maintain the library. If anyone is interested in taking part in its development, I am ready to help in every way I can. This could be useful for anyone who wants to contribute to an open-source project, gain experience in machine learning, apply deep learning to their own tasks, or get experience with CUDA development.

If you are interested, send me a private message.

P.S. I would also like to take this opportunity to advertise a recently opened vacancy related to machine learning and big data.

Source: https://habr.com/ru/post/190132/
