LSI Nytro MegaRAID NMR8100-4i in action

A client at HOSTKEY set me a task: one server with 12 cores and 64 GB of memory needs about 3-4 TB of space for virtual machines of the same type, the storage has to perform as if it were on SSD, and the whole thing has to fit into 16000r per month. We started thinking it over, and there were several options:

No sooner said than done, about 3 working days and 4 options on our table. Let's see what he is capable of - not in advertising brochures, but in practice.

1U Supermicro, 2xE5-2630 2.3GHz 12 cores, 16Gb DDR3 ECC Reg LV x4 = 64GB of memory (can be expanded to 256GB if desired), LSI Nytro MegaRAID NMR8100-4i, 4x2Tb WDC RE 7200rpm HDD (WD2000FYYZ) SATA3. All components on a 3 year warranty.

Monthly price with accommodation and 100M channel - 16000r.

')

8xPCIe 3.0 (E5 and E3 only), 4 SAS / SATA 6Gbps ports, two 64GB SLC SSDs with redundancy of 30% of the space, 1GB of cache per DDR3, all possible RAID levels, support for expanders and a battery can be supplied. Sample price at the time of August 2013 - 27000r, 3 years warranty.

Who wants to read the full review - attention, a lot of letters in English.

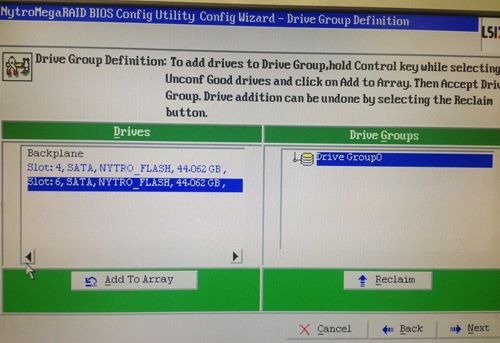

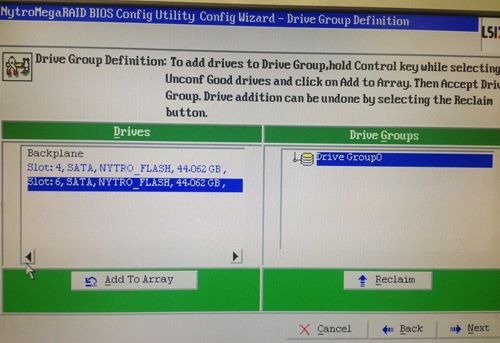

Let's see what our Nitra is capable of. Watch will be under Windows 2012 - the most obvious. First, we configure the necessary arrays in BIOS and see the controller settings - everything is flexibly configured in the most traditional way. The appearance of the utility has not changed for about 15 years.

RAID is configured separately from a pair of embedded SSDs of 44GB, a separate logical volume from the remaining disks. You can make a mirror or a stripe - choose a stripe, especially since the documentation clearly states that if the disk fails, the system will remove it from the file itself. On a logical volume, you can do any RAID - from 0 to 6. Everything is configured traditionally.

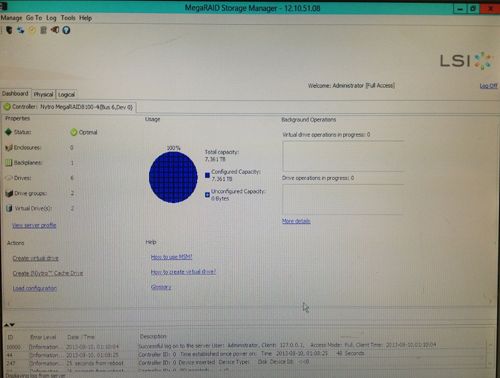

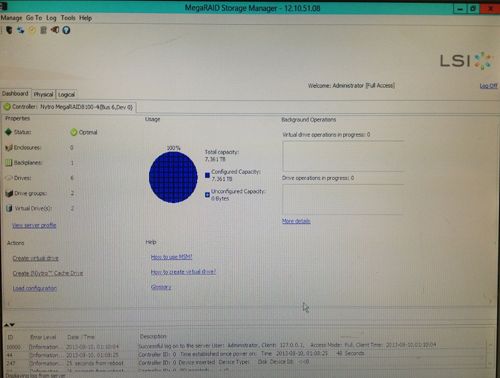

We’ll load the Windows 2012 server, and the Nitra comes with a flash drive with Megaraid Storage Manager - finally. We put it, everything is visible from the box. The software is normal, also did not change for 10 years. Everything is native, in its place. Understandable behavior in case of accidents.

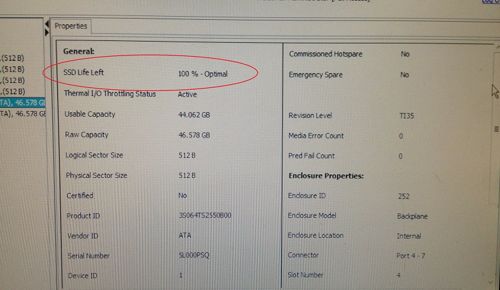

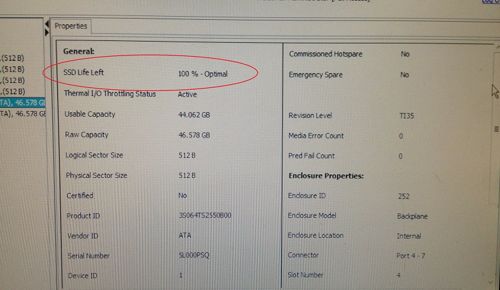

It is very important that now we see the percentage of SSD wear and we can change the controller in a timely manner and take preventive measures. On older controllers, if the SSD was in an array, then getting SMART from it or something else was unrealistic.

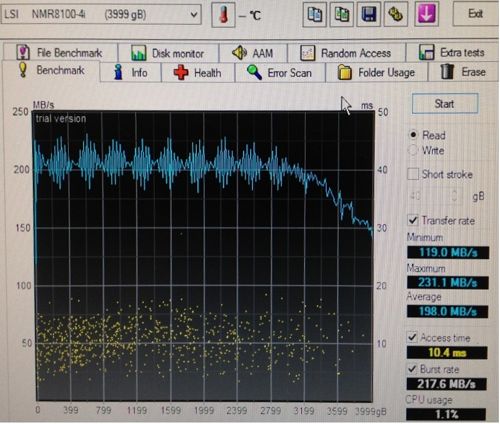

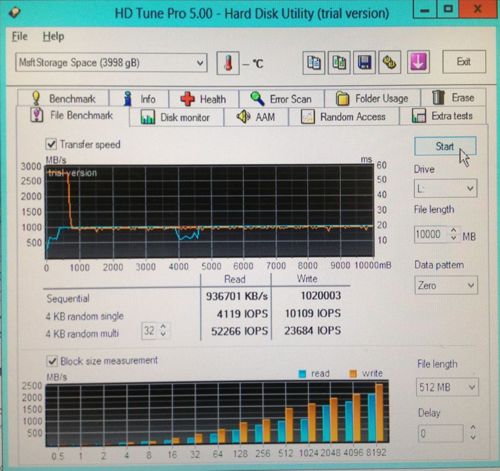

We are compiling a 4TB volume - RAID10 and without marking it we are hitting HD Tune Pro to check for dry performance without the help of the OS and its caches.

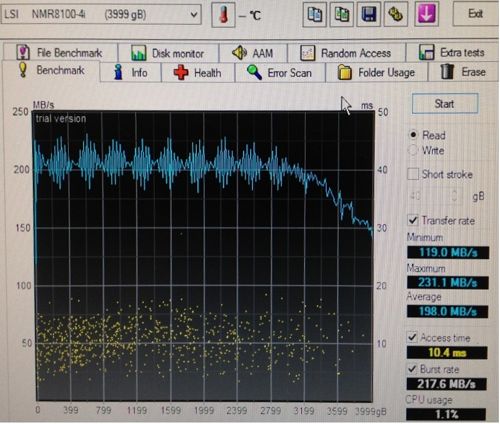

A read run on the entire disk capacity gives the expected result - 200-250MB per second, about 100 IOPS, 10ms. The cache is clearly empty - everything comes from the disk. Not much for 10 RAID, should be thicker. But we have another task, virtualization. Reading performance is usually leveled by OS caches.

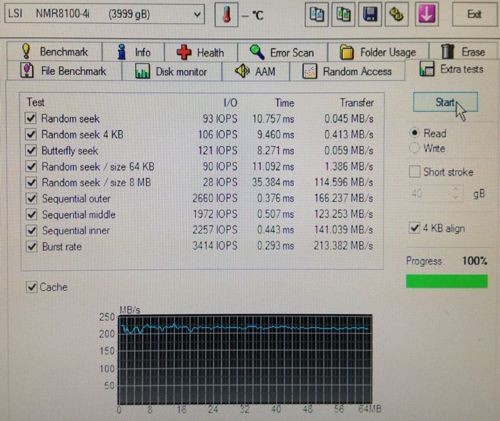

IOPS on random reads - nothing magical, all operations past the SSD - everything is predictably slow, as it should be from a pair of disks.

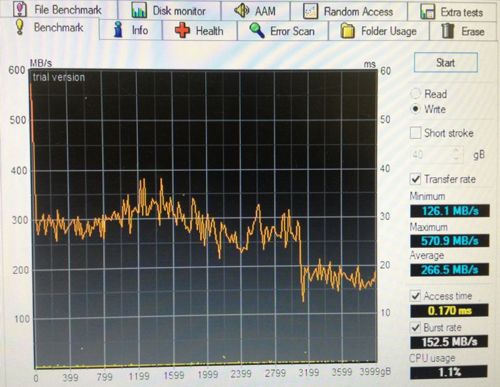

here that is necessary, on average 266 Mb / s and access 0.17 ms. Nitra writes to the SSD and then from it in large blocks to the disks. In the beginning, 1GB of memory of the controller was clogged, then the 3Tb system had enough SSD supply and only the last 1TB was written directly to the disks without a cache, the disks did not have time.

Let's see what is with the record - everything is gorgeous here. In one turn, we see the full-time performance of the SSD - all the records go through it, the disks do not participate.

We have 14,000 IOPS on small blocks with a typical access time of 0.07 ms. Fine! If we commit transactions from the database, they will crash to disk instantly. If the virtual machine decided to pop it up or put something on the disk, it will not affect the disk subsystem.

Now we create the file system and see how the OS will work with files and, in general, with the marked place. All standard tests throughout are not particularly different from the block device tests. Let's set a file on 10 GB and we will chase it.

First run, the file is not yet in cache. The first gig on the recording fails through memory at speeds of about 3 Gb / s, then it slows down to 1000 Mb / s, after 50 GB it probably does not fit into the SSD and starts writing to 250 Mb / s disks.

IOPS values are removed from the SSD cache - one thread 4000 for reading and 10,000 for writing, great! In 32 threads - 52000 for reading and 23000 for writing, apparently 32 threads are overkill. Files of 512MB in size fall into the RAM cache of the controller and are distributed from there at a PCIe speed of 2.5 GB per second.

The repetition of the operation shows visually how a file is distributed from a pair of SSDs - a flat shelf of 1000 MB per second - faster than RAID0 on a pair of integrated SSDs does not accelerate. IOPS is not affected.

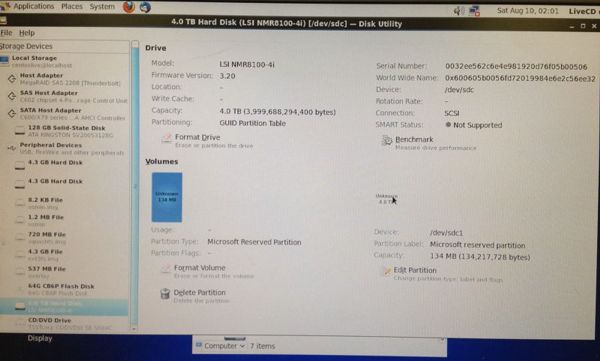

Let's try to boot in the fresh Tsenthos and check if our Nitra is visible without a tambourine. The answer is correct, LSI is true for itself - everything can be seen immediately and without prompts.

Let's test the regular benchmark, the first time is a dull picture, the second time is much more fun! An average reading of 426 MB / s with a delay of about 10 ms, is permissible when testing the surface at 4 TB.

We try as earlier LSI cards see our RAID10 - I took 4 different controllers such as LSI 9260 and Intel OEM - none of them could load the array configuration. Perhaps 9266 will be able to read it, but there were none on hand. In general, it is necessary to keep this in mind when forming the SPTA.

In conclusion, I can say this - the Nytro MegaRAID NMR 8100-4i is available for order at HOSTKEY at a price of 1500r per month to the price of an ordinary 4-port RAID controller in Moscow and +30 Euro to custom servers in the Netherlands. It is more profitable and more reliable to use pure SSD to build file systems and more reliably than building software filers with in-memory caches.

Nitra is an excellent support for large database servers and intracorporate virtualization, when you can or need to do without a global storage system. 4-8 SAS / SATA drives + Nitra = SSD performance without SSD and RAID reliability.

On the basis of Nitra, we will speed up the work of our virtualization cluster in the Netherlands in the very near future and I think we will significantly increase reliability and performance, which I will accomplish further.

Author - CEO HOSTKEY

- We do everything on SSD: take six 500 GB disks of the Samsung 840 Pro eMLC type, assemble them into a stripe or something more cunning, add a couple of 3 TB disks for backups and ... do not fit into the budget. A 2U server, an 8-port RAID controller and drives at 12000r apiece simply do not fit.

- We do everything on hard drives: take 12x 300 GB SAS 15K disks and again fit neither the budget nor the performance target.

- We use a RAID controller with an external SSD cache - LSI CacheCade or Adaptec MaxCache. The idea is better, but we need 4 disks of 2 TB in RAID10, so we have to take an 8-port controller plus a 120 GB eMLC or SLC SSD for it. To give the SSD a drive bay, we again have to take a 2U case. The 8-port controller plus SSD costs about 37000r, so we do not fit into the budget.

- We use the new LSI Nytro MegaRAID NMR8100-4i. We take a 1U system, put the controller in it, add 4 disks of 2 TB in RAID10 and fit both the performance target and the budget. Nitra costs 27000r and already has two 44 GB SSDs on board, and SLC ones at that!

No sooner said than done: about 3 working days later, option 4 was on our table. Let's see what it is capable of - not in advertising brochures, but in practice.

Machine assembly:

1U Supermicro, 2x E5-2630 2.3 GHz (12 cores in total), 4x 16 GB DDR3 ECC Reg LV = 64 GB of memory (expandable to 256 GB if desired), LSI Nytro MegaRAID NMR8100-4i, 4x 2 TB WDC RE 7200 rpm HDD (WD2000FYYZ) SATA3. All components carry a 3-year warranty.

Monthly price including hosting and a 100 Mbps channel - 16000r.

Specification of our Nitra:

PCIe 3.0 x8 (E5 and E3 platforms only), 4 SAS/SATA 6 Gbps ports, two 64 GB SLC SSDs with 30% of the space held in reserve (which leaves about 44 GB usable on each), 1 GB of DDR3 cache, all the usual RAID levels, expander support, and an optional battery. Approximate price as of August 2013 - 27000r, with a 3-year warranty.

For those who want to read the full review: be warned, it is a lot of text, and in English.

Tests

Let's see what our Nitra is capable of. We will test under Windows 2012, where the results are easiest to show. First, we configure the necessary arrays in the controller BIOS and look through the controller settings - everything is flexibly configurable in the most traditional way. The appearance of the utility has not changed for about 15 years.

RAID

The pair of embedded 44 GB SSDs is configured as its own RAID, and a separate logical volume is built from the remaining disks. The SSDs can be set up as a mirror or a stripe; we choose a stripe, especially since the documentation clearly states that if an SSD fails, the system will take it out of service by itself. The logical volume can use any RAID level from 0 to 6. Everything is configured in the traditional way.
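To make the capacity trade-off between RAID levels concrete, here is a minimal Python sketch that computes usable space for the four 2 TB disks in this build. The function name and the set of levels covered are illustrative assumptions, not anything taken from LSI's tooling; the code only encodes the textbook capacity rules for equal-size disks.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB for common RAID levels with equal-size disks.

    Hypothetical helper for illustration; it ignores metadata overhead.
    """
    if level == "0":            # striping, no redundancy
        return disks * disk_tb
    if level == "1":            # mirror: capacity of a single disk
        if disks < 2:
            raise ValueError("RAID1 needs at least 2 disks")
        return disk_tb
    if level == "5":            # one disk's worth of parity
        if disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return (disks - 1) * disk_tb
    if level == "6":            # two disks' worth of parity
        if disks < 4:
            raise ValueError("RAID6 needs at least 4 disks")
        return (disks - 2) * disk_tb
    if level == "10":           # striped mirrors: half the raw space
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, 4 or more")
        return disks / 2 * disk_tb
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    for level in ("0", "5", "6", "10"):
        print(f"RAID{level}: {usable_capacity_tb(level, 4, 2.0):.1f} TB usable")
```

For four 2 TB disks, RAID10 comes out at 4 TB, which matches the volume used in the tests.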

Ready setup

We boot Windows Server 2012. Nitra ships with a flash drive carrying MegaRAID Storage Manager - finally. We install it, and everything is visible out of the box. The software is decent and has also not changed in 10 years. Everything is familiar and in its place, and its behavior in case of failures is predictable.

It is very important that we can now see the SSD wear percentage, so we can replace the controller in good time and take preventive measures. On older controllers, if an SSD was part of an array, getting SMART data or anything else out of it was unrealistic.

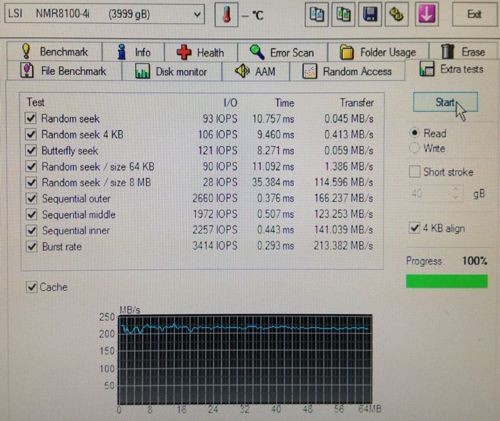

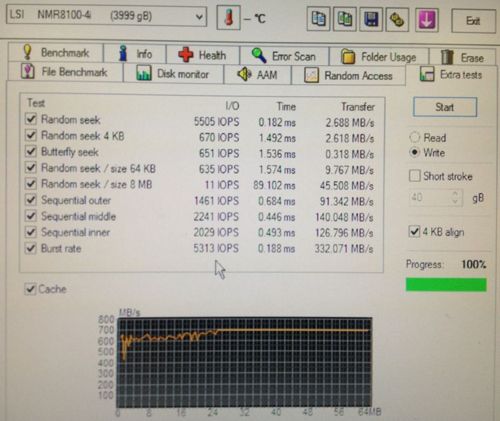

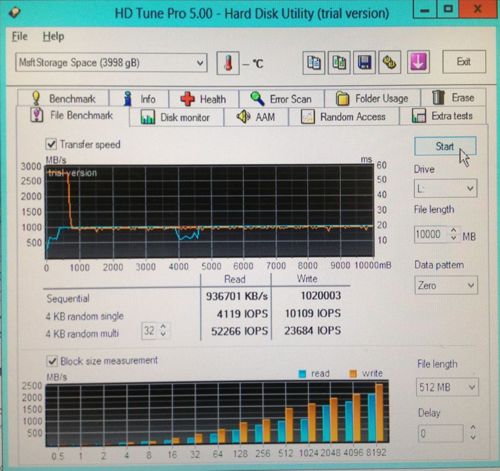

Block device run

We assemble a 4 TB RAID10 volume and, without partitioning it, run HD Tune Pro against it to check raw performance without any help from the OS and its caches.

Reading:

A read run across the entire capacity gives the expected result: 200-250 MB per second, about 100 IOPS, 10 ms access time. The cache is clearly empty, so everything comes from the disks. That is not much for RAID10; it ought to be higher. But our task is a different one, virtualization, where read performance is usually evened out by OS caches.

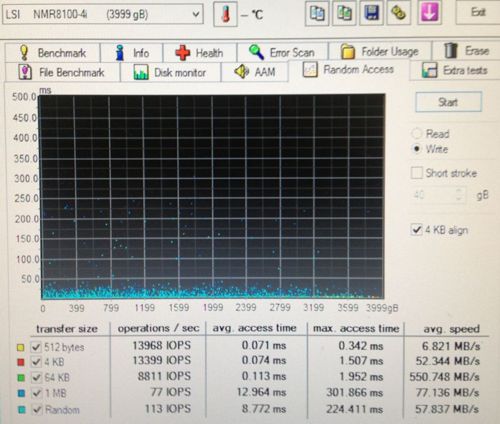

IOPS on random reads are nothing magical: all operations bypass the SSD, so everything is predictably slow, as you would expect from a pair of disks.

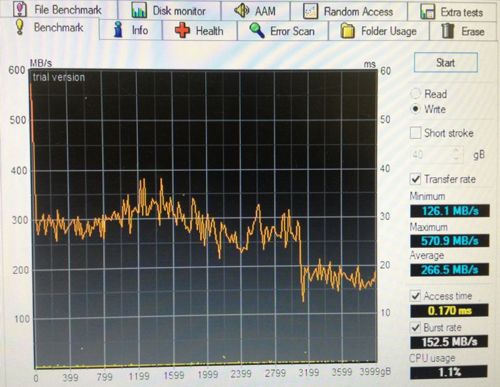

Writing:

Here is what we need: 266 MB/s on average with an access time of 0.17 ms. Nitra writes to the SSD first and then flushes from it to the disks in large blocks. At the start the controller's 1 GB of memory filled up, then for the first 3 TB the SSD reserve was enough, and only the last 1 TB was written directly to the disks without the cache, because the disks could not keep up.

Let's see how write IOPS look - everything is gorgeous here. In a single queue we see the native performance of the SSD: all writes go through it, and the disks do not take part.

We get 14,000 IOPS on small blocks with a typical access time of 0.07 ms. Excellent! If a database commits transactions, they land on disk instantly. If a virtual machine decides to swap or dump something to disk, it will not affect the disk subsystem.

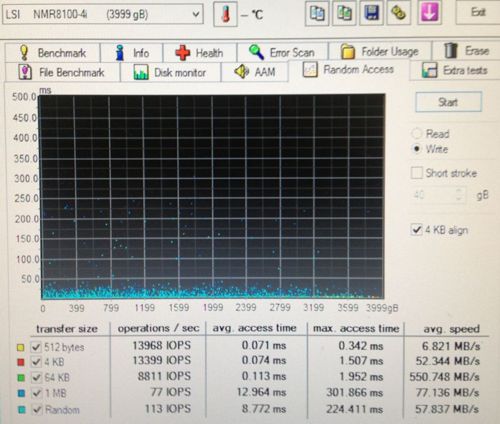
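The access times and IOPS figures quoted in this section are two views of the same measurement: with one request in flight at a time, IOPS is roughly the reciprocal of the access time. The sketch below checks that against the numbers from this run. The helper name is hypothetical, and the single-outstanding-request model is a simplifying assumption.

```python
def iops_at_queue_depth_one(latency_ms: float) -> float:
    """Approximate IOPS when exactly one request is outstanding at a time."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms  # 1000 ms per second / ms per operation


if __name__ == "__main__":
    measurements = (
        ("random read from the HDDs", 10.0),      # access time from the read run
        ("small-block write via the SSD", 0.07),  # access time from the write run
    )
    for label, latency_ms in measurements:
        iops = iops_at_queue_depth_one(latency_ms)
        print(f"{label}: {latency_ms} ms -> about {iops:.0f} IOPS")
```

Both results land close to the measured values: about 100 IOPS for reads that miss the cache, and a little over 14,000 IOPS for writes absorbed by the SSD.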

File system run

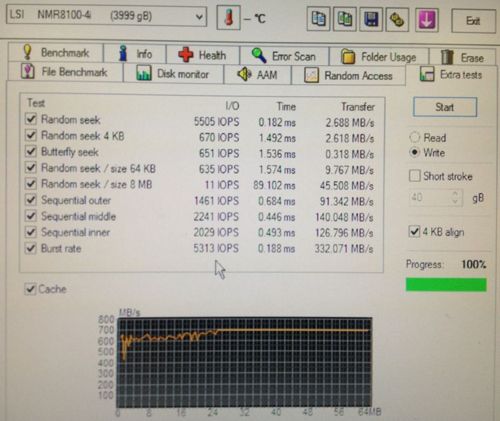

Now we create a file system and see how the OS works with files and with the partitioned space in general. The standard tests across the whole volume do not differ much from the block device tests. Let's create a 10 GB file and put it through its paces.

First run: the file is not yet in the cache. The first gigabyte of the write falls through the controller memory at about 3 GB/s, then the speed drops to 1000 MB/s, and after 50 GB the data apparently no longer fits into the SSD and goes to the disks at 250 MB/s.
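The three speed plateaus in this run can be turned into a rough estimate of how long a write of a given size takes. The sketch below is a piecewise model under stated assumptions: the rates are read as gigabytes and megabytes per second, the tier boundaries (1 GB and 50 GB) are taken from this single run, and the names `TIERS` and `write_time_seconds` are made up for illustration.

```python
# (upper bound of the tier in GB, sustained rate in GB/s), from the first run:
# controller RAM up to ~1 GB, SSD cache up to ~50 GB, plain disks after that.
TIERS = [(1.0, 3.0), (50.0, 1.0), (float("inf"), 0.25)]


def write_time_seconds(size_gb: float, tiers=TIERS) -> float:
    """Estimate the time to write size_gb sequentially through the cache tiers."""
    elapsed = 0.0
    written = 0.0
    for upper_gb, rate_gb_s in tiers:
        if written >= size_gb:
            break
        # Portion of the write that falls inside this tier.
        chunk = min(size_gb, upper_gb) - written
        elapsed += chunk / rate_gb_s
        written += chunk
    return elapsed


if __name__ == "__main__":
    for size in (0.5, 10.0, 100.0):
        print(f"{size:6.1f} GB -> {write_time_seconds(size):7.1f} s")
```

The model makes the practical point visible: as long as a burst stays within the SSD tier it is written at close to SSD speed, and only the part that spills past it is paid for at disk speed.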

The IOPS figures are served from the SSD cache: 4000 for reading and 10,000 for writing in one thread, great! In 32 threads it is 52000 for reading and 23000 for writing; apparently 32 threads are overkill. Files of 512 MB fit into the controller's RAM cache and are served from there at PCIe speed, 2.5 GB per second.

Repeating the operation shows clearly how the file is served from the pair of SSDs: a flat plateau at 1000 MB per second. RAID0 on the pair of integrated SSDs does not go any faster than that. IOPS are unaffected.

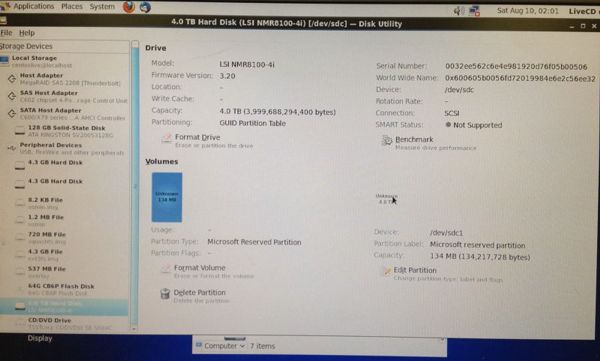

Linux

Let's boot a fresh CentOS and check whether our Nitra is visible without any shamanic rituals. The answer is yes; LSI is true to itself - everything is detected immediately and without prompting.

Let's run the standard benchmark: the first pass is a dull picture, the second is much more fun! An average read speed of 426 MB/s with a latency of about 10 ms is acceptable when testing the whole 4 TB surface.

We also check how earlier LSI cards see our RAID10. I took 4 different controllers, such as the LSI 9260 and Intel OEM models, and none of them could load the array configuration. Perhaps a 9266 would be able to read it, but there were none on hand. In general, keep this in mind when planning your spare parts kit.

What should Nitra be used for?

- For virtualization: VM disks do not contain that many actively changing fragments. The statistics from our cloud VPS nodes show that for each read operation there are 2-3 write operations that reach the disk subsystem. Over a day, 10% of the used space is overwritten and at most 5-6% of the total capacity is re-read. Measuring your own figures is elementary: run iostat for 24 hours and you will learn everything about your workload.

- For databases: if the database does not fit entirely into memory, Nitra will be a lifesaver. Everything important, including indexes, will sit on the SSD and in memory, while the unimportant bulk of large reference tables stays on disk. All write transactions are committed instantly, with nothing left waiting.
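If you would rather compute the write-to-read ratio yourself than read it off iostat output, the sketch below parses two snapshots of Linux `/proc/diskstats` and divides completed writes by completed reads. The sample snapshots and the device name `sda` are made up for illustration; on a real host you would read the file twice, some hours apart, and pass in both texts.

```python
def parse_diskstats(text: str) -> dict:
    """Map device name -> (reads_completed, writes_completed).

    In /proc/diskstats the device name is the 3rd field, reads completed
    the 4th and writes completed the 8th.
    """
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip blank or truncated lines
        stats[fields[2]] = (int(fields[3]), int(fields[7]))
    return stats


def writes_per_read(before: str, after: str, device: str) -> float:
    """Write operations per read operation between two snapshots."""
    reads_0, writes_0 = parse_diskstats(before)[device]
    reads_1, writes_1 = parse_diskstats(after)[device]
    reads, writes = reads_1 - reads_0, writes_1 - writes_0
    if reads == 0:
        return float("inf") if writes else 0.0
    return writes / reads


# Invented sample data standing in for two reads of /proc/diskstats.
BEFORE = "   8       0 sda 1000 0 80000 500 2500 0 200000 900 0 700 1400"
AFTER = "   8       0 sda 2000 0 160000 1000 5000 0 400000 1800 0 1400 2800"

if __name__ == "__main__":
    ratio = writes_per_read(BEFORE, AFTER, "sda")
    print(f"sda: {ratio:.1f} writes per read")
```

A ratio well above 1, as on the VPS nodes described here, suggests the workload is write-heavy enough to benefit from a write-back SSD cache.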

What it definitely should not be used for:

- For large media archives, video sites, streaming, backups and backup storage. There will be no benefit: the cache will not kick in or will be too small to matter. For websites there is not much point either: everything particularly important fits nicely in memory and is served from there, and the unimportant rest will not be cached at the block level.

- For ZFS and other file systems that want to see the disks themselves. The cache will not understand the file system's logic and will be wasted.

- For video surveillance and non-linear editing: long linear reads and writes are not what Nitra is about.

Summary

In conclusion, I can say this: the Nytro MegaRAID NMR8100-4i is available to order at HOSTKEY for an extra 1500r per month on top of the price of an ordinary 4-port RAID controller in Moscow, and +30 Euro on custom servers in the Netherlands. It is more cost-effective than building storage on pure SSD, and more reliable than building software filers with in-memory caches.

Nitra is an excellent aid for large database servers and in-house corporate virtualization, when you can or must do without a centralized storage system. 4-8 SAS/SATA drives + Nitra = SSD performance without SSDs, plus RAID reliability.

We will use Nitra to speed up our virtualization cluster in the Netherlands in the very near future, and I think we will significantly increase its reliability and performance, which I will report on later.

Author - CEO HOSTKEY

Source: https://habr.com/ru/post/189634/
