URiX - Review of Not uNix

I Draw

In the beginning was “I”

Hello!

I often research different tasks and problems, and one of them turned out to be that there are many many GNU distributions, but all of them work in one way or another according to the principles of unix, that is, as such, development does not occur, programs are developed, the core, but the structure is was x-year-old and remained, in general, this is due to compatibility problems that may arise in the event of an attempt to jump on the spot. I decided to think about what could be the development of GNU / Linux and at the same time about the options for combining communities of different distributions, so I suspect that there are adherents including window crafts, and bsdelok, and for me and non-xx, about all the same, at least in terms of the distribution of transactions between cache / caches of processor cores, I suggest immediately git together.

The task of this post is to attract the attention of the audience to the problem, assess the degree of its importance and try to find a better performing solution, I would like to have good intentions and possible voluntary assistance in the implementation.

Beware of odd words! Text below is very hard to read and understand!

Unreadable-Formation-Occurrence spotted!

The loading process is often quite long (regarding the execution of programs for example) and this can be simplified using hardware profiles and snapshots (snapshots) of memory, I once suggested using the simutrans x-kernel model, it may already be working, or when they will do it because there are not so many alternatives in using the transaction scheme and the position of the soft-core in memory in order to distribute the threads between different processor cores in order to get a reduction in the processing time of them Using the computing factories of all the physical cores of the processor, and not just the one on which the current code is executed.

At the boot kernel stage, the initial instructions will be loaded - the cpu mem bus, so that the first wave of services can determine the basic equipment and load modules for it, but even before the primary user (root) is activated, each computer must have its own hash code for this user, to be the owner of all processes and resources. For me, this is important because now any root can view files on an unencrypted system and gain access to any hardware. Therefore, I decided that there should be a system-root-hash user that can be authenticated if the user did not ignore such a function during installation. The same hash can be used as a salt to encrypt user / home, pam / ssl, and therefore the pam-auth service must also be loaded in the first wave along with the basic equipment so that additional authentication can be performed - keyboard, video, sound (yeah, I want voice authentication and voice commands), can be data storage. I think all this is already done by the Linux kernel, you only need to raise the authentication so that you can then manage the resources of the hash user and delegate them to real users.

Knowing that all the data will then be transferred via aufs, you can try to separate them into a more computer-readable form, I think that all executable files should not be dumped into one directory, since it takes time when listing, searching and matching files in this directory , we divide them into heaps at least having human logic, for example 1,2,3- / a / b / c as for example in the spool / squid hierarchy, but I think it may be better to divide scripts / php as follows. js.sh and execs / c.java.fortran texts / html.txt.pdf - that is, such reverse sorting by extension it is easier to determine the interpreter or program for opening this file type integrated into system processes, so that in any case any jpg can be found in data / img / jpg and any cron script in scripts / sh. I’m not completely sure that this should definitely be like this, I just think that if all permissions and restrictions in the kernel are about the same and are executed from one user, then there’s actually no need for dividing into additional folders - this logic is needed more for admins and programmers, and the computer needs the starting point of the file and to which buffer to load / unload it, so maybe just such a name is 01.011.this.program.file.jpg - yes, this is a new / non-unix / scheme and here it can also be The priority and the order of loading the file by the kernel are noted.

and a human-friendly form will appear only at the next higher level, which can easily reproduce the unix data scheme using empty hard symlinks in squashfs

An executable kernel loaded into memory can be recompiled at least once according to the tasks it performs for each machine, something like initrd.img, so that it does not need to detect and load non-existent hardware modules and this is a kind of kernel sharpening for iron and most frequent tasks Such statistics can be collected for several working sessions, and the exhaust will be in a finer allocation of resources and a simple check on the identity of the equipment - then the sharpened core can be immediately loaded into memory (snapshot) without initialization and other things, the basic functions of the boot core are stitched to it and immediately after loading into memory this kernel can already work.

After the first wave, the kernel load ends, and the second causes extended services of the system and equipment — the network, x.org, cron, additional devices, file systems, management for users and everything else related to the basic functionality of the system, that is, gnome, apache, squid is not loaded here, but compilers, interpreters, drivers, fonts are loaded, so that the user can already cope with their services

on the second wave, the standard nix model / usr / lib / bin is built up to create a working environment for unix / gnu programs using aufs, zfs or virtual machines so that you can move away from the rawx kernel abstraction level and emulate the work of the gnu / linux model for it end-user interfaces.

at the top of the rawx operation, we get a virtual linux-compatible kernel that works with user processes, that is, the real kernel is reliably protected behind the virtual layer, but it also means that we can have as many cores as the resources allow and will keep the hardware, so deal with the distribution of memory and cp resources between users - this is approximately like a virtual machine, only without a machine, we turn to kernel services, perhaps this is already implemented in KVM, that is, we are not virtualizing the kernel and

machine, but only high-level processes

In general, the kernel loads and intercepts links from different libraries, emulates the environment in some strange cases and emulates distribution structures when necessary for each user or process.

Sometimes, I imagine users as a process, and processes as users - such a model can show how to distribute programs between users, why one process cannot have different users? Now each user runs his own copy of the program, although he basically needs only some of its functions, it is possible to divide this program into basic logic, system libraries and user data

then everyone will not need to launch their “own” browser - all users run one process in which the basic logic and system libraries are loaded, and all user ones are loaded into a separate memory space, much smaller than the program itself. Users only work with separate cache and memory, personal settings are contained in the profile and are stored in the browser memory snapshot for a specific user, and when there is no activity for all users, this process is also unloaded entirely from memory in squashfs or img.gz, that is, if there is one If it started successfully and also completed successfully, then this image of the process is saved and used by the system without reloading all configurations, libraries and interpreters - a snapshot (snapshot) of the memory of this process is loaded Ssa, which immediately works in the same way as the rawx kernel, and users connect their settings to this process and work through agents.

Core profiling

Several levels of kernel loading allow you to return to the level below and dump accumulated errors or simply unload programs and equipment from memory, this allows you to create kernel profiles to perform certain capacious tasks, for example, virtual machines use quite a lot of iron resources, but do not require loading all compilers and libraries, and the calculation of 3D models or programming-compilation of the code need to load only some of the libraries and can fully load the system, that is, require power n and memory tasks in a narrow range. In such cases, the core can be redeveloped on-the-fly, and program memory cards and libraries can also be useful for this. In principle, the Linux kernel now allows unloading and loading modules and drivers during operation, the task is to do this automatically depending on the running applications (tasks), so that user intervention is not required, and there are not so many functions At the same time, it is not necessary to search for these functions by the memory stacks during the execution of programs.

Some assigned tasks will require a static arrangement of some inputs / outputs of the kernel, so that other programs can access them directly and this can be spelled out when compiling the programs, however such libraries will not be too frequent and in general you only need to connect user-level agents to them Let's say that these agents will cope with the assignment of requests and responses - they will become a kind of intelligent services, they need to be provided with high speed, therefore, stats are offered Other memory addresses are buffer stacks for certain libraries; this can be compared with partitioning a disk into different areas. After the second recompilation of the kernel, the free memory addresses will be accurately known, if you specify a small interval for data exchange between libraries, then theoretically it can help in solving some problems.

The X-core model assumes the use of several cores and the distribution of functions of the common Linux-core between them, in such a model there should be a fairly rigid structure of parallelization of functions and distribution of processor-a-core resources (s), which means that deep work with memory, which may already create certain static points for internal work, I think that it will be necessary to connect the libraries directly to these points - the compilation will take place on-the-fly. And in fact, the compilation will not need to occur and traditional application loading will not occur, since the application will occupy its previous place in memory along with all the necessary modules from the snapshot of memory stored in squashfs and connect to the base entry points of the rawx-core.

Possible problems will arise when there is a lack of memory and the forced transfer of programs from one area to another, then these programs will need to be restarted to recompile their squash image in accordance with the new constitution of memory. Probably, prioritization should be introduced for processes so that unnecessary conflicts do not occur.

in general, everything has already been done in parallel computing programs, in distributed cloud solutions, oscar , openstack , moSix render farms, or even squid, nginx + fpm, that is, you don’t need to do just such a bike, but just generalize the knowledge and apply it in a new light .

in principle, some problems between network machines can be solved using squashfs modules, as is done in magos , that is, transferring a file system with the necessary working files to a client host, for a particular program, these files can be saved there, but this is a special case of parallelization, in general, a unified stack of libraries is needed for several types of programs that the user will load into his local virtual core and it turns out that this can also be solved using squashfs for several cores on one or not how many processors of one computer.

P / S

I am far from a system programmer or architect, I studied asm only in terms of mov (ax, bx), system administration is my weakness, I try hard not to get involved in it and do not abuse it, so if there is true knowledge in the paths, correct it, and if the question is not even interesting and does not cause conscious motivation, but only emotional reactions - you can simply skipanut post or try to comprehend

encore une foi, ')

My site will not sustain the popularity, so there are no links here, and I wrote in GooglePly in English.

In general, I am not sure that the topic is of concern to anyone, the question of the development of open source software is deeply cashless.

Some of this is difficult to understand, because it seems to me that these are not simple things and to explain to everyone and everyone — it is impossible in a short period of time until the concentration of attention of the consciousness subsides, on average for new information it is 2-4 minutes ± 2 time of popular music. You can slightly explore your musical preferences, find your favorite track, see its duration, it is better to highlight the moment that you especially like - this is the likely peak of perception.

I'm used to a minute and a half. ” )

It’s interesting to invent, but gaps arise ... between what I came up with and what other people know, because in order to create and create something you need to analyze a lot of what has already been done, understand mistakes, forgive differences, determine probable directions - this is called intuition, to confirm the correctness, I cannot describe all my initial data, I think that this is not necessary, I proposed a model, and partly in the comments there is already a proof of the concept in the form of links to other projects that are already implemented a similar i n edlagayu move on the basis of current data, I thought I had a bicycle or a wheel, but it turns out it's a flying saucer. " )

To "our open source software" was - it must be done.

btw 10x 4 add. + read ++ understand

In the beginning was “I”

Hello!

I often research different tasks and problems, and one of them turned out to be that there are many many GNU distributions, but all of them work in one way or another according to the principles of unix, that is, as such, development does not occur, programs are developed, the core, but the structure is was x-year-old and remained, in general, this is due to compatibility problems that may arise in the event of an attempt to jump on the spot. I decided to think about what could be the development of GNU / Linux and at the same time about the options for combining communities of different distributions, so I suspect that there are adherents including window crafts, and bsdelok, and for me and non-xx, about all the same, at least in terms of the distribution of transactions between cache / caches of processor cores, I suggest immediately git together.

The task of this post is to attract the attention of the audience to the problem, assess the degree of its importance and try to find a better performing solution, I would like to have good intentions and possible voluntary assistance in the implementation.

Beware of odd words! Text below is very hard to read and understand!

Unreadable-Formation-Occurrence spotted!

The loading process is often quite long (regarding the execution of programs for example) and this can be simplified using hardware profiles and snapshots (snapshots) of memory, I once suggested using the simutrans x-kernel model, it may already be working, or when they will do it because there are not so many alternatives in using the transaction scheme and the position of the soft-core in memory in order to distribute the threads between different processor cores in order to get a reduction in the processing time of them Using the computing factories of all the physical cores of the processor, and not just the one on which the current code is executed.

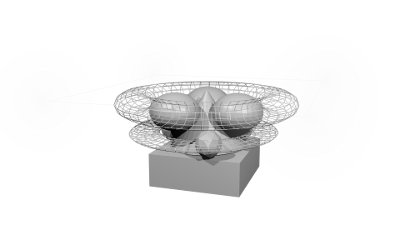

RawX Visual Core Model

')

cone - root hash user

cube - boot kernel

icosahedra - the first wave of core services

spheres - the second wave of services

bagels are peripherals loaded by services from a hash-root user

at the very top - user-friendly environmental process

the task of review of "not unix" - (understand and forgive) to make the system more flexible and stable

approximately three states

logic-structure-data and also boot-system-user

')

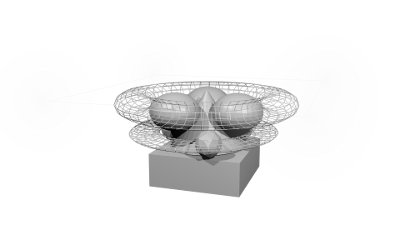

cone - root hash user

cube - boot kernel

icosahedra - the first wave of core services

spheres - the second wave of services

bagels are peripherals loaded by services from a hash-root user

at the very top - user-friendly environmental process

the task of review of "not unix" - (understand and forgive) to make the system more flexible and stable

approximately three states

logic-structure-data and also boot-system-user

At the boot kernel stage, the initial instructions will be loaded - the cpu mem bus, so that the first wave of services can determine the basic equipment and load modules for it, but even before the primary user (root) is activated, each computer must have its own hash code for this user, to be the owner of all processes and resources. For me, this is important because now any root can view files on an unencrypted system and gain access to any hardware. Therefore, I decided that there should be a system-root-hash user that can be authenticated if the user did not ignore such a function during installation. The same hash can be used as a salt to encrypt user / home, pam / ssl, and therefore the pam-auth service must also be loaded in the first wave along with the basic equipment so that additional authentication can be performed - keyboard, video, sound (yeah, I want voice authentication and voice commands), can be data storage. I think all this is already done by the Linux kernel, you only need to raise the authentication so that you can then manage the resources of the hash user and delegate them to real users.

Knowing that all the data will then be transferred via aufs, you can try to separate them into a more computer-readable form, I think that all executable files should not be dumped into one directory, since it takes time when listing, searching and matching files in this directory , we divide them into heaps at least having human logic, for example 1,2,3- / a / b / c as for example in the spool / squid hierarchy, but I think it may be better to divide scripts / php as follows. js.sh and execs / c.java.fortran texts / html.txt.pdf - that is, such reverse sorting by extension it is easier to determine the interpreter or program for opening this file type integrated into system processes, so that in any case any jpg can be found in data / img / jpg and any cron script in scripts / sh. I’m not completely sure that this should definitely be like this, I just think that if all permissions and restrictions in the kernel are about the same and are executed from one user, then there’s actually no need for dividing into additional folders - this logic is needed more for admins and programmers, and the computer needs the starting point of the file and to which buffer to load / unload it, so maybe just such a name is 01.011.this.program.file.jpg - yes, this is a new / non-unix / scheme and here it can also be The priority and the order of loading the file by the kernel are noted.

and a human-friendly form will appear only at the next higher level, which can easily reproduce the unix data scheme using empty hard symlinks in squashfs

An executable kernel loaded into memory can be recompiled at least once according to the tasks it performs for each machine, something like initrd.img, so that it does not need to detect and load non-existent hardware modules and this is a kind of kernel sharpening for iron and most frequent tasks Such statistics can be collected for several working sessions, and the exhaust will be in a finer allocation of resources and a simple check on the identity of the equipment - then the sharpened core can be immediately loaded into memory (snapshot) without initialization and other things, the basic functions of the boot core are stitched to it and immediately after loading into memory this kernel can already work.

After the first wave, the kernel load ends, and the second causes extended services of the system and equipment — the network, x.org, cron, additional devices, file systems, management for users and everything else related to the basic functionality of the system, that is, gnome, apache, squid is not loaded here, but compilers, interpreters, drivers, fonts are loaded, so that the user can already cope with their services

on the second wave, the standard nix model / usr / lib / bin is built up to create a working environment for unix / gnu programs using aufs, zfs or virtual machines so that you can move away from the rawx kernel abstraction level and emulate the work of the gnu / linux model for it end-user interfaces.

at the top of the rawx operation, we get a virtual linux-compatible kernel that works with user processes, that is, the real kernel is reliably protected behind the virtual layer, but it also means that we can have as many cores as the resources allow and will keep the hardware, so deal with the distribution of memory and cp resources between users - this is approximately like a virtual machine, only without a machine, we turn to kernel services, perhaps this is already implemented in KVM, that is, we are not virtualizing the kernel and

machine, but only high-level processes

In general, the kernel loads and intercepts links from different libraries, emulates the environment in some strange cases and emulates distribution structures when necessary for each user or process.

Sometimes, I imagine users as a process, and processes as users - such a model can show how to distribute programs between users, why one process cannot have different users? Now each user runs his own copy of the program, although he basically needs only some of its functions, it is possible to divide this program into basic logic, system libraries and user data

then everyone will not need to launch their “own” browser - all users run one process in which the basic logic and system libraries are loaded, and all user ones are loaded into a separate memory space, much smaller than the program itself. Users only work with separate cache and memory, personal settings are contained in the profile and are stored in the browser memory snapshot for a specific user, and when there is no activity for all users, this process is also unloaded entirely from memory in squashfs or img.gz, that is, if there is one If it started successfully and also completed successfully, then this image of the process is saved and used by the system without reloading all configurations, libraries and interpreters - a snapshot (snapshot) of the memory of this process is loaded Ssa, which immediately works in the same way as the rawx kernel, and users connect their settings to this process and work through agents.

Core profiling

Several levels of kernel loading allow you to return to the level below and dump accumulated errors or simply unload programs and equipment from memory, this allows you to create kernel profiles to perform certain capacious tasks, for example, virtual machines use quite a lot of iron resources, but do not require loading all compilers and libraries, and the calculation of 3D models or programming-compilation of the code need to load only some of the libraries and can fully load the system, that is, require power n and memory tasks in a narrow range. In such cases, the core can be redeveloped on-the-fly, and program memory cards and libraries can also be useful for this. In principle, the Linux kernel now allows unloading and loading modules and drivers during operation, the task is to do this automatically depending on the running applications (tasks), so that user intervention is not required, and there are not so many functions At the same time, it is not necessary to search for these functions by the memory stacks during the execution of programs.

Some assigned tasks will require a static arrangement of some inputs / outputs of the kernel, so that other programs can access them directly and this can be spelled out when compiling the programs, however such libraries will not be too frequent and in general you only need to connect user-level agents to them Let's say that these agents will cope with the assignment of requests and responses - they will become a kind of intelligent services, they need to be provided with high speed, therefore, stats are offered Other memory addresses are buffer stacks for certain libraries; this can be compared with partitioning a disk into different areas. After the second recompilation of the kernel, the free memory addresses will be accurately known, if you specify a small interval for data exchange between libraries, then theoretically it can help in solving some problems.

The X-core model assumes the use of several cores and the distribution of functions of the common Linux-core between them, in such a model there should be a fairly rigid structure of parallelization of functions and distribution of processor-a-core resources (s), which means that deep work with memory, which may already create certain static points for internal work, I think that it will be necessary to connect the libraries directly to these points - the compilation will take place on-the-fly. And in fact, the compilation will not need to occur and traditional application loading will not occur, since the application will occupy its previous place in memory along with all the necessary modules from the snapshot of memory stored in squashfs and connect to the base entry points of the rawx-core.

Possible problems will arise when there is a lack of memory and the forced transfer of programs from one area to another, then these programs will need to be restarted to recompile their squash image in accordance with the new constitution of memory. Probably, prioritization should be introduced for processes so that unnecessary conflicts do not occur.

in general, everything has already been done in parallel computing programs, in distributed cloud solutions, oscar , openstack , moSix render farms, or even squid, nginx + fpm, that is, you don’t need to do just such a bike, but just generalize the knowledge and apply it in a new light .

in principle, some problems between network machines can be solved using squashfs modules, as is done in magos , that is, transferring a file system with the necessary working files to a client host, for a particular program, these files can be saved there, but this is a special case of parallelization, in general, a unified stack of libraries is needed for several types of programs that the user will load into his local virtual core and it turns out that this can also be solved using squashfs for several cores on one or not how many processors of one computer.

P / S

I am far from a system programmer or architect, I studied asm only in terms of mov (ax, bx), system administration is my weakness, I try hard not to get involved in it and do not abuse it, so if there is true knowledge in the paths, correct it, and if the question is not even interesting and does not cause conscious motivation, but only emotional reactions - you can simply skipanut post or try to comprehend

encore une foi, ')

My site will not sustain the popularity, so there are no links here, and I wrote in GooglePly in English.

In general, I am not sure that the topic is of concern to anyone, the question of the development of open source software is deeply cashless.

Some of this is difficult to understand, because it seems to me that these are not simple things and to explain to everyone and everyone — it is impossible in a short period of time until the concentration of attention of the consciousness subsides, on average for new information it is 2-4 minutes ± 2 time of popular music. You can slightly explore your musical preferences, find your favorite track, see its duration, it is better to highlight the moment that you especially like - this is the likely peak of perception.

I'm used to a minute and a half. ” )

It’s interesting to invent, but gaps arise ... between what I came up with and what other people know, because in order to create and create something you need to analyze a lot of what has already been done, understand mistakes, forgive differences, determine probable directions - this is called intuition, to confirm the correctness, I cannot describe all my initial data, I think that this is not necessary, I proposed a model, and partly in the comments there is already a proof of the concept in the form of links to other projects that are already implemented a similar i n edlagayu move on the basis of current data, I thought I had a bicycle or a wheel, but it turns out it's a flying saucer. " )

To "our open source software" was - it must be done.

btw 10x 4 add. + read ++ understand

Source: https://habr.com/ru/post/189598/

All Articles