ETegro Fastor FS200 G3 - fault-tolerant data storage server

Today we want to tell you about another one of our products: the ETegro Fastor FS200 G3 storage system. Its main feature, and in our view an extremely attractive one, is that it is a fault-tolerant storage server built on a two-node cluster running Windows Storage Server 2012.


Before talking about the device itself, let us explain why we created this solution and what it is for. The goal was fault-tolerant data storage: a solution that provides not only reliable storage but also high availability. To achieve this, we built the product around a cluster of two nodes that share a common disk space, all housed in a single chassis. In other words, out of the box the storage system has two controllers operating in Active-Active mode, and failing over from one controller to the other takes very little time. It is hard to name another solution that stays within a reasonable budget while implementing a fault-tolerant file server just as well in an environment where even 5 minutes of downtime means significant financial losses. And if the 12 built-in disks do not provide enough space, you can always expand it by adding Fastor JS300 G3 / JS200 G3 disk shelves, bringing the total number of disks up to 132. Another interesting feature of this storage system is that it can act as a NAS (SMB 3.0 and NFS 4.1) and a SAN at the same time.
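The Active-Active failover idea can be illustrated with a toy model. This is a minimal Python sketch under our own assumptions, not how Windows Failover Clustering is actually implemented: the `Node` and `TwoNodeCluster` classes and the role names `FileServer` and `iSCSI` are hypothetical. In normal operation both nodes serve their own roles; when one node fails, its roles move to the survivor.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One cluster node (controller) and the roles it currently serves."""
    name: str
    alive: bool = True
    roles: set[str] = field(default_factory=set)


class TwoNodeCluster:
    """Toy Active-Active pair: both nodes do useful work, and a failed
    node's roles are handed over to the surviving node."""

    def __init__(self, a: Node, b: Node) -> None:
        self.nodes = {a.name: a, b.name: b}

    def fail(self, name: str) -> None:
        failed = self.nodes[name]
        failed.alive = False
        survivors = [n for n in self.nodes.values() if n.alive]
        if not survivors:
            raise RuntimeError("no surviving node: service is down")
        # Failover: the survivor picks up every role of the failed node.
        survivors[0].roles |= failed.roles
        failed.roles = set()

    def owner(self, role: str) -> str:
        """Return the name of the live node currently serving `role`."""
        for node in self.nodes.values():
            if node.alive and role in node.roles:
                return node.name
        raise KeyError(role)
```

For example, after `cluster = TwoNodeCluster(Node("node1", roles={"FileServer"}), Node("node2", roles={"iSCSI"}))` and `cluster.fail("node1")`, `cluster.owner("FileServer")` reports `"node2"`. Because the surviving node is already running, failover here means taking on extra roles rather than starting a standby machine.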

Before moving on to the device itself, we will answer one more question that is bound to come up: how is the ETegro Fastor FS200 G3 better than a storage system assembled from a cluster of separate servers and disk shelves? The answer is simple and comes down to two points. First, it is more compact, taking up only 2U of rack space. Second, it is cheaper, simply because it requires less hardware. And as a bonus, there are far fewer cables in the rack))

Well, enough talk (nobody likes reading big walls of text); let's take a look at the newcomer to our lineup.

At first glance there is nothing special here: a standard 2U server. We recently wrote about a server with 4 handles, and now it is time for a server with one handle. Just kidding. The green handle in the center of the lid is not meant for carrying the whole server; it simply lets you comfortably open the central section of the lid to replace the fans, should you ever need to.

The entire front panel is taken up by a bay of 12 hard drives. As befits any decent modern server, they are hot-swappable and have their own status indicators. Yes, these same 12 disks form the shared disk space for the entire cluster, and they are connected directly to both nodes through redundant I/O paths (Multipath I/O).
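Multipath I/O means each disk is reachable over more than one path, so losing a path does not mean losing the disk. The sketch below models one dual-ported disk under a simple failover-only policy. It is an illustration with hypothetical names (`MultipathDisk`, `pathA`, `pathB`), not the Windows MPIO driver, which also offers other path policies such as round robin.

```python
class MultipathDisk:
    """Toy model of one dual-ported SAS disk reachable over several paths.

    Failover-only policy: all I/O uses the first healthy path in the
    configured preference order; the other paths stay on standby.
    """

    def __init__(self, disk_id: str, paths: list[str]) -> None:
        self.disk_id = disk_id
        self.paths = list(paths)               # preference order
        self.healthy = {p: True for p in paths}

    def mark_failed(self, path: str) -> None:
        self.healthy[path] = False

    def mark_restored(self, path: str) -> None:
        self.healthy[path] = True

    def active_path(self) -> str:
        for p in self.paths:
            if self.healthy[p]:
                return p
        raise OSError(f"{self.disk_id}: all paths down")

    def read(self, lba: int) -> str:
        # A real driver would issue a SCSI read here; we only report the route.
        return f"read {self.disk_id} LBA {lba} via {self.active_path()}"
```

With `disk = MultipathDisk("disk0", ["pathA", "pathB"])`, reads go through `pathA`; after `disk.mark_failed("pathA")` they continue through `pathB`, and only when both paths are down does I/O fail.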

Particularly attentive readers may even be able to make out the manufacturer of the hard drives used in this demo unit, which is a fully functional server.

On either side of the disk bay there is a set of LEDs, and these two sets give away the server's fault-tolerant nature: each group shows the current state of one cluster node.

The back panel shows off two separate, absolutely identical modules pressed against the side walls of the chassis; each holds a server that acts as one cluster node. Between them there is room for exactly two power supplies.

Naturally, all of these components are hot-swappable as well: removing and installing them requires no tools, and each is fitted with a convenient handle.

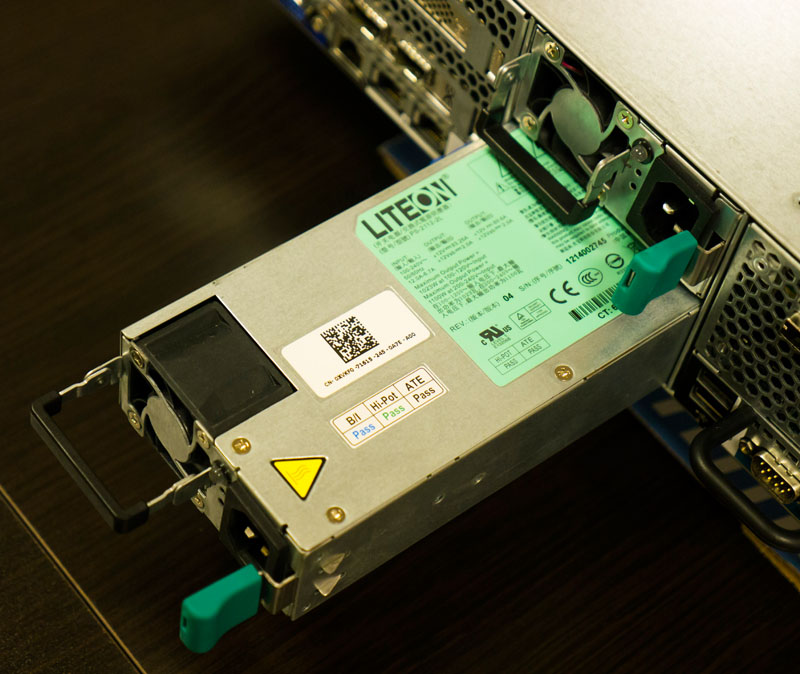

The redundant power supplies are exactly the same as in all our other servers, which greatly simplifies finding the right replacement and keeping spares on hand for those whose IT infrastructure is built mainly on ETegro equipment. The FS200 G3 uses 1100 W units, which is enough for a server fully populated with disks.

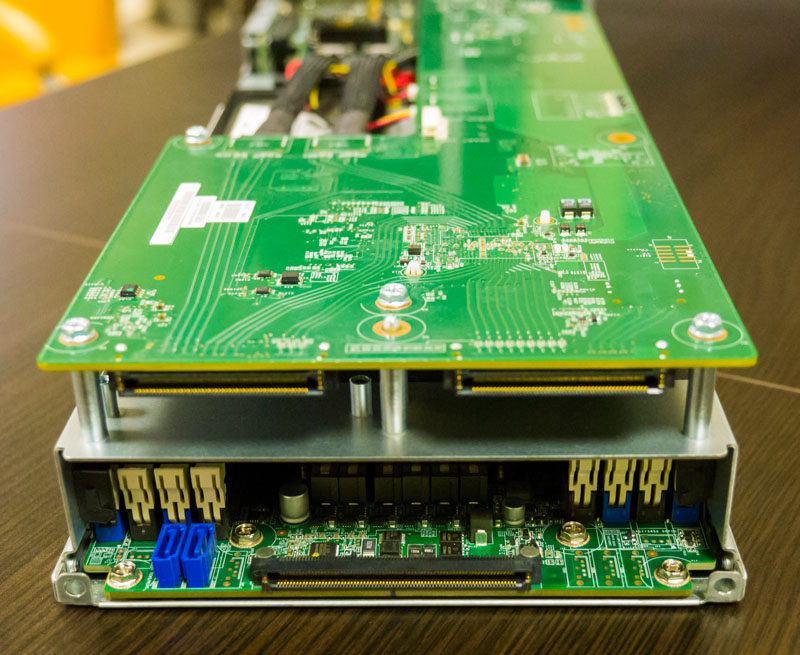

The server modules themselves are boxes very densely packed with hardware. In terms of capabilities they are comparable to modern 1U servers. Judge for yourself: each node carries an Intel C602 chipset, two Intel Xeon E5-2600 processors and up to 512 GB of RAM.

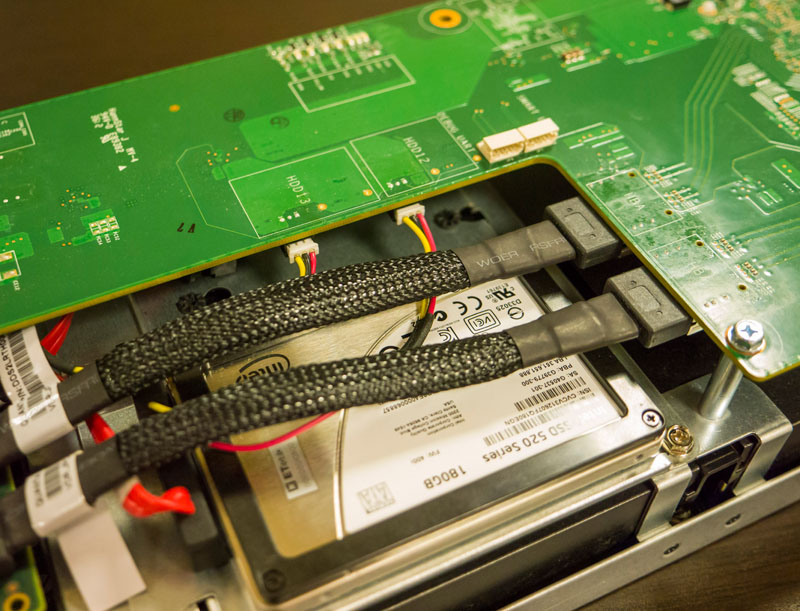

Each node has bays for two 2.5-inch drives, which hold that node's operating system. We use SSDs here ourselves and recommend that everyone else do the same, because with hard disks the system becomes uncomfortably sluggish.

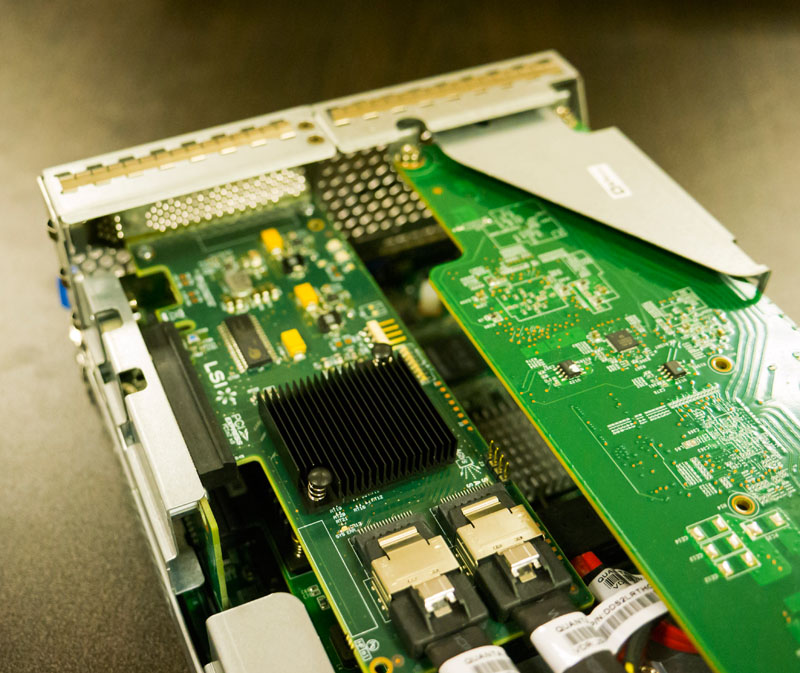

As for expansion cards, it all depends on your particular needs. You can install SAS RAID or HBA controllers, or 10G Ethernet, 40G Ethernet or 56G FDR InfiniBand adapters in the available PCI-E slots; the choice depends on the infrastructure you need to integrate this storage system into.

For many, though, the connectors already present on the rear panel of each node will be enough. Besides the traditional VGA, RS232, 2 USB ports and an RJ45 port for server management, there are two 10G Ethernet ports (Intel X540) with RJ45 connectors and a miniSAS port for connecting expansion disk shelves.

We should mention right away that there is also a third network interface, a gigabit Intel i350AM2, but it is used for the internal interconnect between the cluster nodes.

For dessert we have saved a paragraph touching on the operating system holy wars. Traditionally we are quite tolerant of our customers' choice of operating system: our servers run fine both on Windows and on *NIX systems. But in this particular case our engineers' choice is clear: this storage server runs Windows Server 2012. And it is not just that this server is certified for Microsoft Windows Server 2012 and appears on the corresponding list. The reason is much simpler: at the current stage of OS development, this is the only option that supports clustering directly over SAS devices, which lets us sell a truly ready-to-use “cluster out of the box”, while any other option would require substantial manual rework to make use of the midplane. There is, of course, also LSI Syncro, but it deserves a separate discussion.

In its current form, the server supports data access via the NFS 4.1 and SMB 3.0 protocols. The Fastor FS200 G3 also supports failover block data access through an iSCSI SAN.
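To check from a client that the storage answers on each of these protocols, one can probe their well-known TCP ports: 445 for SMB, 2049 for NFS and 3260 for iSCSI. This Python sketch is only a connectivity check under our own assumptions: it tests TCP reachability, not protocol versions (SMB 3.0, NFS 4.1) or authentication, and the `probe` and `check_storage` helpers are hypothetical names.

```python
import socket

# Well-known TCP ports for the access protocols the FS200 G3 exposes.
SERVICE_PORTS = {"SMB 3.0": 445, "NFS 4.1": 2049, "iSCSI": 3260}


def probe(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution errors.
        return False


def check_storage(host: str) -> dict[str, bool]:
    """Map each protocol name to whether its port is reachable on host."""
    return {name: probe(host, port) for name, port in SERVICE_PORTS.items()}
```

Calling `check_storage()` with the name or address of the cluster's client access point returns a dictionary mapping each protocol to `True` or `False`; a reachable port only shows that something is listening there, not that the service is healthy.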

As always, we will be glad to hear your thoughts, suggestions and reasonable criticism (nobody likes criticism, of course, but sensible criticism is useful to hear).

Source: https://habr.com/ru/post/189520/
