How to measure cloud performance?

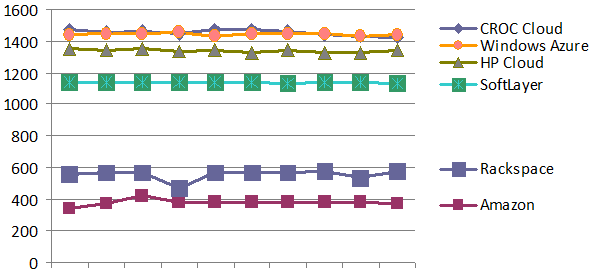

The graph shows cloudspectator.com's performance test results for virtual machines of the five largest IaaS “cloud” providers, alongside the speed of our own KROK “cloud”, which we measured using the same methodology. Results are broken down by day from 06/20/13 to 06/29/13.

Despite our pleasantly high result, the conclusions of this test struck us as somewhat one-sided. The task itself also sparked a lot of debate among our colleagues: assessing the performance of a “cloud” is not a trivial question. So we decided to dig deeper.

Roughly speaking, a “cloud” consists of the equipment that keeps the “cloud” itself running and the equipment on which customers' virtual machines run. The performance of the virtual machines depends on the performance of the physical servers they run on. There is no single standard: every provider on the market offers its own abstract virtual processors and, accordingly, very different virtual machine configurations.


Nobody discloses the technologies they use to build their “cloud”. Some go so far as to develop their own data center architecture for it, but only very large players such as Amazon do this. If you have seen their data centers, you know they are the size of an airfield.

To begin with, we asked our colleagues and clients how they choose a “cloud” and for what purpose. It turned out that there is no single number as such, but the overwhelming majority follow roughly the same scenario. First, the customer wants to make sure that a single virtual machine is fast enough (that is, that they are not being offered, say, a quarter of a core instead of a whole one); processor, memory, and the disk subsystem all matter here. Then come network issues: ping to the “cloud”, the bandwidth of Internet channels, the bandwidth of the link between the cloud and the customer's physical infrastructure, and the bandwidth between our data centers.

For large businesses, the speed of interaction between virtual networks and the speed of data center interconnects become the main concern. Beyond that come SLAs, backup as a service, security, monitoring, data center reliability, and so on. We have all of this well covered, so for us the main criterion of choice is performance. To compare ourselves fairly with the other “clouds”, we decided not to deviate from the testing method and conditions proposed by the Cloud Spectator startup and started measuring the speed of our own virtual machines. Here, too, there are nuances.

Seen through the customer's eyes, the results of such single-VM tests are useful for estimating how large a virtual server you need in order to migrate your current physical servers.
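As a rough illustration of that sizing estimate, here is a hypothetical helper. It rests on a significant simplifying assumption: that UnixBench scores scale linearly with the number of vCPUs and that you have a comparable score for the physical server being migrated. The function name `vcpus_needed` and the 20% headroom default are our illustrative choices, not part of the test methodology.

```python
import math


def vcpus_needed(physical_score: float, score_per_vcpu: float, headroom: float = 0.2) -> int:
    """Hypothetical sizing heuristic, not part of the original methodology.

    Assumes the benchmark score scales linearly with vCPU count, which real
    multi-copy UnixBench runs often do not. `headroom` adds a safety margin
    (0.2 means 20% extra capacity).
    """
    if score_per_vcpu <= 0:
        raise ValueError("score_per_vcpu must be positive")
    # Target capacity = physical score plus headroom, rounded up to whole vCPUs.
    return math.ceil(physical_score * (1 + headroom) / score_per_vcpu)
```

For example, a physical server scoring 3000 on a cloud whose 1 vCPU machines score about 1450 would need 3 vCPUs under these assumptions. Treat the result as a starting point for a trial migration, not a guarantee.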

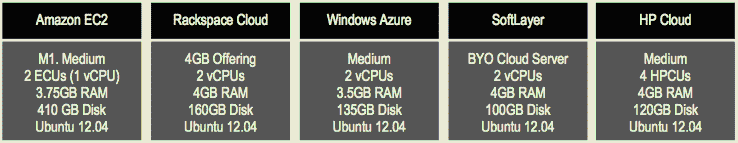

The essence of the original test is simple: in every “cloud”, a virtual machine of the same, most common size (1 vCPU, 4 GB RAM) runs the same benchmarking utility for some time. In this case it is the open-source utility UnixBench, which gives a numerical score for how much performance the virtual machine's core gets from the physical processor. OK, great, we'll do the same.
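A minimal sketch of how such a run could be automated and its score extracted, assuming UnixBench is unpacked locally and started with its `./Run` script, and that its report contains the usual `System Benchmarks Index Score` summary line. The directory argument and the helper names are hypothetical; this is not the harness Cloud Spectator or we actually used.

```python
import re
import subprocess
from pathlib import Path

# Matches the summary line UnixBench prints at the end of a run, e.g.
# "System Benchmarks Index Score                                        1468.9"
SCORE_RE = re.compile(r"System Benchmarks Index Score\s+([\d.]+)")


def parse_index_scores(output: str) -> list[float]:
    """Return every index score found in UnixBench output.

    On a multi-CPU machine UnixBench typically reports one score for a single
    copy and one for N parallel copies; on a 1 vCPU VM there is usually one.
    """
    return [float(value) for value in SCORE_RE.findall(output)]


def run_unixbench(unixbench_dir: str) -> list[float]:
    """Run ./Run inside the (assumed) UnixBench directory and parse its scores."""
    result = subprocess.run(
        ["./Run"],
        cwd=Path(unixbench_dir),
        capture_output=True,
        text=True,
        check=True,  # raise if the benchmark exits with an error
    )
    return parse_index_scores(result.stdout)
```

Keeping the parser separate from the runner makes it easy to re-check saved reports later without rerunning the 10 to 15 minute benchmark.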

Examples of virtual machine configurations from the same report:

An “honest” test

Since we wanted real results for our “cloud”, we tried to make the test conditions as close as possible to production. There is probably no need to explain the difference between tests under real load and synthetic ones.

First, there is the question of tuning the infrastructure for the test. By tweaking settings you can, of course, shift the results by several tens of percent. The cloud's physical infrastructure can likewise be tuned for a particular customer's workloads: for example, if you know exactly the CPU load and the read/write ratio of a large customer's applications, you can adjust the settings to get more performance. However, whenever we have to choose between buying additional servers and tuning for a specific task, we choose additional servers. The larger the “cloud”, the more physical equipment has to be maintained, and a uniform infrastructure is much easier to maintain. If you get carried away with tuning, you lose flexibility and risk making mistakes. Therefore, for this test we performed no optimization of either the physical servers or the virtual machines.

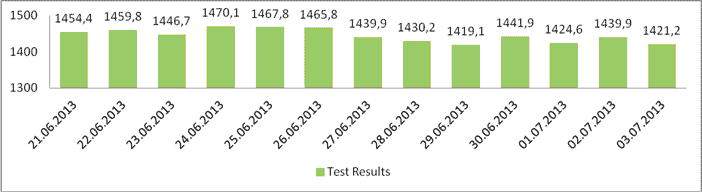

Second, it obviously makes no sense to measure virtual machine performance at, say, three in the morning. In a “cloud”, a physical server's performance is shared among all the customers whose virtual machines run on it. At night those virtual machines are still running, of course, but they are far from fully loaded, so the test would show much higher values than when the “cloud” is under a more or less high load. Our main load peaks at around 17:00. This pattern is quite stable, so we took our measurements at that time. Testing lasted a week and a half, and each test run took about 10 to 15 minutes.
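The scheduling rule above (one run per day at the 17:00 peak) can be sketched as follows. The helper names are hypothetical, and the sketch ignores time zones and daylight-saving changes; it only computes when runs should start.

```python
from datetime import datetime, timedelta

PEAK_HOUR = 17  # our main load peaks at around 17:00


def next_peak_run(now: datetime, hour: int = PEAK_HOUR) -> datetime:
    """Return the next start time at `hour`:00, today if it has not passed yet."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    if candidate < now:
        # Today's peak is already over, so schedule for tomorrow.
        candidate += timedelta(days=1)
    return candidate


def peak_schedule(start: datetime, days: int, hour: int = PEAK_HOUR) -> list[datetime]:
    """Return `days` consecutive daily start times at the peak hour."""
    first = next_peak_run(start, hour)
    return [first + timedelta(days=i) for i in range(days)]
```

Starting from the evening of 06/19/2013, `peak_schedule(datetime(2013, 6, 19, 18, 0), 10)` yields ten runs from 06/20 to 06/29 at 17:00, matching the date range of the results table.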

Network testing

So, we obtained practical data on the speed of virtual machines and compared ourselves with other “clouds”. Our next step is network testing: measuring the speed of the network between virtual machines, the stability of your virtual infrastructure, the speed and stability of the network at the junction between our “cloud” and the customer's physical equipment located at another site (for example, the customer's physical storage systems attached to its virtual infrastructure), and the speed of the communication channels that interconnect data centers.
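One simple way to probe latency and stability between two virtual machines is to time repeated TCP handshakes to a port that is listening on the other side. This is a minimal sketch under our own assumptions: the host and port are placeholders for any reachable service, handshake time only approximates network round-trip time, and "jitter" here is simply the mean absolute difference between consecutive samples, not the smoothed estimator defined in RTP.

```python
import socket
import statistics
import time


def tcp_connect_rtts(host: str, port: int, count: int = 10, timeout: float = 2.0) -> list[float]:
    """Time `count` TCP handshakes to host:port, in milliseconds."""
    samples = []
    for _ in range(count):
        start = time.perf_counter()
        # create_connection returns once the three-way handshake completes.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Latency summary plus a simple stability measure (jitter)."""
    if len(samples_ms) > 1:
        jitter = statistics.mean(abs(b - a) for a, b in zip(samples_ms, samples_ms[1:]))
    else:
        jitter = 0.0
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
        "jitter": jitter,
    }
```

Running the probe repeatedly at the same peak hour as the CPU tests would keep the network numbers comparable with the UnixBench results.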

Why does this matter? If, for example, you run two active-active systems in two different data centers, or a primary system plus a high-availability copy that takes over if the primary fails, the channel between these sites is critical. We specialize in such solutions for large businesses. Soon my colleagues will describe in a separate post how, for one client, we fought to reduce inter-data-center transaction latency from 7 ms to 5 ms, a reduction that was crucial for them.
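To see why two milliseconds can be crucial, consider a deliberately simplified model (our assumption, not a description of that client's system): each transaction in a single stream must wait for one synchronous inter-data-center round trip before the next one can commit, and all other processing time is ignored. A single stream can then commit at most 1000 / latency_ms transactions per second.

```python
def max_serial_commits_per_second(latency_ms: float, round_trips_per_commit: int = 1) -> float:
    """Upper bound on commits/s for one serial stream in a simplified model.

    Assumes every commit blocks on `round_trips_per_commit` waits of
    `latency_ms` each and that nothing else takes time.
    """
    return 1000.0 / (latency_ms * round_trips_per_commit)


for latency in (7.0, 5.0):
    print(f"{latency:.0f} ms -> {max_serial_commits_per_second(latency):.1f} commits/s")
```

Under this model, cutting the wait from 7 ms to 5 ms raises the ceiling from about 143 to 200 commits per second per stream, roughly 40% more. Real systems add processing time and parallelism on top of this bound.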

Summary

The tests were run in our “cloud” located in the “Compressor” data center, using UnixBench on a 1 vCPU, 4 GB RAM configuration. We were pleasantly surprised by the results: virtual machine performance in the KROK cloud turned out to be no worse than that of Windows Azure, Microsoft's infrastructure cloud platform, which led the list of results we obtained.

Final test table (daily UnixBench scores for a 1 vCPU, 4 GB RAM virtual machine; higher is better):

| Date | CROC Cloud | Amazon | HP Cloud | Rackspace | Softlayer | Windows Azure |
|---|---|---|---|---|---|---|
| 06/20/2013 | 1468.9 | 337.9 | 1350.4 | 561.6 | 1137.2 | 1437.6 |
| 06/21/2013 | 1454.4 | 375.0 | 1338.6 | 569.5 | 1139.4 | 1446.1 |
| 06/22/2013 | 1459.8 | 421.2 | 1352.7 | 568.7 | 1140.7 | 1444.5 |
| 06/23/2013 | 1446.7 | 378.6 | 1333.5 | 469.1 | 1137.1 | 1451.0 |
| 06/24/2013 | 1470.1 | 378.5 | 1344.9 | 568.0 | 1137.5 | 1433.2 |
| 06/25/2013 | 1467.8 | 381.5 | 1326.4 | 565.2 | 1142.3 | 1447.1 |
| 06/26/2013 | 1465.8 | 380.2 | 1338.2 | 563.6 | 1134.1 | 1449.3 |
| 06/27/2013 | 1439.9 | 375.8 | 1328.8 | 574.1 | 1140.0 | 1442.6 |
| 06/28/2013 | 1430.2 | 376.5 | 1327.7 | 537.3 | 1139.0 | 1434.0 |
| 06/29/2013 | 1419.1 | 373.2 | 1341.6 | 575.2 | 1135.0 | 1442.2 |
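The daily values are easier to compare once condensed. This is a minimal sketch that ranks providers by mean score and shows day-to-day variation as a coefficient of variation (population standard deviation divided by the mean). Both statistics are our choice for illustration; they are not part of the Cloud Spectator methodology.

```python
import statistics

# Daily UnixBench scores copied from the results table (06/20/2013 to 06/29/2013).
SCORES = {
    "CROC Cloud": [1468.9, 1454.4, 1459.8, 1446.7, 1470.1, 1467.8, 1465.8, 1439.9, 1430.2, 1419.1],
    "Amazon": [337.9, 375.0, 421.2, 378.6, 378.5, 381.5, 380.2, 375.8, 376.5, 373.2],
    "HP Cloud": [1350.4, 1338.6, 1352.7, 1333.5, 1344.9, 1326.4, 1338.2, 1328.8, 1327.7, 1341.6],
    "Rackspace": [561.6, 569.5, 568.7, 469.1, 568.0, 565.2, 563.6, 574.1, 537.3, 575.2],
    "Softlayer": [1137.2, 1139.4, 1140.7, 1137.1, 1137.5, 1142.3, 1134.1, 1140.0, 1139.0, 1135.0],
    "Windows Azure": [1437.6, 1446.1, 1444.5, 1451.0, 1433.2, 1447.1, 1449.3, 1442.6, 1434.0, 1442.2],
}


def rank_providers(scores: dict[str, list[float]]) -> list[tuple[str, float, float]]:
    """Return (provider, mean score, variation in %) sorted by mean, best first."""
    rows = []
    for provider, values in scores.items():
        mean = statistics.mean(values)
        variation = statistics.pstdev(values) / mean * 100.0
        rows.append((provider, mean, variation))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for provider, mean, variation in rank_providers(SCORES):
    print(f"{provider:<14} mean={mean:8.1f}  variation={variation:4.1f}%")
```

A low variation matters as much as a high mean: it indicates that the neighbours on the same physical server did not noticeably affect the virtual machine during the test period.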

Source: https://habr.com/ru/post/189382/
