How GIL works in Ruby. Part 1

Five out of four developers admit that multi-threaded programming is not easy to understand.

Most of the time I spent in the Ruby community, the infamous GIL remained for me a dark horse. In this article I will talk about how I finally got to know GIL better.

Most of the time I spent in the Ruby community, the infamous GIL remained for me a dark horse. In this article I will talk about how I finally got to know GIL better.

The first thing I heard about GIL was nothing to do with how it works or what it needs. All I heard is that GIL is bad because it limits concurrency, or that it’s good because it makes code thread-safe. The time has come, I have become accustomed to multi-threaded programming and realized that everything is actually more complicated.

')

I wanted to know how GIL works from a technical point of view. There is no specification or documentation on GIL. In fact, this is a feature of MRI (Matz's Ruby Implementation). The MRI development team says nothing about how GIL works and what it guarantees.

However, I am running ahead.

From the 2008 article “ Parallelism is a Myth in Ruby ” by Ilya Grigorik, I got a general understanding of GIL. Here are just a general understanding does not help deal with technical issues. In particular, I want to know whether GIL guarantees the thread safety of certain operations in Ruby. I will give an example.

In Ruby, little is thread-safe at all. Take, for example, adding an element to an array

In this example, each of the five threads adds a

= (

Even in such a simple example, we are faced with non-thread-safe operations. We will understand what is happening.

Pay attention to the fact that launching code using MRI gives the correct ( perhaps in this context, you will like the word “expected” - lane ), but JRuby and Rubinius will not. If you run the code again, the situation will repeat, and JRuby and Rubinius will give other (still incorrect) results.

The difference in the results is due to the existence of GIL. Since there is a GIL in the MRI, despite the fact that five threads work in parallel, only one of them is active at any time. In other words, there is no real parallelism here. JRuby and Rubinius do not have GIL, so when five threads run in parallel, they are indeed parallelized between the available cores and, by executing non-thread-safe code, can violate the integrity of the data.

How can this be? Thought Ruby wouldn't let that happen? Let's see how it is technically possible.

Whether MRI, JRuby or Rubinius, Ruby is implemented in another language: MRI is written in C, JRuby in Java, and Rubinius in Ruby and C ++. Therefore, when performing a single operation in Ruby, for example,

Note that there are at least four different operations here:

Each of them refers to other functions. I pay attention to these details in order to show how parallel streams can compromise data integrity. We are accustomed to linear step-by-step code execution — in a single-threaded environment, you can look at the short function in C and easily track the order in which the code is executed.

But if we are dealing with several threads, this is not possible. If we have two threads, they can perform different parts of the function code and have to keep track of two chains of code execution.

In addition, since threads use shared memory, they can simultaneously modify data. One of the threads may interrupt the other, change the general data, after which the other thread will continue to run, being unaware that the data has changed. This is the reason why some implementations of Ruby give unexpected results by simply adding

Initially, the system is in the following state:

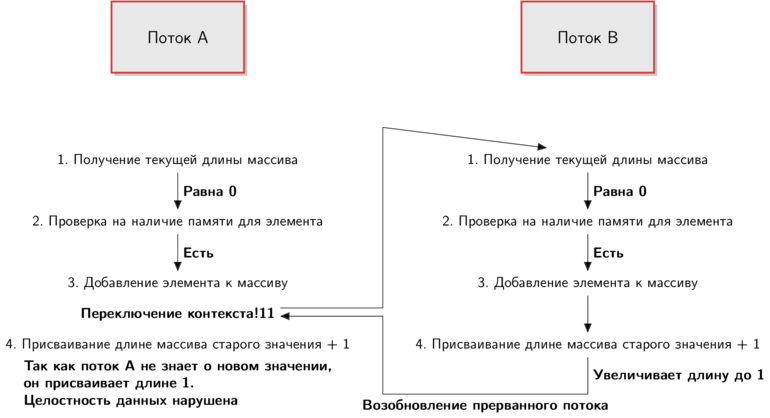

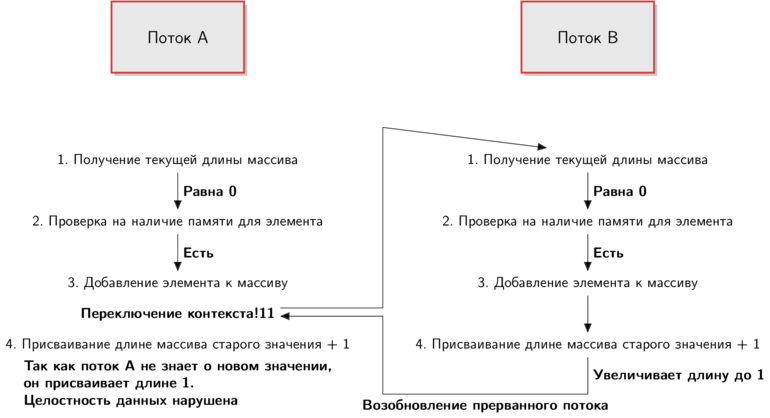

We have two streams, each of which is about to begin its function. Let steps 1-4 be the pseudo-code implementation of

To understand what is happening, just follow the arrows. I added inscriptions reflecting the state of things in terms of each flow.

This is just one of the possible scenarios:

Thread A starts to execute the function code, but when the queue reaches step 3, the context switches. Flow A pauses and a thread B turns, which executes the entire function code, adding an element and increasing the length of the array.

After that, flow A resumes exactly from the point at which it was stopped, and this happened right before increasing the length of the array. Stream A sets the length of the array to

Once again: the flow B assigns the value

The scenario described above can lead to incorrect results, as we have seen in the case of JRuby and Rubinius. But with JRuby and Rubinius it is still more difficult, since in these implementation the threads can actually work in parallel. In the figure, one stream is suspended when the other is running, while in the case of true concurrency, both streams can work simultaneously.

If you run the example above several times using JRuby or Rubinius, you will see that the result is always different. Context switching is unpredictable. It can happen sooner or later or not at all. I will touch on this topic in the next section.

Why doesn't Ruby protect us from this madness? For the same reason that the underlying data structures in other languages are not thread-safe: this is too expensive. Implementations of Ruby might have thread-safe data structures, but this would require an overhead, which would make the code even slower. Therefore, the burden of ensuring thread safety is shifted to the programmer.

I still haven't touched on the technical details of the GIL implementation, and the main question still remains unanswered: why does the launch of the code on the MRI still give the correct result?

This question was the reason why I wrote this article. A general understanding of GIL does not provide an answer to it: it is clear that only one thread can execute Ruby code at some point in time. But after all, context switching can still occur in the middle of a function?

But first...

Context switching is part of the task of the OS scheduler. In all mentioned implementations, one native stream corresponds to one Ruby stream. The OS must ensure that no thread will capture all available resources (CPU time, for example), so it implements scheduling so that each thread gets access to resources.

For a flow, this means that it will pause and resume. Each thread gets CPU time, then pauses, and the next thread gets access to resources. When the time comes, the flow resumes, and so on.

This is effective from the point of view of the OS, but introduces some randomness and motivates to revise the view on the correctness of the program. For example, when executing

If you want to be sure that the stream will not be interrupted in the wrong place, use atomic operations that ensure there are no interruptions to completion. Due to this, in our example, the stream will not be interrupted in step 3 and ultimately does not violate the integrity of the data in step 4.

The easiest way to use an atomic operation is to resort to blocking. The following code will give the same predictable result with MRI, JRuby and Rubinius thanks to the mutex.

If a thread starts executing the

We saw how locking can be used to create an atomic operation and provide thread safety. GIL is also a lock, but does it make code thread-safe? Does GIL

Soon the tale is affected, but it is not done soon The article is too big to read at one time, so I divided it into two parts. In the second part, we will look at the implementation of GIL at MRI to answer the questions posed.

The translator will be happy to hear comments and constructive criticism.

For most of my time in the Ruby community, the infamous GIL remained a mystery to me. In this article I will describe how I finally got to know the GIL better.

The first things I heard about the GIL had nothing to do with how it works or what it is for. All I heard was that the GIL is bad because it limits parallelism, or that it is good because it makes code thread-safe. In time I got used to multi-threaded programming and realized that things are actually more complicated.


I wanted to know how the GIL works from a technical point of view. There is no specification or documentation for the GIL. It is essentially an implementation detail of MRI (Matz's Ruby Implementation), and the MRI development team says nothing about how the GIL works or what it guarantees.

But I am getting ahead of myself.

If you know nothing about the GIL at all, here is a short description:

MRI has something called the GIL (global interpreter lock). Because of it, in a multi-threaded environment only one thread can execute Ruby code at any given moment.

For example, if you have eight threads running on an eight-core processor, only one of them can be running at any given moment. The GIL is designed to prevent race conditions that could compromise data integrity. There are some subtleties, but that is the gist.

From Ilya Grigorik's 2008 article "Parallelism is a Myth in Ruby" I got a general understanding of the GIL. But a general understanding does not help with technical questions. In particular, I wanted to know whether the GIL guarantees the thread safety of particular operations in Ruby. Let me give an example.

Adding an element to an array is not thread-safe

Very little in Ruby is thread-safe. Take, for example, adding an element to an array:

```ruby
array = []

5.times.map do
  Thread.new do
    1000.times do
      array << nil
    end
  end
end.each(&:join)

puts array.size
```

In this example, each of the five threads appends nil to the same array a thousand times. As a result, there should be five thousand elements in the array, right?

```
$ ruby pushing_nil.rb
5000

$ jruby pushing_nil.rb
4446

$ rbx pushing_nil.rb
3088
```

:(

Even in such a simple example we run into operations that are not thread-safe. Let's figure out what is going on.

Note that running the code on MRI gives the correct result (in this context you may prefer the word "expected"; translator's note), while JRuby and Rubinius do not. If you run the code again, the same thing happens: MRI is correct again, and JRuby and Rubinius give different (still incorrect) results.

The difference in the results is due to the GIL. Because MRI has a GIL, although five threads run concurrently, only one of them is active at any moment. In other words, there is no real parallelism here. JRuby and Rubinius have no GIL, so the five threads really are spread across the available cores and, when executing code that is not thread-safe, can violate the integrity of the data.

Why parallel threads can compromise data integrity

How can this be? Didn't you think Ruby would never let that happen? Let's see how it is technically possible.

Whether it is MRI, JRuby or Rubinius, Ruby is implemented in another language: MRI is written in C, JRuby in Java, and Rubinius in Ruby and C++. Therefore, a single Ruby operation such as array << nil may turn out to be implemented in tens or even hundreds of lines of code. Here is the implementation of Array#<< in MRI:

```c
VALUE
rb_ary_push(VALUE ary, VALUE item)
{
    long idx = RARRAY_LEN(ary);        /* read the current length */

    ary_ensure_room_for_push(ary, 1);  /* make sure there is room */
    RARRAY_ASET(ary, idx, item);       /* store the item */
    ARY_SET_LEN(ary, idx + 1);         /* write the new length */
    return ary;
}
```

Note that there are at least four different operations here:

- Getting the current length of the array

- Checking that there is room in memory for one more element

- Adding the element to the array

- Setting the array length to the old value + 1
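The four steps above can be mirrored in a small pure-Ruby model. This is only a sketch for illustration: ToyArray, its instance variables and the grow helper are hypothetical names, and MRI's real array storage is more involved than this.

```ruby
# A toy model of the four steps in Array#<<. NOT how MRI stores arrays;
# ToyArray and its fields are hypothetical names used for illustration.
class ToyArray
  attr_reader :length

  def initialize(capacity = 4)
    @slots  = Array.new(capacity) # backing storage
    @length = 0                   # number of slots actually in use
  end

  def push(item)
    idx = @length                 # step 1: read the current length
    grow if idx >= @slots.size    # step 2: make sure there is room
    @slots[idx] = item            # step 3: store the item
    @length = idx + 1             # step 4: write the new length
    self
  end

  def to_a
    @slots.first(@length)
  end

  private

  # Double the backing storage (at least one extra slot).
  def grow
    @slots.concat(Array.new([@slots.size, 1].max))
  end
end

toy = ToyArray.new
5.times { |i| toy.push(i) }
puts toy.length   # 5
p toy.to_a        # [0, 1, 2, 3, 4]
```

Nothing in push ties steps 1 and 4 together: the length read in step 1 is simply assumed to still be valid when step 4 writes it back.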

Each of them calls other functions in turn. I point out these details to show how parallel threads can compromise data integrity. We are used to linear, step-by-step execution: in a single-threaded environment you can look at a short C function and easily follow the order in which the code runs.

But with several threads this is no longer possible. Two threads can be executing different parts of the function at the same time, and we have to keep track of two paths of execution.

In addition, since threads use shared memory, they can simultaneously modify data. One of the threads may interrupt the other, change the general data, after which the other thread will continue to run, being unaware that the data has changed. This is the reason why some implementations of Ruby give unexpected results by simply adding

nil to an array. The situation is similar to that described below.Initially, the system is in the following state:

We have two streams, each of which is about to begin its function. Let steps 1-4 be the pseudo-code implementation of

Array#<< in the MRI above. The following is a possible development of events (at the initial moment of time, flow A is active):

To understand what is happening, just follow the arrows. I added labels showing the state of things from each thread's point of view.

This is just one of the possible scenarios:

Thread A starts executing the function, but when it gets to step 3, a context switch occurs. Thread A is paused and it is thread B's turn: it executes the entire function, adding an element and increasing the length of the array.

After that, thread A resumes exactly where it was stopped, which was right before increasing the length of the array. Thread A sets the length of the array to 1. The trouble is that thread B has already changed the data.

Once again: thread B sets the length of the array to 1, after which thread A also sets it to 1, even though both threads added an element to the array. The integrity of the data is broken.

And I was counting on Ruby

The scenario described above can lead to incorrect results, as we saw with JRuby and Rubinius. With JRuby and Rubinius things are even more complicated, because in these implementations threads can actually run in parallel. In the figure, one thread is paused while the other is running, whereas with true parallelism both threads can run at the same moment.
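The interleaving from the figure can be replayed deterministically, without real threads, by writing out each thread's steps by hand in the order they happen. This is only a sketch: Shared is a hypothetical stand-in for the array's internal state, step 2 (the room check) is left out for brevity, and it assumes thread A was paused after storing its item but before writing the length.

```ruby
# Replay of one possible interleaving, step by step, in a single thread.
# `Shared` is a hypothetical stand-in for the array's internal state.
Shared = Struct.new(:slots, :length)
shared = Shared.new([], 0)

# Thread A runs steps 1 and 3, then is paused before step 4.
a_idx = shared.length           # step 1: A reads length 0
shared.slots[a_idx] = :from_a   # step 3: A stores its item in slot 0

# Context switch: thread B runs the whole function.
b_idx = shared.length           # step 1: B also reads length 0
shared.slots[b_idx] = :from_b   # step 3: B overwrites slot 0
shared.length = b_idx + 1       # step 4: B sets the length to 1

# Context switch back: thread A resumes at step 4.
shared.length = a_idx + 1       # step 4: A sets the length to 1 again

puts shared.length   # 1, although two elements were appended
p shared.slots       # [:from_b]
```

Both threads believe they appended an element, yet the array ends up one element long and thread A's item is gone.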

If you run the example above several times on JRuby or Rubinius, you will see that the result differs from run to run. Context switching is unpredictable. It can happen earlier, later, or not at all. I will touch on this topic in the next section.

Why doesn't Ruby protect us from this madness? For the same reason that the core data structures in other languages are not thread-safe: it is too expensive. Ruby implementations could provide thread-safe data structures, but that would add overhead and make the code even slower. So the burden of ensuring thread safety is shifted to the programmer.
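To make the cost concrete, here is a rough sketch of what a thread-safe array could look like if every operation were guarded by a lock. SynchronizedArray is a hypothetical class written for this illustration, not something Ruby ships; it relies only on the built-in Mutex class, which the article introduces further on.

```ruby
# Hypothetical wrapper: every single operation acquires a lock,
# even when only one thread ever touches the array.
class SynchronizedArray
  def initialize
    @items = []
    @lock  = Mutex.new
  end

  # Append under the lock so the internal steps cannot interleave.
  def <<(item)
    @lock.synchronize { @items << item }
    self
  end

  def size
    @lock.synchronize { @items.size }
  end
end

array = SynchronizedArray.new

5.times.map do
  Thread.new do
    1000.times { array << nil }
  end
end.each(&:join)

puts array.size   # 5000
```

The result no longer depends on the implementation, but each of the five thousand appends now pays for taking and releasing the lock, which is exactly the overhead a general-purpose Array avoids.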

I still haven't touched on the technical details of the GIL implementation, and the main question remains unanswered: why does running the code on MRI still give the correct result?

This question is the reason I wrote this article. A general understanding of the GIL does not answer it: it is clear that only one thread can execute Ruby code at any given moment. But couldn't a context switch still occur in the middle of a function?

But first...

Blame the scheduler

Context switching is part of the job of the OS scheduler. In all of the implementations mentioned, one Ruby thread corresponds to one native thread. The OS must make sure that no thread hogs all the available resources (CPU time, for example), so it schedules threads in such a way that each of them gets access to resources.

For a thread, this means being paused and resumed. Each thread gets some CPU time, then it is paused and the next thread gets access to resources. When its time comes, the thread resumes, and so on.

This is efficient from the point of view of the OS, but it introduces some randomness and forces us to rethink what it means for a program to be correct. For example, when executing Array#<<, keep in mind that a thread can be paused at any moment and another thread can run the same code in parallel, changing shared data.

The solution? Use atomic operations

If you want to be sure that a thread will not be interrupted in the wrong place, use atomic operations, which are guaranteed to run to completion without interruption. In our example, this means the thread will not be interrupted at step 3 and so will not violate the integrity of the data at step 4.

The easiest way to make an operation atomic is to use a lock. Thanks to the mutex, the following code gives the same predictable result on MRI, JRuby and Rubinius.

```ruby
array = []
mutex = Mutex.new

5.times.map do
  Thread.new do
    mutex.synchronize do
      1000.times do
        array << nil
      end
    end
  end
end.each(&:join)

puts array.size
```

If a thread starts executing the mutex.synchronize block, other threads are forced to wait for it to finish before they can start executing the same code. With atomic operations you get a guarantee that even if a context switch happens inside the block, other threads will still not be able to enter it and change the shared data. The scheduler will notice this and switch threads again. Now the code is thread-safe.

The GIL is a lock too

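We saw how a lock can be used to make an operation atomic and provide thread safety. The GIL is also a lock, but does it make code thread-safe? Does the GIL turn array << nil into an atomic operation?

The article turned out too long to read in one sitting, so I split it into two parts. In the second part, we will look at the implementation of the GIL in MRI to answer these questions.

The translator will be happy to hear comments and constructive criticism.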

Source: https://habr.com/ru/post/189320/
