Testing Web Application Security Scanners: Approaches and Criteria

Information security scanners (vulnerability scanners) are monitoring and control tools used to check computer networks, individual computers, and the applications installed on them for security problems. Most scanners can detect the vulnerabilities described in the WASC Threat Classification. In this article, we consider some issues in testing web application security scanners as software products.

A modern web application scanner is a multifunctional and very complex product, so testing it and comparing it with similar solutions has a number of specific features.

Test procedure

The article "Building a Test Suite for Web Application Scanners" outlines general principles for testing scanners, which we build on here. One of these principles is the test procedure for comparing the work of different web application scanners. In a slightly modified form, the procedure is as follows:


1. Prepare the test content needed for functional verification of all technical requirements and deploy the test benches.

2. Initialize the tests and obtain all the settings they need.

3. Configure the web application to be scanned, selecting the type of vulnerability and the level of protection for it.

4. Run the scanner with the selected settings against the web application under test and run the set of functional tests.

5. Count and classify the web objects found by the scanner (unique links, vulnerabilities, attack vectors, etc.).

6. Repeat steps 2-5 for each type of vulnerability and each protection level.

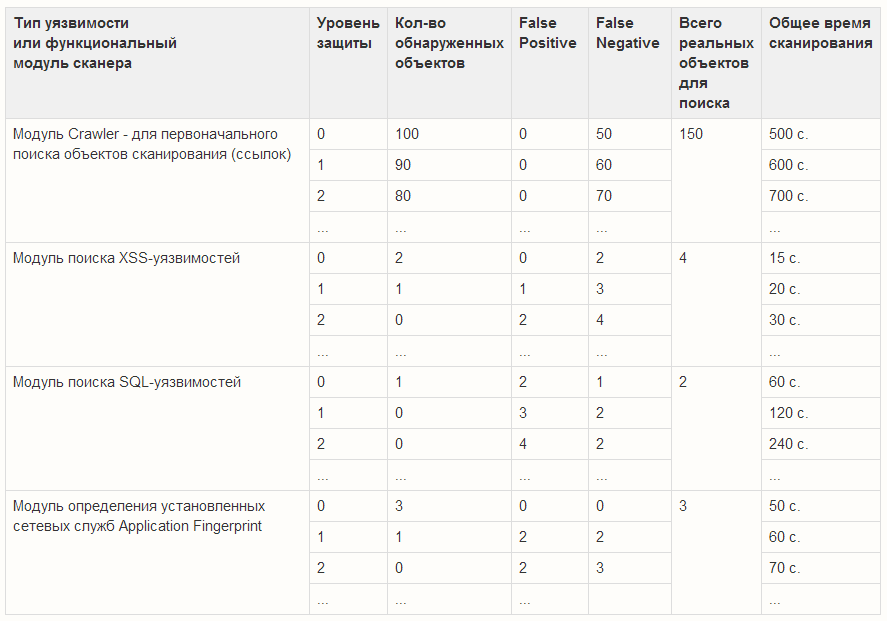

Changes after each iteration must be entered into the summary table of the results of object detection. It should look something like this:

Obviously, not all web application scanners have the same set of scanning modules. Such a table can still be used: a scanner's rating is simply reduced for each module or function it lacks.
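The per-iteration bookkeeping can be sketched as follows. This is only an illustration under stated assumptions, not the tooling used by the authors: the names `classify` and `build_summary`, the dictionary keys, and the idea of identifying each web object by a plain string are all hypothetical. It also assumes that a reference set of expected objects is available for every run.

```python
def classify(found, expected):
    """Compare the objects reported by a scanner with the reference set."""
    found, expected = set(found), set(expected)
    return {
        "detected": len(found & expected),        # real objects that were found
        "false_positive": len(found - expected),  # reported, but not in the reference
        "false_negative": len(expected - found),  # in the reference, but missed
        "total": len(expected),
    }


def build_summary(runs):
    """Build a summary table keyed by (vulnerability type, protection level).

    `runs` is an iterable of tuples
    (vulnerability_type, protection_level, found_objects, expected_objects),
    one tuple per iteration of the test procedure.
    """
    table = {}
    for vuln_type, level, found, expected in runs:
        table[(vuln_type, level)] = classify(found, expected)
    return table
```

Each row of the resulting table corresponds to one iteration of the procedure, so a missing scanner module simply shows up as a row with zero detected objects.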

It is impossible to prepare a test application with an exactly known number of vulnerabilities of a given type. When compiling such a table, we will therefore inevitably have difficulty determining the real number of objects to be found. This problem can be addressed as follows.

- Count as one vulnerability a whole class of equivalent vulnerabilities that can be found in the test web application. For SQL injections, for example, all vulnerabilities found for the same parameter of a GET request to the application can be treated as one equivalence class. In other words, if there is a vulnerable id parameter whose modification causes a failure of the web server or database, then all attack vectors using this parameter can be considered equivalent up to a permutation of the parameters: example.com/page.php?id=blabla ~ example.com/page.php?a=1&id=bla&b=2.

- Develop simple test applications that implement or simulate the same vulnerabilities using different frameworks, and deploy them on a variety of operating systems, web servers, and databases, accessed over different network protocols and through various proxy chains.

- Deploy a variety of CMSs and deliberately vulnerable applications (DVWA, Gruyere, OWASP Site Generator, etc.) on test benches and scan them with various security scanners. Take the total number of vulnerabilities found by all scanners as the reference value for further tests.
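The equivalence-class idea from the first item above can be illustrated with a short sketch. It assumes that each finding is reported as a URL plus the name of the vulnerable GET parameter; the function names `equivalence_key` and `count_equivalence_classes` are hypothetical and chosen only for this example.

```python
from urllib.parse import parse_qs, urlsplit


def equivalence_key(url, vulnerable_param):
    """Return (host, path, parameter), or None if the parameter is absent.

    Parameter values, parameter order, and unrelated parameters are ignored,
    so URLs that differ only in those respects fall into the same class.
    """
    # urlsplit only recognises the host when a scheme or "//" prefix is present.
    parts = urlsplit(url if "://" in url else "//" + url)
    params = parse_qs(parts.query, keep_blank_values=True)
    if vulnerable_param not in params:
        return None
    return (parts.netloc, parts.path, vulnerable_param)


def count_equivalence_classes(findings):
    """Count distinct classes among (url, vulnerable_param) findings."""
    keys = set()
    for url, param in findings:
        key = equivalence_key(url, param)
        if key is not None:
            keys.add(key)
    return len(keys)


first = equivalence_key("example.com/page.php?id=blabla", "id")
second = equivalence_key("example.com/page.php?a=1&id=bla&b=2", "id")
print(first == second)  # True: both URLs belong to the same class
```

Counting classes rather than raw attack vectors keeps one scanner from looking better merely because it reports more variations of the same injection point.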

You can configure and manage the test application and set the desired level of protection using, for example, the OWASP Site Generator tool, whose configuration is stored and edited in a regular XML file. Unfortunately, this tool is now considered obsolete, so we recommend building your own applications to emulate modern vulnerabilities.

The types of vulnerabilities to implement in the test content for checking the scanner can be taken from the WASC Threat Classification.

The number of test procedure runs needed to cover all possible combinations of installed applications will, unsurprisingly, be very large. It can be reduced by using the pairwise testing technique.
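To show how pairwise testing shrinks the set of configurations, here is a minimal sketch of a greedy pairwise generator. It is one simple way to build such a suite, not the method of any particular tool, and it enumerates every full combination first, so it only suits small parameter sets. The function names and the example parameters (operating systems, web servers, databases, proxy settings) are assumptions made for illustration.

```python
from itertools import combinations, product


def pairs_of(row):
    """All pairs of (parameter index, value) covered by one configuration."""
    return set(combinations(enumerate(row), 2))


def pairwise_suite(parameters):
    """Greedily pick configurations until every pair of values is covered.

    `parameters` maps a parameter name to the list of its possible values.
    """
    names = list(parameters)
    candidates = list(product(*(parameters[name] for name in names)))
    uncovered = set()
    for row in candidates:
        uncovered |= pairs_of(row)
    suite = []
    while uncovered:
        # Take the configuration that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda row: len(pairs_of(row) & uncovered))
        suite.append(dict(zip(names, best)))
        uncovered -= pairs_of(best)
    return suite


# Hypothetical test bench parameters: 2 * 3 * 2 * 2 = 24 full combinations.
bench = {
    "os": ["Linux", "Windows"],
    "web_server": ["Apache", "nginx", "IIS"],
    "database": ["MySQL", "PostgreSQL"],
    "proxy": ["none", "chain"],
}
for config in pairwise_suite(bench):
    print(config)
```

The resulting suite still exercises every pair of values at least once, which is the property pairwise testing relies on, while running far fewer configurations than the full Cartesian product.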

From the scan results we obtain numerical vectors of the form

(protection level, number of detected objects, False Positive, False Negative, total objects, scan time)

Next, you need to introduce a scan quality metric that can be used to compare results and scanners with one another. The simplest Euclidean metric is sufficient for this purpose.
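A minimal sketch of such a comparison is shown below. The `ScanResult` type and its field names are assumptions that mirror the vector above, and the sample numbers are invented purely for illustration. The text does not say whether the components should be scaled, so the sketch uses raw values; in practice, components with different units (counts versus seconds) would probably need normalization before the distance is meaningful.

```python
import math
from typing import NamedTuple


class ScanResult(NamedTuple):
    """One result vector, in the same order as in the text above."""
    protection_level: float
    detected: float
    false_positive: float
    false_negative: float
    total_objects: float
    scan_time: float


def euclidean_distance(a, b):
    """Euclidean distance between two result vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Illustrative numbers only: an "ideal" run finds everything with no errors.
ideal = ScanResult(1, 20, 0, 0, 20, 600)
scanner_a = ScanResult(1, 17, 2, 3, 20, 750)
scanner_b = ScanResult(1, 12, 0, 8, 20, 400)

# The scanner whose vector lies closer to the ideal one scores better.
print(euclidean_distance(scanner_a, ideal))
print(euclidean_distance(scanner_b, ideal))
```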

Types of tests

Another article you can rely on when testing web application scanners is "Analyzing the Accuracy and Time Costs of Web Application Security Scanners". It describes the testing of various scanners (BurpSuitePro, Qualys, WebInspect, NTOSpider, Appscan, Hailstorm, Acunetix) and proposes four types of tests for each tool:

- Scan the web application in Point and Shoot (PaS) mode and determine the number of found and confirmed vulnerabilities.

- Re-scan after prior "training", that is, after configuring the scanner to work with this type of application, and determine the number of found and confirmed vulnerabilities in this case.

- Assess the accuracy and completeness of the descriptions of the vulnerabilities found.

- Estimate the total time experts spend on preparing and running the tests and on analyzing and verifying the quality of the scan results.

PaS mode means launching a scan with the scanner's default settings. It follows the scheme "set the target, scan, get the result".

Training includes any configuration changes, script modifications, communication with scanner vendors, and so on.

To determine the amount of time that specialists need to spend to obtain a quality result, the article proposes to use a simple formula:

Total Time = Training Time + #False Positive * 15 min. + #False Negative * 15 min.
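The formula translates directly into code. In the sketch below, the function name `total_time` and the use of `timedelta` are choices made for this example; the 15-minute cost per false positive and per false negative comes from the formula itself.

```python
from datetime import timedelta

# Time to review one false positive or one false negative, per the formula.
REVIEW_TIME = timedelta(minutes=15)


def total_time(training_time, false_positives, false_negatives):
    """Total expert time: training plus 15 minutes per FP and per FN."""
    return training_time + (false_positives + false_negatives) * REVIEW_TIME


# Example: 2 hours of training, 4 false positives, 2 false negatives.
print(total_time(timedelta(hours=2), 4, 2))  # 3:30:00
```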

During each test, the test procedure we described above should be applied.

Evaluation Criteria for Web Application Scanners

In another useful article, "Top 10: The Web Application Vulnerability Scanners Benchmark", the authors offer a general approach to comparing scanner characteristics, along with a set of such characteristics and examples. The article proposes presenting the evaluation of web application scanner capabilities in tabular form, using the following criteria:

- Comparison of product price against the evaluated criteria (A Price Comparison of the Benchmark Results). An example of comparison can be found in the pivot table.

- Scanner versatility: the number of supported protocols and delivery vectors, that is, methods of delivering data to the server (Scanner Versatility). Delivery vectors include HTTP query string parameters, HTTP body parameters, JSON and XML parameters, and binary methods for specific technologies such as AMF, Java serialized objects, and WCF. An example of comparison can be found in the pivot table.

- Number of supported attack vectors: the number and type of active scanner plugins (Attack Vector Support - Vulnerability Detection). An example of comparison can be found in the pivot table.

- Accuracy of reflected XSS detection (Reflected Cross Site Scripting Detection Accuracy). An example of comparison can be found in the pivot table.

- Accuracy of SQL injection detection (SQL Injection Detection Accuracy). An example of comparison can be found in the pivot table.

- Accuracy of path traversal and local file inclusion detection (Path Traversal / Local File Inclusion Detection Accuracy). An example of comparison can be found in the pivot table.

- Accuracy of remote file inclusion detection, including XSS and phishing via RFI (Remote File Inclusion Detection Accuracy (XSS / Phishing via RFI)). An example of comparison can be found in the pivot table. An example of RFI test cases is shown in the diagram.

- WIVET comparison: automated crawling and extraction of input vectors for attack (WIVET (Web Input Vector Extractor Teaser) Score Comparison - Automated Crawling / Input Vector Extraction). An example of comparison can be found in the pivot table.

- Scanner adaptability: the number of additional scanner options for overcoming protective barriers (Scanner Adaptability - Complementary Coverage Features and Scan Barrier Support). An example of comparison can be found in the pivot table.

- Authentication features comparison: the number and type of supported authentication methods (Authentication Features Comparison). An example of comparison can be found in the pivot table.

- Number of additional scanning features and embedded mechanisms (Complementary Scan Features and Embedded Products). An example of comparison can be found in the pivot table.

- General impression of the main scanning functions (General Scanning Features and Overall Impression). An example of comparison can be found in the pivot table.

- Comparison of licenses and technologies (License Comparison and General Information). An example of comparison can be found in the pivot table.

Some of the listed items are included in the object detection summary table. In addition, most of the criteria require expert judgment, which makes it difficult to automate the testing and comparison of scanners. As a result, the proposed evaluation criteria can be used, for example, as sections of a more general report on a scanner's characteristics that contains all the results of testing a specific scanner and comparing it with competitors.

Types of tests for web application scanners

Based on the materials of the articles presented above, we have developed a classification of test types that can be used in a test procedure.

- Basic functional (smoke) tests check the operability of the scanner's main low-level components: the transport subsystem, the configuration subsystem, the logging subsystem, and so on. When scanning the simplest test targets, the logs should contain no error messages, exceptions, or tracebacks, and the scanner should not hang when using various transports, redirects, proxy servers, and so on.

- Functional tests verify the main scenarios against the technical requirements. Each scanning module must be checked in turn with different settings of the module and of the test environment. Both positive and negative test scenarios are run, along with stress tests in which large arrays of correct and incorrect data are sent to the scanner in responses from the web application.

- Comparison tests (compare) of functionality compare the quality and average speed of object search of the selected scanner module with those of modules with similar functionality in competing products. For each scanning module, it must be defined what counts as an object and how the quality of the search is measured.

- Tests comparing evaluation criteria indicators (criteria) check that the speed and quality of object search by each scanning module have not deteriorated in the new version of the scanner under test compared with the previous version. Speed and quality are defined in the same way as in the functionality comparison tests, except that the scanner's previous version acts as its competitor.
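The last type of test in the list above, comparing a new scanner version with the previous one, can be sketched as a simple regression check. Everything here is an assumption made for illustration: the function name `find_regressions`, the metric names, the convention that higher values are better for both quality and speed, and the 5% tolerance.

```python
def find_regressions(previous, current, tolerance=0.05):
    """List (module, metric) pairs that got worse in the new version.

    `previous` and `current` map a scanning module name to a dict such as
    {"quality": 0.9, "speed": 12.5}, where higher is better for both values.
    A metric counts as regressed if it drops by more than `tolerance`
    (a fraction of the previous value).
    """
    regressions = []
    for module, old_metrics in previous.items():
        new_metrics = current.get(module)
        if new_metrics is None:
            # The module disappeared from the new version altogether.
            regressions.append((module, "missing"))
            continue
        for metric, old_value in old_metrics.items():
            if new_metrics[metric] < old_value * (1 - tolerance):
                regressions.append((module, metric))
    return regressions


# Hypothetical measurements for two versions of the same scanner.
old = {"sqli": {"quality": 0.90, "speed": 10.0}, "xss": {"quality": 0.80, "speed": 8.0}}
new = {"sqli": {"quality": 0.80, "speed": 10.0}, "xss": {"quality": 0.85, "speed": 8.0}}
print(find_regressions(old, new))  # [('sqli', 'quality')]
```

A check like this fits naturally into an automated test run, since it needs no expert judgment once speed and quality have been defined for each module.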

The test procedure described in this article can be used for any web application security scanner, and the scan quality metrics give us a tool for comparing scanners by quality. To develop this idea further, you can use fuzzy indicators, scales, and metrics to simplify the comparison of scanners.

Thank you for your attention, we will be happy to answer questions in the comments.

Author: Timur Gilmullin, Positive Technologies automated testing group.

Source: https://habr.com/ru/post/187636/
