The story of one DDoS attack on a router, and methods for protecting the Juniper routing engine

In my work I often have to deal with DDoS attacks on servers, but some time ago I ran into a different kind of attack, one I was not ready for. It was aimed at a Juniper MX80 router that maintains our BGP sessions and announces the data center's subnets. The attackers' target was a web resource hosted on one of our servers, but as a result of the attack the entire data center lost connectivity with the outside world. Below are the details of the attack, along with tests and methods for dealing with such attacks.

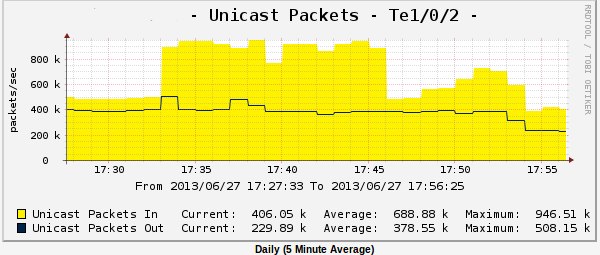

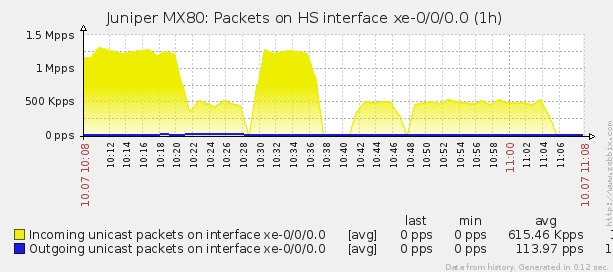

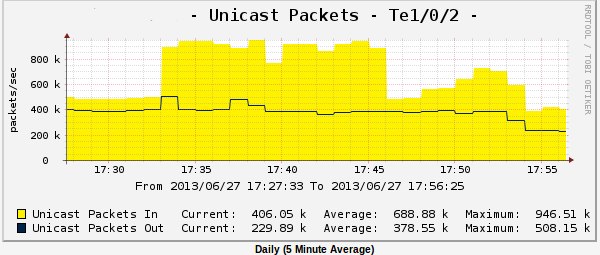


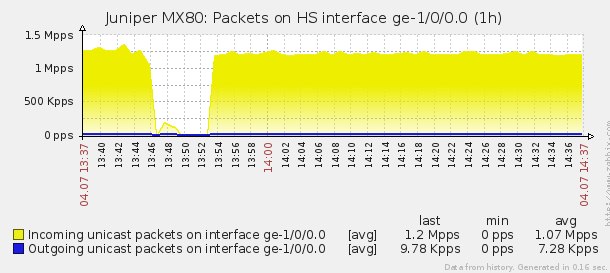

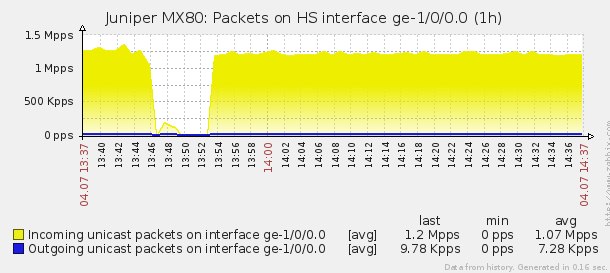

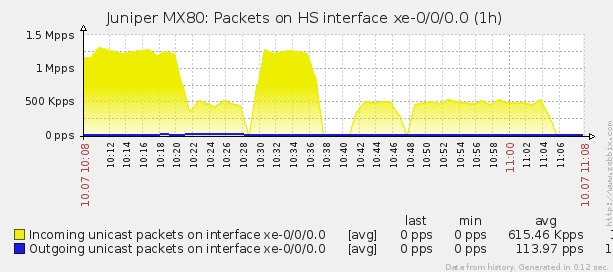


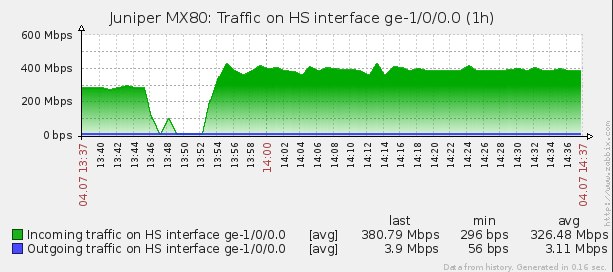

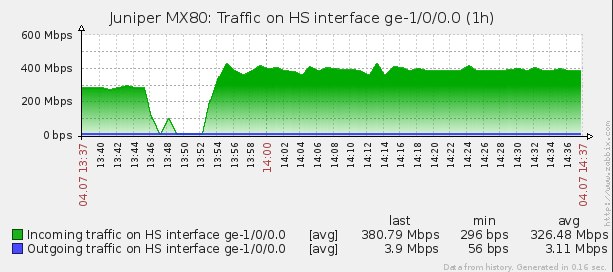


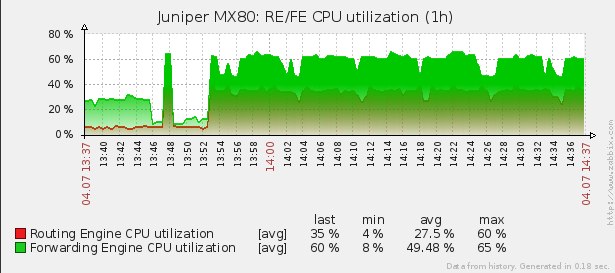

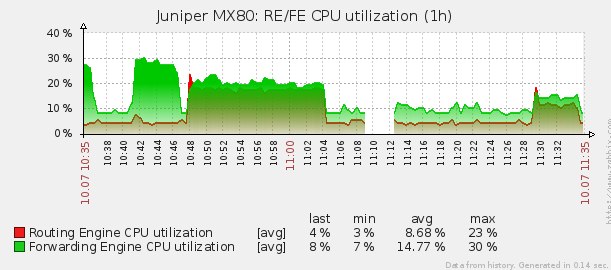

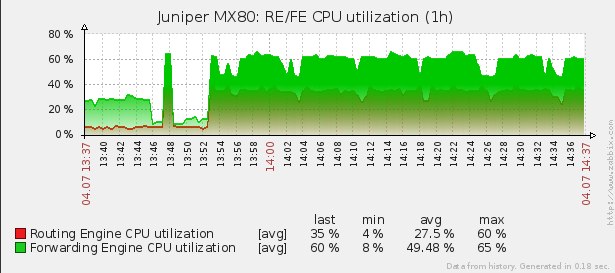


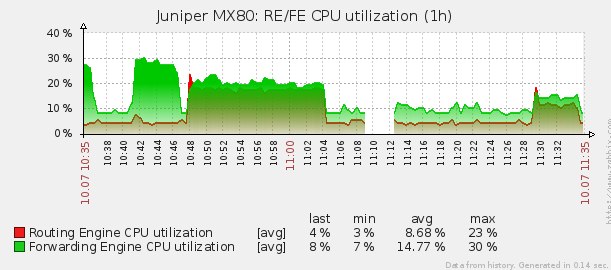



Attack history

Historically, the router has blocked all UDP traffic coming into our network. The first wave of the attack (at 17:22) consisted of exactly such UDP traffic. The graph of unicast packets on the router's uplink:

and the graph of unicast packets on the switch port connected to the router:

show that all of this traffic settled on the router's filter. The flow of unicast packets on the router's uplink grew by 400 thousand, and the UDP-only attack continued until 17:33. Then the attackers changed strategy and supplemented the UDP flood with TCP SYN packets aimed both at the attacked server and at the router itself. As the graph shows, the router was hit so hard that it stopped answering SNMP polls from Zabbix. After the SYN wave, the BGP sessions with our peers began to drop (we use three uplinks and receive a full view over IPv4 and IPv6 from each of them), and tragic entries appeared in the logs:

    Jun 27 17:35:07 ROUTER rpd[1408]: bgp_hold_timeout:4035: NOTIFICATION sent to ip.ip.ip.ip (External AS 1111): code 4 (Hold Timer Expired Error), Reason: holdtime expired for ip.ip.ip.ip (External AS 1111), socket buffer sndcc: 19 rcvcc: 0 TCP state: 4, snd_una: 1200215741 snd_nxt: 1200215760 snd_wnd: 15358 rcv_nxt: 4074908977 rcv_adv: 4074925361, hold timer out 90s, hold timer remain 0s
    Jun 27 17:35:33 ROUTER rpd[1408]: bgp_hold_timeout:4035: NOTIFICATION sent to ip.ip.ip.ip (External AS 1111): code 4 (Hold Timer Expired Error), Reason: holdtime expired for ip.ip.ip.ip (External AS 1111), socket buffer sndcc: 38 rcvcc: 0 TCP state: 4, snd_una: 244521089 snd_nxt: 244521108 snd_wnd: 16251 rcv_nxt: 3829118827 rcv_adv: 3829135211, hold timer out 90s, hold timer remain 0s
    Jun 27 17:37:26 ROUTER rpd[1408]: bgp_hold_timeout:4035: NOTIFICATION sent to ip.ip.ip.ip (External AS 1111): code 4 (Hold Timer Expired Error), Reason: holdtime expired for ip.ip.ip.ip (External AS 1111), socket buffer sndcc: 19 rcvcc: 0 TCP state: 4, snd_una: 1840501056 snd_nxt: 1840501075 snd_wnd: 16384 rcv_nxt: 1457490093 rcv_adv: 1457506477, hold timer out 90s, hold timer remain 0s

As we found out later, the TCP SYN wave increased the load on the router's routing engine, after which all BGP sessions dropped and the router could not recover on its own. The attack on the router lasted only a few minutes, but because of the extra load the router could not process the full view from three uplinks at once, and the sessions were torn down again. Service was restored only by bringing the BGP sessions up one at a time. The rest of the attack was directed at the server itself.

Test bench and attack replay

The target of the test attack was a Juniper MX80 with the same firmware version as the production router. The attacker was a server with a 10 Gb network card running Ubuntu Server with Quagga. The traffic generator was a script calling the hping3 utility. To check the harmful effect of traffic spikes, the script generated traffic in bursts: 30 seconds of attack, then 2 seconds of silence. To keep the experiment clean, a BGP session was also established between the router and the server. In the production router's configuration at that time, the BGP and SSH ports were open on all of the router's interfaces and addresses and were not filtered. A similar configuration was loaded onto the bench router.
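The script itself is not reproduced in the article. A minimal sketch of such a burst generator could look like the following; it assumes hping3's `-S`, `-p`, `--flood` and `--rand-source` options, and the function names and the `dry_run` switch are purely illustrative. By default it only builds the schedule and runs nothing.

```python
import subprocess
import time

def syn_flood_cmd(target, port=179, random_source=False):
    """Build an hping3 command line for a TCP SYN flood against target:port."""
    cmd = ["hping3", "-S", "-p", str(port), "--flood"]
    if random_source:
        cmd.append("--rand-source")  # random source address for every packet
    cmd.append(target)
    return cmd

def run_bursts(cmd, cycles, attack_s=30, pause_s=2, dry_run=True):
    """Repeat: attack_s seconds of traffic, then pause_s seconds of silence."""
    log = []
    for _ in range(cycles):
        log.append(("attack", attack_s))
        if not dry_run:
            proc = subprocess.Popen(cmd)  # hping3 needs root for raw sockets
            time.sleep(attack_s)
            proc.terminate()
            proc.wait()
            time.sleep(pause_s)
        log.append(("pause", pause_s))
    return log

# Dry run against the bench router address used in this article.
stage1 = syn_flood_cmd("9.4.8.1")                      # source = real peer
stage2 = syn_flood_cmd("9.4.8.1", random_source=True)  # random sources
print(" ".join(stage1))
print(" ".join(stage2))
print(run_bursts(stage1, cycles=2))
```

With `dry_run=False` the loop would actually start and stop hping3, which only makes sense on an isolated bench like the one described here.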


The first stage of testing was a TCP SYN attack on the router's BGP port (179). The source IP address matched the peer address in the config. IP address spoofing could not be ruled out in the real attack, since our uplinks did not have uRPF enabled. The session was established. On the Quagga side:

    BGP router identifier 9.4.8.2, local AS number 9123
    RIB entries 3, using 288 bytes of memory
    Peers 1, using 4560 bytes of memory

    Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
    9.4.8.1         4  1234    1633    2000        0    0    0 00:59:56        0

    Total number of neighbors 1

On the Juniper side:

    user@MX80> show bgp summary
    Groups: 1 Peers: 1 Down peers: 0
    Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
    inet.0                 2          1          0          0          0          0
    Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
    9.4.8.2                4567        155        201       0     111       59:14 1/2/2/0              0/0/0/0

After the attack starts (13:52), about 1.2 Mpps of traffic hits the router:

or 380 Mbps:

The load on the router's RE and PFE CPUs increases:

After the hold timer expires (90 s), the BGP session drops and does not come back up:

    Jul 4 13:54:01 MX80 rpd[1407]: bgp_hold_timeout:4035: NOTIFICATION sent to 9.4.8.2 (External AS 4567): code 4 (Hold Timer Expired Error), Reason: holdtime expired for 9.4.8.2 (External AS 4567), socket buffer sndcc: 38 rcvcc: 0 TCP state: 4, snd_una: 3523671294 snd_nxt: 3523671313 snd_wnd: 114 rcv_nxt: 1556791630 rcv_adv: 1556808014, hold timer out 90s, hold timer remain 0s

The router is busy processing incoming TCP SYNs on the BGP port and cannot establish a session. A stream of packets keeps arriving on the port:

    user@MX80> monitor traffic interface ge-1/0/0 count 20
    13:55:39.219155  In IP 9.4.8.2.2097 > 9.4.8.1.bgp: S 1443462200:1443462200(0) win 512
    13:55:39.219169  In IP 9.4.8.2.27095 > 9.4.8.1.bgp: S 295677290:295677290(0) win 512
    13:55:39.219177  In IP 9.4.8.2.30114 > 9.4.8.1.bgp: S 380995480:380995480(0) win 512
    13:55:39.219184  In IP 9.4.8.2.57280 > 9.4.8.1.bgp: S 814209218:814209218(0) win 512
    13:55:39.219192  In IP 9.4.8.2.2731 > 9.4.8.1.bgp: S 131350916:131350916(0) win 512
    13:55:39.219199  In IP 9.4.8.2.2261 > 9.4.8.1.bgp: S 2145330024:2145330024(0) win 512
    13:55:39.219206  In IP 9.4.8.2.z39.50 > 9.4.8.1.bgp: S 1238175350:1238175350(0) win 512
    13:55:39.219213  In IP 9.4.8.2.2098 > 9.4.8.1.bgp: S 1378645261:1378645261(0) win 512
    13:55:39.219220  In IP 9.4.8.2.30115 > 9.4.8.1.bgp: S 1925718835:1925718835(0) win 512
    13:55:39.219227  In IP 9.4.8.2.27096 > 9.4.8.1.bgp: S 286229321:286229321(0) win 512
    13:55:39.219235  In IP 9.4.8.2.2732 > 9.4.8.1.bgp: S 1469740166:1469740166(0) win 512
    13:55:39.219242  In IP 9.4.8.2.57281 > 9.4.8.1.bgp: S 1179645542:1179645542(0) win 512
    13:55:39.219254  In IP 9.4.8.2.2262 > 9.4.8.1.bgp: S 1507663512:1507663512(0) win 512
    13:55:39.219262  In IP 9.4.8.2.914c/g > 9.4.8.1.bgp: S 1219404184:1219404184(0) win 512
    13:55:39.219269  In IP 9.4.8.2.2099 > 9.4.8.1.bgp: S 577616492:577616492(0) win 512
    13:55:39.219276  In IP 9.4.8.2.267 > 9.4.8.1.bgp: S 1257310851:1257310851(0) win 512
    13:55:39.219283  In IP 9.4.8.2.27153 > 9.4.8.1.bgp: S 1965427542:1965427542(0) win 512
    13:55:39.219291  In IP 9.4.8.2.30172 > 9.4.8.1.bgp: S 1446880235:1446880235(0) win 512
    13:55:39.219297  In IP 9.4.8.2.57338 > 9.4.8.1.bgp: S 206377149:206377149(0) win 512
    13:55:39.219305  In IP 9.4.8.2.2789 > 9.4.8.1.bgp: S 838483872:838483872(0) win 512
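As a quick sanity check, the two rates quoted for this stage (about 1.2 Mpps and 380 Mbps) can be compared with the size of a bare SYN. The sketch assumes the rates count IP bytes only and that each SYN is a 20-byte IPv4 header plus a 20-byte TCP header with no options or payload, which matches the zero-length segments in the capture.

```python
# Rates read off the graphs during the first test stage.
packets_per_second = 1.2e6  # ~1.2 Mpps
bits_per_second = 380e6     # ~380 Mbps

bytes_per_packet = bits_per_second / 8 / packets_per_second
print(f"average packet size: {bytes_per_packet:.1f} bytes")

# Assumption: IPv4 header (20 bytes) + TCP header (20 bytes), nothing else.
MIN_SYN_BYTES = 20 + 20
print(f"bare SYN size:       {MIN_SYN_BYTES} bytes")
```

The average comes out just under 40 bytes, so the two graphs agree with each other and with a flood of minimal SYN packets.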

The second stage of testing was again a TCP SYN attack on the router's BGP port (179), but this time the source IP address was chosen at random and did not match the peer address in the router config. The effect on the router was the same. To avoid padding the article with repetitive log output, I will show only the load graph:

The graph clearly shows the start of the attack. The BGP session again dropped and could not recover.

The concept of protecting the router's RE

A distinctive feature of Juniper equipment is the split of duties between the routing engine (RE) and the packet forwarding engine (PFE). The PFE handles all transit traffic, filtering and forwarding it according to rules prepared in advance. The RE handles traffic addressed to the router itself (traceroute, ping, ssh), processes packets for control and management services (BGP, NTP, DNS, SNMP), and builds the filtering and forwarding tables for the PFE.

The basic idea of protecting the router is to filter all traffic destined for the RE. A filter moves the load created by a DDoS attack from the RE CPU to the PFE CPU, so that the RE processes only legitimate packets and does not waste CPU time on anything else. To build the protection, we first have to decide what to filter. The filter layout for IPv4 is taken from Douglas Hanks Jr., Day One Book: Securing the Routing Engine on M, MX and T Series. In my case, the scheme on the router was as follows:

For IPv4:

- BGP - packets are filtered by source and destination IP; the source IP can be any address from the BGP neighbor list. Only established TCP connections are allowed, so the filter discards every SYN arriving at this port and a BGP session can only be initiated from our side (the uplink's BGP neighbor works in passive mode).

- TACACS+ - packets are filtered by source and destination IP; the source IP can only be from the internal network. Bandwidth is limited to 1 Mb/s.

- SNMP - packets are filtered by source and destination IP; the source IP can be any address from the list of SNMP clients in the config.

- SSH - packets are filtered by destination IP only; the source IP can be anything, since emergency access to the device may be needed from any network. Bandwidth is limited to 5 Mb/s.

- NTP - packets are filtered by source and destination IP; the source IP can be any address from the list of NTP servers in the config. Bandwidth is limited to 1 Mb/s (the threshold was later reduced to 512 Kb/s).

- DNS - packets are filtered by source and destination IP; the source IP can be any address from the list of DNS servers in the config. Bandwidth is limited to 1 Mb/s.

- ICMP - only packets addressed to the router's own addresses are passed. Bandwidth is limited to 5 Mb/s (the threshold was later reduced to 1 Mb/s).

- TRACEROUTE - only packets with a TTL of 1 and addressed to the router's own addresses are passed. Bandwidth is limited to 1 Mb/s.
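The BGP rule relies on the `tcp-established` match condition, which Junos documents as an alias for matching TCP packets with the ACK or RST flag set. The sketch below (function and constant names are illustrative) shows why this discards inbound connection attempts while still letting sessions opened from the router complete.

```python
# TCP flag bits as laid out in the TCP header.
FIN, SYN, RST, PSH, ACK = 0x01, 0x02, 0x04, 0x08, 0x10

def tcp_established(flags):
    """Approximation of the match condition: ACK or RST is set."""
    return bool(flags & (ACK | RST))

samples = {
    "inbound SYN (someone opens a session to us)": SYN,
    "SYN-ACK (peer answers a session we opened)": SYN | ACK,
    "ACK (data on an established session)": ACK,
    "RST": RST,
}
for name, flags in samples.items():
    verdict = "accept" if tcp_established(flags) else "not matched, discarded later"
    print(f"{name}: {verdict}")
```

A bare SYN never matches, so a SYN flood on port 179 falls through to the final discard filter regardless of its source address.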

For IPv6, in my case, filters were applied only to BGP, NTP, ICMP, DNS and traceroute. The only real difference is in ICMP filtering, since IPv6 relies on ICMP for its own operation (for example, neighbor discovery). The remaining services did not use IPv6 addressing.

Writing the configuration

Junos has a handy tool for writing filters: a prefix-list with apply-path, which builds lists of IP addresses and subnets dynamically from the configuration for use in filters. For example, the following construct creates a list of the IPv4 BGP neighbors specified in the config:

    prefix-list BGP-neighbors-v4 {
        apply-path "protocols bgp group <*> neighbor <*.*>";
    }

The resulting list:

    show configuration policy-options prefix-list BGP-neighbors-v4 | display inheritance
    ##
    ## apply-path was expanded to:
    ##     1.1.1.1/32;
    ##     2.2.2.2/32;
    ##     3.3.3.3/32;
    ##
    apply-path "protocols bgp group <*> neighbor <*.*>";
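The `<*.*>` and `<*:*>` wildcards are what separate IPv4 entries from IPv6 ones. The sketch below imitates that selection with Python's `fnmatch`; it only illustrates the matching idea and is not how Junos implements apply-path, and the IPv6 neighbor addresses are made up for the example.

```python
from fnmatch import fnmatch

# Sample neighbor addresses as they might appear under
# "protocols bgp group <name> neighbor <address>" (IPv6 ones are hypothetical).
neighbors = ["1.1.1.1", "2.2.2.2", "3.3.3.3", "2001:db8::1", "2001:db8::2"]

def expand(pattern, addresses, host_len):
    """Return host prefixes for the addresses that match the wildcard."""
    return [f"{addr}/{host_len}" for addr in addresses if fnmatch(addr, pattern)]

bgp_neighbors_v4 = expand("*.*", neighbors, 32)   # contains a dot: IPv4
bgp_neighbors_v6 = expand("*:*", neighbors, 128)  # contains a colon: IPv6
print(bgp_neighbors_v4)
print(bgp_neighbors_v6)
```

The practical benefit is that adding or removing a neighbor in the BGP configuration updates the filter lists without touching the filters themselves.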

We build dynamic prefix lists for all the filters:

    /* IPv4 BGP neighbors */
    prefix-list BGP-neighbors-v4 {
        apply-path "protocols bgp group <*> neighbor <*.*>";
    }
    /* IPv6 BGP neighbors */
    prefix-list BGP-neighbors-v6 {
        apply-path "protocols bgp group <*> neighbor <*:*>";
    }
    /* IPv4 NTP servers */
    prefix-list NTP-servers-v4 {
        apply-path "system ntp server <*.*>";
    }
    /* IPv6 NTP servers */
    prefix-list NTP-servers-v6 {
        apply-path "system ntp server <*:*>";
    }
    /* IPv4 addresses configured on the router */
    prefix-list LOCALS-v4 {
        apply-path "interfaces <*> unit <*> family inet address <*>";
    }
    /* IPv6 addresses configured on the router */
    prefix-list LOCALS-v6 {
        apply-path "interfaces <*> unit <*> family inet6 address <*>";
    }
    /* IPv4 SNMP clients */
    prefix-list SNMP-clients {
        apply-path "snmp client-list <*> <*>";
    }
    prefix-list SNMP-community-clients {
        apply-path "snmp community <*> clients <*>";
    }
    /* TACACS+ servers */
    prefix-list TACPLUS-servers {
        apply-path "system tacplus-server <*>";
    }
    prefix-list INTERNAL-locals {
        192.168.0.1/32;
    }
    /* Management interface addresses, used for SSH */
    prefix-list MGMT-locals {
        apply-path "interfaces fxp0 unit 0 family inet address <*>";
    }
    prefix-list rfc1918 {
        10.0.0.0/8;
        172.16.0.0/12;
        192.168.0.0/16;
    }
    /* Loopback */
    prefix-list localhost-v6 {
        ::1/128;
    }
    prefix-list localhost-v4 {
        127.0.0.0/8;
    }
    /* Local IPv4 addresses of BGP sessions */
    prefix-list BGP-locals-v4 {
        apply-path "protocols bgp group <*> neighbor <*.*> local-address <*.*>";
    }
    /* Local IPv6 addresses of BGP sessions */
    prefix-list BGP-locals-v6 {
        apply-path "protocols bgp group <*> neighbor <*:*> local-address <*:*>";
    }
    /* IPv4 DNS servers */
    prefix-list DNS-servers-v4 {
        apply-path "system name-server <*.*>";
    }
    /* IPv6 DNS servers */
    prefix-list DNS-servers-v6 {
        apply-path "system name-server <*:*>";
    }

Next, we create policers to limit bandwidth:

    /* 1 Mb/s */
    policer management-1m {
        apply-flags omit;
        if-exceeding {
            bandwidth-limit 1m;
            burst-size-limit 625k;
        }
        then discard;
    }
    /* 5 Mb/s */
    policer management-5m {
        apply-flags omit;
        if-exceeding {
            bandwidth-limit 5m;
            burst-size-limit 625k;
        }
        then discard;
    }
    /* 512 Kb/s */
    policer management-512k {
        apply-flags omit;
        if-exceeding {
            bandwidth-limit 512k;
            burst-size-limit 25k;
        }
        then discard;
    }

Below, ready to copy and paste, is the filter configuration of the final protection variant (the throughput thresholds for NTP and ICMP traffic have been lowered; the reasons are described in detail in the testing section). The IPv4 filters:

IPv4 filter

    /* BGP */
    filter accept-bgp {
        interface-specific;
        term accept-bgp {
            from {
                source-prefix-list { BGP-neighbors-v4; }
                destination-prefix-list { BGP-locals-v4; }
                tcp-established;
                protocol tcp;
                port bgp;
            }
            then {
                count accept-bgp;
                accept;
            }
        }
    }
    /* SSH */
    filter accept-ssh {
        apply-flags omit;
        term accept-ssh {
            from {
                destination-prefix-list { MGMT-locals; }
                protocol tcp;
                destination-port ssh;
            }
            then {
                policer management-5m;
                count accept-ssh;
                accept;
            }
        }
    }
    /* SNMP */
    filter accept-snmp {
        apply-flags omit;
        term accept-snmp {
            from {
                source-prefix-list {
                    SNMP-clients;
                    SNMP-community-clients;
                }
                destination-prefix-list { INTERNAL-locals; }
                protocol udp;
                destination-port [ snmp snmptrap ];
            }
            then {
                count accept-snmp;
                accept;
            }
        }
    }
    /* ICMP */
    filter accept-icmp {
        apply-flags omit;
        term discard-icmp-fragments {
            from {
                is-fragment;
                protocol icmp;
            }
            then {
                count discard-icmp-fragments;
                discard;
            }
        }
        term accept-icmp {
            from {
                protocol icmp;
                icmp-type [ echo-reply echo-request time-exceeded unreachable source-quench router-advertisement parameter-problem ];
            }
            then {
                policer management-1m;
                count accept-icmp;
                accept;
            }
        }
    }
    /* traceroute */
    filter accept-traceroute {
        apply-flags omit;
        term accept-traceroute-udp {
            from {
                destination-prefix-list { LOCALS-v4; }
                protocol udp;
                /* TTL = 1 */
                ttl 1;
                destination-port 33434-33450;
            }
            then {
                policer management-1m;
                count accept-traceroute-udp;
                accept;
            }
        }
        term accept-traceroute-icmp {
            from {
                destination-prefix-list { LOCALS-v4; }
                protocol icmp;
                /* TTL = 1 */
                ttl 1;
                icmp-type [ echo-request timestamp time-exceeded ];
            }
            then {
                policer management-1m;
                count accept-traceroute-icmp;
                accept;
            }
        }
        term accept-traceroute-tcp {
            from {
                destination-prefix-list { LOCALS-v4; }
                protocol tcp;
                /* TTL = 1 */
                ttl 1;
            }
            then {
                policer management-1m;
                count accept-traceroute-tcp;
                accept;
            }
        }
    }
    /* DNS */
    filter accept-dns {
        apply-flags omit;
        term accept-dns {
            from {
                source-prefix-list { DNS-servers-v4; }
                destination-prefix-list { LOCALS-v4; }
                protocol udp;
                source-port 53;
            }
            then {
                policer management-1m;
                count accept-dns;
                accept;
            }
        }
    }
    filter discard-all {
        apply-flags omit;
        term discard-ip-options {
            from {
                ip-options any;
            }
            then {
                count discard-ip-options;
                log;
                discard;
            }
        }
        term discard-TTL_1-unknown {
            from {
                ttl 1;
            }
            then {
                count discard-TTL_1-unknown;
                log;
                discard;
            }
        }
        term discard-tcp {
            from {
                protocol tcp;
            }
            then {
                count discard-tcp;
                log;
                discard;
            }
        }
        term discard-udp {
            from {
                protocol udp;
            }
            then {
                count discard-udp;
                log;
                discard;
            }
        }
        term discard-icmp {
            from {
                destination-prefix-list { LOCALS-v4; }
                protocol icmp;
            }
            then {
                count discard-icmp;
                log;
                discard;
            }
        }
        term discard-unknown {
            then {
                count discard-unknown;
                log;
                discard;
            }
        }
    }
    /* TACACS+ */
    filter accept-tacacs {
        apply-flags omit;
        term accept-tacacs {
            from {
                source-prefix-list { TACPLUS-servers; }
                destination-prefix-list { INTERNAL-locals; }
                protocol [ tcp udp ];
                source-port [ tacacs tacacs-ds ];
                tcp-established;
            }
            then {
                policer management-1m;
                count accept-tacacs;
                accept;
            }
        }
    }
    /* NTP */
    filter accept-ntp {
        apply-flags omit;
        term accept-ntp {
            from {
                source-prefix-list {
                    NTP-servers-v4;
                    localhost-v4;
                }
                destination-prefix-list {
                    localhost-v4;
                    LOCALS-v4;
                }
                protocol udp;
                destination-port ntp;
            }
            then {
                policer management-512k;
                count accept-ntp;
                accept;
            }
        }
    }
    filter accept-common-services {
        term protect-TRACEROUTE {
            filter accept-traceroute;
        }
        term protect-ICMP {
            filter accept-icmp;
        }
        term protect-SSH {
            filter accept-ssh;
        }
        term protect-SNMP {
            filter accept-snmp;
        }
        term protect-NTP {
            filter accept-ntp;
        }
        term protect-DNS {
            filter accept-dns;
        }
        term protect-TACACS {
            filter accept-tacacs;
        }
    }

The analogous filters for IPv6:

IPv6 filter

    /* BGP */
    filter accept-v6-bgp {
        interface-specific;
        term accept-v6-bgp {
            from {
                source-prefix-list { BGP-neighbors-v6; }
                destination-prefix-list { BGP-locals-v6; }
                tcp-established;
                next-header tcp;
                port bgp;
            }
            then {
                count accept-v6-bgp;
                accept;
            }
        }
    }
    /* ICMP */
    filter accept-v6-icmp {
        apply-flags omit;
        term accept-v6-icmp {
            from {
                next-header icmp6;
                icmp-type [ echo-reply echo-request time-exceeded router-advertisement parameter-problem destination-unreachable packet-too-big router-solicit neighbor-solicit neighbor-advertisement redirect ];
            }
            then {
                policer management-1m;
                count accept-v6-icmp;
                accept;
            }
        }
    }
    /* traceroute */
    filter accept-v6-traceroute {
        apply-flags omit;
        term accept-v6-traceroute-udp {
            from {
                destination-prefix-list { LOCALS-v6; }
                next-header udp;
                destination-port 33434-33450;
                hop-limit 1;
            }
            then {
                policer management-1m;
                count accept-v6-traceroute-udp;
                accept;
            }
        }
        term accept-v6-traceroute-tcp {
            from {
                destination-prefix-list { LOCALS-v6; }
                next-header tcp;
                hop-limit 1;
            }
            then {
                policer management-1m;
                count accept-v6-traceroute-tcp;
                accept;
            }
        }
        term accept-v6-traceroute-icmp {
            from {
                destination-prefix-list { LOCALS-v6; }
                next-header icmp6;
                icmp-type [ echo-reply echo-request router-advertisement parameter-problem destination-unreachable packet-too-big router-solicit neighbor-solicit neighbor-advertisement redirect ];
                hop-limit 1;
            }
            then {
                policer management-1m;
                count accept-v6-traceroute-icmp;
                accept;
            }
        }
    }
    /* DNS */
    filter accept-v6-dns {
        apply-flags omit;
        term accept-v6-dns {
            from {
                source-prefix-list { DNS-servers-v6; }
                destination-prefix-list { LOCALS-v6; }
                next-header udp;
                source-port 53;
            }
            then {
                policer management-1m;
                count accept-v6-dns;
                accept;
            }
        }
    }
    /* NTP */
    filter accept-v6-ntp {
        apply-flags omit;
        term accept-v6-ntp {
            from {
                source-prefix-list {
                    NTP-servers-v6;
                    localhost-v6;
                }
                destination-prefix-list {
                    localhost-v6;
                    LOCALS-v6;
                }
                next-header udp;
                destination-port ntp;
            }
            then {
                policer management-512k;
                count accept-v6-ntp;
                accept;
            }
        }
    }
    filter discard-v6-all {
        apply-flags omit;
        term discard-v6-tcp {
            from {
                next-header tcp;
            }
            then {
                count discard-v6-tcp;
                log;
                discard;
            }
        }
        term discard-v6-udp {
            from {
                next-header udp;
            }
            then {
                count discard-v6-udp;
                log;
                discard;
            }
        }
        term discard-v6-icmp {
            from {
                destination-prefix-list { LOCALS-v6; }
                next-header icmp6;
            }
            then {
                count discard-v6-icmp;
                log;
                discard;
            }
        }
        term discard-v6-unknown {
            then {
                count discard-v6-unknown;
                log;
                discard;
            }
        }
    }
    filter accept-v6-common-services {
        term protect-TRACEROUTE {
            filter accept-v6-traceroute;
        }
        term protect-ICMP {
            filter accept-v6-icmp;
        }
        term protect-NTP {
            filter accept-v6-ntp;
        }
        term protect-DNS {
            filter accept-v6-dns;
        }
    }

Next, the filters have to be applied to the lo0.0 interface. In Junos, traffic passing between the PFE and the RE goes through this interface. The configuration looks like this:

    lo0 {
        unit 0 {
            family inet {
                filter {
                    input-list [ accept-bgp accept-common-services discard-all ];
                }
            }
            family inet6 {
                filter {
                    input-list [ accept-v6-bgp accept-v6-common-services discard-v6-all ];
                }
            }
        }
    }

The order in which the filters are listed in the interface's input-list is very important. Each packet is checked against the filters in the input-list from left to right.
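Why the order matters can be seen in a simplified model of input-list evaluation: filters are tried in order, the first one whose term matches with a terminating action decides the packet's fate, and the last filter catches everything else. The packet fields, the sample addresses and the reduced set of terms are assumptions made for illustration; policers and counters are left out.

```python
BGP_NEIGHBORS = {"1.1.1.1", "2.2.2.2", "3.3.3.3"}  # sample addresses

def accept_bgp(pkt):
    # Established TCP on port 179 from a configured neighbor only.
    if (pkt["proto"] == "tcp" and pkt["port"] == 179
            and pkt["src"] in BGP_NEIGHBORS and pkt["established"]):
        return "accept"
    return None  # nothing matched: fall through to the next filter

def accept_common_services(pkt):
    if pkt["proto"] == "tcp" and pkt["port"] == 22:  # SSH only, for brevity
        return "accept"
    return None

def discard_all(pkt):
    return "discard"  # final catch-all

INPUT_LIST = [accept_bgp, accept_common_services, discard_all]

def evaluate(pkt, input_list=INPUT_LIST):
    """Return (deciding filter, action) for a packet."""
    for flt in input_list:
        action = flt(pkt)
        if action is not None:
            return flt.__name__, action
    return "implicit", "discard"

syn = {"proto": "tcp", "port": 179, "src": "1.1.1.1", "established": False}
est = {"proto": "tcp", "port": 179, "src": "1.1.1.1", "established": True}
print(evaluate(syn))
print(evaluate(est))
# With discard_all listed first, even legitimate BGP traffic is dropped.
print(evaluate(est, [discard_all, accept_bgp, accept_common_services]))
```

In this model a SYN from a neighbor address ends up in the catch-all, established BGP traffic is accepted by the first filter, and moving the discard filter to the front cuts off everything.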

Filter Testing

After applying the filters, I ran a series of tests on the same bench. The firewall counters were cleared after each test. The normal (no attack) load on the router can be seen on the charts at 11:06 - 11:08. The pps chart for the entire test period:

The CPU graph for the entire test period:

The first test was an ICMP flood with a traffic threshold of 5 Mb/s (on the charts, 10:21 - 10:24). The filter counters and the graph show that the traffic was rate-limited, but even this stream was enough to raise the load, so the threshold was reduced to 1 Mb/s. Counters:

    Filter: lo0.0-i
    Counters:
    Name                                          Bytes         Packets
    accept-bgp-lo0.0-i                            0             0
    accept-icmp-lo0.0-i                           47225584      1686628
    accept-ntp-lo0.0-i                            152           2
    accept-snmp-lo0.0-i                           174681        2306
    accept-ssh-lo0.0-i                            38952         702
    accept-traceroute-icmp-lo0.0-i                0             0
    accept-traceroute-tcp-lo0.0-i                 841           13
    accept-traceroute-udp-lo0.0-i                 0             0
    discard-TTL_1-unknown-lo0.0-i                 0             0
    discard-icmp-lo0.0-i                          0             0
    discard-icmp-fragments-lo0.0-i                0             0
    discard-ip-options-lo0.0-i                    0             0
    discard-tcp-lo0.0-i                           780           13
    discard-udp-lo0.0-i                           18743         133
    discard-unknown-lo0.0-i                       0             0
    Policers:
    Name                                          Bytes         Packets
    management-1m-accept-ntp-lo0.0-i              0             0
    management-1m-accept-traceroute-icmp-lo0.0-i  0             0
    management-1m-accept-traceroute-tcp-lo0.0-i   0             0
    management-1m-accept-traceroute-udp-lo0.0-i   0             0
    management-5m-accept-icmp-lo0.0-i             933705892     33346639
    management-5m-accept-ssh-lo0.0-i              0             0

The ICMP flood test was then repeated with a 1 Mb/s threshold (on the charts, 10:24 - 10:27). The load on the RE dropped from 19% to 10%, and the load on the PFE to 30%. Counters:

    Filter: lo0.0-i
    Counters:
    Name                                          Bytes         Packets
    accept-bgp-lo0.0-i                            0             0
    accept-icmp-lo0.0-i                           6461448       230766
    accept-ntp-lo0.0-i                            0             0
    accept-snmp-lo0.0-i                           113433        1497
    accept-ssh-lo0.0-i                            33780         609
    accept-traceroute-icmp-lo0.0-i                0             0
    accept-traceroute-tcp-lo0.0-i                 0             0
    accept-traceroute-udp-lo0.0-i                 0             0
    discard-TTL_1-unknown-lo0.0-i                 0             0
    discard-icmp-lo0.0-i                          0             0
    discard-icmp-fragments-lo0.0-i                0             0
    discard-ip-options-lo0.0-i                    0             0
    discard-tcp-lo0.0-i                           360           6
    discard-udp-lo0.0-i                           12394         85
    discard-unknown-lo0.0-i                       0             0
    Policers:
    Name                                          Bytes         Packets
    management-1m-accept-icmp-lo0.0-i             665335496     23761982
    management-1m-accept-ntp-lo0.0-i              0             0
    management-1m-accept-traceroute-icmp-lo0.0-i  0             0
    management-1m-accept-traceroute-tcp-lo0.0-i   0             0
    management-1m-accept-traceroute-udp-lo0.0-i   0             0
    management-5m-accept-ssh-lo0.0-i              0             0

Next came a flood on the router's BGP port from a foreign IP address, one not present in the config (on the charts, 10:29 - 10:36). As the counters show, the entire flood settled on the discard-tcp term of the RE filter and only increased the load on the PFE. The load on the RE did not change. Counters:

    Filter: lo0.0-i
    Counters:
    Name                                          Bytes         Packets
    accept-bgp-lo0.0-i                            824           26
    accept-icmp-lo0.0-i                           0             0
    accept-ntp-lo0.0-i                            0             0
    accept-snmp-lo0.0-i                           560615        7401
    accept-ssh-lo0.0-i                            33972         585
    accept-traceroute-icmp-lo0.0-i                0             0
    accept-traceroute-tcp-lo0.0-i                 1088          18
    accept-traceroute-udp-lo0.0-i                 0             0
    discard-TTL_1-unknown-lo0.0-i                 0             0
    discard-icmp-lo0.0-i                          0             0
    discard-icmp-fragments-lo0.0-i                0             0
    discard-ip-options-lo0.0-i                    0             0
    discard-tcp-lo0.0-i                           12250785600   306269640
    discard-udp-lo0.0-i                           63533         441
    discard-unknown-lo0.0-i                       0             0
    Policers:
    Name                                          Bytes         Packets
    management-1m-accept-icmp-lo0.0-i             0             0
    management-1m-accept-ntp-lo0.0-i              0             0
    management-1m-accept-traceroute-icmp-lo0.0-i  0             0
    management-1m-accept-traceroute-tcp-lo0.0-i   0             0
    management-1m-accept-traceroute-udp-lo0.0-i   0             0
    management-5m-accept-ssh-lo0.0-i              0             0

The session does not drop:

    user@MX80# run show bgp summary
    Groups: 1 Peers: 1 Down peers: 0
    Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
    inet.0                 2          1          0          0          0          0
    Peer          AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
    9.4.8.2     4567         21         22       0      76        8:49 1/2/2/0              0/0/0/0

The fourth test flooded the NTP port with UDP (10:41 - 10:46 on the charts); in the filter settings, traffic to this port is limited to the servers listed in the router config. According to the chart, only the PFE load rises, up to 28%. Counters:

    Filter: lo0.0-i
    Counters:
    Name                                              Bytes         Packets
    accept-bgp-lo0.0-i                                0             0
    accept-icmp-lo0.0-i                               0             0
    accept-ntp-lo0.0-i                                0             0
    accept-snmp-lo0.0-i                               329059        4344
    accept-ssh-lo0.0-i                                22000         388
    accept-traceroute-icmp-lo0.0-i                    0             0
    accept-traceroute-tcp-lo0.0-i                     615           10
    accept-traceroute-udp-lo0.0-i                     0             0
    discard-TTL_1-unknown-lo0.0-i                     0             0
    discard-icmp-lo0.0-i                              0             0
    discard-icmp-fragments-lo0.0-i                    0             0
    discard-ip-options-lo0.0-i                        0             0
    discard-tcp-lo0.0-i                               0             0
    discard-udp-lo0.0-i                               1938171670    69219329
    discard-unknown-lo0.0-i                           0             0
    Policers:
    Name                                              Bytes         Packets
    management-1m-accept-icmp-lo0.0-i                 0             0
    management-1m-accept-ntp-lo0.0-i                  0             0
    management-1m-accept-traceroute-icmp-lo0.0-i      0             0
    management-1m-accept-traceroute-tcp-lo0.0-i       0             0
    management-1m-accept-traceroute-udp-lo0.0-i       0             0
    management-5m-accept-ssh-lo0.0-i                  0             0

The fifth test flooded the NTP port with UDP using IP spoofing (10:41 - 11:04 on the charts). The load on the RE rose by 12%, and the load on the PFE rose to 22%. The counters show that the flood is capped at the 1 Mb/s threshold, but even that is enough to raise the load on the RE. The traffic threshold was eventually lowered to 512 Kb/s. Counters:

    Filter: lo0.0-i
    Counters:
    Name                                              Bytes         Packets
    accept-bgp-lo0.0-i                                0             0
    accept-icmp-lo0.0-i                               0             0
    accept-ntp-lo0.0-i                                34796804      1242743
    accept-snmp-lo0.0-i                               630617        8324
    accept-ssh-lo0.0-i                                20568         366
    accept-traceroute-icmp-lo0.0-i                    0             0
    accept-traceroute-tcp-lo0.0-i                     1159          19
    accept-traceroute-udp-lo0.0-i                     0             0
    discard-TTL_1-unknown-lo0.0-i                     0             0
    discard-icmp-lo0.0-i                              0             0
    discard-icmp-fragments-lo0.0-i                    0             0
    discard-ip-options-lo0.0-i                        0             0
    discard-tcp-lo0.0-i                               0             0
    discard-udp-lo0.0-i                               53365         409
    discard-unknown-lo0.0-i                           0             0
    Policers:
    Name                                              Bytes         Packets
    management-1m-accept-icmp-lo0.0-i                 0             0
    management-1m-accept-ntp-lo0.0-i                  3717958468    132784231
    management-1m-accept-traceroute-icmp-lo0.0-i      0             0
    management-1m-accept-traceroute-tcp-lo0.0-i       0             0
    management-1m-accept-traceroute-udp-lo0.0-i       0             0
    management-5m-accept-ssh-lo0.0-i                  0             0

The UDP flood on the NTP port with IP spoofing was then repeated with the 512 Kb/s traffic threshold (11:29 - 11:34 on the charts).

Counters:

    Filter: lo0.0-i
    Counters:
    Name                                              Bytes         Packets
    accept-bgp-lo0.0-i                                0             0
    accept-icmp-lo0.0-i                               0             0
    accept-ntp-lo0.0-i                                21066260      752363
    accept-snmp-lo0.0-i                               744161        9823
    accept-ssh-lo0.0-i                                19772         347
    accept-traceroute-icmp-lo0.0-i                    0             0
    accept-traceroute-tcp-lo0.0-i                     1353          22
    accept-traceroute-udp-lo0.0-i                     0             0
    discard-TTL_1-unknown-lo0.0-i                     0             0
    discard-icmp-lo0.0-i                              0             0
    discard-icmp-fragments-lo0.0-i                    0             0
    discard-ip-options-lo0.0-i                        0             0
    discard-tcp-lo0.0-i                               0             0
    discard-udp-lo0.0-i                               82745         602
    discard-unknown-lo0.0-i                           0             0
    Policers:
    Name                                              Bytes         Packets
    management-1m-accept-icmp-lo0.0-i                 0             0
    management-1m-accept-traceroute-icmp-lo0.0-i      0             0
    management-1m-accept-traceroute-tcp-lo0.0-i       0             0
    management-1m-accept-traceroute-udp-lo0.0-i       0             0
    management-512k-accept-ntp-lo0.0-i                4197080384    149895728
    management-5m-accept-ssh-lo0.0-i                  0             0

Conclusion

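As a result of the tests, we arrived at an RE filter configuration that withstands DDOS attacks. Counters from the MX80: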

    Filter: lo0.0-i
    Counters:
    Name                                              Bytes         Packets
    accept-v6-bgp-lo0.0-i                             31091878      176809
    accept-v6-icmp-lo0.0-i                            1831144       26705
    accept-v6-ntp-lo0.0-i                             0             0
    accept-v6-traceroute-icmp-lo0.0-i                 0             0
    accept-v6-traceroute-tcp-lo0.0-i                  48488         684
    accept-v6-traceroute-udp-lo0.0-i                  0             0
    discard-v6-icmp-lo0.0-i                           0             0
    discard-v6-tcp-lo0.0-i                            0             0
    discard-v6-udp-lo0.0-i                            0             0
    discard-v6-unknown-lo0.0-i                        0             0
    Policers:
    Name                                              Bytes         Packets
    management-1m-accept-v6-icmp-lo0.0-i              0             0
    management-1m-accept-v6-traceroute-icmp-lo0.0-i   0             0
    management-1m-accept-v6-traceroute-tcp-lo0.0-i    0             0
    management-1m-accept-v6-traceroute-udp-lo0.0-i    0             0
    management-512k-accept-v6-ntp-lo0.0-i             0             0

    Filter: lo0.0-i
    Counters:
    Name                                              Bytes         Packets
    accept-bgp-lo0.0-i                                135948400     698272
    accept-dns-lo0.0-i                                374           3
    accept-icmp-lo0.0-i                               121304849     1437305
    accept-ntp-lo0.0-i                                87780         1155
    accept-snmp-lo0.0-i                               1265470648    12094967
    accept-ssh-lo0.0-i                                2550011       30897
    accept-tacacs-lo0.0-i                             702450        11657
    accept-traceroute-icmp-lo0.0-i                    28824         636
    accept-traceroute-tcp-lo0.0-i                     75378         1361
    accept-traceroute-udp-lo0.0-i                     47328         1479
    discard-TTL_1-unknown-lo0.0-i                     27790         798
    discard-icmp-lo0.0-i                              26400         472
    discard-icmp-fragments-lo0.0-i                    0             0
    discard-ip-options-lo0.0-i                        35680         1115
    discard-tcp-lo0.0-i                               73399674      1572144
    discard-udp-lo0.0-i                               126386306     694603
    discard-unknown-lo0.0-i                           0             0
    Policers:
    Name                                              Bytes         Packets
    management-1m-accept-dns-lo0.0-i                  0             0
    management-1m-accept-icmp-lo0.0-i                 38012         731
    management-1m-accept-tacacs-lo0.0-i               0             0
    management-1m-accept-traceroute-icmp-lo0.0-i      0             0
    management-1m-accept-traceroute-tcp-lo0.0-i       0             0
    management-1m-accept-traceroute-udp-lo0.0-i       0             0
    management-512k-accept-ntp-lo0.0-i                0             0
    management-5m-accept-ssh-lo0.0-i                  0             0

The counters show that some "stray" traffic settles on the discard terms.
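The counter listings in this section can also be compared mechanically. The following is a minimal Python sketch, not a Junos tool: `parse_counters` and `policed_share` are hypothetical helper names, and the calculation assumes that a term counter counts only packets that passed the policer while the policer counter counts packets it discarded. The sample rows are copied from the two ICMP flood tests earlier in this section.

```python
import re

# Rows copied from the ICMP flood tests (5 Mb/s and 1 Mb/s thresholds).
TEST_5M = """
accept-icmp-lo0.0-i 47225584 1686628
management-5m-accept-icmp-lo0.0-i 933705892 33346639
"""
TEST_1M = """
accept-icmp-lo0.0-i 6461448 230766
management-1m-accept-icmp-lo0.0-i 665335496 23761982
"""

# One counter row: name, byte count, packet count.
ROW = re.compile(r"^(\S+)\s+(\d+)\s+(\d+)$")


def parse_counters(text):
    """Return {name: (bytes, packets)} for every 'name bytes packets' row."""
    counters = {}
    for line in text.splitlines():
        match = ROW.match(line.strip())
        if match:
            counters[match.group(1)] = (int(match.group(2)), int(match.group(3)))
    return counters


def policed_share(counters, term, policer):
    """Fraction of matching packets discarded by the policer.

    Assumption: the term counter holds passed packets and the policer
    counter holds discarded packets.
    """
    passed = counters[term][1]
    dropped = counters[policer][1]
    return dropped / (passed + dropped)


if __name__ == "__main__":
    tests = (
        ("5 Mb/s", TEST_5M, "management-5m-accept-icmp-lo0.0-i"),
        ("1 Mb/s", TEST_1M, "management-1m-accept-icmp-lo0.0-i"),
    )
    for label, text, policer in tests:
        counters = parse_counters(text)
        share = policed_share(counters, "accept-icmp-lo0.0-i", policer)
        print(f"{label}: {share:.1%} of ICMP packets discarded by the policer")
```

Under that assumption, the share of the flood discarded before it reaches the RE is higher with the 1 Mb/s threshold than with the 5 Mb/s one, which is consistent with the lower RE load observed in the second test.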

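Junos policers are token-bucket rate limiters, which is why lowering the threshold from 1 Mb/s to 512 Kb/s directly reduces the traffic that can reach the RE. The sketch below simulates a single-rate token bucket in Python. The burst size of 15,000 bytes, the 28-byte packet size, and the constant flood rate of 100,000 packets per second are illustrative assumptions, not values taken from the router config.

```python
def police(rate_bps, burst_bytes, pkt_bytes, pps, seconds):
    """Simulate a single-rate token bucket; return (passed, dropped) packets.

    All parameter values used below are hypothetical and chosen only
    to illustrate the effect of the rate limit.
    """
    tokens = float(burst_bytes)        # the bucket starts full
    refill = rate_bps / 8 / pps        # bytes of credit earned between packets
    passed = dropped = 0
    for _ in range(int(pps * seconds)):
        tokens = min(burst_bytes, tokens + refill)
        if tokens >= pkt_bytes:
            tokens -= pkt_bytes        # in profile: forward the packet
            passed += 1
        else:
            dropped += 1               # out of profile: discard the packet
    return passed, dropped


if __name__ == "__main__":
    for rate in (1_000_000, 512_000):
        passed, dropped = police(rate, burst_bytes=15_000, pkt_bytes=28,
                                 pps=100_000, seconds=10)
        print(f"{rate} bit/s: passed {passed}, dropped {dropped}")
```

With the same flood, roughly half as many packets pass at 512 Kb/s as at 1 Mb/s; the burst size only affects the first moments of the attack.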

Source: https://habr.com/ru/post/186566/
