Data compression during transfer from browser to server

Are you processing a lot of data in the browser? Do you need to send it back to the server?

And you want it to arrive quickly and fit into a single HTTP request?

In this article I will show how we solved this problem in a new project using compression and modern JavaScript features.


Task Description

Habr user aneto complained to me that Yandex.Direct handles overlapping keywords poorly. The task is relevant, yet practically impossible to do by hand, so we built a small service that solves it.

There are many keywords to process: tens of thousands of lines. The processing algorithm has quadratic complexity, so it is demanding on memory and CPU. It therefore makes sense to enlist the user's browser and move the processing from the server to the client.

During development, we ran into two problems:

- Over a slow connection, the data takes too long to transfer.

- The data often does not fit into a single POST request because of nginx / Apache / PHP / etc. limits.

Solution

There are many possible solutions. We chose one based on modern standards: Typed Arrays, Workers and XHR2. In a nutshell, we compress the data and send it to the server in binary form. These simple steps cut the size of the transmitted data by more than half.

Let's go through the algorithm step by step.

Step 0: Baseline

For the demo, I generated an array with assorted data about a set of users. The example loads it via JSONP and sends it back to the server.

Data loading code and the send function

```html
<script>
// JSONP callback: demoData.js calls it with the generated data
function setDemoData(data) {
  window.initialData = data;
}

function send(data) {
  var http = new XMLHttpRequest();
  http.open('POST', window.location.href, true);
  http.setRequestHeader('X-Requested-With', 'XMLHttpRequest');
  http.onreadystatechange = function() {
    if (http.readyState === 4) {
      if (http.status === 200) {
        // xhr success
      } else {
        // xhr error
      }
    }
  };
  http.send(data);
}
</script>
<script src="http://nodge.ru/habr/demoData.js"></script>
```

Let's try sending the data as-is and look at the debugger:

```javascript
var data = JSON.stringify(initialData);
send(data);
```

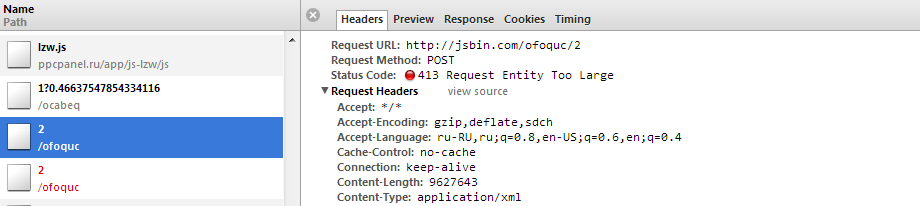

Sent as-is, the request is 9402 KB. That's a lot; let's cut it down.
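A JavaScript string's `length` counts UTF-16 code units, not bytes, so it understates the request size whenever the text contains non-ASCII characters (XHR encodes string bodies as UTF-8). A minimal sketch for checking the payload size without the debugger, assuming the standard `TextEncoder` and `Blob` APIs (any modern browser, or Node 18+); `payloadBytes` is a hypothetical helper name:

```javascript
// Hypothetical helper: number of bytes a payload will occupy in the request body.
// Strings are sent as UTF-8, so they are measured with TextEncoder;
// Blobs, ArrayBuffers and typed arrays report their size directly.
function payloadBytes(payload) {
  if (typeof payload === 'string') {
    return new TextEncoder().encode(payload).length;
  }
  if (payload instanceof Blob) {
    return payload.size;
  }
  return payload.byteLength; // ArrayBuffer or typed array
}
```

For example, `payloadBytes(JSON.stringify(initialData)) / 1024` gives the size in KB; for Cyrillic text it is roughly twice the string length.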

Step 1: Data Compression

At the time of writing, JavaScript had no built-in data compression functions. You can use any algorithm that suits you: LZW, Deflate, LZMA and others. The choice depends mainly on which libraries are available for the client and the server. Suitable JavaScript libraries are easy to find on GitHub: one, two, three.

We tried all three, but only LZW worked well with PHP. It is a very simple algorithm. The example uses the following implementation:

LZW compression function

```javascript
var LZW = {
  compress: function(uncompressed) {
    "use strict";
    var i, l, dictionary = {}, w = '', k, wk, result = [], dictSize = 256;
    // initial dictionary: one entry per single-byte character
    for (i = 0; i < dictSize; i++) {
      dictionary[String.fromCharCode(i)] = i;
    }
    for (i = 0, l = uncompressed.length; i < l; i++) {
      k = uncompressed.charAt(i);
      wk = w + k;
      if (dictionary.hasOwnProperty(wk)) {
        w = wk;
      } else {
        result.push(dictionary[w]);
        // remember the new sequence under the next free code
        dictionary[wk] = dictSize++;
        w = k;
      }
    }
    if (w !== '') {
      result.push(dictionary[w]);
    }
    // the server needs the final dictionary size to decode
    result.dictionarySize = dictSize;
    return result;
  }
};
```

The initial dictionary of this implementation covers only single-byte characters (codes 0 to 255), so we escape Unicode characters beforehand. The library is taken from here.
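The article calls `stringEscape()` but does not show it. A minimal sketch of what such a helper could look like, assuming it does what is described above: replace every non-ASCII UTF-16 code unit with a `\uXXXX` escape, the form that the PHP `unicode_decode()` in Step 3 turns back into UTF-8. The name matches the call in the article, but the body is an assumption, not the original library:

```javascript
// Hypothetical stand-in for the article's stringEscape():
// every UTF-16 code unit above 0x7F becomes a six-character "\uXXXX" escape,
// so the output is pure ASCII and each character is already in LZW's
// initial 256-entry dictionary. Characters outside the BMP become two escapes.
function stringEscape(str) {
  return str.replace(/[\u0080-\uffff]/g, function (ch) {
    var hex = ch.charCodeAt(0).toString(16);
    return '\\u' + ('0000' + hex).slice(-4);
  });
}
```

Because the escapes use JSON's own `\uXXXX` syntax, and `JSON.stringify` output contains non-ASCII characters only inside string literals, the escaped text is still valid JSON: `JSON.parse(stringEscape(JSON.stringify(obj)))` gives back an equal object.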

Now we compress the data and send it to the server:

```javascript
var data = JSON.stringify(initialData);
data = stringEscape(data); // escape Unicode characters
data = LZW.compress(data);
send(data.join('|'));
```

The request is now 6079 KB (65% of the original size), a saving of 3323 KB. A more sophisticated compression algorithm would do better, but let's move on to the next step.
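A more sophisticated algorithm no longer requires a third-party library: browsers have since gained the Compression Streams API (`CompressionStream`, available in current Chrome, Firefox and Safari, and in Node 18+). A minimal sketch that swaps LZW for gzip using that API; `gzipString` is a hypothetical helper name, and this is an alternative to the article's approach, not what the article used:

```javascript
// Hypothetical helper: gzip a string with the built-in Compression Streams API.
// The Blob encodes the text as UTF-8, so no Unicode escaping step is needed.
// Resolves to a Uint8Array that can be sent directly, e.g. send(new Blob([bytes])).
async function gzipString(text) {
  const compressed = new Blob([text])
    .stream()
    .pipeThrough(new CompressionStream('gzip'));
  return new Uint8Array(await new Response(compressed).arrayBuffer());
}
```

On the PHP side such a body could be decoded with `gzdecode(file_get_contents('php://input'))` before `json_decode()`.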

Step 2: Converting to binary

LZW compression produces an array of numbers, so sending it as a string is very inefficient: each code turns into several decimal digits plus a separator. It is much more efficient to send it as binary data.
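A back-of-the-envelope check of why the binary form wins: as text, each code costs one byte per decimal digit plus a `|` separator, while in a typed array every code costs a fixed 2 bytes (4 once the dictionary size exceeds 65535, the same threshold the packing code in this step uses). A minimal sketch; `compareEncodings` is a hypothetical helper that ignores the small header:

```javascript
// Hypothetical helper: bytes needed for an array of LZW codes sent as
// "|"-joined text versus packed into a Uint16Array/Uint32Array.
function compareEncodings(codes, dictionarySize) {
  var text = codes.join('|').length;            // ASCII digits, one byte each
  var width = dictionarySize > 65535 ? 4 : 2;   // bytes per packed element
  return { text: text, binary: codes.length * width };
}
```

For example, `compareEncodings([84, 256, 40000], 40001)` returns `{ text: 12, binary: 6 }`. A code of five digits costs 6 bytes as text against 2 in binary.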

For this we can use Typed Arrays :

```javascript
// 16- or 32-bit elements, depending on the LZW dictionary size
var type = data.dictionarySize > 65535 ? 'Uint32Array' : 'Uint16Array',
    count = data.length,
    buffer = new ArrayBuffer((count + 2) * window[type].BYTES_PER_ELEMENT),
    // header: element width, stored in the first byte of the first slot
    bufferBase = new Uint8Array(buffer, 0, 1),
    // LZW dictionary size
    bufferDictSize = new window[type](buffer, window[type].BYTES_PER_ELEMENT, 1),
    // compressed data
    bufferData = new window[type](buffer, window[type].BYTES_PER_ELEMENT * 2, count);

bufferBase[0] = type === 'Uint32Array' ? 32 : 16; // element width in bits
bufferDictSize[0] = data.dictionarySize;           // LZW dictionary size
bufferData.set(data);                              // the compressed codes
data = new Blob([buffer]);                         // wrap the ArrayBuffer in a Blob for XHR
send(data);
```

The request is now 3686 KB (39% of the original size), a saving of 5716 KB. It has shrunk to less than half of the original, which solves both problems described above.
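Typed arrays store numbers in the platform's native byte order, while the PHP reader in Step 3 unpacks them with `v`/`V`, i.e. as little-endian. That matches practically every current device, but the same layout can also be written with a `DataView`, which takes the byte order as an explicit argument. A minimal sketch producing the same layout (header slot with the width in its first byte, then the dictionary size, then the codes); `packCodes` is a hypothetical name:

```javascript
// Hypothetical alternative to the typed-array packing above, with explicit
// little-endian byte order (what PHP's unpack('v') / unpack('V') expects).
// Layout: [width in bits, zero-padded to one element][dictionary size][codes...]
function packCodes(codes, dictionarySize) {
  var width = dictionarySize > 65535 ? 4 : 2; // bytes per element
  var buffer = new ArrayBuffer((codes.length + 2) * width);
  var view = new DataView(buffer);
  var write = width === 4
    ? function (offset, value) { view.setUint32(offset, value, true); }
    : function (offset, value) { view.setUint16(offset, value, true); };

  view.setUint8(0, width * 8);          // header: 16 or 32
  write(width, dictionarySize);         // LZW dictionary size
  for (var i = 0; i < codes.length; i++) {
    write((i + 2) * width, codes[i]);   // compressed data
  }
  return buffer;
}
```

Usage would then be `send(new Blob([packCodes(data, data.dictionarySize)]))`.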

Step 3: Processing on the server

On the server, the data must be unpacked before processing, naturally with the same algorithm as on the client. Here is how this can be done in PHP:

PHP processing example

```php
<?php
$data = readBinaryData(file_get_contents('php://input'));
$data = lzw_decompress($data);
$data = unicode_decode($data);
$data = json_decode($data, true);

function readBinaryData($buffer) {
    $bufferType = unpack('C', $buffer); // header byte: element width in bits
    if ($bufferType[1] === 16) {
        $dataSize = 2;
        $unpackModifier = 'v'; // unsigned 16-bit, little-endian
    } else {
        $dataSize = 4;
        $unpackModifier = 'V'; // unsigned 32-bit, little-endian
    }
    $buffer = substr($buffer, $dataSize); // remove type from buffer
    $data = new SplFixedArray(strlen($buffer) / $dataSize);
    $stepCount = 2500; // unpack 2500 elements at a time to limit memory use
    for ($i = 0, $l = $data->getSize(); $i < $l; $i += $stepCount) {
        if ($i + $stepCount < $l) {
            $bytesCount = $stepCount * $dataSize;
            $currentBuffer = substr($buffer, 0, $bytesCount);
            $buffer = substr($buffer, $bytesCount);
        } else {
            $currentBuffer = $buffer;
            $buffer = '';
        }
        $dataPart = unpack($unpackModifier . '*', $currentBuffer);
        $p = $i;
        foreach ($dataPart as $item) {
            $data[$p] = $item;
            $p++;
        }
    }
    return $data;
}

function lzw_decompress($compressed) {
    $dictSize = 256; // initial dictionary: single-byte characters
    // $compressed[0] is the final dictionary size sent by the client
    $dictionary = new SplFixedArray($compressed[0]);
    for ($i = 0; $i < $dictSize; $i++) {
        $dictionary[$i] = chr($i);
    }
    $i = 1;
    $w = chr($compressed[$i++]);
    $result = $w;
    for ($l = count($compressed); $i < $l; $i++) {
        $entry = '';
        $k = $compressed[$i];
        if (isset($dictionary[$k])) {
            $entry = $dictionary[$k];
        } else {
            if ($k === $dictSize) {
                $entry = $w . $w[0];
            } else {
                return null;
            }
        }
        $result .= $entry;
        $dictionary[$dictSize++] = $w . $entry[0];
        $w = $entry;
    }
    return $result;
}

function replace_unicode_escape_sequence($match) {
    return mb_convert_encoding(pack('H*', $match[1]), 'UTF-8', 'UCS-2BE');
}

function unicode_decode($str) {
    return preg_replace_callback('/\\\\u([0-9a-f]{4})/i', 'replace_unicode_escape_sequence', $str);
}
```

For other languages it should be just as easy.
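As an illustration of another server language, a minimal sketch of the same decoding in Node.js. It assumes the request body has already been collected into a `Buffer` (for example with `Buffer.concat` over the request's `data` chunks) and that the client escaped Unicode characters as JSON-style `\uXXXX` sequences. It mirrors `readBinaryData()` and `lzw_decompress()` above; all function names are hypothetical. Since such escapes are valid JSON, `JSON.parse` decodes them and no separate `unicode_decode()` step is needed, and because JavaScript arrays grow on demand, the dictionary size from the header is only returned for reference:

```javascript
// Hypothetical Node.js counterpart of readBinaryData():
// parses [width header][dictionary size][codes...], little-endian.
function readLzwPayload(buf) {
  var bits = buf.readUInt8(0); // first byte: element width in bits
  if (bits !== 16 && bits !== 32) {
    throw new Error('Unexpected element width: ' + bits);
  }
  var size = bits / 8;
  if (buf.length % size !== 0 || buf.length < 2 * size) {
    throw new Error('Truncated payload');
  }
  var read = function (offset) {
    return size === 2 ? buf.readUInt16LE(offset) : buf.readUInt32LE(offset);
  };
  var codes = [];
  for (var offset = 2 * size; offset < buf.length; offset += size) {
    codes.push(read(offset));
  }
  return { dictionarySize: read(size), codes: codes };
}

// Counterpart of lzw_decompress(): rebuilds the string, null on an invalid code.
function lzwDecompress(codes) {
  var dictionary = [], dictSize = 256, i, entry;
  for (i = 0; i < dictSize; i++) {
    dictionary[i] = String.fromCharCode(i); // initial single-byte entries
  }
  if (codes.length === 0) return '';
  if (!(codes[0] < dictSize)) return null;
  var w = dictionary[codes[0]], result = [w];
  for (i = 1; i < codes.length; i++) {
    var k = codes[i];
    if (k < dictSize) {
      entry = dictionary[k];
    } else if (k === dictSize) {
      entry = w + w.charAt(0); // code for the entry being defined right now
    } else {
      return null;
    }
    result.push(entry);
    dictionary[dictSize++] = w + entry.charAt(0);
    w = entry;
  }
  return result.join('');
}

// Whole pipeline: binary payload -> LZW codes -> escaped JSON text -> value.
function decodeRequestBody(buf) {
  var text = lzwDecompress(readLzwPayload(buf).codes);
  if (text === null) throw new Error('Corrupt LZW data');
  return JSON.parse(text);
}
```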

Step 4: Workers

Since the code above compresses a fairly large amount of data, the page freezes while compression runs, which is quite unpleasant. To avoid this, we run all the computation in a separate thread; JavaScript provides Workers for exactly that. How to use Workers is shown in the full example below and in the documentation.
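A minimal sketch of moving a computation off the main thread without a separate worker file: the worker script is built as a string and loaded from a Blob URL. It assumes `job` is a self-contained function (no references to outer variables), because only its source text is copied into the worker. `buildWorkerSource` and `runInWorker` are hypothetical names, not the code from the article's full example:

```javascript
// Hypothetical helper: source text of a one-off worker that applies `job`
// to the message it receives and posts the result back.
// `job` must be self-contained: only its source text is copied.
function buildWorkerSource(job) {
  return 'self.onmessage = function (e) {\n' +
    '  var result = (' + job.toString() + ')(e.data);\n' +
    // ArrayBuffers are transferred rather than copied
    '  self.postMessage(result, result instanceof ArrayBuffer ? [result] : []);\n' +
    '};';
}

// Hypothetical helper (browser only): runs job(input) in a Worker
// loaded from a Blob URL and resolves with the result.
function runInWorker(job, input) {
  return new Promise(function (resolve, reject) {
    var url = URL.createObjectURL(
      new Blob([buildWorkerSource(job)], { type: 'text/javascript' }));
    var worker = new Worker(url);
    function cleanup() {
      worker.terminate();
      URL.revokeObjectURL(url);
    }
    worker.onmessage = function (e) { cleanup(); resolve(e.data); };
    worker.onerror = function (e) { cleanup(); reject(e); };
    worker.postMessage(input);
  });
}
```

For example, `runInWorker(function (s) { return s.length; }, 'abc')` resolves to 3. In practice the job would contain the escaping, LZW and packing code, and keeping that code in a separate worker file, as the article's full example does, is usually simpler.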

Step 5: Browser Support

Obviously, the JavaScript code above will not work in IE6 =)

It requires Typed Arrays, XHR2 and Workers.

Supported browsers: IE10+, Firefox 21+, Chrome 26+, Safari 5.1+, Opera 15+, iOS 5+, Android 4.0+ (without Workers).

To check for support, you can use Modernizr or something like this code:

Determine support for required standards

```javascript
var compressionSupported = (function() {
  var check = [
    'Worker',
    'Uint16Array', 'Uint32Array', 'ArrayBuffer', // Typed Arrays
    'Blob', 'FormData' // XHR2
  ];
  var supported = true;
  for (var i = 0, l = check.length; i < l; i++) {
    if (!(check[i] in window)) {
      supported = false;
      break;
    }
  }
  return supported;
})();
```

Examples

The code from the article is published on JS Bin: page, worker. Open the page, open the developer tools and look at the sizes of the three POST requests.

In a real project, the solution runs here. You can download the test file, add something unique to it to bypass the cache, and upload it for processing.

Conclusion

Of course, this approach does not suit every case, but it has its place. Sometimes it is simpler or wiser to make several requests instead of compressing. And if your data is numeric to begin with, there is no need to convert it to a string and compress it: just use Typed Arrays.
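For data that is numeric from the start, a minimal sketch of skipping the string conversion and compression altogether. `packNumbers` is a hypothetical helper, and choosing between `Int32Array` and `Float64Array` is an illustrative assumption rather than something the article prescribes:

```javascript
// Hypothetical helper: pack numeric data straight into a typed array.
// If every value is a 32-bit integer, 4 bytes each; otherwise fall back to
// 64-bit floats, which hold every JavaScript number exactly.
// Byte order is the platform's (little-endian on practically all devices).
function packNumbers(values) {
  var allInt32 = values.every(function (v) {
    return Object.is(v | 0, v); // false for fractions, NaN, -0, out-of-range
  });
  var array = allInt32 ? new Int32Array(values) : new Float64Array(values);
  return { type: array.constructor.name, buffer: array.buffer };
}
```

The buffer can be sent with `send(new Blob([packed.buffer]))`; the server also needs `packed.type`, for example as a header byte like the one in Step 2.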

Summary:

- You can use compression not only server → client, but also client → server.

- XHR 2 and Typed Arrays can significantly reduce the amount of transmitted data.

- Workers keep the page responsive while the data is being processed.

- And, of course, don't send data you don't need.

I'll be glad to answer questions and accept improvements to the code. I have checked for errors and typos, but just in case, please report any in private messages. All the best.

UPDATE 1:

A word about images. Most formats (JPEG, PNG, GIF) are already compressed, so there is no point in compressing them again. Images should be sent as binary data rather than as a base64 string. I made a small canvas example showing how to convert base64 to a Blob.
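A minimal sketch of such a base64-to-Blob conversion, assuming a `data:` URL like the one returned by `canvas.toDataURL()`; `dataURLToBlob` is a hypothetical name, not the code from the author's canvas example. Where available, `canvas.toBlob(callback, type)` produces the Blob directly and skips base64 altogether:

```javascript
// Hypothetical helper: convert a base64 data URL (e.g. from canvas.toDataURL())
// into a binary Blob, so the image is sent as raw bytes rather than base64 text.
function dataURLToBlob(dataURL) {
  var comma = dataURL.indexOf(',');
  var header = dataURL.slice(0, comma);         // e.g. "data:image/png;base64"
  var mime = header.slice(5).split(';')[0];     // strip "data:" and parameters
  var binary = atob(dataURL.slice(comma + 1));  // base64 -> one char per byte
  var bytes = new Uint8Array(binary.length);
  for (var i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return new Blob([bytes], { type: mime });
}
```

Base64 encodes every 3 bytes as 4 characters, so sending the Blob instead of the string shrinks an image request by about a quarter.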

UPDATE 2:

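If you use or plan to use SSL, read this article. SSL already provides two-way compression of requests. Note, however, that TLS-level compression has since been disabled in browsers because of the CRIME attack and was removed in TLS 1.3, so it should not be relied on today.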

UPDATE 3:

Replaced base64 with escaping of Unicode characters, which turned out to be much more efficient. Thanks to consumer, seriyPS and TolTol.

Source: https://habr.com/ru/post/186202/
