The development of custom data types in programming

I would like to pause and look at the development of programming languages through the lens of user-defined data types.

One caveat up front: by users I mean programmers, the people who write code in these languages, as well as those who maintain that code or simply read it.

User data types are data types that can be created by the user based on what is available in the language.

Users want to have such data types.

Users have always wanted to assemble data the way they see fit. They wanted it, they want it, and they surely will want it: more of it, more varied, and more powerful.

That is why it is useful to follow the development of user-defined data types in programs and programming languages.

No user data types

Once there were no user data types at all. People managed somehow.

At the dawn of the computer era there was little to choose from: machine languages only. Either you obeyed their dictates or you did not program at all. The dictates were simple: use small fixed-width numbers in binary or binary-coded decimal (or whatever the processor worked with) and the processor's own instructions.

We will not touch those "dark ages".

Suffice it to say that there were no user data types then, yet programmers somehow survived and somehow wrote programs.

Built-in data types

Built-in types are so comfortable! As long as you use them the way their developers intended ...

The first more or less normal language was assembler (in fact there are many assemblers; we are talking about the family of languages that appeared in the 50s). Besides readability, it brought a lot that was new in terms of user data.

Its greatest and undeniable achievement is the ability to create variables! Since then, almost every language has included that ability.

The second, no less important achievement of assembler, made with the best of intentions, was the attempt to build every data type a programmer might need directly into the language.

The rest were small things, above all the ability to write numbers not only in binary but also in hexadecimal, octal, and binary-coded decimal.

At the time it seemed: what more could a user need?

Years passed, and the need grew not only for high-level abstractions but also for user data types.

And then came 1957, and with it FORTRAN.

Code written in it looked almost like code in modern languages, although its punch-card form may shock anyone who tries to read it.

Fortran gave everything that was needed ... for calculating the flight of ballistic missiles: integers, floating-point (real) numbers, and complex numbers.

Strings? Who needs them on punch cards? They would appear in FORTRAN later: real strings only after 20 years, and an imitation of them after 10 (together with the logical data type).

Fortran also gave an almost-real user data type, the array (although it was used somewhat differently from today); more on this in the part on group data types.

But users never have enough: they want more and more user data types.

And so Algol appeared, as early as 1958; programs written in it are easy to read even today.

It was Algol that laid the foundation of what is now everywhere: Boolean types, strings, a variety of integer types, and floating-point numbers. A little later Fortran would adopt all of this, but Algol was the pioneer.

It would seem that all appetites were satisfied. What other types could users need? Everything was already implemented: just take it and use it.

And then, hard on the heels of Algol and FORTRAN, another and quite different language appeared in 1958: Lisp.

Lisp can do unimaginable things with functions. But how do you live with that?

It gave another, completely new data type, a real user data type: functions (as S-expressions), which began to enter mainstream languages firmly only at the start of the 21st century (above all thanks to the multi-paradigm languages Python and Ruby). Considering that Lisp also lets you work with macros, something like programming with eval (in 1958!), it is not surprising that the world was not ready for it.

But is the world ready for Lisp now? Probably not.

Let us focus on why. Lisp, like any functional language, works with tightly interconnected objects, somewhat like the meshed gears of a mechanical clock. Unlike in imperative languages, anything wedged into one gear stops the whole mechanism. Because of this, the demands on the language, including its user data types, are much stricter. The problems that arose in Lisp immediately became acute in imperative languages only by the end of the 80s.

Lisp makes it possible to build any S-expression. But it gives only one toolkit: the standard, simple toolkit for working with S-expressions.

So it is possible to write any user data, but you can work with it only as with primitives. The development of Lisp-like languages has shown that no good tools have yet been found for S-expressions that have not been written yet.

Not everyone stayed satisfied for long. Still, the development of user data types slowed down for almost a decade.

If something was wanted, the data type was built directly into the language. But in those days languages did not evolve and were not updated as quickly as they are now, so programmers mostly resorted to imitating custom data types.

An imitation differs little from a real data type in functionality. In those days imitation was mostly based on emulation with functions (procedural style).

The main difference from real user types is this: if you want to change the data type "slightly", you have to rewrite all of the functionality. Nobody wanted to do that.

So imitations began to be made more flexible.

What I am about to say is known to everyone; what matters here is not the imitation techniques themselves but the angle from which we look at them.

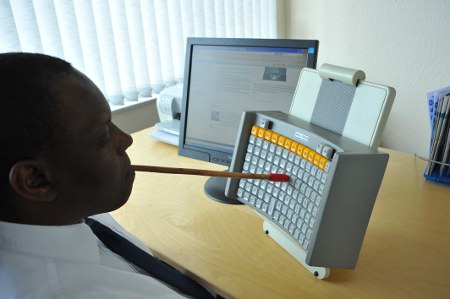

Sometimes you need to work only with the opportunities that are

First came functionality configured by flags. This is not the easiest method, but it closely resembled assembler practice, which was still familiar at the time.

The essence of passing flags is simple: a number is passed as a parameter and is read as a binary sequence of flags. Despite their widespread use, most languages still have no dedicated data type for a set of flags. Instead a good substitute was found: named constants and bitwise operations that look like logical ones.
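The flag technique can be sketched in a few lines. This is only an illustration in Python, not code from any particular library; the constant names `OPT_READ`, `OPT_WRITE`, `OPT_APPEND` and the function `describe` are hypothetical.

```python
# Hypothetical flag names; each named constant occupies exactly one bit.
OPT_READ = 0b001
OPT_WRITE = 0b010
OPT_APPEND = 0b100


def describe(options):
    """Return the names of the flags that are set in the integer `options`."""
    names = []
    if options & OPT_READ:        # bitwise AND tests a single flag
        names.append("read")
    if options & OPT_WRITE:
        names.append("write")
    if options & OPT_APPEND:
        names.append("append")
    return names


mode = OPT_READ | OPT_APPEND            # bitwise OR combines flags
mode_without_read = mode & ~OPT_READ    # AND with the complement clears a flag
```

The whole configuration travels as one plain integer, which is exactly why no dedicated data type is needed for it.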

The simplest method, still widely used everywhere, is configuration by parameters: the more you need to configure, the more parameters you pass. It has one drawback: it is too easy to confuse the order of the parameters. That is why such functions are usually kept to at most 4-5 arguments.

For more arguments, once real user data types had developed (above all group and composite ones), it became possible to pass a single complex argument: the same configuration, only laid out vertically as well as horizontally.
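As a rough sketch of the "one complex argument" idea, assuming Python and a made-up plotting example (`PlotConfig` and `render` are hypothetical names, not part of any real library):

```python
from dataclasses import dataclass


@dataclass
class PlotConfig:
    """All settings grouped into one composite value instead of many positional parameters."""
    width: int = 640
    height: int = 480
    title: str = ""
    grid: bool = False


def render(config):
    """Pretend to render a plot; returns a textual summary of the configuration."""
    grid = "grid" if config.grid else "no grid"
    title = config.title or "untitled"
    return f"{title} {config.width}x{config.height}, {grid}"
```

A call such as `render(PlotConfig(title="Sales", grid=True))` names every setting it changes, so the order of arguments can no longer be confused.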

A further development of this method is the creation of embedded domain-specific languages (DSLs) for configuring functions.

The third way of making imitations flexible was the invention of handles, although they were not called that at the time, and often substitutes stood in for them: numbers and strings rather than references or pointers.

The era of built-in data types seemed to be over.

But 1972 came, and with it ... C. The era of the dinosaurs (built-in data types) went on for another decade, although user data types began to win their place in the sun.

Including in C itself.

But for now let us return to one more built-in data type, one of the reasons for the language's growing popularity. C brought into a high-level language a low-level kind of data that had existed in assembler and had been completely forgotten by the first high-level languages: dynamic types. These are, above all, references and pointers, together with a service value meaning "no data": null.

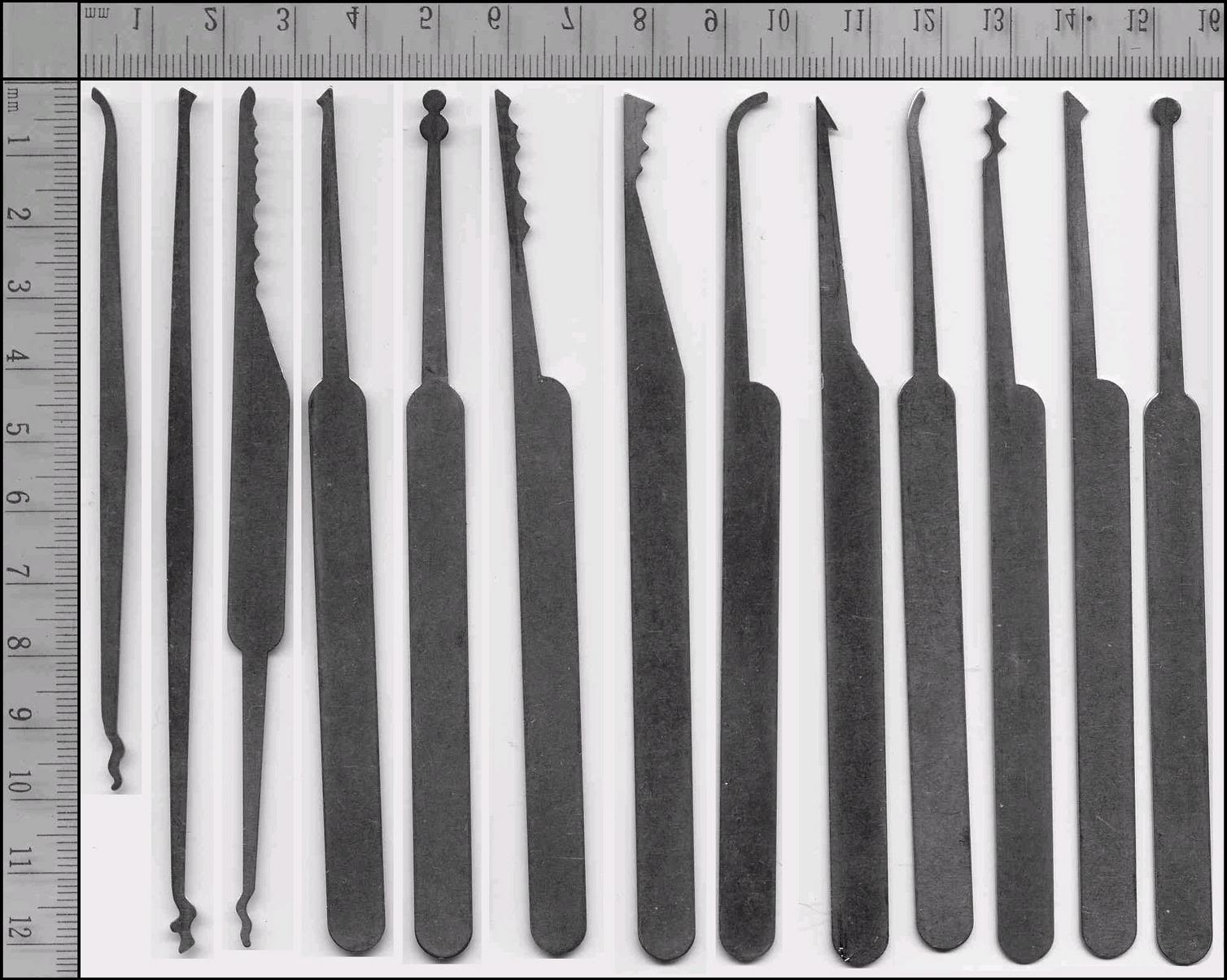

Dynamic data types are like master keys: inconspicuous, but what secret rooms can you not reach with them?!

A reference can be seen as one way of implementing a kind of user data type: the synonym (alias).

A further development of synonyms can be found in PHP with its variable variables, where you can get the value of a variable or call a function whose name is stored in the value of another variable.

In C, a function can be called directly, or it can be passed along as a callback.

Dynamic data types also help compiled code run considerably faster.

On top of these advantages, dynamic data types have another huge plus: with them it is fairly simple to implement something that was never built into the language. There is one annoyance: whatever is written with them can be accessed only with the tools for working with dynamic data. But the workaround is already familiar from imitating data types: hide everything inside functions and return a reference or pointer to what was created, that is, a handle. Handles are one of those data types that go by quite different names in different languages.

For example, in PHP they are called resources, and in Erlang ports and process identifiers. Cobol has data types such as the file and the picture.

However, dynamic data types do not bring only advantages. The drawbacks, too, are sometimes very large.

The more freedom a language gives in using dynamic data types:

1) the more possibilities there are for creating something that was not built into the language (and not necessarily good ones)

2) the less the compiler interferes with the user's actions, and all responsibility for them falls on the programmer

3) the more sharply code safety drops

4) the more sharply the risk of injections into the namespace rises

5) the less the garbage collector intervenes, and all responsibility falls on the user

Further history has shown that the creators of later languages, and those adding dynamic data to existing languages, have had to strike a balance between safety and capability.

The end of the 70s was approaching, and these basic built-in data types began to move to the periphery and become routine, giving way to real user data types.

However, reality sometimes springs surprises.

Who knows how much more can be found in the good old, long-understood data types?

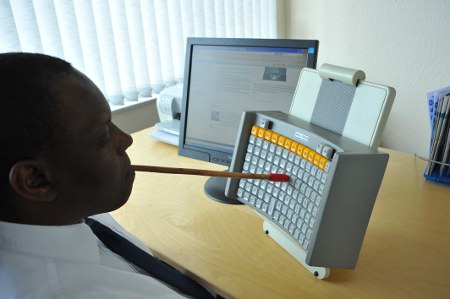

Sometimes you just need to see something new where everything has long been known. As in, for example, this sorting of M&Ms.

Then, in the late 70s, the scripting language AWK appeared (building on the grep utility), and a decade later, in 1987, Perl appeared, based on it. Among other things it had (and still has) such an exotic built-in data type as regular expressions.

Perl helped people look at an old data type, the string, from a new side. The string tools of earlier languages could now be seen as simplified parsers.

Regular expression languages proved to be very flexible and extremely powerful tools for working with character data.
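To show what "a regular expression as a small parser" means in practice, here is a sketch in Python (used for illustration here instead of Perl). The log-line format and the names `LOG_LINE` and `parse` are assumptions made up for this example.

```python
import re

# Assumed line format: "<YYYY-MM-DD> <LEVEL> <free text>".
LOG_LINE = re.compile(r"(?P<date>\d{4}-\d{2}-\d{2}) (?P<level>[A-Z]+) (?P<message>.*)")


def parse(line):
    """Split a log line into named fields, or return None if it does not match."""
    match = LOG_LINE.fullmatch(line)
    return match.groupdict() if match else None
```

One pattern replaces what would otherwise be a hand-written loop over characters with several index variables.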

Some groups are big. You want to work with them quickly

Group data types are essentially a collection of something that already exists in the language, as a rule a homogeneous (monomorphic) grouping. Often these data types are not user-defined but built in; however, they are sometimes so flexible that this is enough.

Fortran already supported group data types in the form of arrays, although they looked somewhat different from today's. Arrays very similar to modern ones were already present in Algol. Pascal had sets (set).

Lisp had lists (list).

Then came hash tables, associative arrays, vectors, heaps, queues, stacks, trees ...

Some were built in; others were imitated or created with custom data types.

The further development of group data types split into two branches.

1) Having to use a separate set of functions for each group data type was far from satisfactory; people wanted to work with all of them uniformly. The main tools for this in imperative languages are collections and iterators. Most of them were added in the early 2000s.

2) In the 80s, as the volume of data grew, the need for richer tools for working with group data types grew by leaps and bounds. Databases appeared, and with them queries and query languages. In the mid-80s the Structured Query Language (SQL) became dominant. Just as parsers did for strings, query languages made it clear that the toolkit used for group data types can be seen as a primitive query language. Databases are, as a rule, kept outside the language, and the language contains only the means of working with them, so they cannot be considered full-fledged user-defined data types. Given their flexibility, though, this hardly matters.
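Both branches can be illustrated with a short Python sketch (the names `total_length` and `long_words` are hypothetical). The first function relies only on the iterator protocol, so it works with any group type; the second is a comprehension that reads like a primitive query.

```python
def total_length(items):
    """Sum the lengths of the elements of any iterable, whatever its concrete type."""
    return sum(len(item) for item in items)


def long_words(items, minimum):
    """A tiny 'query': select and sort the elements at least `minimum` characters long."""
    return sorted(word for word in items if len(word) >= minimum)


words_list = ["ab", "cde"]
words_set = {"ab", "cde"}
words_dict = {"ab": 1, "cde": 2}             # iterating a dict yields its keys
words_gen = (word for word in ["ab", "cde"])  # a lazy generator is iterable too
```

The same `total_length` call accepts the list, the set, the dictionary, and the generator without any type-specific code.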

In the era of built-in data types, different languages offered support for some very exotic built-in types.

In C, for example, one such type was the enumeration. It was first introduced by Pascal (1970), where it was called a scalar type.

What an enumeration is can be explained even to children, counting on their fingers

The enumeration is the most genuine user data type! It would seem C deserves a monument for it. But no, at best a gravestone.

The fact is that users got the ability to build any enumerations they liked. But C offers nothing for working with them (Pascal had a basic set of tools). Nothing at all. You can create enumerations, but you cannot work with them.

Since C was the mainstream, few people wanted to add this data type to other languages. Only C++11 brought at least some tools for working with enumerations.

This example, like the development of Lisp, showed how important it is to have not only user-defined data types but also tools for working with them.
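For contrast, here is a sketch of what "tools for working with enumerations" can look like, using Python's standard `enum` module as an illustration (the `Color` type is a made-up example):

```python
from enum import Enum


class Color(Enum):
    RED = 1
    GREEN = 2
    BLUE = 3


names = [color.name for color in Color]   # iterate over all members in definition order
by_value = Color(2)                        # look a member up by its value
by_name = Color["BLUE"]                    # look a member up by its name
count = len(Color)                         # number of members
```

None of these operations had to be written by the author of `Color`; they come with the enumeration type itself.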

Records are so varied. If only we could learn how to use them

But C had another real user data type, although it had been invented much earlier, back in Cobol, which was published in the early 60s (the language itself was created in 1959).

This is the record; in C it is called a structure (struct).

A record is nothing more than a group of heterogeneous data types.

Records come with their own toolkit. C, for example, provides less than the standard minimum for working with records (for example, only one-way initialization).

With records it is easy not to imitate but to create real lists and trees.
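A sketch of a real tree built from records, written in Python with a dataclass standing in for a C struct. The names `Node`, `insert`, and `in_order` are hypothetical and chosen for this example only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A record with heterogeneous fields: a value and two optional child links."""
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(node, value):
    """Insert `value` into the binary search tree rooted at `node`; return the root."""
    if node is None:
        return Node(value)
    if value < node.value:
        node.left = insert(node.left, value)
    else:
        node.right = insert(node.right, value)
    return node


def in_order(node):
    """Return the values of the tree in ascending order."""
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)
```

Note that `insert` and `in_order` are free functions living outside the record: the record holds the data, and its toolkit still has to be written separately.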

So is everything in the language at last?

No, and no again.

It is not enough simply to have custom data types. It is not enough to have tools for working with user-defined data types as such. Something else comes to the fore.

No one provides any tools for data types that have not been created yet!

Languages with record support now fell into a trap similar to the one Lisp had fallen into: you can create your own data, but you can work with it only through a basic set of tools.

In Lisp the situation is worse, though: there everything is an S-expression, whereas in C, Cobol, and others the record is an independent type, and it has its own toolkit, however small.

Fortunately, the way out of this impasse had long been known: imitating work with user-defined data types by means of functions.

It was thanks to records / structures (including those in C) that programmers came to realize the importance of user-defined data types.

At the same time, the acute shortage of tools for working with not-yet-created data types became plain.

And there was an answer. Surprising as it may sound, it had existed for several years before C was created; it lived in Europe and was called Simula (1967). And when C began to choke on the lack of tools for user-defined data types, C++ (in 1983) took the best of Simula and applied C syntax to it.

Objects can do everything. To themselves and on top of themselves

Objects are another kind of user data type, with much greater capabilities than the record.

This let them win truly wild popularity.

Ironically, just as Simula came out several years before C, which had no objects, so Smalltalk declared its "everything is an object" paradigm in 1980, a few years before C++.

But Smalltalk did not win great popularity. The paradigm had to wait until C++ reached stagnation, and only after that, in 1995, could Java proudly raise the "everything is an object" banner overhead again.

What is so good about objects, given that they are not so different from records? In essence they are the same records with methods added.

First, the tools for working with objects as such are much richer and more powerful than the tools for working with structures.

And second, there were no tools for working with objects that had not been created yet ... either.

Stop, one may ask, where is the "second" here, if neither objects nor records have tools for not-yet-created data types? And yet there is a second! For records this toolkit had to be imitated, while for objects it can simply be implemented inside the object itself!

And if a user-defined data type suddenly had to be changed slightly, it was convenient to use the object toolkit to create a descendant by inheritance and correct the behavior there.
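The two points above, a toolkit hidden inside the object and a "slight" change made through a descendant, can be sketched like this in Python. The `Account` and `OverdraftAccount` classes are a made-up example.

```python
class Account:
    """The toolkit (withdraw) lives inside the data type itself."""

    def __init__(self, balance=0):
        self.balance = balance

    def withdraw(self, amount):
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


class OverdraftAccount(Account):
    """A descendant that changes one rule and inherits everything else."""

    def __init__(self, balance=0, limit=100):
        super().__init__(balance)
        self.limit = limit

    def withdraw(self, amount):
        # Only the check differs from the parent: the balance may go down to -limit.
        if amount > self.balance + self.limit:
            raise ValueError("overdraft limit exceeded")
        self.balance -= amount
```

With an imitation based on free functions, the same change would have meant rewriting or duplicating every function that touches the record.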

The boom and the ubiquitous use of objects have by now led to stagnation.

What prevents objects from developing further?

As we remember, implementing the toolkit of a new object is entirely the programmer's responsibility and not the language's, so the level of code reuse is not as high as it could be.

No less important is the growing closedness. An object does everything itself, although it almost never needs to do everything. And conversely, being able to do everything for itself, the object will do nothing for others. It could, but it will not.

Interfaces and mixins (mixins, traits) help to solve part of the problem.

Interfaces were first introduced by Delphi (in 1986, back when it was still Object Pascal), and later by Java and C#. That is understandable: these were leaders among object languages.

What is surprising is that mixins / traits appeared when objects were being attached to Lisp (Flavors, CLOS; CLOS is part of Common Lisp), and were later added to various languages.

However, even such abstract helpers as interfaces and mixins do not always help, for example with an old object marked as final. The problem can be partly solved by hybridization based on prototype inheritance (it first appeared in Self, a dialect of Smalltalk, in the mid-1980s, and gained popularity mainly thanks to JavaScript a decade later), but this method has its own drawbacks compared with class inheritance.
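A minimal sketch of a mixin, assuming Python's multiple inheritance as the mechanism (other languages express mixins and traits differently). The names `JsonMixin`, `Point`, and `JsonPoint` are hypothetical.

```python
import json


class JsonMixin:
    """Reusable behavior that knows nothing about the class it is mixed into."""

    def to_json(self):
        # vars(self) is the instance's attribute dictionary.
        return json.dumps(vars(self), sort_keys=True)


class Point:
    def __init__(self, x, y):
        self.x = x
        self.y = y


class JsonPoint(JsonMixin, Point):
    """Point plus the mixed-in serialization; no code is repeated."""
```

The sketch also shows the limitation mentioned above: the mixin is attached by creating a new class, so it cannot help with a class that forbids inheritance.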

Support for metaclasses is also interesting. They were built into Smalltalk in 1980 and are now supported by some languages, for example Python. Metaclasses work with classes as objects (a recursive approach of sorts). This greatly improves the tools for working with objects as objects.
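Since Python is named as an example, here is a small sketch of a Python metaclass that treats classes as objects and registers each one as it is created. The names `Registry`, `Shape`, and `Circle` are hypothetical.

```python
class Registry(type):
    """A metaclass: its instances are classes, and it records each one by name."""

    classes = {}

    def __new__(mcls, name, bases, namespace):
        cls = super().__new__(mcls, name, bases, namespace)
        mcls.classes[name] = cls   # the freshly created class is an ordinary object here
        return cls


class Shape(metaclass=Registry):
    pass


class Circle(Shape):   # subclasses inherit the metaclass automatically
    pass
```

After these definitions, `Registry.classes` holds both `Shape` and `Circle`, although neither class contains a single line of registration code.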

Nowadays it is not the creation of a new object that comes to the fore but a competent approach to designing a system with design patterns.

What will happen next? The question is rhetorical.

Are there alternatives to such powerful custom data types as objects and structures? There are, and some are even better than objects! They are worth a closer look to understand how objects might develop in the future.

Christ and Krishna together. Imperative and functional can coexist

Where should we look for alternatives?

Declarative languages (like HTML) and logic languages (like Prolog) currently offer no alternatives. They rest on the idea that the compiler / interpreter works in place of the programmer.

So here one must either

1) simply give up trying to add user-defined data types and enter a symbiosis with another language (for example, HTML + JavaScript), or

2) bring in other programming paradigms.

Speaking of bringing in other paradigms: one might ask what good a multi-paradigm language is. Python (1991) and Ruby (1995) did not share that doubt.

And they were right. Where something is easy to do the Lisp way, the functional paradigm is convenient; where simplicity is needed, there is procedural style; for everything else, objects do well.

It would seem that no new user data types were added, and yet the efficiency of writing code increased greatly.

By 2011 lambda functions, which originated in Lisp, had reached even C++.

Scala has been growing out of Java since 2003, on the premise that objects are also functions.

Metaprogramming is good. But as a rule it either extends the built-in data types (makes them more flexible), or generates new code, or simply creates generalizations, and it works with built-in data types. So far, only with built-in ones. But even without user-defined data types, metaprogramming is the future (and in many ways already the present) when it comes to getting rid of programming routine.

ADTs are so beautiful that you want to frame them and hang them on the wall. And sometimes to leave them there and never touch them again

Wow, how menacing that sounds!

Standard ML, in 1990, was the first to introduce algebraic data types (ADTs).

Algebraic data types are one of the most powerful kinds of custom data types. They were discovered by the mathematicians Hindley and Milner in the lambda calculus.

An ADT is "all in one": unit types (like null), enumerations (like enum, bool), protected base types (much as a resource relates to a reference), switching types (something like union in C), list-like structures, tree-like structures, functions, tuples, records, object-like structures (but not objects). And any combination of all of these.

That is much better than objects! At least from the point of view of creating all sorts of user-defined data types: yes, better, of course.
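Python has no native algebraic data types, so the following is only an approximation of the idea with frozen dataclasses and a `Union`: each class is a product of fields, and `Expr` is the sum (the "switching type") of the alternatives. The names `Num`, `Add`, `Mul`, `Expr`, and `evaluate` are hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Num:
    value: int


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


@dataclass(frozen=True)
class Mul:
    left: "Expr"
    right: "Expr"


# The sum type: an expression is exactly one of these alternatives.
Expr = Union[Num, Add, Mul]


def evaluate(expr):
    """Evaluate an expression tree by case analysis on the alternative."""
    if isinstance(expr, Num):
        return expr.value
    if isinstance(expr, Add):
        return evaluate(expr.left) + evaluate(expr.right)
    if isinstance(expr, Mul):
        return evaluate(expr.left) * evaluate(expr.right)
    raise TypeError(f"not an expression: {expr!r}")
```

A tree structure, a record, and a switching type are combined here in one small definition, which is the "all in one" quality described above.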

From other points of view, however, this data type is very, very poor, both in Standard ML and in the later OCaml.

First, the tools for working with ADTs as ADTs are not much richer than those for records, and significantly poorer than those for objects.

Second, there are no tools for working with data types that have not been created yet. And, unlike with objects, there is nowhere to hide a hand-written toolkit. It can only be imitated.

Caml, as a descendant of Standard ML, did not think twice, added objects to itself, and became OCaml (by 1996). In parallel, OCaml began to develop an alternative, more functional answer to where the implementation of a user data toolkit could be hidden: parametric modules. Over 15 years OCaml built a decent functional replacement for objects. There is another interesting twist: as we remember, parametric modules (functors) were introduced to get rid of the lack of tools for not-yet-written ADTs, but nowadays the parametric modules themselves differ little from ADTs, which means that now there are no tools for not-yet-written ... modules. Recursion, plain and simple!

Over the same 15 years OCaml found another solution to the lack of tools for not-yet-created data types, and named it just that: the variant. This is a different, non-ADT data type, although it looks similar. It is a switching data type that can also be shared in any proportions, including by mixing (ADTs can be neither shared in proportions nor mixed). It is good that proportional sharing is possible (with objects it can be achieved only through interfaces or traits, and even then not fully); it is often tempting to mix (something objects cannot do); it is bad that any proportions are allowed. There is still work to do here. This data type is underdeveloped. One simple way to develop it would be to add set operations to the so-far meager toolkit for working with variants.

ADTs with type classes look rough, but they are capable of a lot.

In the early 90s another language was developed on the basis of Standard ML and several academic languages; it was first standardized only in 1998. It was Haskell.

It had the same algebraic data types as Standard ML and OCaml. And the same meager set of tools for working with ADTs as ADTs. But Haskell had (and still has) something that no kind of user data type had had before: a toolkit for custom data types that have not been written yet. These are type classes (class).

Type classes themselves are something like interfaces or mixins, and closest of all to roles in Perl; only the way classes are introduced differs from the way interfaces / traits are introduced.

Simple type classes resemble interfaces; more complicated ones resemble mixins.

For complex classes, moreover, the analogy with a mixin no longer fits. A complex class does not require all of the data to be connected: it is enough to attach at the entry points, or at one of several entry points if that is allowed.

And with an implementation of a class (instance) one can share tools not only with code written after the class was created but also with data created earlier (as if behavior could be added to finalized objects). In terms of object languages, this is achieved above all by attaching not interfaces and mixins to objects but, the other way round, objects to mixins and interfaces (roles in Perl partly do this already).
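Python has no type classes, but `functools.singledispatch` from the standard library gives a rough feel for the key property described above: an implementation can be attached to a type from the outside, after the type was written and without modifying it. This is an analogy under that assumption, not an equivalent of Haskell's mechanism; `show` and `Celsius` are hypothetical names.

```python
from functools import singledispatch


@singledispatch
def show(value):
    """The 'class': one operation with no default implementation."""
    raise TypeError(f"no 'show' implementation for {type(value).__name__}")


@show.register(int)
def _show_int(value):
    # An 'instance' for a built-in type that we could never edit ourselves.
    return f"int:{value}"


@show.register(list)
def _show_list(value):
    # This implementation reuses 'show' for the elements, whatever their type.
    return "[" + ", ".join(show(item) for item in value) + "]"


class Celsius:
    """Imagine this type was written earlier, by someone else, and is closed for changes."""

    def __init__(self, degrees):
        self.degrees = degrees


@show.register(Celsius)
def _show_celsius(value):
    # The behavior is attached to the existing type from the outside.
    return f"{value.degrees} C"
```

Neither `int`, `list`, nor `Celsius` was modified or subclassed, yet all three now support `show`, including in combinations such as a list of temperatures.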

But Haskell did not stop even there. It implemented automatic deriving of class instances for various data types.

In terms of what user-defined data types can do, this is definitely much more than what is built into objects.

Haskell is developing rapidly. Algebraic data types are already only a part of something larger.

It is now quite possible to create data families, generalized algebraic data types (GADTs), existential types, and higher-rank types.

Type classes do not stand still either. It is already possible to use multi-parameter classes, classes with functional dependencies, and classes over kinds.

The toolkit for working with ADTs as ADTs is expanding in the direction of metaprogramming.

Much of this exists as extensions to the language, and in 2014 it may enter the language standard.

History has plainly shown an urgent need for custom data types.

User data types are needed more and more, and in greater variety. With deep support for tools that can work both with user data types in general and with whatever programmers will create in the future.

As soon as it became possible to hide a hand-written toolkit inside the user data type itself, rapid growth of user data types began.

However, as history has shown, owning progressive technology is sometimes not enough for a language to become popular. The invention of objects in Simula, before the arrival of C (which did not even have objects), did not bring a breakthrough for Simula itself.

History has also shown that it pays to find what nobody notices lying under their feet. Combining several paradigms to achieve more than each does alone (Ruby, Python, ...), opening up low-level dynamic data types (C), and generalizing work with strings into work with parsers (Perl) greatly helped both these languages and programming in general.

Will Lisp-like languages find their own toolkit for data types that have not been created yet?

Will objects stop developing? If not, where will they go: down the path of Scala? Ruby? Perl?

When will the golden age of algebraic data types come? Does the variant data type have any chance of developing?

Will Haskell's type classes be borrowed by other languages? Where will Haskell go?

Time will tell and answer these questions.

I want to make a reservation right away, by users, programmers are understood as people who write code in these languages. Well, those who accompany this code or just read.

User data types are data types that can be created by the user based on what is available in the language.

Users want to have these types of data.

Users wanted to be able to compile data the way they want it. Wanted, wanted, and surely will want. More and more, more diverse and stronger.

That is why it is useful to follow the development of user-defined data types in programs and programming languages.

No user data types

There was once no user data type. Somehow got out

')

At the dawn of the computer era, the languages were not so hot - machine: either you obey their dictates (and the dictates are simple: either you use low bit numbers in binary-decimal system of calculation (or, what processor works) and processor commands) or not.

We will not touch those "dark ages".

One thing is to say - there was no user data type there, but programmers somehow survived and somehow wrote programs.

Built-in data types

Built-in types are so comfortable! As long as you use them as planned by the developers ...

The first more or less normal language was Assembler (in fact, there are many assemblers, we are talking about the backbone of languages, which appeared in the 50s). In addition to readability, he brought a lot of new things in terms of user data.

The largest and undeniable achievement is the ability to create variables! Since then, the possibility has been inserted in almost all languages - the ability to create variables.

The second no less major achievement of the assembler, made of good intentions, is an attempt to insert into the language all data types (of those that the programmer might need) directly into the language.

Well, the rest of the little things - first of all, the ability to record numbers not only in binary, but also in hexadecimal, octal, binary-decimal systems.

Then it seemed, well, what else can the user need?

Years passed, and the need not only for high-level abstractions, but also for user data types grew.

And then struck 1957th year with FORTRAN.

The written code on it looked almost like modern languages, although its version on punch cards may shock people who want to read it.

Fortran gave everything that is needed ... for calculating the flight of ballistic missiles - such data as integers (of type int), with a comma (of type float), and complex.

Strings? Who needs them on punch cards? They will appear in FORTRAN later - real lines only after 20 years, and their imitation - in 10 (together with the logical data type).

And Fortran gave an almost-real user data type as an array (although its use is somewhat different from the modern one), we'll talk more about this in the chapter on Group User Data.

But users are few - they want more and more user data.

And now it appears - Algol, already in 1958, the programs on which are easily read and in our days.

That's just Algol brought the basis of what is now everywhere - Boolean types, string, a variety of integer types and numbers with a comma. A little later, Fortran will apply it all, but Algol was a pioneer.

It would seem - all appetites are satisfied, what other types are needed by users? Yes, everything is already implemented - just take it and use it.

And then, stepping on the heels of Algola with FORTRAN, in 1958 another language appeared, quite different from the language - Lisp.

Lisp can do unimaginable functions. Just how to live with it?

He gave another, completely new data type, real user data types - functions (of the C-expressions type), which had firmly begun to enter all modern languages only from the beginning of the 21st century (first of all, thanks to the multiparadigm languages Python and Ruby). If we take into account that Lisp allows to operate with macros - something like programming with eval (in 58), it is not surprising that the world was not ready for it.

But is the world ready for Lisp now? Probably not.

Let us focus on why. Lisp, like any other functional language, works with strongly interconnected objects, somewhat like the meshed gears of a mechanical clock. Unlike in imperative languages, jamming any one gear stops the whole mechanism. Because of this, the demands on the language, including on user data types, are much stricter. The problems that arose in Lisp immediately became acute in imperative languages only by the end of the 80s.

Lisp makes it possible to build any S-expression. But it offers only one toolkit for them: the standard, simple toolkit for working with S-expressions.

It turns out that you can define any user data, but you can work with it only as with primitives. The development of Lisp-like languages has shown that no good tools have yet been found for S-expressions that have not been written yet.

Not everyone stayed satisfied for long. Yet the development of user data types slowed down for almost a decade.

If something was wanted, the data type was built directly into the language. But in those days languages did not evolve and were not updated as quickly as they are now, so programmers mostly resorted to imitating custom data types.

An imitation differs little from a real data type in functionality. In those days imitation was based mainly on emulation by functions (procedural style).

The main difference from real user types is this: if you want to change the data type “slightly”, you have to rewrite all the functionality. Nobody wanted to do that.

Therefore, imitations had to become more flexible.

What follows is something everyone knows; what matters here is not the imitation technique itself, but the angle from which it is viewed.

Sometimes you need to work only with the opportunities that are

First came functionality configured by flags. This is not the easiest method, but it strongly resembled assembler, which was still well known at the time.

The essence of passing flags is simple: a number is passed as a parameter, and its binary representation is read as a series of flags. Despite their widespread use, most languages still have no dedicated data type for a series of flags. Instead, a good substitute was found: named constants and bitwise operations that resemble the logical ones.
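As a minimal sketch of this technique in Python (the option names and the `describe` function are made up for illustration), named constants each occupy one bit, and bitwise operations combine and test them:

```python
# Hypothetical option flags: each named constant occupies one bit.
OPT_READ = 0b001
OPT_WRITE = 0b010
OPT_APPEND = 0b100


def describe(flags):
    """Return the names of the options switched on in `flags`."""
    names = []
    if flags & OPT_READ:
        names.append("read")
    if flags & OPT_WRITE:
        names.append("write")
    if flags & OPT_APPEND:
        names.append("append")
    return names


# Bitwise OR packs several flags into the single number that is passed.
mode = OPT_READ | OPT_APPEND
print(describe(mode))
```

The whole configuration travels as one integer, which is exactly why the approach felt natural to people coming from assembler.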

The simplest method, still in wide use everywhere, is configuration by parameters: the more there is to configure, the more parameters are passed. It has one drawback: it is too easy to confuse the order of the parameters. For this reason, such functions usually take no more than 4-5 arguments.

For more arguments, once real user data types (primarily group and composite ones) had developed, it became possible to pass a single complex argument: the same configuration, only arranged vertically rather than horizontally.
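The "vertical" configuration can be sketched in Python roughly like this. `PlotOptions` and `render` are hypothetical names invented for the example; the point is only that many settings travel as one named bundle instead of a long positional list:

```python
from dataclasses import dataclass


@dataclass
class PlotOptions:
    """Hypothetical settings bundle passed as a single argument."""
    width: int = 640
    height: int = 480
    title: str = ""
    grid: bool = False


def render(options):
    # A stand-in for real work: just report what would be drawn.
    grid = "with grid" if options.grid else "no grid"
    title = options.title or "untitled"
    return f"{title} {options.width}x{options.height}, {grid}"


print(render(PlotOptions(title="Sales", grid=True)))
```

Because every field is named and has a default, the caller cannot mix up the order of the parameters.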

The further development of this method is the creation of embedded domain-specific languages (DSLs) for configuring functions.

The third way to make imitations flexible is the invention of handles (handlers), although they were not called that at the time, and often stood in for true handles: they were numbers and strings rather than references or pointers.

The era of built-in data types was drawing to a close.

But then came 1972, and with it ... C. The era of the dinosaurs (built-in data types) lasted another decade, although user data types began to win their place in the sun.

Including in C itself.

But for now, let us return to one more built-in data type, which became one of the reasons for the growing popularity of the language. C brought back a low-level kind of data that existed in assembler and had been completely forgotten in the first high-level languages: dynamic types. First of all, these are references and pointers. They come with a special service value: null.

Dynamic data types are as inconspicuous as master keys, but what secret rooms they can open!

A reference can be seen as one way of implementing a user data type such as the synonym (alias).

A further development of synonyms can be found in PHP with its variable variables, where you can obtain the value of a variable, or call a function, whose name is stored in the value of another variable.

In C, a function can be called directly, or it can be passed as a callback.

In addition, dynamic data types help speed up the execution of compiled code considerably.

Beyond these advantages, dynamic data types have another huge plus: with them it is quite simple to implement something that was not built into the language itself. There is one catch: whatever is written with them can be accessed only with the tools for working with dynamic data. But a workaround from the imitation days is well known: hide the data behind functions and return a reference or pointer to what was created, that is, a handle. Handles are one of those data types that go by quite different names in different languages.

For example, in PHP they are called resources, and in Erlang, ports and process identifiers. Cobol has such data types as the file and the picture.

However, dynamic data types have not only advantages. They have drawbacks too, sometimes very serious ones.

The more freedom a language gives in using dynamic data types:

1) the more possibilities there are for creating something that was not built into the language (and not necessarily positive ones)

2) the less the compiler interferes with the user's actions, and all responsibility for them falls on the programmer

3) the more insecure the code becomes

4) the greater the risk of injections into the namespace

5) the less the garbage collector intervenes, and all responsibility falls on the user

Further history has shown that the creators of later languages (or of additions to existing ones) tried to strike a balance between safety and capability when adding dynamic data types.

The end of the 70s was approaching, and these basic built-in data types began to recede to the periphery and into routine, giving way to real user data types.

However, reality sometimes presents surprises.

Who knows how much more can be found in the good old, long-understood data types?

Sometimes you just need to see something new where everything has long been known, as in this sorting of M&Ms.

Then, in the late 70s, the AWK scripting language appeared (building on the grep utility), and a decade later, in 1987, a language based on it appeared: Perl. Among other things, it had (and still has) such an exotic built-in data type as regular expressions.

Perl helped to look at an old data type, the string, from a new angle. The string toolkits of earlier languages could be regarded as simplified parsers.

Regular expression languages have proven to be very flexible and extremely powerful tools for working with character data.
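To illustrate the "regular expression as a small parser" view, here is a short sketch using Python's standard `re` module (the sample text and the date format are made up for the example):

```python
import re

# Named groups turn the pattern into a small declarative parser.
DATE = re.compile(r"(?P<year>\d{4})-(?P<month>\d{2})-(?P<day>\d{2})")

text = "Backup made 2011-03-04, restored 2011-03-09."
dates = [m.groupdict() for m in DATE.finditer(text)]
print(dates)
```

One line of pattern replaces the hand-written loop over characters that an early string toolkit would have required.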

Group data types

Some groups are large. We want to work with them quickly.

In essence, a group data type is a collection of things that already exist in the language, as a rule a homogeneous (monomorphic) grouping. Often these data types are not user-defined but built in; however, they are sometimes so flexible that this is enough.

Fortran already supported group data types in the form of arrays, although they looked a bit different than they do now. Arrays very similar to modern ones were already present in Algol. Pascal had sets (set).

Lisp had lists (list).

Then came hash tables, associative arrays, vectors, heaps, queues, stacks, trees ...

Some were built in; some were imitated or created using custom data types.

The further development of group data types split into 2 different branches.

1) Having to use a separate toolkit for each group data type was unsatisfying; programmers wanted to work with all of them uniformly. The main tools for this in imperative languages are collections and iterators, mostly added in the early 2000s.

2) In the 80s, as the volume of data grew, the need for richer tools for working with group data types grew by leaps and bounds. Databases appeared, and with them queries and query languages. In the mid-80s the structured query language (SQL) became dominant. Just as parsers did for strings, query languages made it clear that the toolkit previously used for group data types can be viewed as a primitive query language. Databases are, as a rule, kept outside the language, which only provides ways of working with them, so they cannot be considered full-fledged user-defined data types. Given their flexibility, though, this hardly matters.
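The first branch, uniform work with different group types through iterators, can be sketched in Python as follows. `total_length` is a hypothetical helper; it knows nothing about the container it receives except that it can be iterated:

```python
def total_length(items):
    """Sum the lengths of the elements of any iterable of strings."""
    return sum(len(item) for item in items)


words_list = ["set", "list", "array"]
words_tuple = ("set", "list", "array")
words_dict = {"set": 1, "list": 2, "array": 3}  # iterating a dict yields its keys
words_gen = (w for w in words_list)             # a lazy generator

for group in (words_list, words_tuple, words_dict, words_gen):
    print(type(group).__name__, total_length(group))
```

The generator expression inside `total_length` also hints at the second branch: a comprehension is already a very small query over a group of data.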

Real user data types

In the era of built-in data types, various languages supported some very exotic built-in types.

In C, for example, one such type was the enumeration. It was first introduced by Pascal (1970), which called it a scalar type.

What an enumeration is can easily be explained even to children, on their fingers.

The enumeration is a genuine user data type! It would seem that C deserves a monument for it. But no, a gravestone at most.

The point is that users can define any enumeration they like, but C gives them nothing to work with it (Pascal had a basic set of tools). Nothing at all. You can create enumerations, but you cannot work with them.

Since C was in the mainstream, few people wanted to add this data type to other languages. Only C++11 brought at least some tools for working with enumerations.

This example, like the development of Lisp, showed how important it is to have not only user-defined data types, but also tools for working with them.
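For contrast, here is a sketch of what "tools for working with enumerations" can look like, using Python's standard `enum` module rather than C (the `Color` type is just an example):

```python
from enum import Enum


class Color(Enum):
    RED = 1
    GREEN = 2
    BLUE = 3


# Tools that come with the type: iteration, lookup by name and by value.
names = [c.name for c in Color]
by_name = Color["GREEN"]
by_value = Color(3)
print(names, by_name, by_value)
```

None of these operations had to be written by the user; they arrive together with the enumeration itself.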

Composite data types

Records are so varied. We still need to learn how to use them.

But C had another real user data type, although it was invented much earlier, back in Cobol, published in the early 60s (the language itself was created in 1959).

This is the record; in C it is called a structure (struct).

A record is nothing more than a grouping of heterogeneous data types.

Records come with their own toolkit. C, for example, provides less than the standard minimum for working with records (for example, only one-way initialization).

With records it is easy not to imitate, but to actually create real lists and trees.
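As a sketch, here is a binary search tree built from a record. A Python dataclass stands in for a C struct, and the names `Node`, `insert` and `in_order` are illustrative only:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A record with heterogeneous fields: a value and two links."""
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(node, value):
    """Insert `value` into the binary search tree rooted at `node`."""
    if node is None:
        return Node(value)
    if value < node.value:
        node.left = insert(node.left, value)
    else:
        node.right = insert(node.right, value)
    return node


def in_order(node):
    """Return the stored values in ascending order."""
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)


root = None
for v in (5, 2, 8, 1):
    root = insert(root, v)
print(in_order(root))
```

Note that the toolkit (`insert`, `in_order`) still lives outside the record as free functions.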

So, is everything in the language at last?

No, and no again.

Having custom data types is not enough. Having tools for working with user-defined data types as such is not enough either. Something else comes to the fore.

Nobody provides any tools for data types that have not been created yet!

Languages with record support fell into a trap similar to the one Lisp had fallen into: you can create your own data, but you can work with it only through a basic set of tools.

Only in Lisp the situation is worse: there everything is an S-expression, while in C, Cobol and others the record is an independent type that has its own, albeit small, toolkit.

Fortunately, the way out of this impasse had long been known: imitating the toolkit for user-defined data types with functions.

It was thanks to records / structures (including those in C) that programmers came to realize the importance of user-defined data types.

At the same time, the acute shortage of tools for not-yet-created data types became obvious.

And there was an answer. Surprising as it may sound, it had existed several years before C was created; it came from Europe and was called Simula (1967). And when C began to choke on the lack of tools for user-defined data types, C++ (in 1983) took the best from Simula and applied C syntax to it.

Objects

Objects can do everything: by themselves and to themselves.

Objects are another kind of user data type, with far greater capabilities than records.

This is what won them wild popularity.

Ironically, just as Simula came out a few years before C, which had no objects, so Smalltalk proclaimed the “everything is an object” paradigm in 1980, a few years before C++.

But Smalltalk did not win great popularity. The idea had to wait until C++ reached stagnation; only then, in 1995, was Java able to raise the “everything is an object” banner again.

What is so good about objects, given that they are not so different from records? In essence they are the same records with methods added.

First, the tools for working with objects themselves are much richer and more powerful than the tools for working with structures.

And second, there were no tools for working with objects that have not yet been created ... either.

Wait, one may ask, how is this a “second” advantage, if neither objects nor records have tools for not-yet-created data types? And yet it is! For records this toolkit had to be imitated, while for objects you can simply implement it inside the object itself!

And if the user-defined data type suddenly needed a slight change, it was easy to create a descendant with the objects' own toolkit, inheritance, and correct the behavior there.
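Both points, the toolkit hidden inside the type and the "slight change" made in a descendant, can be sketched in Python. `Stack` and `BoundedStack` are hypothetical classes written for this example:

```python
class Stack:
    """A user-defined type that carries its own toolkit as methods."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        return self._items.pop()

    def __len__(self):
        return len(self._items)


class BoundedStack(Stack):
    """A descendant that changes one behaviour: pushes are capped."""

    def __init__(self, limit):
        super().__init__()
        self._limit = limit

    def push(self, item):
        if len(self) >= self._limit:
            raise OverflowError("stack is full")
        super().push(item)


s = BoundedStack(2)
s.push("a")
s.push("b")
print(len(s), s.pop())
```

Only `push` is rewritten; `pop` and `__len__` are reused as they are, which is precisely what the function-based imitations could not offer.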

The boom and ubiquitous use of objects has by now led to stagnation.

What prevents objects from developing further?

As we remember, implementing the toolkit of a new object is entirely the responsibility of the programmer, not of the language, so the level of code reuse is not as high as it could be.

No less important is the growing closedness of objects. An object will do everything itself, although it is almost never necessary for it to do everything. And conversely, able to do everything for itself, the object will do nothing for others. It could, but it won't.

Part of the problem is solved by the introduction of interfaces and mixins (mixins, traits).

Interfaces were first introduced by Delphi (in 1986, back when it was still Object Pascal), and later by Java and C#. This is understandable: they were the leaders among object languages.

What is surprising is that mixins / traits appeared during attempts to attach objects to Lisp (Flavors, CLOS; CLOS is part of Common Lisp) and were later added to various languages.
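The difference between the two helpers can be sketched in Python, where an abstract base class plays the role of an interface and an ordinary class used through multiple inheritance plays the role of a mixin. All names here are invented for the example:

```python
from abc import ABC, abstractmethod


class Shape(ABC):
    """Interface: declares what must exist, implements nothing."""

    @abstractmethod
    def area(self):
        ...


class DescribeMixin:
    """Mixin: ready-made behaviour that relies on the host's area()."""

    def describe(self):
        return f"{type(self).__name__} with area {self.area()}"


class Square(DescribeMixin, Shape):
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side ** 2


print(Square(3).describe())
```

The interface only obliges `Square` to provide `area`, while the mixin hands it a finished `describe` for free.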

However, even such abstract helpers as interfaces and mixins do not always help, for example with an old object marked as final. The problem can be partly solved by hybridization based on prototype inheritance (introduced by the Self language, a dialect of Smalltalk, in the mid-1980s, and popularized primarily by JavaScript a decade later), but this method has drawbacks of its own compared with class inheritance.

Of interest is the support for metaclasses, which were built into Smalltalk in 1980 and are now supported by some languages, for example Python. Metaclasses treat classes as objects (a recursive approach). This greatly improves the tools for working with objects as objects.
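Since Python is named as an example, here is a minimal sketch of a metaclass there. `Registry`, `Plugin` and `CsvPlugin` are hypothetical names; the metaclass simply records every class created with it:

```python
class Registry(type):
    """Metaclass: its __init__ runs whenever a class using it is created."""

    classes = {}

    def __init__(cls, name, bases, namespace):
        super().__init__(name, bases, namespace)
        Registry.classes[name] = cls


class Plugin(metaclass=Registry):
    pass


class CsvPlugin(Plugin):
    pass


# Classes are themselves objects, and their type is the metaclass.
print(type(CsvPlugin) is Registry, sorted(Registry.classes))
```

The registration code was written once, before `CsvPlugin` existed, yet it applies to that class automatically.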

Nowadays it is not the creation of a new object that comes to the fore, but competent system design using design patterns.

What will happen next? The question is rhetorical.

Are there alternatives to such powerful custom data types as objects and structures? There are, and some are even better than objects! They are worth a closer look in order to understand how objects may develop in the future.

The search for alternatives

Christ and Krishna together. The imperative and the functional can coexist.

Where to look for alternatives?

Declarative languages (like HTML) and logic languages (like Prolog) currently offer no alternatives. They are built on the idea that the compiler / interpreter does the work instead of the programmer.

And here one must either

1) simply give up trying to add user-defined data types and enter into symbiosis with another language (for example, HTML + JavaScript), or

2) bring in other programming paradigms.

Speaking of bringing in other paradigms: what good, it would seem, could come of multi-paradigm languages? Python (1991) and Ruby (1994) thought otherwise.

And they were right. Where things are easy to do Lisp-style, it is convenient to use the functional paradigm; where simplicity is needed, there is the procedural style; for other cases, objects do well.

It would seem that no new user data types were added, yet the efficiency of writing code increased greatly.

And by 2011, lambda functions from Lisp had arrived even in C++.

Scala has been growing out of Java since 2003, built on the premise that objects are also functions.

Metaprogramming is good. But, as a rule, it allows you either to extend the built-in data types (make them more flexible), or to generate new code, or simply to create generalizations; it works with built-in data types. So far only with built-in ones. But even without user-defined data types, metaprogramming is the future (and in many ways already the present) when it comes to getting rid of programming routine.

Algebraic Data Types

ADTs are so beautiful that you want to frame them and hang them on the wall. And sometimes leave them there and never touch them again.

Wow, how menacing it sounds!

Standard ML, in 1990, was the first to introduce algebraic data types (ADT).

Algebraic data types are among the most powerful custom data types. They were discovered by the mathematicians Hindley and Milner in lambda calculus.

An ADT is “all in one”: unit types (like null), enumerations (like enum, bool), protected base types (like a resource as opposed to a reference), variant types (something like union in C), list-like structures, tree-like structures, functions, tuples, records, object-like structures (but not objects). And any combination of all of these.
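Python has no algebraic data types as such, so the following is only an imitation of the idea, under that assumption: a few frozen dataclasses act as the alternatives, a `Union` names their sum, and a function does the case analysis that ML would express with pattern matching. `Figure`, `Circle`, `Rect`, `Empty` and `area` are invented for the example:

```python
import math
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Circle:
    radius: float


@dataclass(frozen=True)
class Rect:
    width: float
    height: float


@dataclass(frozen=True)
class Empty:
    """A unit-like alternative that carries no data."""


# The "sum": a Figure is exactly one of the alternatives above.
Figure = Union[Circle, Rect, Empty]


def area(figure):
    """Case analysis over the alternatives, one branch per constructor."""
    if isinstance(figure, Circle):
        return math.pi * figure.radius ** 2
    if isinstance(figure, Rect):
        return figure.width * figure.height
    if isinstance(figure, Empty):
        return 0.0
    raise TypeError(f"not a Figure: {figure!r}")


print(area(Rect(2, 3)), area(Empty()))
```

In Standard ML or OCaml the compiler would additionally check that no alternative is forgotten; here that guarantee is replaced by the final `raise`.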

That is much better than objects! At least from the standpoint of creating diverse user-defined data types, yes, certainly better.

From other points of view, however, this data type is very, very poor, both in Standard ML and in the later OCaml.

First, the tools for working with ADTs as ADTs are not much richer than those for records, and significantly poorer than those for objects.

Second, there are no tools for working with data types that have not been created yet. And, unlike with objects, there is nowhere to hide a home-made toolkit. It can only be imitated.

Caml, a descendant of Standard ML, without thinking twice added objects to itself and became OCaml (by 1996). In parallel, OCaml began to develop an alternative, more functional place to hide the implementation of a user data toolkit: parametric modules. Over 15 years OCaml built a decent functional replacement for objects. There is an interesting twist here: as we remember, parametric modules (functors) were introduced to solve the lack of tools for not-yet-written ADT data, but parametric modules themselves now differ little from ADTs, which means that there are now no tools for not-yet-written ... modules. Pure recursion!

Over the same 15 years OCaml found another solution to the lack of tools for not-yet-created data types, and named it accordingly: the variant. This is a different, non-ADT data type, although it looks similar. It is a variant (switching) data type whose alternatives can at the same time be shared in any proportion, and even mixed (the alternatives of an ADT can be neither shared in part nor mixed). It is good that partial sharing is possible (with objects this can be achieved only through interfaces or traits, and even then not completely), and mixing is often tempting (with objects it cannot be done); what is bad is that any proportion is allowed. There is still work to be done: this data type is underdeveloped. One simple way forward would be to add set operations to the so far meager toolkit for working with variants.

Algebraic Data Types paired with Type Classes

ADTs with type classes look rough, but they are capable of much.

In the early 90s, on the basis of Standard ML and several academic languages, another language was developed, first standardized only in 1998. It was Haskell.

It had the same algebraic data types as Standard ML and OCaml, and the same meager toolkit for working with ADTs as ADTs. But Haskell had (and still has) something that no kind of user data type had before: a toolkit for custom data types that have not been written yet. These are type classes (class).

Type classes themselves are something like interfaces or mixins, and closest of all to roles in Perl, except that implementing a class works differently from implementing an interface or trait.

Simple type classes resemble interfaces; more complicated ones resemble mixins.

Moreover, for complex classes the mixin analogy no longer fits. For complex classes there is no need to define everything: it is enough to hook in at the entry points, or at one of several entry points if that is allowed.

And by implementing a class (instance), tools can be shared not only with code written after the class was created, but also with data created earlier (as if behavior could be added to final objects). In object-language terms, this is achieved primarily by attaching not interfaces and mixins to objects but, conversely, objects to mixins and interfaces (roles in Perl already partly do this).
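Python has nothing identical to type classes, but `functools.singledispatch` from the standard library gives a rough feel for the "attach the type to the class, not the class to the type" direction. This is only a loose analogy, not how Haskell implements it; the `show` function is made up for the example:

```python
from functools import singledispatch


@singledispatch
def show(value):
    """The 'class': one operation that types may opt into later."""
    raise TypeError(f"no show() instance for {type(value).__name__}")


# 'Instances' for types that already existed and cannot be edited.
@show.register(int)
def _(value):
    return f"int:{value}"


@show.register(list)
def _(value):
    return "[" + ", ".join(show(v) for v in value) + "]"


print(show([1, 2, 3]))
```

Neither `int` nor `list` was modified or subclassed, yet both gained the new behavior after the fact, which is the property the paragraph above describes.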

But Haskell did not stop there. It implemented automatic derivation (deriving) of class instances for various data types.

This is definitely far more capability for user-defined data types than objects offer.

Haskell is developing rapidly. Algebraic data types are already only a part of something larger.

It is now quite possible to create data families, generalized algebraic data types (GADTs), existential types and higher-rank types.

Type classes do not stand still either. It is already possible to use multi-parameter classes, classes with functional dependencies and kind-polymorphic classes.

The toolkit for working with ADTs as ADTs is expanding in the direction of metaprogramming.

Much of this exists as language extensions, and may enter the language standard in 2014.

Conclusion

History has plainly shown an urgent need for custom data types.

User data types are needed more and more, and in greater variety, with deep support for tools that can work both with user data types in general and with whatever programmers will create.

As soon as it became possible to hide a home-made toolkit inside the user data itself, user data types began to grow rapidly.

However, as history has shown, owning progressive technology is sometimes not enough for a language to become popular. The invention of objects in Simula before the advent of C (which did not even have objects) did not bring a breakthrough for Simula itself.

History has also shown that it pays to find what no one sees lying under their feet. Combining several paradigms and achieving more than each does individually (Ruby, Python, ...), exposing low-level dynamic data types (C), generalizing work with strings into work with parsers (Perl): all of this greatly helped both these languages and programming in general.

Will Lisp-like languages find a toolkit for data types that have not yet been created?

Will objects stop developing? If not, which way will they go: the way of Scala? Ruby? Perl?

When will the golden age of algebraic data types come? Does the variant data type have any chance of developing?

Will Haskell's type classes be borrowed by other languages? Where will Haskell go?

Time will tell and answer questions.

Source: https://habr.com/ru/post/184558/
