Fault tolerance for Hyper-V VMs and MSSQL

Instead of a preface

Here, fault tolerance means protection within a single data center, that is, protection against the failure of one or two physical servers.

The hardware side is inexpensive: we use servers rented from a well-known German hosting provider.

On the software side, everything is either free or already licensed to us. The Microsoft Partner Program in action, so to speak.

When Windows Server 2012 came to market, it was advertised with slogans like “From server to cloud” and “Your applications always work”. That is what we will try to implement.

Of course, there is room for a holy war over which is better, VMware or Hyper-V, but that is not the topic of this post, and I will not argue it. To each their own.

Some will probably say that all of this could be moved to Azure, and it might even turn out cheaper, but we are paranoid and want

Solution Requirements

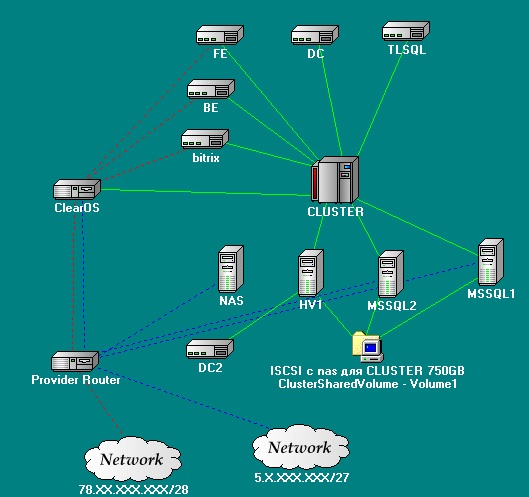

There is a project that uses:

- Database: MSSQL

- Backend: IIS

- Frontend: a PHP application


The requirements are:

- The whole stack works “always”.

- The failure of a physical server causes no downtime.

- No data is lost.

- There is some load balancing.

- The solution is scalable.

- None of the above requires convoluted software workarounds (for example, around IDENTITY columns in MSSQL).

The whole farm is hosted at a single well-known German hosting provider.

Implementation

We split the implementation into logical parts:

- Hardware requirements

- Preparatory steps

- MSSQL fault tolerance (with elements of load balancing)

- Backend and frontend fault tolerance

- Network configuration

- Fault tolerance: another milestone

Hardware Requirements

For our plans we need at least 4 servers. It is highly desirable that they be in the same data center, preferably connected to the same switch. In our case, we have a separate rack, and since the rack is ours alone, we were told that its switch is dedicated to us as well.

Servers

2 servers: a CPU with virtualization support, 32 GB of RAM, 2 × 3 TB HDD (RAID 1).

The remaining 2 servers will run SQL Server, so we change their configuration slightly: one hard disk is replaced with a RAID controller and three 300 GB SAS disks, which go into RAID 5 as fast storage for MSSQL.

Strictly speaking, this is not necessary. The fault tolerance of the server itself decreases, of course, but speed matters more to us.
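As a quick sanity check of what these disk layouts actually give you, here is a minimal sketch of the usable-capacity arithmetic. It assumes identical disks and decimal gigabytes, and it ignores controller metadata and hot spares.

```python
def usable_capacity_gb(level: int, disks: int, disk_gb: float) -> float:
    """Usable capacity of a simple RAID array built from identical disks."""
    if level == 1:
        # RAID 1 mirrors everything: usable space equals a single disk.
        if disks < 2:
            raise ValueError("RAID 1 needs at least 2 disks")
        return disk_gb
    if level == 5:
        # RAID 5 spends one disk's worth of space on distributed parity.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_gb
    raise ValueError(f"unsupported RAID level: {level}")


print(usable_capacity_gb(1, 2, 3000))  # Hyper-V nodes: 2 x 3 TB in RAID 1 -> 3000
print(usable_capacity_gb(5, 3, 300))   # SQL nodes: 3 x 300 GB in RAID 5 -> 600
```

So each SQL node gets about 600 GB of fast storage, and the array survives the loss of any one SAS disk.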

You also need a USB flash drive (more on that below).

Optional: a separate switch for the local network, although this can be added later as the project grows.

Preparatory steps

Since we are setting up a Failover Cluster, we need an Active Directory domain.

The domain will also simplify authenticating our backend against SQL Server.

We deploy the domain controller in a virtual machine.

We also need to plan the addressing of the local network.

Our DC (domain controller), of course, will not have a public IP address and will reach the outside world through NAT.

On all machines, virtual and physical alike, the primary DNS server is our domain controller.

In addition to the public addresses issued by the provider, each machine gets a second IP address from our local network range.

The ideal option, in terms of scalability, is described below.
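To make the addressing step concrete, here is a minimal sketch of a LAN address plan in which every machine uses the DC as its primary DNS server. The network `10.0.0.0/24` and the host names are hypothetical placeholders, and the sketch only models the plan. It does not configure any real interface.

```python
import ipaddress
from typing import Dict, List


def build_address_plan(network: str, hostnames: List[str], dc_name: str) -> Dict[str, Dict[str, str]]:
    """Assign sequential LAN addresses and make the DC every host's primary DNS server."""
    net = ipaddress.ip_network(network)
    if not net.is_private:
        raise ValueError(f"{net} is not a private range")
    if dc_name not in hostnames:
        raise ValueError(f"{dc_name} must be part of the plan")
    if len(hostnames) > net.num_addresses - 2:
        raise ValueError("not enough host addresses in this network")
    hosts = net.hosts()  # iterator that skips the network and broadcast addresses
    plan = {name: {"ip": str(next(hosts))} for name in hostnames}
    dc_ip = plan[dc_name]["ip"]
    for entry in plan.values():
        entry["dns1"] = dc_ip  # primary DNS: the domain controller
    return plan


# Hypothetical host names; the DC is listed first, so it takes the first address.
plan = build_address_plan("10.0.0.0/24", ["dc1", "hv1", "hv2", "sql1", "sql2"], "dc1")
for name, entry in plan.items():
    print(name, entry["ip"], "dns1 =", entry["dns1"])
```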

MSSQL fault tolerance

We will use MSSQL clustering, but not in the classical sense: instead of clustering the entire service, we cluster only the listener. Clustering an entire MSSQL instance requires shared storage, which would be a single point of failure, and our goal is to minimize points of failure. For this we use a new feature of SQL Server 2012: Always On.

This feature is described well in “SQL Server 2012 - Always On” by Andrew Fryer, which also explains in detail how to configure it.

In short, it combines two technologies: replication and mirroring. Both database instances hold identical data, but each uses its own storage.

Read-only replicas can also be used for load balancing. Before setting up read-only routing, read “Read-Only Routing with SQL Server 2012 Always On Database Availability Groups”.
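Clients should connect to the listener rather than to an individual replica. Read-only routing only kicks in when the connection declares read intent and names a database in the availability group. Here is a minimal sketch that builds such an ODBC connection string. The listener name, database name, and driver version are hypothetical. `Trusted_Connection=yes` relies on the AD domain for Windows authentication. An ADO.NET client would use `Integrated Security=SSPI` and `ApplicationIntent=ReadOnly` instead.

```python
def listener_connection_string(listener: str, database: str, read_only: bool = False, port: int = 1433) -> str:
    """Build an ODBC connection string that targets an Always On availability group listener."""
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",  # assumed driver version
        # Always connect to the listener, never to an individual replica.
        "Server": f"tcp:{listener},{port}",
        # Read-only routing needs an explicit database that belongs to the availability group.
        "Database": database,
        # Windows authentication through the AD domain, so no SQL logins are needed.
        "Trusted_Connection": "yes",
    }
    if read_only:
        # Declares read intent, so the primary can redirect the session to a readable secondary.
        parts["ApplicationIntent"] = "ReadOnly"
    return ";".join(f"{key}={value}" for key, value in parts.items())


# Hypothetical names for the listener and the project database.
print(listener_connection_string("sql-listener.corp.local", "projectdb", read_only=True))
```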

Overall, best practices on this topic are covered in detail in a Microsoft SQL Server guide by LeRoy Tuttle.

I will only stress one point: the file paths configured in the MSSQL installations (for data, logs, and so on) must be identical on all replicas.

Backend and frontend fault tolerance

We will implement this functionality by clustering virtual machines.

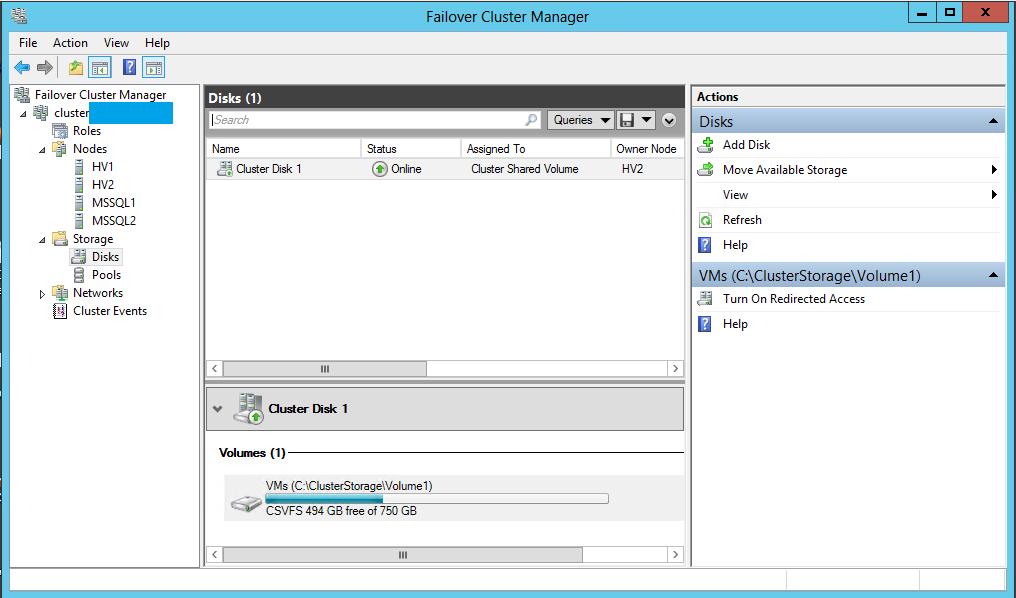

To cluster virtual machines, we need a Cluster Shared Volume (CSV).

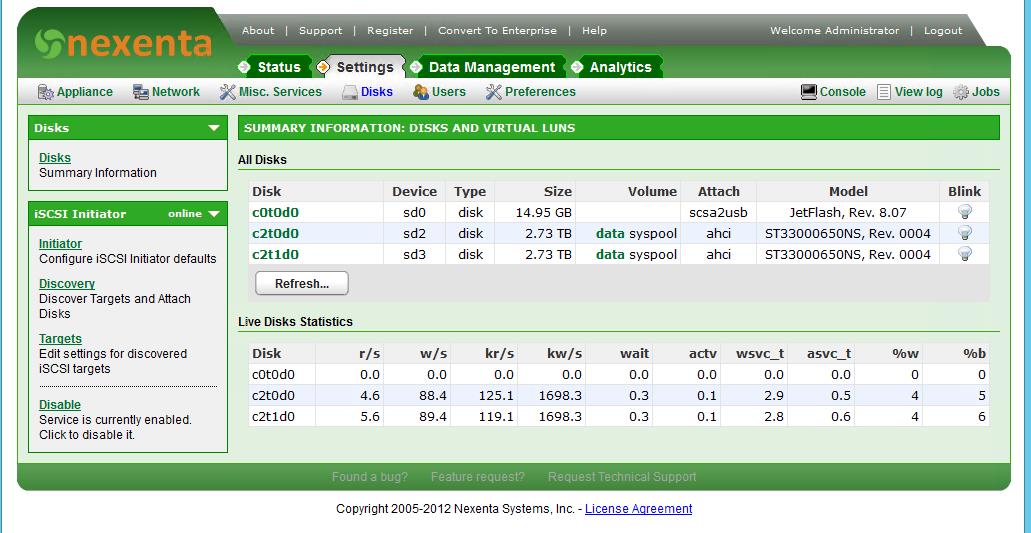

To create a CSV, we need a SAN that passes cluster validation and is also free. That turned out to be no easy task. We went through a dozen solutions, open source and otherwise, and finally found the right product: NexentaStor.

It offers 18 TB of raw capacity for free, plus plenty of protocols and features.

The only caveat: when deploying it, take into account the experience and recommendations in ULP's “Nexenta operating experience, or 2 months later”.

Unfortunately, we had to learn those lessons the hard way ourselves.

Nexenta also has a recurring “illness”: the web interface stops responding while all other services keep working normally. A fix is available at http://www.nexentastor.org/boards/2/topics/2598#message-2979

Now, more about the installation.

We install Nexenta, and the installation succeeds. We log in and, to our surprise, find that all the free space has gone to the system pool, leaving nowhere to put our data. The obvious solution would be to order more hard drives for the server and create a data pool on them, but there is another way, and this is where the flash drive comes in.

We install the system onto the flash drive (this takes about 3 hours).

After installation, we create a system pool and a data pool. We attach the flash drive to the system pool and let it synchronize. After that, the flash drive can be removed from the pool. This is described in detail at http://www.nexentastor.org/boards/1/topics/356#message-391 .

And we get this picture.

Next, we create a zvol, map it to a target, and publish it via iSCSI.

We connect it to each node of our cluster and add it to Cluster Shared Volumes.

Accordingly, in the Hyper-V settings on each cluster node, we set this volume as the location for virtual machine configuration files and virtual hard disks.

Equally important: the virtual switch names must be identical on every node.
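Mismatched switch names or storage paths only show up when a VM fails over and cannot start on the other node, so it is worth comparing the nodes up front. Here is a minimal sketch of that comparison over an inventory you collect yourself. The switch names are hypothetical. `C:\ClusterStorage\Volume1` is the usual CSV mount point, but your volume name may differ. This sketch does not query Hyper-V itself.

```python
from typing import Dict, List


def find_node_mismatches(nodes: Dict[str, dict]) -> List[str]:
    """Report virtual switches or VM storage paths that differ between cluster nodes."""
    problems = []
    all_switches = set().union(*(set(cfg["switches"]) for cfg in nodes.values()))
    for node, cfg in sorted(nodes.items()):
        missing = sorted(all_switches - set(cfg["switches"]))
        if missing:
            # A VM bound to a switch name that is absent on this node cannot start here.
            problems.append(f"{node}: missing virtual switch(es) {', '.join(missing)}")
    paths = {cfg["vm_path"] for cfg in nodes.values()}
    if len(paths) > 1:
        problems.append(f"VM storage paths differ: {', '.join(sorted(paths))}")
    return problems


# Hypothetical inventory: hv2 lacks the "External" switch.
nodes = {
    "hv1": {"switches": ["External", "LAN"], "vm_path": r"C:\ClusterStorage\Volume1"},
    "hv2": {"switches": ["LAN"], "vm_path": r"C:\ClusterStorage\Volume1"},
}
print(find_node_mismatches(nodes))
```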

After that, you can create virtual machines and configure failover for them.

The choice of guest OS is, of course, limited to Microsoft Windows and the Linux distributions for which integration services exist, but those happen to be exactly what we use.

Just remember to add our domain controller to the Hyper-V cluster as well.

Network configuration

We now have a fault-tolerant SQL Server, as well as a fault-tolerant frontend and backend.

What remains is to make them reachable from the outside world.

Our hosting provider offers two services for this:

- An additional IP address for our server, bound to a MAC address.

- A whole /29 or /28 subnet, routed to a single IP address.
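To see how many addresses a /29 or /28 actually provides, here is a short sketch based on Python's standard `ipaddress` module. It counts the conventional host range, which excludes the network and broadcast addresses. Whether those two are usable on a routed subnet depends on the provider, and the router's own DMZ interface also takes one of the host addresses. The `203.0.113.0/24` block is a documentation range used as a stand-in.

```python
import ipaddress
from typing import Dict, Union


def describe_subnet(cidr: str) -> Dict[str, Union[int, str]]:
    """Summarize an additional subnet, e.g. a /29 or /28 routed by the provider."""
    net = ipaddress.ip_network(cidr)
    hosts = list(net.hosts())  # conventional host range: network and broadcast excluded
    return {
        "total": net.num_addresses,
        "hosts": len(hosts),
        "first": str(hosts[0]),
        "last": str(hosts[-1]),
    }


for cidr in ("203.0.113.0/29", "203.0.113.16/28"):
    print(cidr, describe_subnet(cidr))
```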

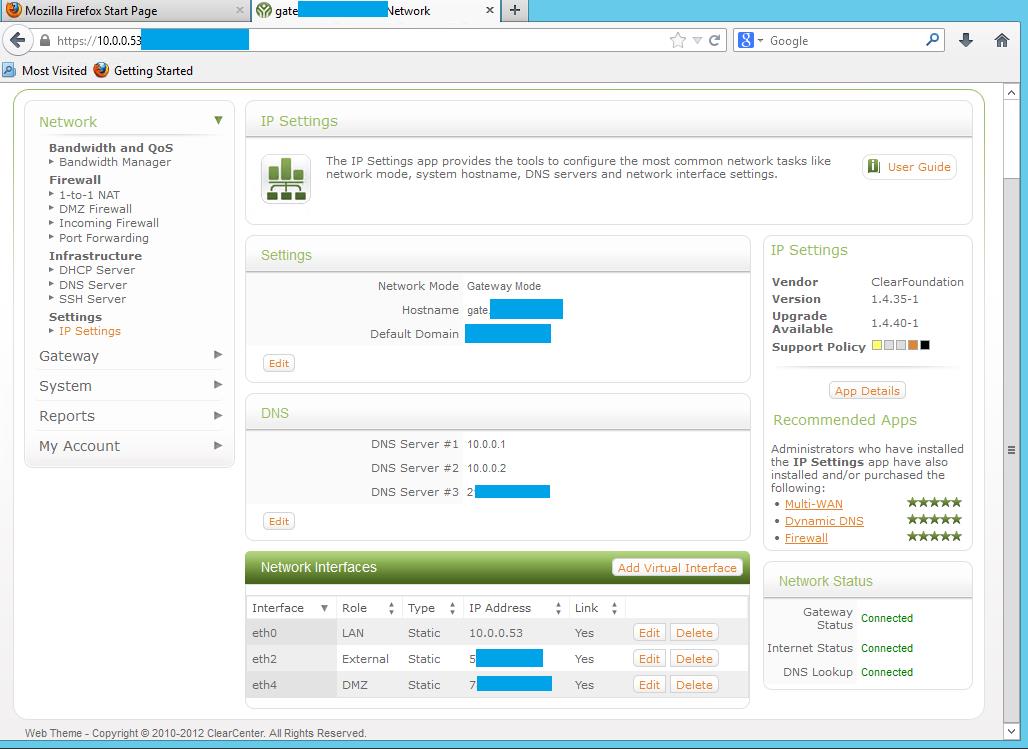

We create one more virtual machine in our Hyper-V cluster, running ClearOS. We chose it right away because it is based on CentOS, so the integration services can be installed on it.

Do not forget to install them after setting up the OS; otherwise, network interfaces may disappear.

The VM has 3 network interfaces:

- Local network

- DMZ

- External network

External network: the additional IP address we requested from the provider.

DMZ: the subnet the provider gave us.

This machine also gives our virtual machines that have no public IP address access to the outside world through NAT.

This way, our routing is fault tolerant too: the router is clustered and can move from node to node.

On the nodes themselves, remember to configure the firewall: block access to dangerous ports from all IP addresses except trusted and local ones. Better still, disable the public IP addresses on the nodes altogether.
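The firewall rule above boils down to a simple decision: a connection to a dangerous port is accepted only if it comes from a local or trusted network. The sketch below models that decision and nothing more. It is not a Windows Firewall configuration. The networks, the trusted admin address, and the port list (SMB, MSSQL, RDP, WinRM over HTTP) are assumptions to adapt.

```python
import ipaddress

# Hypothetical policy values: adjust to your own networks and services.
LOCAL_NETS = [ipaddress.ip_network("10.0.0.0/24")]
TRUSTED_NETS = [ipaddress.ip_network("198.51.100.10/32")]  # e.g. an admin office address
DANGEROUS_PORTS = {445, 1433, 3389, 5985}  # SMB, MSSQL, RDP, WinRM (HTTP)


def is_allowed(src_ip: str, dst_port: int) -> bool:
    """Return True if traffic from src_ip to dst_port should be accepted on a node."""
    if dst_port not in DANGEROUS_PORTS:
        return True
    src = ipaddress.ip_address(src_ip)
    # Dangerous ports are reachable only from local or explicitly trusted networks.
    return any(src in net for net in LOCAL_NETS + TRUSTED_NETS)


print(is_allowed("10.0.0.5", 3389), is_allowed("192.0.2.7", 3389))
```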

Of course, if you need no more than one public address, there is no point in getting a whole subnet: external access can be provided through port forwarding and a reverse proxy.

Fault tolerance: another milestone

As mentioned earlier, we are trying to minimize points of failure. But one point of failure still remains: our SAN. Since all clustered virtual machines live on it, including the domain controller, losing it would take down not only the backend and frontend but the cluster itself.

We still have one more server, and we will use it as the last line of defense.

On this backup server, we create a virtual machine with a second domain controller and configure AD replication to it.

Remember to set it as the secondary DNS server on all our machines. Then, if the CSV disappears, the services that do not depend on it, namely our clustered SQL listener, will keep working.
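Why the secondary DNS entry matters can be shown with a deliberately simplified model of a client lookup. The client tries its configured DNS servers in order, skips the ones that do not respond, and takes the first answer it gets. The real Windows DNS client has its own timeouts and server-ordering rules, so treat this as an illustration only. All addresses and names are hypothetical.

```python
from typing import Dict, Iterable, List, Optional


def resolve(name: str, dns_servers: List[str], reachable: Iterable[str], zone: Dict[str, str]) -> Optional[str]:
    """Simplified client lookup: ask configured DNS servers in order until one answers."""
    up = set(reachable)
    for server in dns_servers:
        if server not in up:
            continue  # server is down (e.g. DC1 lost together with the CSV): try the next one
        # Both DCs host the same replicated AD zone, so whichever answers gives the same result.
        return zone.get(name)
    return None  # no configured DNS server is reachable


# Hypothetical addresses: DC1 lives on the CSV, DC2 on the backup server.
zone = {"sql-listener.corp.local": "10.0.0.50"}
servers = ["10.0.0.1", "10.0.0.2"]  # primary DNS, then secondary DNS
print(resolve("sql-listener.corp.local", servers, {"10.0.0.2"}, zone))  # 10.0.0.50
```

Without the secondary entry, the same lookup returns nothing once DC1 is gone, even though the SQL listener itself is still up.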

To bring the backend and frontend back after a CSV failure, we use another new feature of Windows Server 2012: Hyper-V Replica. We replicate the project-critical machines to our fourth server. The minimum replication interval is 5 minutes, but that matters little here, since the frontend and backend hold static data that is updated very rarely.

To do this, add the “Hyper-V Replica Broker” cluster role and configure replication in its properties. More about replication:

Hyper-V Replica Overview

Windows Server 2012 Hyper-V. Hyper-V Replica
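With asynchronous replication, what you get back after a failover is the last replication cycle that completed before the failure. Here is a minimal sketch of that arithmetic, assuming the 5-minute interval from the text and that every cycle completes on schedule. The timestamps are made up for illustration.

```python
from datetime import datetime, timedelta
from typing import List, Optional

INTERVAL = timedelta(minutes=5)  # replication interval mentioned in the text


def latest_recovery_point(completed: List[datetime], failure_at: datetime) -> Optional[datetime]:
    """Return the newest replica state that finished before the failure, if any."""
    before = [t for t in completed if t <= failure_at]
    return max(before) if before else None


start = datetime(2013, 6, 1, 12, 0)
cycles = [start + i * INTERVAL for i in range(4)]  # 12:00, 12:05, 12:10, 12:15
failure = datetime(2013, 6, 1, 12, 13)
point = latest_recovery_point(cycles, failure)
print(point.time(), failure - point)  # 12:10:00 0:03:00
```

In the worst case, you lose up to about one interval of changes, which is acceptable for the mostly static frontend and backend described here.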

And, of course, do not forget about backups.

About scalability

In the future, this solution can be scaled by adding server nodes.

MSSQL scales by adding readable replica nodes and distributing read-only routing across them.
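In SQL Server 2012, read-only routing sends a read-intent connection to the first replica in the routing list that is online and accepts reads. Balancing therefore comes from giving each primary its own list; load-balanced lists only arrived in SQL Server 2016. Below is a simplified model of that selection. The replica names are hypothetical, and listing the primary last as a fallback is a common practice, not a requirement.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Replica:
    name: str
    online: bool
    readable: bool  # secondary allows read-intent connections (the primary always serves reads)


def route_read_intent(routing_list: List[str], replicas: Dict[str, Replica]) -> Optional[str]:
    """Pick the first replica in the routing list that can take a read-only connection."""
    for name in routing_list:
        replica = replicas.get(name)
        if replica is not None and replica.online and replica.readable:
            return name
    return None  # no candidate in this simplified model


replicas = {"sql1": Replica("sql1", True, True), "sql2": Replica("sql2", True, True)}
print(route_read_intent(["sql2", "sql1"], replicas))  # sql2
replicas["sql2"].online = False
print(route_read_intent(["sql2", "sql1"], replicas))  # sql1
```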

Virtual machines can be “inflated” up to the resources of a whole node without being tied to specific hardware.

To optimize traffic, you can add extra network interfaces to the node servers and connect Hyper-V virtual switches to them. This separates external traffic from internal traffic.

You can replicate virtual machines to Azure.

You can add SCVMM and Orchestrator and get a “private cloud”.

This is roughly how you can build a cluster that is fault tolerant to a certain extent, which, in principle, is true of all fault tolerance.

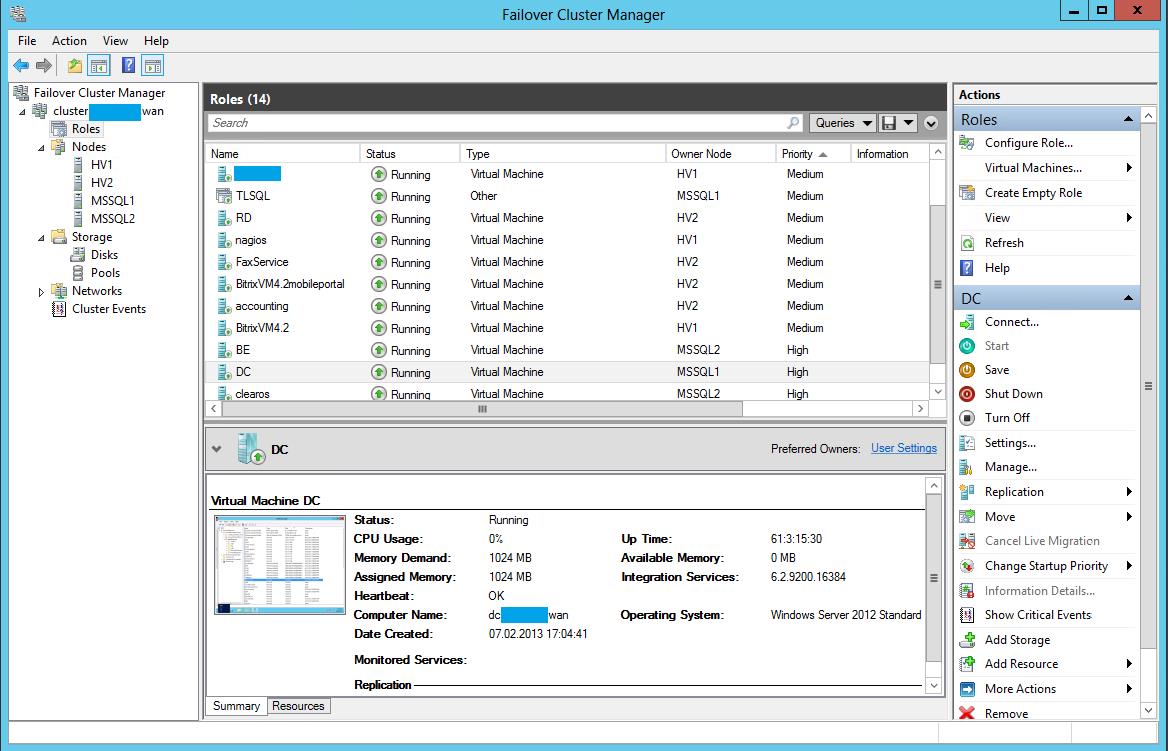

P.S. Three months in, the flight is normal. The number of cluster nodes and virtual machines keeps growing; the screenshot shows that the system has already grown somewhat beyond what is described here.

The post probably does not cover every configuration detail, but that was not the goal; I think all the details would be tiring. If anyone is interested in the specifics, feel free to ask. Criticism is welcome.

Source: https://habr.com/ru/post/184520/
