Mega-data centers are the pioneers of innovation. Part 2

We continue our tour of modern super-large data centers, begun in the previous article, and today we will look at how they solve one of their most important problems: data storage. We will also talk a little about the near future of such mega-data centers.

Naturally, the storage system has become a bottleneck for a great many mega-data center applications. Despite huge breakthroughs in this area, existing solutions are not always enough to handle the arising tasks smoothly. It is hard even to imagine how much data is processed every second in the data centers of Amazon, Google, Facebook and other companies. The problem is not only the volume of data and the rate at which it arrives; in practice many additional concerns must be taken into account, from data integrity and data protection to legal requirements for so-called “data retention”.

Almost without exception, the storage systems of large data centers are built on direct-attached storage (DAS): it is easier to buy (remember the discussion of the importance of cost optimization in the first part of the article?), cheaper to maintain, and provides better throughput than NAS- and SAN-based solutions, not to mention greater performance. Large storage systems sometimes use ordinary consumer hard drives and SSDs with SATA connections, but Serial Attached SCSI (SAS) is becoming more common: many are migrating to SAS, which remains compatible with conventional SATA drives while offering undeniable advantages in both speed and ease of management. A migration to SAS interfaces supporting 12 Gbit/s bandwidth is also under way.

Not so long ago, the main metrics for evaluating storage systems were the number of I/O operations per second (IOPS) and the maximum interface bandwidth, measured in megabytes per second. The realities of mega-data center operations have shown that modern SSD-based storage systems often reach their maximum I/O performance (often up to 200,000 IOPS), at which point the data transfer rate stops playing a key role. Instead, I/O latency comes to the fore: it has a decisive influence on the most important infrastructure performance indicators for data centers of this size, namely server utilization and, of course, application speed.


The main optimization methods are deploying SSDs, using dedicated caching cards, and combining the two.

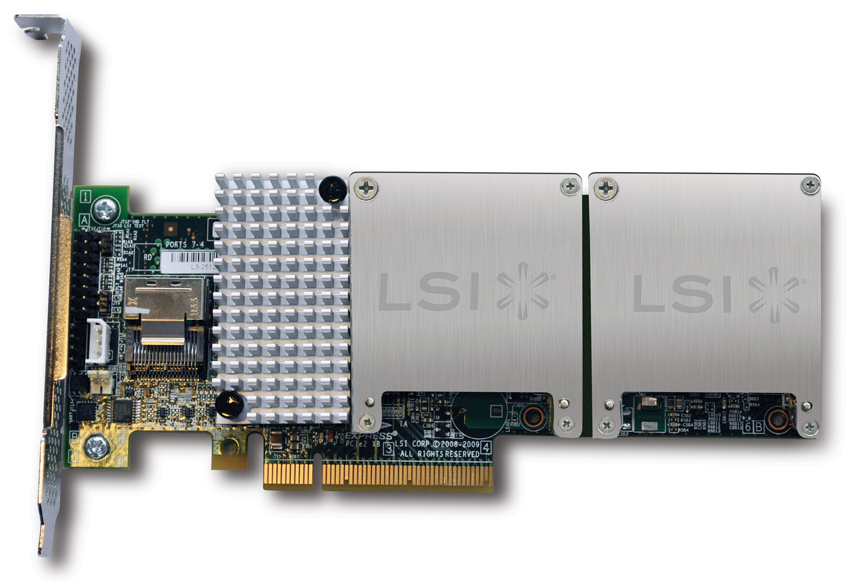

In practice, the I/O latency of a regular hard disk is about 10 milliseconds (0.01 seconds). For an SSD, read latency is about 200 microseconds and write latency about 100 microseconds (0.0002 and 0.0001 seconds, respectively). Specialized PCIe cards (we recently described one such solution, LSI Nytro, which has proven its effectiveness in practice) can provide even lower latency, on the order of several tens of microseconds.
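As a rough illustration of these figures, the following Python sketch converts each latency into the number of strictly sequential operations one thread could complete per second (a single request in flight at a time) and the ratio relative to a hard disk. The device labels and the helper names `serial_ops_per_second` and `speedup_vs` are illustrative assumptions; the latencies are the approximate values quoted in the text, not measurements.

```python
# Approximate latencies quoted in the text, in microseconds.
LATENCY_US = {
    "hdd": 10_000,      # ~10 ms
    "ssd_read": 200,    # ~200 microseconds
    "ssd_write": 100,   # ~100 microseconds
}


def serial_ops_per_second(latency_us: float) -> float:
    """Operations per second when each request waits for the previous one."""
    return 1_000_000 / latency_us


def speedup_vs(baseline_us: float, latency_us: float) -> float:
    """How many times lower the latency is than the baseline."""
    return baseline_us / latency_us


if __name__ == "__main__":
    hdd = LATENCY_US["hdd"]
    for name, latency in LATENCY_US.items():
        ops = serial_ops_per_second(latency)
        ratio = speedup_vs(hdd, latency)
        print(f"{name:10s} {latency:>6} us  {ops:>8.0f} ops/s  {ratio:>5.0f}x vs HDD")
```

Under these assumptions a hard disk completes about 100 dependent operations per second, while an SSD completes about 5,000 reads or 10,000 writes, which corresponds to 50 and 100 times lower latency.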

With proper use of SSD technologies and a combination of different storage solutions, you can achieve an average performance gain of 4 to 10 times, which at mega-data center scale can translate into huge profits for the owner.

Corporate SAN storage benefits even more from caching solutions, because the cache eliminates the effect of the slowest part of such storage: the network infrastructure. Modern I/O optimization cards can hold up to several terabytes of “hot” data in their cache, which is often enough to fit an entire database that is critical to the application.
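The effect of a cache on latency can be sketched with a simple weighted-average model: requests served from the cache pay the cache latency, and the rest pay the backend latency. The function name `effective_latency_us`, the 50-microsecond cache latency (standing in for "several tens of microseconds"), the use of the hard disk figure as the backend latency, and the hit ratios are all assumptions chosen for illustration, not figures for any particular product.

```python
def effective_latency_us(hit_ratio: float, cache_us: float, backend_us: float) -> float:
    """Average latency when a fraction `hit_ratio` of requests hit the cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_us + (1.0 - hit_ratio) * backend_us


if __name__ == "__main__":
    CACHE_US = 50        # assumed PCIe cache card latency
    BACKEND_US = 10_000  # ~10 ms hard disk latency from the text

    for hit_ratio in (0.0, 0.5, 0.9, 0.99):
        avg = effective_latency_us(hit_ratio, CACHE_US, BACKEND_US)
        print(f"hit ratio {hit_ratio:4.0%}: {avg:8.1f} us, "
              f"{BACKEND_US / avg:5.1f}x faster than no cache")
```

In this model the misses dominate the average until the hit ratio gets close to 1, so the gain depends heavily on how much of the working set fits in the cache. That is why being able to hold an entire critical database in the cache matters so much.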

In corporate environments, unlike mega-data centers, I/O latency is not always one of the main criteria for evaluating storage; enterprises rely more on IOPS and the cost of storing one gigabyte of data, and on that basis weigh SSDs against traditional drives. The experience of building modern ultra-large data centers, in turn, shows that even with more expensive SSDs you can end up with a more efficient infrastructure in the long run and reduce the cost of supporting it. Compared to HDDs, modern SSDs are less prone to failure, easier to maintain and consume less electricity, which is a significant “bonus” on top of their main advantages: speed and low I/O latency. These allow more data to be processed on fewer servers, which also saves on service contracts and software licenses.

What awaits mega-data centers in the future?

The first place to apply innovation is software. In some cases, super-large data centers pioneered solutions that later became part of the “everyday life” of IT (if, of course, that expression is applicable). Examples include Apache Cassandra, Google Dremel and, of course, Hadoop. Applications of this kind evolve at great speed: often it is a matter not of years but of months.

Now we can watch two very young technologies changing the world of corporate data centers, much as Linux once changed the server market as a whole. OpenCompute is an initiative aimed at creating an open, minimalist and efficient architecture for data centers. Proposed by Facebook in 2011, it is now experiencing a real boom and may lead to many changes (for example, an "open service" model along the lines of "open source"). The second initiative, OpenStack, is now the cornerstone of most software-defined data centers: it creates a network infrastructure and a pool of processing resources that can be managed automatically.

Also in the near future, we expect rack-level server disaggregation solutions: they will make it possible to separate all the components of a server (processor, memory, power supply, etc.) from one another and manage them independently. This will further improve the efficiency of hardware use in super-large data centers.

To sum up, mega-data centers live at the forefront of innovation: this is exactly the part of IT that can be considered a “pioneer” in many areas, paving the way for the whole industry to follow.

... to boldly go where no man has gone before!

Source: https://habr.com/ru/post/184442/
