Network traffic analysis in application performance management

This article looks at how network traffic analysis is used to monitor and manage the performance of network applications, and at the differences between real-time and retrospective network traffic analysis (Retrospective Network Analysis, RNA).

Introduction

There is a familiar marketing technique: pick a property that is in demand on the market and claim that your product or service has it too. Everyone wants white teeth, so we say that our toothpaste whitens teeth.

The same is happening with application performance management (APM, which is expanded both as Application Performance Management and as Application Performance Monitoring). Today almost every vendor of network management systems claims that its products let you manage application performance. Of course, “a pony is a horse too,” but let's see what APM is and what functionality a toolkit must have in order to bear this proud name.

Signs of a Mature APM Solution

For many people, measuring the response time of a business application and managing its performance are almost the same thing. They are not. Measuring response time is necessary, but not sufficient.

APM is a complex process that also includes measuring, evaluating, and documenting metrics related to the IT infrastructure and, most importantly, making it possible to link the quality of application operation to the quality of the IT infrastructure. In other words, it is not enough to determine that an application is performing poorly; you also need to find the culprit. To do this, in addition to measuring response time, you need to track application errors, monitor the client-server dialogue, and see trends. All of this must be done simultaneously for all active network equipment (switches, routers, etc.), all servers, and the communication channels.

The table below lists the 10 key attributes of a mature APM solution.

| Key feature | Comment |
|---|---|
| 1. Comprehensive monitoring | To quickly gauge the scale of a failure and find its root cause, you need to monitor application metrics and the metrics of the entire IT infrastructure, without exception, at the same time. |
| 2. High-level reports | Needed to understand the quality of IT service delivery across the enterprise. Must include preconfigured dashboards, reports, thresholds, baselines, and incident report templates. Drill-down into the details must be possible. |
| 3. Ability to configure secure communication | Fast troubleshooting and capacity planning require collaboration between different IT departments. A mature APM solution should therefore support secure data exchange, both for real-time data and for the results of statistical, expert, and other processing. |
| 4. Continuous capture of network packets | Monitoring objects via SNMP, WMI, etc. does let you assess application performance, but it is hard to see the whole picture and understand what happened and why. A better approach is to continuously capture all network traffic in full (not just headers) and, when an event that needs investigation occurs, analyze the contents of the captured packets. |
| 5. Detailed information on the operation of applications | Analyzing application response time alone is not enough. To diagnose failures quickly, you need information about the requests the application makes, how they are executed, error codes, and so on. |
| 6. Expert analysis | Solid expert analysis significantly speeds up problem diagnosis: first, it automates the analysis of the collected information; second, if the problem is a known one, it immediately offers a ready-made solution. |
| 7. End-to-end (multiple segment / multihop) analysis | As more and more business applications run in the cloud or over the WAN, you need to see all the delays and errors that occur in each network segment that the traffic (the same packet) traverses. This is the only way to quickly localize the root cause of a failure. |
| 8. Flexible baselines | Flexible (customizable) baselines let you monitor both internal and cloud applications effectively. For cloud applications, the thresholds of the monitored metrics (specified in the SLA) are usually static, known in advance, and set manually. For internal applications, dynamic baselines are a better fit, i.e. baselines that are adjusted automatically over time according to how the application performs. |
| 9. Ability to reconstruct information flows | When investigating poor voice and video quality, as well as information security incidents, it is important to be able to replay network activity and application behavior at the moment of the critical event, as well as before and after it. |
| 10. Scalability | No comment needed. |
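
The difference between static and dynamic baselines (item 8) can be illustrated with a minimal sketch. It assumes response times sampled in milliseconds and uses a simple "mean plus k standard deviations over a sliding window" rule; the function names, the window size, and the factor `k` are illustrative assumptions, not how any particular APM product computes its baselines.

```python
from statistics import mean, stdev


def static_breach(value_ms, sla_threshold_ms):
    """Static baseline: compare a sample with a fixed threshold (e.g. from an SLA)."""
    return value_ms > sla_threshold_ms


def dynamic_breaches(samples_ms, window=5, k=3.0):
    """Dynamic baseline (hypothetical rule): flag a sample when it exceeds
    mean + k * stdev of the previous `window` samples.
    Returns the indices of the flagged samples."""
    flagged = []
    for i in range(window, len(samples_ms)):
        history = samples_ms[i - window:i]
        limit = mean(history) + k * stdev(history)
        if samples_ms[i] > limit:
            flagged.append(i)
    return flagged


# Made-up response times in milliseconds; the spike is at index 6.
response_times = [100, 102, 98, 101, 99, 100, 180, 101]

print(static_breach(180, sla_threshold_ms=150))  # True
print(dynamic_breaches(response_times))          # [6]
```

The static check needs a threshold known in advance, while the dynamic one derives its limit from recent behavior, which is why the latter suits internal applications whose normal load drifts over time.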

Network traffic analysis

Experienced IT professionals will notice that the attributes listed above clearly point to an APM solution based on network traffic analysis. That is indeed the case.

Network Instruments has identified four types of application performance management solutions:

- synthetic transaction based solutions (GUI robots)

- solutions using server-side software agents

- solutions using client-side software agents

- solutions based on network traffic analysis

Solutions based on network traffic analysis stand out on this list.

Unlike solutions based on synthetic transactions or on server-side or client-side software agents, they collect data without using the resources of the monitored systems.

A network traffic analyzer usually consists of several probes and a remote administration console. The probes connect to SPAN ports, which lets an APM solution based on network traffic analysis listen to traffic passively: it consumes no server resources (unlike server-side software agents and synthetic transactions), no client resources (unlike client-side agents), and creates no additional traffic (unlike synthetic transactions and client-side agents).

The analysis itself is based on the decoding of network protocols of all levels, including the application level. For example, Observer from Network Instruments supports decoding and analysis of all seven layers of the OSI model for applications and services such as SQL, MS-Exchange, POP3, SMTP, Oracle, Citrix, HTTP, FTP, etc.

If you want delay figures that are as close as possible to what the user perceives, connect the probes as close as possible to the client devices. This, however, requires a large number of probes. If exact delay figures are less critical and the number of probes must be kept down, connect the probes closer to the application server. Changes in delay will be visible in both cases.

Note. You can also use monitoring switches, which collect traffic from several SPAN ports and TAPs and forward it to the monitoring system. Monitoring switches can also perform preliminary traffic analysis.

The second important point: all four types of solutions listed by Network Instruments have their advantages and their own area of application, in which they handle the task better than the others. For example, the most detailed information about an application is collected by software agents that obtain it directly from the application, on the server or client side, through an ARM-like API. This information is very useful during development and initial rollout of the application, but is redundant during normal operation.

On the other hand, both GUI robots with synthetic transactions and software agents collect data only about the state of a particular application (or several applications). Software agents that require code instrumentation are especially demanding: the vendor must build support for a specific monitoring tool into the application or provide an API. To learn the context in which the application is running, you have to use other tools (either part of the same comprehensive monitoring system or separate ones).

The traditional paradigm of network monitoring is the centralized collection, consolidation, and analysis of data obtained from external sources of information: SNMP agents, WMI providers, various logs, etc. The task of the monitoring system is to collect this data, present it in a convenient form, analyze it using a service-resource model, and thereby understand what is happening. This is how almost all monitoring systems work, including the ProLAN monitoring system.

A network protocol analyzer lets you see the context immediately.

First, you can see how the application of interest behaved against the background of network services and IT infrastructure components. For example, multihop analysis makes it possible to identify a failed router that broke the connection between the server and the client. In principle, a network protocol analyzer can serve as a universal solution that simultaneously performs application performance management, IT infrastructure monitoring, and security management.

Second, no other method shows what happens at the level of a single transaction and an individual packet: which packets were sent, which were lost, and which were delivered with errors (each specific packet, not just a total count). Analysis of an application at the level of a single transaction can only be implemented with a network protocol analyzer.
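
The idea behind multihop analysis can be sketched as follows: the same packet is seen by several probes along its path, and the difference between consecutive capture timestamps gives the delay of each segment. This is a minimal illustration that assumes the probes have synchronized clocks and that observations are listed in path order; the probe names and the function names are hypothetical.

```python
def segment_delays(observations):
    """observations: list of (probe_name, timestamp_us) for ONE packet,
    given in the order the packet traverses the probes.
    Returns a list of (segment_label, delay_us)."""
    return [
        (f"{a_name} -> {b_name}", b_ts - a_ts)
        for (a_name, a_ts), (b_name, b_ts) in zip(observations, observations[1:])
    ]


def slowest_segment(observations):
    """Return the (segment_label, delay_us) pair with the largest delay."""
    return max(segment_delays(observations), key=lambda seg: seg[1])


# Made-up capture timestamps in microseconds from four hypothetical probes.
packet_seen_at = [
    ("client_lan", 0),
    ("wan_edge", 2_000),
    ("datacenter_edge", 85_000),
    ("server_lan", 86_000),
]

print(segment_delays(packet_seen_at))
print(slowest_segment(packet_seen_at))  # ('wan_edge -> datacenter_edge', 83000)
```

In this made-up example the WAN segment accounts for almost all of the delay, which is the kind of localization the text describes.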
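
As a rough illustration of transaction-level analysis, the sketch below groups already decoded packet records by transaction, measures the response time as the interval between the first request packet and the first response packet, and lists the specific packets that were retransmitted. The `Packet` record and its fields are hypothetical simplifications; a real analyzer derives this information from protocol decoding.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    ts_ms: int       # capture timestamp, milliseconds
    txn: str         # hypothetical transaction identifier
    direction: str   # "request" or "response"
    seq: int         # sequence number within the direction


def analyze(packets):
    """Return {txn: {"response_time_ms": int | None, "retransmitted": [Packet]}}."""
    by_txn = defaultdict(list)
    for p in packets:
        by_txn[p.txn].append(p)

    report = {}
    for txn, pkts in by_txn.items():
        pkts.sort(key=lambda p: p.ts_ms)
        first_req = next((p.ts_ms for p in pkts if p.direction == "request"), None)
        first_resp = next((p.ts_ms for p in pkts if p.direction == "response"), None)

        # A packet repeating an already seen (direction, seq) is a retransmission.
        seen, retransmitted = set(), []
        for p in pkts:
            key = (p.direction, p.seq)
            if key in seen:
                retransmitted.append(p)
            seen.add(key)

        complete = first_req is not None and first_resp is not None
        report[txn] = {
            "response_time_ms": first_resp - first_req if complete else None,
            "retransmitted": retransmitted,
        }
    return report


# Made-up capture: transaction t2 had to resend its request.
capture = [
    Packet(0, "t1", "request", 1),
    Packet(40, "t1", "response", 1),
    Packet(100, "t2", "request", 1),
    Packet(300, "t2", "request", 1),
    Packet(350, "t2", "response", 1),
]

report = analyze(capture)
print(report["t1"]["response_time_ms"])  # 40
print(report["t2"]["response_time_ms"])  # 250
print(report["t2"]["retransmitted"])
```

An aggregate metric would only show that t2 was slow; the per-packet view also shows that the slowness came from a resent request.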

Retrospective analysis of network traffic

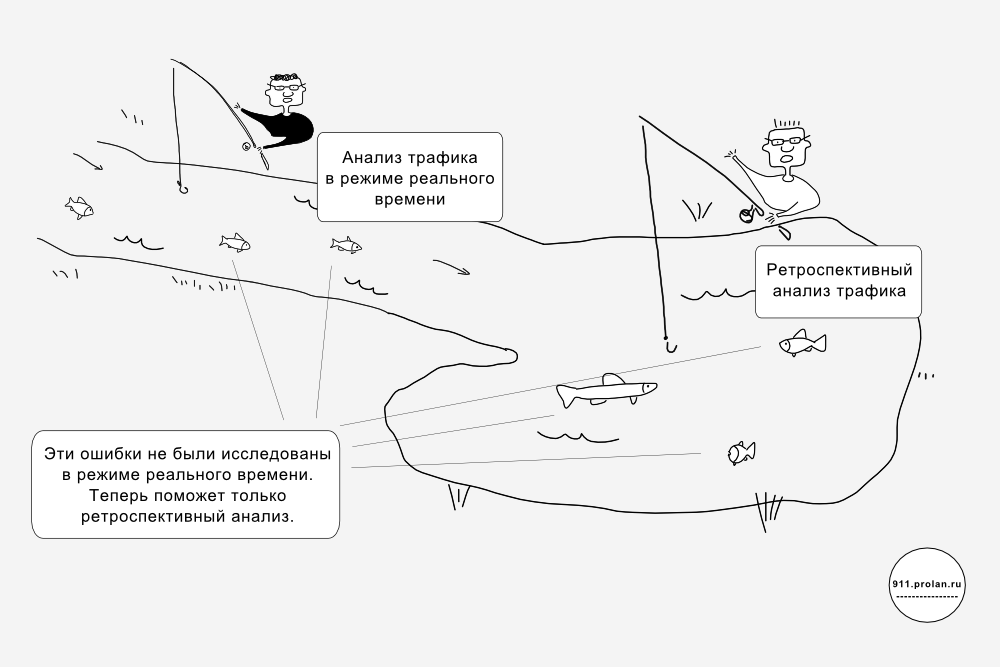

Network traffic analysis can be done in two ways:

- real-time traffic analysis (on the fly)

- retrospective traffic analysis

Retrospective traffic analysis means that all or part of the traffic is first written to disk and analyzed afterwards.

Let us look at how this works and why it is needed, using GigaStor, a solution from Network Instruments for recording and retrospectively analyzing traffic, as an example. It is a hardware and software appliance comprising a hardware probe, a full-duplex network card, data storage, and a local management console. Remote administration requires integration with other Network Instruments products: the already mentioned Observer, or Observer Reporting Server. The network card can capture traffic from networks at speeds of up to 40 Gb/s, and the disk arrays can store up to 5 PB of data (alternatively, up to 576 TB can be offloaded to a SAN).

Intercepting network traffic shows what is happening with applications, users, and the IT infrastructure. But how do you find out what happened five minutes, an hour, or a day ago? The traditional way is to sample metric values and write them to a database, which is what the vast majority of monitoring systems do. Metric values and ratings give some idea of what happened at an arbitrary point in time, but no more than that. What was recorded can be used; what was not recorded is lost irretrievably.

There is only one way to go back in time and see what happened at the moment of interest: record all the traffic, as GigaStor and the few other solutions of this class do. This gives such solutions two distinctive features:
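
To get a feel for these figures, here is a back-of-the-envelope estimate of how far back a full capture can reach. It is only a sketch under stated assumptions: decimal units (1 PB = 10^15 bytes), no compression, no indexing overhead, and a constant link utilization. The function name and the 10% utilization figure are illustrative and do not come from the vendor.

```python
def retention_seconds(capacity_bytes, rate_bits_per_s, utilization=1.0):
    """Seconds of traffic that fit into `capacity_bytes` when a link of
    `rate_bits_per_s` is loaded at the given average utilization (0..1)."""
    bytes_per_second = rate_bits_per_s * utilization / 8
    return capacity_bytes / bytes_per_second


PB = 10**15          # decimal petabyte (assumption)
GBIT = 10**9         # decimal gigabit

full_rate = retention_seconds(5 * PB, 40 * GBIT)        # 1,000,000 seconds
print(round(full_rate / 86_400, 2))                     # 11.57 days at full line rate

# Hypothetical 10% average utilization gives roughly ten times the history.
light_load = retention_seconds(5 * PB, 40 * GBIT, utilization=0.1)
print(round(light_load / 86_400, 1))                    # 115.7 days
```

Even petabytes of storage give a finite window into the past, so the real retention depth depends on how heavily the monitored links are loaded.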

- first, access to a very large amount of disk space (measured in terabytes and petabytes);

- second, a so-called time machine function that lets you rewind time and see what happened on the network at an arbitrary moment in the past.
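
Both features can be illustrated with a toy capture store: a bounded buffer that discards the oldest packets when space runs out, plus a time-range query that plays the role of the time machine. This is a minimal in-memory sketch; the `CaptureStore` class and its methods are hypothetical and say nothing about how GigaStor is actually implemented.

```python
from bisect import bisect_left, bisect_right


class CaptureStore:
    """Toy packet store with bounded capacity and time-range replay."""

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self._timestamps = []   # kept in non-decreasing order
        self._packets = []      # raw packet bytes, parallel to _timestamps

    def record(self, ts, payload):
        """Store a packet; timestamps are assumed to arrive in time order."""
        self._timestamps.append(ts)
        self._packets.append(payload)
        self.used_bytes += len(payload)
        # Evict the oldest packets once the capacity is exceeded.
        while self.used_bytes > self.capacity_bytes and self._packets:
            self.used_bytes -= len(self._packets.pop(0))
            self._timestamps.pop(0)

    def replay(self, start_ts, end_ts):
        """Return [(ts, payload)] for all packets with start_ts <= ts <= end_ts."""
        lo = bisect_left(self._timestamps, start_ts)
        hi = bisect_right(self._timestamps, end_ts)
        return list(zip(self._timestamps[lo:hi], self._packets[lo:hi]))


store = CaptureStore(capacity_bytes=10)
store.record(1, b"aaaa")
store.record(2, b"bbbb")
store.record(3, b"cccc")   # exceeds 10 bytes, so the packet from ts=1 is evicted

print(store.replay(2, 3))  # [(2, b'bbbb'), (3, b'cccc')]
print(store.replay(0, 1))  # [] (that part of history has already been overwritten)
```

The second query shows the flip side of a finite store: the time machine can only go back as far as the oldest packet still on disk.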

The ability to travel in time is especially important in two cases:

- when the problem is complex, recurs irregularly, or it is unclear which area it belongs to (a “gray problem”);

- when there is a security breach.

Let us briefly consider both cases.

In the first case, the system administrator faces a lack of information. If a problem appears irregularly, it is difficult to track and reproduce. The same applies to complex and "gray" problems. Retrospective analysis fills this information gap and can speed up the resolution of the problem by hours, or perhaps even days.

In the second case, the problem needs to be solved as quickly as possible, otherwise the consequences may be difficult to repair. The most convenient approach is to go back in time and trace the attacker's actions as they actually happened, without wasting time on examining indirect evidence.

Conclusions

1. A mature APM solution implies not only the ability to measure application response times, but also a number of properties aimed at evaluating the measurement results and at quickly detecting and diagnosing problems.

2. Of the four types of APM solutions, those based on network protocol analysis are the most versatile for managing the performance of network applications.

3. For complex or irregularly recurring problems, as well as problems related to security breaches, analyzing network traffic in real time is not enough. These cases call for retrospective analysis of network traffic, with the traffic recorded to disk and a so-called time machine function.

Source: https://habr.com/ru/post/184048/
