Fujitsu HPC Cluster Suite: a software package for computing clusters

On May 29 of this year, Fujitsu introduced the Fujitsu HPC Cluster Suite (HCS), a software package for computing clusters. It is designed to manage clusters built on Fujitsu PRIMERGY servers with standard x86 architecture and consists of fully tested high-performance software components. The product was originally developed for the K computer at the Institute of Physical and Chemical Research (RIKEN). K held first place in the TOP500 list for two consecutive editions and currently ranks third, with performance exceeding 10 PFLOPS, which in itself speaks to the potential of the solutions involved. With the release of HCS, this accumulated knowledge and experience has been carried over to the conventional x86 architecture and becomes available to virtually any company.

Typical HPC Cluster Architecture

The main components of any high-performance cluster are its compute nodes. Because jobs are computed directly on them, the performance of the entire cluster depends on their number and configuration. A cluster is usually assumed to consist of servers with identical hardware, but clusters often contain servers of different types as well. In that case, nodes with different configurations within one cluster are combined into groups of similar servers.
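Grouping nodes by configuration can be pictured as keying each node on its hardware attributes. The sketch below is a minimal illustration under stated assumptions. The `Node` fields, node names and hardware values are hypothetical and do not reflect any HCS data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """A compute node; these fields are illustrative, not an HCS data model."""
    name: str
    cpu_model: str
    memory_gb: int
    accelerator: Optional[str] = None


def group_by_config(nodes):
    """Group node names by identical (CPU, memory, accelerator) configuration."""
    groups = defaultdict(list)
    for node in nodes:
        key = (node.cpu_model, node.memory_gb, node.accelerator)
        groups[key].append(node.name)
    return dict(groups)


# Hypothetical inventory: two identical CPU nodes and one GPU node.
nodes = [
    Node("node001", "Xeon E5-2670", 64),
    Node("node002", "Xeon E5-2670", 64),
    Node("node003", "Xeon E5-2670", 128, "Tesla K20"),
]
for config, members in group_by_config(nodes).items():
    print(config, members)
```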

The interconnect, which carries inter-node communication, is an important part of a computing cluster. It lets processes running on different compute nodes synchronize and exchange data. This task is usually handled by high-speed InfiniBand (40 or 56 Gb/s), while conventional Ethernet is used to manage the compute nodes.
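Here is a rough, idealized calculation that compares the two InfiniBand rates mentioned above. It estimates how long a 1 GB message takes to cross a single link. The calculation assumes the nominal line rate is fully usable and ignores latency, encoding overhead and contention, so real transfers will be slower.

```python
def transfer_time_s(message_bytes: int, link_gbit_s: float) -> float:
    """Idealized time to send a message over one link at its nominal rate.

    Ignores latency, encoding/protocol overhead and contention.
    """
    return message_bytes * 8 / (link_gbit_s * 1e9)


one_gb = 10**9  # a 1 GB message (decimal units)
for rate in (40, 56):
    print(f"{rate} Gb/s: {transfer_time_s(one_gb, rate):.3f} s")
# 40 Gb/s: 0.200 s
# 56 Gb/s: 0.143 s
```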


The compute nodes are controlled by management nodes. Even a small cluster needs at least one, and large clusters usually have several for fault tolerance. The management nodes maintain the job queue and distribute jobs among compute nodes and node groups, while users submit the jobs directly from their workstations.
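The queue-and-dispatch role of the management nodes can be sketched as a strict first-in, first-out scheduler. This is a simplified illustration and does not describe how any particular workload manager works. It assumes each job must fit entirely within one node group, and the job IDs, group names and node names are hypothetical. Production schedulers add priorities, backfilling, time limits and more.

```python
from collections import deque


def schedule_fifo(jobs, free_nodes):
    """Dispatch queued jobs to free nodes in strict first-in, first-out order.

    jobs: list of (job_id, nodes_needed) in submission order.
    free_nodes: {group_name: [free node names]}.
    Each job must fit inside a single group. If the job at the head of the
    queue cannot start, it blocks the jobs behind it (no backfilling).
    Returns ({job_id: (group, [nodes])}, [jobs still queued]).
    """
    queue = deque(jobs)
    pools = {group: list(names) for group, names in free_nodes.items()}
    placements = {}
    while queue:
        job_id, needed = queue[0]
        group = next((g for g, names in pools.items() if len(names) >= needed), None)
        if group is None:
            break  # head job must wait for nodes to be freed
        queue.popleft()
        placements[job_id] = (group, pools[group][:needed])
        del pools[group][:needed]  # those nodes are now busy
    return placements, list(queue)


# Hypothetical cluster with a CPU group and a GPU group.
placements, waiting = schedule_fifo(
    [("job1", 2), ("job2", 2), ("job3", 3)],
    {"cpu": ["n1", "n2", "n3"], "gpu": ["g1", "g2"]},
)
print(placements)  # {'job1': ('cpu', ['n1', 'n2']), 'job2': ('gpu', ['g1', 'g2'])}
print(waiting)     # [('job3', 3)]
```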

Because compute nodes work with data, where that data resides in the cluster can be an important issue. One option is a separate network storage system to which all nodes connect. Another, used especially in large clusters, is a so-called parallel file system. A parallel file system stores data in a distributed way: parts of it reside on different nodes, yet all of it remains accessible to every node. In this way, not only jobs but also the relevant data are distributed among the nodes.
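Lustre-style parallel file systems typically split a file into fixed-size stripes and spread them across several storage targets in round-robin order. The sketch below shows that mapping using assumed parameters (`stripe_size`, `stripe_count`). It is a generic illustration of striping, not the actual layout logic of any particular file system.

```python
def locate(offset: int, stripe_size: int, stripe_count: int) -> tuple:
    """Map a byte offset in a file to (target index, offset on that target)."""
    stripe_index = offset // stripe_size     # which stripe of the file holds the byte
    target = stripe_index % stripe_count     # stripes are dealt out round-robin
    rounds = stripe_index // stripe_count    # full rounds completed before this stripe
    return target, rounds * stripe_size + offset % stripe_size


def targets_for_range(offset: int, length: int, stripe_size: int, stripe_count: int) -> list:
    """Return the targets touched by reading `length` bytes (length > 0) from `offset`."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return sorted({s % stripe_count for s in range(first, last + 1)})


MiB = 1024 * 1024
print(locate(5 * MiB + 10, stripe_size=MiB, stripe_count=4))           # (1, 1048586)
print(targets_for_range(0, 4 * MiB, stripe_size=MiB, stripe_count=4))  # [0, 1, 2, 3]
```

A 4 MiB read therefore touches all four targets. Each target can serve its part in parallel, which is where a parallel file system gets its aggregate bandwidth.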

The HPC environment is primarily oriented toward non-interactive jobs that run on a set of compute nodes. Several such jobs can run on the same cluster simultaneously.

Features of the HPC Software Stack and the Use of HPC Cluster Suite

The main distinguishing feature of the HPC software stack is that it must be installed on anywhere from tens to thousands of servers of the same type (compute nodes), and all of these systems must share a consistent baseline configuration. For example, user and group identifiers, as well as the corresponding passwords, must be identical on all compute nodes, and all nodes must have the same access to the file system. Administrators also need tools to quickly bring new compute nodes into service or replace failed ones.

Under these conditions, even changing a single password across a thousand nodes can take a great deal of time. The exception is when special software is used to manage passwords centrally or to automate the change on every server in the cluster.
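Checking that user and group IDs really match across nodes is easy to automate. The hypothetical helpers below compare `/etc/passwd`-format text collected from each node against a reference copy and report which users differ. How that text is collected is left out. The helpers check identifiers only, not passwords. Central account management, as described above, is the usual way to avoid such drift in the first place.

```python
def parse_passwd(text):
    """Return {user: (uid, gid)} from /etc/passwd-format text."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        entries[fields[0]] = (int(fields[2]), int(fields[3]))
    return entries


def find_drift(reference_text, node_texts):
    """Return {node: [users whose UID/GID differ from the reference or are missing]}."""
    reference = parse_passwd(reference_text)
    drift = {}
    for node, text in node_texts.items():
        table = parse_passwd(text)
        users = reference.keys() | table.keys()
        mismatched = sorted(u for u in users if reference.get(u) != table.get(u))
        if mismatched:
            drift[node] = mismatched
    return drift


# Hypothetical data: node002 has a different UID for bob.
reference = "alice:x:1001:1001::/home/alice:/bin/bash\nbob:x:1002:1002::/home/bob:/bin/bash"
nodes = {
    "node001": reference,
    "node002": "alice:x:1001:1001::/home/alice:/bin/bash\nbob:x:1003:1002::/home/bob:/bin/bash",
}
print(find_drift(reference, nodes))  # {'node002': ['bob']}
```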

The next problem cluster administrators face is the selection of software and drivers. A huge number of versions of cluster software is currently available, including compilers, resource managers and MPI implementations. Selecting these components is therefore very laborious and can take considerable time. Each one must be tested for compatibility with the others and with the hardware, then configured and kept in sync.
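The combinatorial nature of this work is easy to quantify. Using only the compilers, MPI implementations and workload managers named in this article, there are already 3 × 4 × 4 = 48 distinct stacks. Suppose each component also came in three versions (a purely hypothetical figure). The count then grows to 1,296.

```python
from itertools import product

# Components named in this article (versions ignored).
compilers = ["GNU", "Open64", "Intel"]
mpi_libraries = ["Open MPI", "MPICH", "MVAPICH", "Intel MPI"]
workload_managers = ["TORQUE", "SGE", "SLURM", "PBS Professional"]

stacks = list(product(compilers, mpi_libraries, workload_managers))
print(len(stacks))  # 48 distinct combinations to test

# Hypothetical: three supported versions of each of the three components.
versions_per_component = 3
print(len(stacks) * versions_per_component ** 3)  # 1296
```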

This is where Fujitsu’s complete HPC Cluster Suite solution can help. It is a set of pre-tested, proven software created in alliance with leading developers and guaranteed to run on Fujitsu hardware.

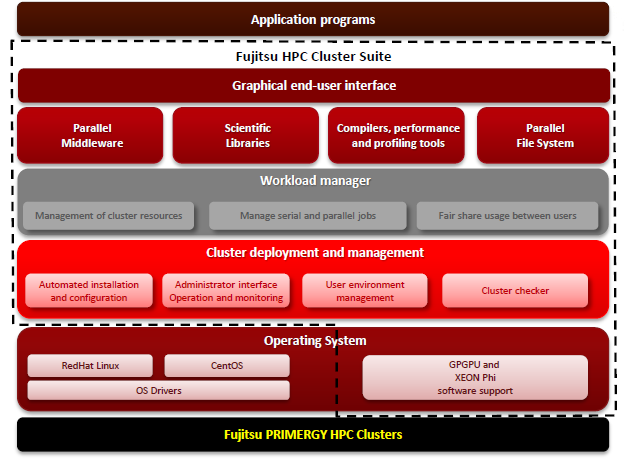

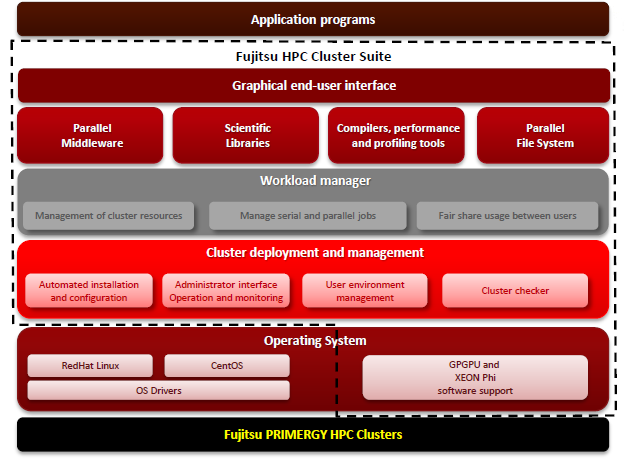

To better understand the purpose and functionality of this product, consider a typical HPC software stack, shown in the diagram below. The dotted lines outline the stack components implemented in HPC Cluster Suite. As the diagram shows, HCS fills the entire gap between the application and the compute nodes. This makes it a full-featured package for managing clusters built on Fujitsu PRIMERGY servers.

HPC Cluster Suite Components

Based on the diagram above, here is a brief look at the main components of HPC Cluster Suite:

Software support for NVIDIA Tesla K20/K20X accelerators and Intel Xeon Phi coprocessors. Jobs running on the cluster can therefore use not only the computing power of the CPUs but also the heterogeneous, parallel computing capabilities of these accelerators.

Cluster Deployment Manager (CDM), a package developed by Fujitsu itself, plays an important role in the product. It automates the installation, configuration and commissioning of compute nodes. It also provides an administrator interface for these tasks and for monitoring node status, managing user environments and verifying cluster health.
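The source does not describe CDM's interface, so the following is only a hypothetical sketch of one step that automated node deployment commonly involves. It gives each node a predictable hostname and an address on the management Ethernet network, so that a new or replacement node always receives the same identity. The `node` prefix, the `10.0.0.0/24` subnet and the starting offset are assumptions.

```python
import ipaddress


def node_inventory(prefix, count, subnet, start=10):
    """Assign sequential hostnames and management-network IPv4 addresses.

    Node number n (1-based) gets the address network_address + start + n - 1.
    """
    net = ipaddress.ip_network(subnet)
    inventory = {}
    for i in range(count):
        addr = net.network_address + start + i
        if addr not in net or addr == net.broadcast_address:
            raise ValueError(f"{subnet} has no room for node {i + 1}")
        inventory[f"{prefix}{i + 1:03d}"] = str(addr)
    return inventory


# Print the inventory in /etc/hosts order: address, then hostname.
for name, ip in node_inventory("node", 3, "10.0.0.0/24").items():
    print(ip, name)
```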

For the workload manager, you can choose one of three open-source tools: TORQUE, SGE or SLURM (the latter two are available from the third quarter of 2013). Alternatively, you can use the commercial PBS Professional, which is available only in the HCS Advanced edition. All of these have already proven themselves on the market.
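The helper below illustrates what users hand to a workload manager. It renders a minimal SLURM batch script using standard `#SBATCH` options. The job name, resource numbers and `./solver` program are hypothetical, and how HCS integrates SLURM is not shown. TORQUE and PBS Professional use `#PBS` directives with their own resource syntax instead.

```python
def slurm_script(job_name, nodes, tasks_per_node, time_limit, command):
    """Render a minimal SLURM batch script with standard #SBATCH options."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",                     # number of compute nodes
        f"#SBATCH --ntasks-per-node={tasks_per_node}",  # e.g. MPI ranks per node
        f"#SBATCH --time={time_limit}",                 # wall-clock limit, HH:MM:SS
        f"srun {command}",                              # launch tasks on the allocation
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 4 nodes x 16 tasks for up to two hours.
print(slurm_script("cfd-run", 4, 16, "02:00:00", "./solver input.dat"), end="")
```

The resulting file would be submitted with `sbatch`, and `srun` then launches the program across the allocated nodes.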

Parallel middleware includes third-party open-source software, in particular Open MPI, MPICH and MVAPICH. HPC Cluster Suite is also fully compatible with Intel MPI (sold separately).

Scientific libraries include LAPACK, ScaLAPACK, BLAS, netcdf, netcdf-devel, hdf5, fftw, fftw-devel, atlas, atlas-devel, GMP, Global Arrays and MKL. The suite also supports the following compilers and optimization and profiling tools: GNU C, C++ and Fortran (gfortran), Open64 (PathScale compiler), Intel Cluster Studio, Allinea DDT, Intel VTune, PAPI and TAU. All commercial products are purchased separately, but their compatibility and integration are guaranteed.

Another important component is FEFS (Fujitsu Exabyte File System), a parallel file system developed by Fujitsu on the basis of Lustre. The word "Exabyte" in its name is no accident. The maximum size of both the file system and a single file is 8 EB, and parallel I/O lets it reach speeds on the order of TB/s.
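Some simple arithmetic puts these figures in perspective. The sketch rests on two assumptions that the source does not state: that "8 EB" means 8 EiB (units of 2^60 bytes), and a sustained aggregate throughput of exactly 1 TB/s. Under the first assumption, 8 EiB is exactly 2^63 bytes. That size coincides with the range of byte offsets addressable by a signed 64-bit integer.

```python
EIB = 2**60          # one exbibyte; assumes "8 EB" is meant in binary units
TB_PER_S = 10**12    # assumed sustained aggregate throughput: 1 TB/s (decimal)
SECONDS_PER_DAY = 86_400

max_file_bytes = 8 * EIB
print(max_file_bytes == 2**63)  # True: the largest offset, 2**63 - 1, fits a signed 64-bit integer


def read_time_s(nbytes, bytes_per_s=TB_PER_S):
    """Idealized time to stream nbytes at a constant aggregate rate."""
    return nbytes / bytes_per_s


print(read_time_s(10**15))                                       # 1 PB: 1000.0 s
print(round(read_time_s(max_file_bytes) / SECONDS_PER_DAY, 1))  # 8 EiB: 106.8 days
```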

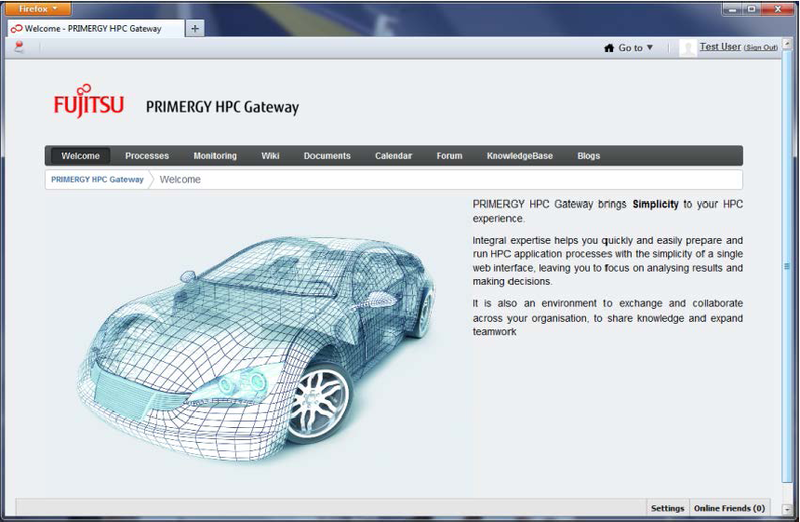

The HCS graphical user interface, HPC Gateway, is a portal built on Liferay. It lets users and groups collaborate through integrated blogs, a forum, a wiki, a knowledge base and other components.

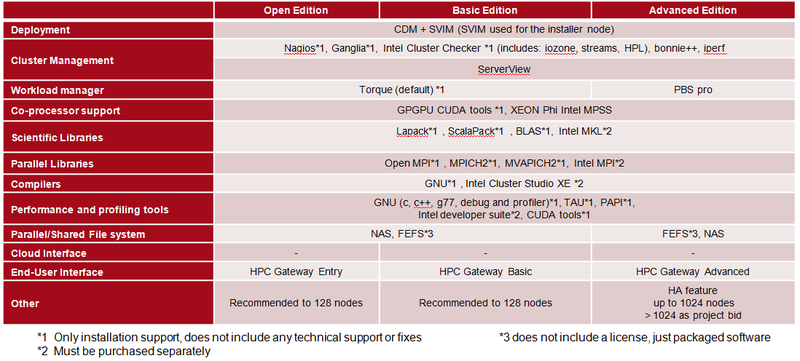

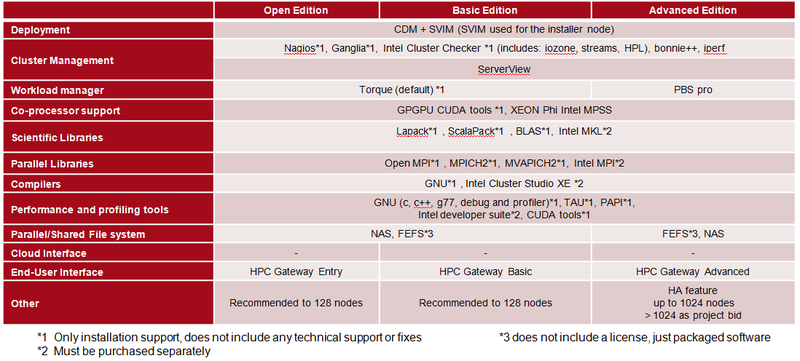

HPC Cluster Suite Editions

HPC Cluster Suite is available in three editions: Open, Basic and Advanced. They differ in the bundled software, in update and technical support subscriptions, and in the maximum number of compute nodes per cluster. The software included in each edition is shown in the table below.

All editions except Open are offered with one-, three- and five-year subscriptions. The Open edition has no technical support subscription and only limited access to updates. It also supports at most 128 nodes per cluster, while the other editions have no such limit and in practice allow an unlimited number of nodes. The final price of an HCS package depends on the edition, the market segment (academic or commercial license) and the number of nodes in the cluster.

Source: https://habr.com/ru/post/183320/
