Infrastructure of a fault-tolerant Tier III data center

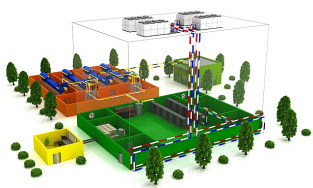

In the middle of the first floor of the "Compressor" data center there are two machine rooms, and directly above them, on the second floor, there are two more. Each room supplies 1 MW of electrical power to the racks. The first floor also houses a transformer substation, a distribution point and an electrical room. At the top left of the diagram are the staff amenities and a security room. To the right of the machine rooms is the cooling system room (the pumping station). Along the sides of the machine halls run corridors with fan coils, and in the center there is a corridor with switchboards.

The second floor basically repeats the layout of the first floor:


On the second floor, UPSs and batteries take the place of the transformer substations. The refrigeration part of the second floor is the pumping station of the glycol circuit (the second cooling circuit). In addition, to the right of the machine rooms there is a battery of storage tanks holding 100 cubic meters, which provides 15 minutes of autonomous cooling for the data center (if the external power supply is lost, already chilled water from the tanks is fed into the cooling circuit to cool the machine rooms).
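The 15-minute figure can be sanity-checked with the heat balance Q = m·c_p·ΔT. The sketch below is only a back-of-the-envelope estimate under assumptions that are not in the post: water density 1000 kg/m³, c_p = 4.186 kJ/(kg·K), and a usable temperature rise of 5 K borrowed from the 13/18 °C supply/return regime described in the Cooling section. The post does not say which temperature difference was used for the tanks or whether the water already in the circuit is counted.

```python
# Back-of-the-envelope cooling ride-through of a chilled-water reserve.
# Q = m * c_p * dT; ride-through time = Q / heat load.
# ASSUMPTIONS (not from the post): density 1000 kg/m^3,
# c_p = 4.186 kJ/(kg*K), usable temperature rise 5 K (13 -> 18 C).

WATER_DENSITY_KG_PER_M3 = 1000.0
WATER_CP_KJ_PER_KG_K = 4.186


def stored_cooling_kj(volume_m3: float, delta_t_k: float) -> float:
    """Heat the water can absorb while warming by delta_t_k kelvin."""
    mass_kg = volume_m3 * WATER_DENSITY_KG_PER_M3
    return mass_kg * WATER_CP_KJ_PER_KG_K * delta_t_k


def ride_through_min(volume_m3: float, delta_t_k: float, load_kw: float) -> float:
    """Minutes the reserve can absorb a constant heat load (1 kW = 1 kJ/s)."""
    return stored_cooling_kj(volume_m3, delta_t_k) / load_kw / 60.0


def sustainable_load_kw(volume_m3: float, delta_t_k: float, minutes: float) -> float:
    """Constant heat load the reserve can absorb for the given duration."""
    return stored_cooling_kj(volume_m3, delta_t_k) / (minutes * 60.0)


if __name__ == "__main__":
    # 100 m^3 of water, 5 K usable rise, 15-minute target
    print(f"{sustainable_load_kw(100, 5, 15):.0f} kW for 15 min")  # prints "2326 kW for 15 min"
```

Under these assumptions 100 m³ absorbs roughly 2.3 MW for 15 minutes; the result scales linearly with the usable temperature difference and the water volume, so a colder tank or extra water in the circuit raises it proportionally.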

Data center layout

Here is the first post about the construction of this data center.

Introduction

Before going further, let me briefly describe the situation. CROC currently has three data centers, arranged as follows:

The first two are in our office and under the adjacent parking garage, respectively. The third ("Compressor" itself) is farther away but still in Moscow, because the capital has well-developed infrastructure and good communication channels. It is located so that a system administrator or engineer can reach it in 40-50 minutes from anywhere in the city, including from the center by metro or by car.

In total, we have taken part in more than 60 data center launches for various companies in Russia: in some projects we did a lot, in others we consulted, and in others still we performed only a separate scope of work. We have accumulated a great deal of experience. But it all began simply: the first data center was a pilot built with all the classic solutions. On its basis we understood for ourselves how promising this area is and how much demand there is for outsourced data centers. Then we began to design and build the "Compressor" data center. Along the way, we got the opportunity to build another, not very large data center under the parking garage. There, for the first time in Russia, we used a DDIBP (a diesel-dynamic UPS, a very interesting kind of UPS in which a huge rotor stores kinetic energy); in that data center, as well as at Compressor, we also tested several new pieces of equipment.

The first data center: 90 racks, 1 MW.

The second data center: 110 racks, 2 MW.

The "Compressor" data center: 800 racks, 8 MW.

Key parameters of the "Compressor" data center

- Fault tolerance: Tier III, confirmed by the Uptime Institute,

- Cooling: N+1, 5 kW per rack on average (there are also 30 kW racks, placed next to less powerful ones in the machine room),

- Power supply: 2N; UPS autonomy of 15 minutes; diesel generator sets run 24 hours without refueling,

- Capacity: 800 racks,

- Warehouse space and premises for customer staff,

- Guarded territory,

- Company-owned building,

- Connected by a fiber-optic ring to the CROC data center network,

- 6000 kW total cooling capacity, of which 1500 kW is in reserve,

- End-to-end N+1 (3+1) redundancy of the cooling system,

- Average annual PUE no worse than 1.45,

- 15-minute cold reserve if external power is lost,

- Outdoor temperature range of -36 to +37 °C (the absolute minimum and maximum recorded in Moscow over the past 10 years).
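The N+1 figures in the list can be checked with one rule: take the largest single unit out of service and see whether the rest still covers the load. The sketch below assumes the 3+1 cooling group consists of four equal 1500 kW units (implied by 6000 kW total with 1500 kW in reserve, but not stated outright) and treats the IT load of 800 racks at 5 kW as the whole cooling load, which is a simplification.

```python
# N+1 check: with the largest single unit out of service,
# does the remaining capacity still cover the design load?
# ASSUMPTION: four equal 1500 kW cooling units (3 + 1); the IT load
# (800 racks x 5 kW average) is treated as the entire heat load.


def n_plus_1_ok(unit_capacities_kw: list[float], load_kw: float) -> bool:
    """True if total capacity minus the largest unit still covers the load."""
    remaining = sum(unit_capacities_kw) - max(unit_capacities_kw)
    return remaining >= load_kw


cooling_units_kw = [1500.0] * 4  # 3 working + 1 standby
it_load_kw = 800 * 5.0           # 4000 kW

print(sum(cooling_units_kw))                          # 6000.0 installed
print(sum(cooling_units_kw) - max(cooling_units_kw))  # 4500.0 with one unit out
print(n_plus_1_ok(cooling_units_kw, it_load_kw))      # True
```

Removing the largest unit rather than an arbitrary one keeps the check valid even if the units were not identical.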

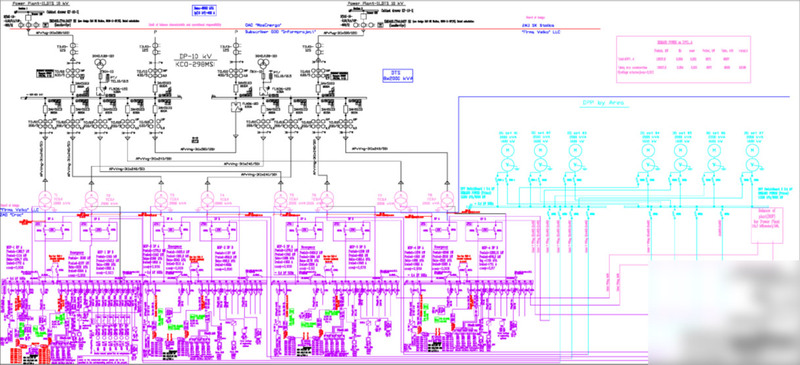

Power supply

Sorry, I was warned that if anything on the diagram were legible, the security people would shoot me, so this is all I can show.

Power supply

- 8 MVA maximum power consumption of the data center,

- 4 MW IT power consumption (4 × 200 × 5 kW),

- Power taken directly from the generating plant (CHP-11) via two independent cable lines,

- PUE, measured from the total consumption of the data center (all consumers included) to the consumption of the IT racks: 1.35-1.45 in winter (on some days as low as 1.25) and 1.45-1.85 in summer. The planned annual average is 1.45, and we are doing slightly better than planned,

- Russia's largest installation of containerized FG Wilson diesel generator sets (DGSs),

- 7 DGSs of 2000 kVA each,

- Parallel operation of the DGSs on 2 buses,

- 2N redundancy of distribution paths,

- N+1 redundancy of the DGSs,

- Fuel reserve for 24 hours.
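PUE is the ratio of total facility power to IT power, so the IT figure and the measured PUE range above together give the implied total draw. The sketch assumes the IT load is at its full 4 × 200 × 5 kW; the real PUE measurements were taken at whatever load the halls actually carried.

```python
# PUE = total facility power / IT power.
# IT power per the list above: 4 halls x 200 racks x 5 kW.
# ASSUMPTION: full IT load; real measurements are at the actual load.


def it_power_kw(halls: int, racks_per_hall: int, kw_per_rack: float) -> float:
    return halls * racks_per_hall * kw_per_rack


def facility_power_kw(it_kw: float, pue: float) -> float:
    """Total facility draw implied by a given PUE at a given IT load."""
    return it_kw * pue


def pue(total_kw: float, it_kw: float) -> float:
    return total_kw / it_kw


it_kw = it_power_kw(4, 200, 5.0)  # 4000.0 kW
for season, value in [("best winter day", 1.25), ("annual plan", 1.45), ("summer worst", 1.85)]:
    # 5000, 5800 and 7400 kW respectively
    print(f"{season}: PUE {value} -> {facility_power_kw(it_kw, value):.0f} kW total")
```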

Uninterrupted power supply

- Russia's largest installation of GE Digital Energy UPSs,

- 38 GE DE SG Series units, 300 kVA each,

- Autonomy: 15 min,

- UPS redundancy: 2N and N+1.

Equipment

- Electrical equipment from Schneider Electric,

- Sepam microprocessor-based relay protection,

- Prisma Plus distribution boards, P and G series,

- Canalis KTA busbar trunking,

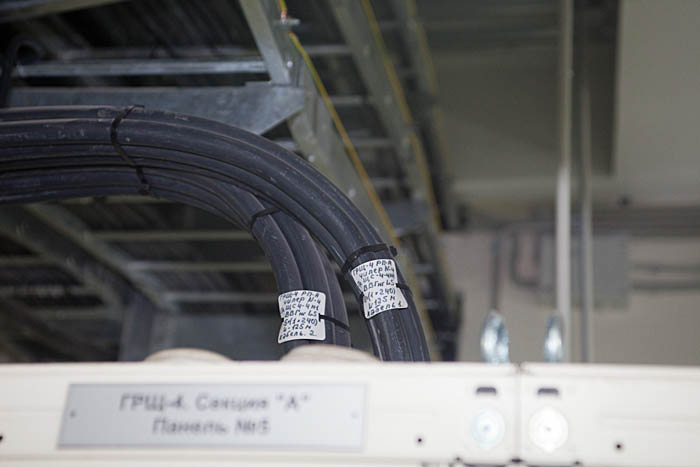

- Cables: more than 100 km from domestic manufacturers, with cross-sections from 1.5 to 400 mm² (some of them cannot be bent by hand, only with a special tool).

The full 8 MW is reached at an outdoor temperature of +37 °C with the machine rooms fully loaded. The input is two lines from the CHP plant; we had to modify their supply cells and lay the infrastructure to the data center. Then come 8 transformers in 4 groups of 2. We tried to minimize the number of switching devices: in Russia, a single 2.5 kA circuit breaker costs more than a transformer, so we optimized the scheme for minimum cost. Each machine hall is currently served by two independent 2,000 kVA transformers. The odd-numbered transformers feed the chillers and cooling towers, the even-numbered ones feed the fan coils. We can switch off any transformer without disturbing the operation of the data center. On the right of the diagram are the 7 backup diesel power plants, 2,000 kVA each. The fuel supply is stored in two 25 m³ tanks.

The UPSs are classic static units: 38 of them, 300 kVA each. Redundancy is 2N for the machine rooms and N+1 for the engineering load. They provide 15 minutes of uninterrupted power.
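The post gives the total (38 × 300 kVA) and the two schemes, but not how the units are split between the machine rooms and the engineering load. As a generic illustration of why 2N costs more modules than N+1, the sketch below sizes identical modules for a hypothetical 1000 kVA load; the load figure is made up, and even load sharing with no derating is assumed.

```python
import math

# Module count for a load under two redundancy schemes.
# ASSUMPTIONS (hypothetical): load in kVA, identical modules,
# even load sharing, no derating.


def modules_n_plus_1(load_kva: float, module_kva: float) -> int:
    n = math.ceil(load_kva / module_kva)  # N modules carry the load
    return n + 1                          # plus one spare


def modules_2n(load_kva: float, module_kva: float) -> int:
    n = math.ceil(load_kva / module_kva)
    return 2 * n                          # two complete independent sets


# Hypothetical 1000 kVA load on 300 kVA modules
print(modules_n_plus_1(1000, 300))  # 5
print(modules_2n(1000, 300))        # 8
```

2N tolerates the loss of an entire side (a whole set of modules plus its distribution path), which is why it is used for the IT load, while N+1 only covers the loss of one module.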

The power transformers are domestic and of quite high quality: 8 in total, made by the Podolsk transformer plant. They work fine, no complaints. The cables are also domestic, but we carefully checked every cable on delivery, because it is no secret that in large batches our factories can let defects slip through. We fought for every meter.

Cooling

The cooling system is a balance between environmental friendliness, price and efficiency. Yes, we like eco-friendly solutions, and even back then we thought about that, not just about money.

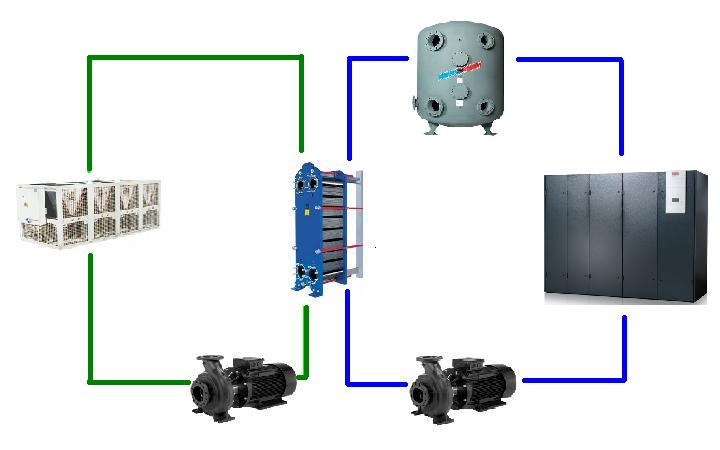

The system has two circuits. The first circuit holds 200 tons of water: if it leaks, there is no problem, and water also has good thermal properties. Our storage tanks are made of reinforced concrete, and the pressure is created by the natural water column (the system is open).

We chose high water temperatures to minimize losses from moisture condensing on the heat exchangers: in our case, 13 °C on the supply line and 18 °C on the return. In future data centers (we keep building them in Russia) we want to raise the temperatures further; there is room to go.

The outer circuit is filled with ethylene glycol. The chillers and dry coolers are connected in series, which widens the temperature range for free cooling: the dry coolers can remove heat in free-cooling mode at outdoor temperatures up to almost +15 °C, and 100% free cooling at full power is available at +5 °C and below.
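The series arrangement gives three operating regimes depending on outdoor temperature. The sketch below maps temperature to regime using the thresholds quoted above (+5 °C and +15 °C); the exact switch-over points are simplified, and a real controller would also use hysteresis and the actual glycol temperatures rather than outdoor air alone, which is an assumption not covered by the post.

```python
# Cooling regime selected from outdoor temperature.
# Thresholds from the text: full free cooling at +5 C and below,
# partial free cooling up to roughly +15 C. Exact switch points and
# the absence of hysteresis are simplifying assumptions.

FULL_FREE_COOLING_MAX_C = 5.0
PARTIAL_FREE_COOLING_MAX_C = 15.0


def cooling_mode(outdoor_c: float) -> str:
    if outdoor_c <= FULL_FREE_COOLING_MAX_C:
        return "full free cooling"     # dry coolers carry the whole load
    if outdoor_c < PARTIAL_FREE_COOLING_MAX_C:
        return "partial free cooling"  # dry coolers pre-cool, chillers top up
    return "mechanical cooling"        # chillers carry the load


for t in (-36, 5, 10, 15, 37):
    print(t, cooling_mode(t))
```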

We used a computer-aided design system, which allowed us to optimize the final scheme. During testing we obtained all the design values for temperature, pressure and so on quite precisely and without difficulty. Doing this by hand would have been hard because the piping is so heavily branched.

Main fan coil parameters:

- 64 STULZ fan coil units serving 4000 kW: 48 duty + 16 standby. To save energy, we use everything that lets the system run on warmer water,

- Water 13/18 °C,

- Humidifier on every 4th unit,

- Cooling capacity 97 kW per unit (sensible capacity equals total capacity, Q_sens = Q_total),

- Airflow 28,800 m³/h.
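The fan coil figures can be cross-checked with Q = ṁ·c_p·ΔT on both the water and air sides. The fluid properties below are textbook approximations, not values from the post: water c_p = 4.186 kJ/(kg·K) at 1000 kg/m³, air c_p = 1.005 kJ/(kg·K) at 1.2 kg/m³. Because sensible capacity equals total capacity, the full 97 kW can be used on the air side.

```python
# Cross-check of the fan coil figures with Q = m_dot * c_p * dT.
# ASSUMED properties (not from the post): water c_p 4.186 kJ/(kg*K),
# density 1000 kg/m^3; air c_p 1.005 kJ/(kg*K), density 1.2 kg/m^3.

WATER_CP = 4.186    # kJ/(kg*K)
WATER_RHO = 1000.0  # kg/m^3
AIR_CP = 1.005      # kJ/(kg*K)
AIR_RHO = 1.2       # kg/m^3


def water_flow_m3h(duty_kw: float, t_supply_c: float, t_return_c: float) -> float:
    """Water flow needed to carry duty_kw at the given supply/return temperatures."""
    kg_per_s = duty_kw / (WATER_CP * (t_return_c - t_supply_c))
    return kg_per_s / WATER_RHO * 3600.0


def air_delta_t_k(duty_kw: float, airflow_m3h: float) -> float:
    """Air temperature drop across the coil for a given sensible duty."""
    kg_per_s = airflow_m3h / 3600.0 * AIR_RHO
    return duty_kw / (kg_per_s * AIR_CP)


duty_units = 48
print(duty_units * 97)                       # 4656 kW from the duty units
print(round(water_flow_m3h(97, 13, 18), 1))  # 16.7 m^3/h per unit
print(round(air_delta_t_k(97, 28_800), 1))   # 10.1 K per unit
```

The 48 duty units alone provide about 4.66 MW against the 4000 kW they serve, and the roughly 10 K air temperature drop is consistent with the high-temperature water regime.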

Suppliers:

- Chillers & Cooling Towers: Nordvent,

- Ventilation equipment: LENNOX,

- Pumping equipment: GRUNDFOS,

- Heat exchangers: Alfa Laval,

- Valves: BROEN, GROSS.

There is nothing under the raised floor except the pipes of the gas fire-suppression system, and the underfloor space is not zoned: we supply air to the whole volume. All chillers are air-cooled, combined with "dry" cooling towers (dry coolers) connected in series in the circuit (the dry coolers come first in the flow, then the chillers). This extends the operating range of the system in full and partial free-cooling modes.

In the previous post someone asked why we chose raised-floor air distribution, so I will explain in more detail. Raised-floor distribution is quite efficient, convenient and structurally simple for almost all modern data center applications. One of the goals we set when designing the engineering systems was to have no "outside" (non-tenant) engineering equipment inside the machine rooms. This matters because, for example, when a bank places racks with us, it most often installs special fencing right in the machine room, and any maintenance of equipment inside the client's area means calling in their security staff. Our customers' security concerns come first.

The second goal was to have no water in or above the machine rooms (no in-row coolers and no ceiling-mounted cooling units). Raised-floor distribution let us achieve both goals, because our fan coils were moved out of the machine halls into dedicated side corridors.

Another question concerned the "megahot" racks. They are cooled the same way as the rest; next to them we place "empty" racks fitted with blanking panels. The reasoning is simple: under our conditions, one perforated raised-floor tile can deliver enough air to remove roughly 5 kW of heat (one "average" server cabinet). If a rack dissipates 30 kW, it needs 6 tiles.
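The tile rule above is a simple ceiling division. The sketch uses the post's figure of about 5 kW per perforated tile; that number depends on their tile type and underfloor pressure, so it is kept as a parameter rather than a universal constant.

```python
import math

# Perforated tiles a rack needs, using the rule of thumb from the text:
# one tile delivers enough air for roughly 5 kW under their conditions.

KW_PER_TILE = 5.0


def tiles_needed(rack_kw: float, kw_per_tile: float = KW_PER_TILE) -> int:
    return math.ceil(rack_kw / kw_per_tile)


for rack_kw in (3, 5, 12, 30):
    print(f"{rack_kw} kW rack -> {tiles_needed(rack_kw)} tile(s)")
```

A 30 kW rack therefore needs 6 tiles' worth of airflow, which is why the neighbouring positions are left as empty racks with blanking panels.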

Dust protection

Air handling

In the post about building this data center, readers asked about dust protection. It is actually quite simple, but I will go into more detail. Several measures are needed:

- First, everything has to be cleaned out after construction and equipment installation. For this we brought in a specialized cleaning company, which cleaned everything as thoroughly as if it were setting up an alien quarantine.

- Second, once there is no dust inside, it is important not to let any more into the data center. Air in the machine rooms is filtered by the EU4 filters of the fan coils, so dust can get in from outside only with visitors.

- Third, any dust brought in by visitors is caught by the same filters, since the air in the machine rooms circulates in an almost closed volume with a very high air change rate.

- And fourth, air is supplied to the machine halls by a dedicated air handling unit (also with filters, of course), so the halls are kept at a slight overpressure that prevents dust from being drawn in from outside.

Certification

In short, there are two approaches to certification: "build as we say and everything will be OK" under TIA, and "build to the requirements and we will check the facility" under the Uptime Institute (UI). We certified using the second methodology, that is, we ran real tests on the data center, which is quite rare in Russia. The list of Tier III certified data centers can be found here: uptimeinstitute.com/TierCertification/certMaps.php.

More on the difference between the certification approaches: habrahabr.ru/company/croc/blog/157099.

Differences Between TIER I - TIER IV Levels

Uptime Tier III means concurrent maintainability: routine maintenance and failures do not bring the data center down or degrade its output parameters.

Here is how it went. First, Uptime receives the design documents in English. They issue recommendations, and if everything is in order, they issue a certificate for the design. ATD specialists (Accredited Tier Designers, certified by UI) can be a great help at this stage, and they also vouch for the design's compliance with the Institute's requirements. This is a paper certification, that is, of the design only.

Next comes the much harder part: certifying the built data center to obtain the facility certificate. Once the design is approved and construction is finished, UI engineers come to the site. They spent 4 days with us running comprehensive tests that simulated a whole series of failures and routine maintenance operations. The key to success is full compliance of the data center with the design, plus an experienced operations team. If you have not run "training drills" beforehand, there is a high chance of failing the test. If you need help preparing training programs and staff, you can again bring in ATD specialists.

In general, when designing and building a mission-critical data center, it is very important to have the best specialists in every field. As a rule, these are currently engineers of the Soviet school, who know their subject extremely deeply and have enormous practical experience. A new generation is also growing up, fortunately, since IT in the CIS is developing quite rapidly. The project team also needs someone from the business side who makes sure the project's goals match the business goals; this person will also help bring in the best resources when needed.

You also need proven subcontractors. Beyond the work itself, keep in mind that the comprehensive tests will be run several times, and it helps a lot if the contractors take part.

It is very important to involve the operations team in the project itself, so that they don't have to learn everything from scratch later. It is good when the operations team understands from the inside what works and how.

That's all. More pictures are in the photo tour. The facility's specifications are at www.croc.ru/dc.

Source: https://habr.com/ru/post/183154/
