An introduction to shaders using GPUImage as an example

In this article I describe how to build an iPhone application that processes video from the device's camera in real time. We will use the GPUImage framework, write our own OpenGL ES shader, and look at how image-processing filters work.

GPUImage framework

GPUImage is an iOS library written by Brad Larson and distributed under a BSD-style license. It lets you use the GPU to apply filters and other effects to movies, live video and still images.


GPU vs CPU

Every iPhone has two processors, a CPU and a GPU, and each has its own strengths and weaknesses.

When you write C or Objective-C code in Xcode, you create instructions that run exclusively on the CPU. The GPU, by contrast, is a specialized chip. It is particularly well suited to computations that can be split into many small, independent operations, such as graphics rendering. GPU instructions are radically different from CPU instructions, so GPU code is written differently: we use OpenGL and write the shaders in its shading language, GLSL.

Comparing video rendering performance on the CPU and the GPU shows a huge difference:

Frame rate, CPU vs. GPU (higher FPS is better)

| Calculations | GPU FPS | CPU FPS | GPU/CPU speedup |
|---|---|---|---|
| Threshold × 1 | 60.00 | 4.21 | 14.3 |
| Threshold × 2 | 33.63 | 2.36 | 14.3 |
| Threshold × 100 | 1.45 | 0.05 | 28.7 |
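The article does not show the benchmark code. The C sketch below, built on our own assumptions, shows what a single CPU threshold pass involves. We assume an 8-bit RGBA buffer, Rec. 601 luminance weights and one cutoff value, and the name `threshold_rgba8` is hypothetical. The point is the loop: the CPU visits every pixel in turn, even though no iteration depends on any other. That is exactly the kind of work a GPU spreads across many cores at once.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CPU luminance threshold on an 8-bit RGBA buffer, in place.
 * Each pixel becomes black or white depending on whether its luminance
 * (Rec. 601 weights, an assumption) is above `cutoff` (0.0-1.0).
 * Alpha is left untouched. No iteration depends on another, so a GPU
 * can run them all in parallel; here they run one after another. */
void threshold_rgba8(uint8_t *pixels, size_t pixel_count, float cutoff)
{
    assert(pixels != NULL || pixel_count == 0);
    for (size_t i = 0; i < pixel_count; i++) {
        uint8_t *p = pixels + 4 * i;
        float luminance = (0.299f * p[0] + 0.587f * p[1] + 0.114f * p[2]) / 255.0f;
        uint8_t value = luminance > cutoff ? 255 : 0;
        p[0] = p[1] = p[2] = value;
    }
}
```

At 640×480, this loop body runs 307,200 times per frame for every threshold pass.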

GPUImage vs Core Image

Core Image is Apple's standard framework for near-real-time image and video processing. It appeared in iOS 5, where its set of filters was fairly small (though sufficient for most tasks), and the number of filters grew significantly with iOS 6. Core Image can process images on either the CPU or the GPU.

The main advantages of GPUImage over Core Image:

- GPUImage lets you write your own filters (at the time of writing, Core Image allowed custom filters only on OS X, not on iOS);

- GPUImage is faster than Core Image;

- GPUImage uses GLSL instead of its own language;

- GPUImage is open source.

GPUImage is also a good way to start learning OpenGL, since it comes with plenty of examples, documentation and ready-made solutions. You can move straight on to more exciting things, such as writing new filters, and see results quickly.

GPUImage structure

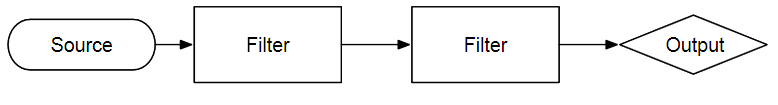

At its core, GPUImage is an Objective-C abstraction over the rendering pipeline. Images come from an external source such as the camera, the network or disk. They are loaded and modified as they pass through a chain of filters. The result is output as an image (UIImage), rendered directly to the screen (via GPUImageView), or delivered as a raw data stream.
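The chaining idea can be modelled in a few lines of C. This is a CPU-side sketch, not GPUImage's API. The names `channel_filter`, `invert`, `halve` and `run_chain` are hypothetical, and each "filter" here transforms a single channel value rather than a whole texture. In GPUImage itself, the links of the chain are made with `addTarget:`.

```c
#include <assert.h>
#include <stddef.h>

/* A hypothetical "filter": maps one normalized channel value (0.0-1.0)
 * to another. Real GPUImage filters process whole textures on the GPU. */
typedef float (*channel_filter)(float value);

float invert(float v) { return 1.0f - v; }
float halve(float v) { return 0.5f * v; }

/* Pass a value through each filter in order:
 * source -> filter 1 -> filter 2 -> ... -> output. */
float run_chain(const channel_filter *chain, size_t count, float value)
{
    assert(chain != NULL || count == 0);
    for (size_t i = 0; i < count; i++) {
        value = chain[i](value);
    }
    return value;
}
```

Order matters. Inverting and then halving 0.2 gives 0.4, while halving and then inverting gives 0.9. The same is true of a GPUImage filter chain.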

In other words, the GPUImage API holds thousands of potential camera apps, just waiting for the right combination of filters and a little imagination.

For example, you can apply a Color Levels filter to an image from a video camera to simulate various types of color blindness and display them in real time.

Color levels

```objc
GPUImageVideoCamera *videoCamera = [[GPUImageVideoCamera alloc] initWithSessionPreset:AVCaptureSessionPreset640x480
                                                                       cameraPosition:AVCaptureDevicePositionBack];
videoCamera.outputImageOrientation = UIInterfaceOrientationPortrait;

// GPUImageLevelsFilter sets up its own shader, so a plain init is enough;
// the variable must have this type to call the setRedMin:... methods
GPUImageLevelsFilter *filter = [[GPUImageLevelsFilter alloc] init];
[filter setRedMin:0.299 gamma:1.0 max:1.0 minOut:0.0 maxOut:1.0];
[filter setGreenMin:0.587 gamma:1.0 max:1.0 minOut:0.0 maxOut:1.0];
[filter setBlueMin:0.114 gamma:1.0 max:1.0 minOut:0.0 maxOut:1.0];
[videoCamera addTarget:filter];

GPUImageView *filteredVideoView = [[GPUImageView alloc] initWithFrame:self.view.bounds];
[filter addTarget:filteredVideoView];
[self.view addSubview:filteredVideoView];

// Keep a strong reference to videoCamera (e.g. in an instance variable);
// under ARC a local variable may be released when this method returns
[videoCamera startCameraCapture];
```

Seriously, the filter showcase application that ships as an example with GPUImage could be published on the App Store for about $3.99 without any changes. Add Twitter integration and a couple of sound effects, and you could raise the price to $6.99.

GPUImage vertex shaders

A shader is a program for a graphics processor that controls the behavior of a single stage of the graphics pipeline and processes the corresponding input data.

To better understand what happens in the main part of the article, let's briefly touch on vertex shaders.

When working with an image, we deal with two-dimensional objects most of the time. The image is displayed on a plane, which is a rectangle. OpenGL needs this plane because everything it draws exists in three-dimensional space: before we can draw anything, we must first create a surface to draw on. OpenGL ES 2.0 can only draw triangles (plus points and lines, but not rectangles), so the plane is built from two triangles.
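Here is what "a plane built from two triangles" looks like as data. This C sketch lists the four corners of a full-screen rectangle in clip space (-1.0 to 1.0 on each axis) in triangle-strip order. As far as we can tell, this is the layout GPUImageFilter uses for its image plane, drawn with `glDrawArrays(GL_TRIANGLE_STRIP, 0, 4)`. In a triangle strip, vertices 0-1-2 form the first triangle and vertices 1-2-3 form the second. The helper functions are hypothetical. They only check that the two triangles together cover the rectangle's area of 2 × 2 = 4.

```c
#include <assert.h>
#include <math.h>

/* Full-screen quad in clip space as a triangle strip (assumed layout):
 * bottom-left, bottom-right, top-left, top-right. */
static const float quad_vertices[4][2] = {
    {-1.0f, -1.0f},
    { 1.0f, -1.0f},
    {-1.0f,  1.0f},
    { 1.0f,  1.0f},
};

/* Area of a 2D triangle via the cross product of two edges. */
float triangle_area(const float a[2], const float b[2], const float c[2])
{
    float cross = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]);
    return fabsf(cross) * 0.5f;
}

/* Strip triangles are (0,1,2) and (1,2,3); their areas should add up
 * to the area of the 2 x 2 rectangle. */
float strip_area(void)
{
    float first = triangle_area(quad_vertices[0], quad_vertices[1], quad_vertices[2]);
    float second = triangle_area(quad_vertices[1], quad_vertices[2], quad_vertices[3]);
    assert(first > 0.0f && second > 0.0f);
    return first + second;
}
```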

A vertex shader is a small program that processes ONE vertex at a time.

This is what the standard vertex shader in GPUImage looks like:

Vertex shader

```glsl
attribute vec4 position;
attribute vec4 inputTextureCoordinate;

varying vec2 textureCoordinate;

void main()
{
    gl_Position = position;
    textureCoordinate = inputTextureCoordinate.xy;
}
```

Three kinds of variables are passed to shaders: attributes, varyings and uniforms. Attributes are supplied separately for each vertex: its position in space, texture coordinates (how the texture is mapped onto the shape), color, normal, and so on.

Varying variables connect the vertex shader to the fragment shader. They are declared in both shaders, and the vertex shader assigns their values, which are then passed to the fragment shader. The fragment shader, however, runs for every point on the whole shape, not just at the vertices, so these values are interpolated linearly between the vertices. For example, if the vertices on the left edge of the shape are white and those on the right edge are black, the shape is filled with a gradient from white to black.
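The interpolation the GPU performs for a varying can be written as a weighted sum. In this C sketch (`interpolate_varying` is a hypothetical helper), a fragment inside a triangle is described by barycentric weights w0, w1 and w2, which are non-negative and sum to 1. The varying's value at that fragment is the same weighted combination of the three vertex values. This is plain linear interpolation, which is what a flat, screen-aligned quad like GPUImage's receives. For perspective-projected 3D geometry, GPUs additionally apply perspective correction.

```c
#include <assert.h>

/* Linear (barycentric) interpolation of a varying across a triangle.
 * v0, v1, v2: values written by the vertex shader at each vertex.
 * w0, w1, w2: the fragment's barycentric weights (>= 0, summing to 1). */
float interpolate_varying(float v0, float v1, float v2,
                          float w0, float w1, float w2)
{
    float sum = w0 + w1 + w2;
    assert(w0 >= 0.0f && w1 >= 0.0f && w2 >= 0.0f);
    assert(sum > 0.999f && sum < 1.001f);
    return w0 * v0 + w1 * v1 + w2 * v2;
}
```

Take a fragment halfway along the edge between a white vertex (1.0) and a black one (0.0). Its weights are (0.5, 0.5, 0.0), so the interpolated value is 0.5, a mid-grey.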

Uniform variables let the shader communicate with the outside world (the host program). They have the same value for all vertices and fragments.

GPUImage passes two sets of coordinates to the shader: the coordinates of the plane itself and the texture coordinates. Normally we don't need to deal with this ourselves, so we won't go into detail.

Our filter will not define its own vertex shader. Instead, it will use the standard one from the GPUImageFilter class.

Creating a new GPUImage project

Let's create a new iPhone project: File → New → Project → Single View Application. Whether you keep storyboards and ARC enabled is up to you.

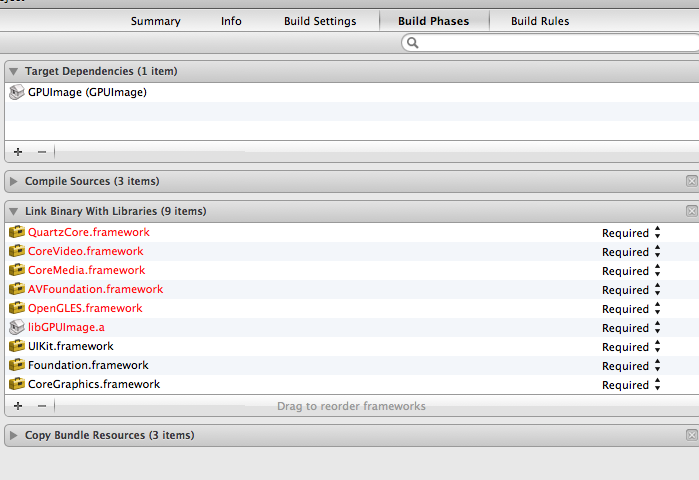

Next, add GPUImage to the project we created (right-click the folder with the framework → Add Files). Then add the system frameworks and libraries it depends on. The GPUImage README lists CoreMedia, CoreVideo, OpenGLES, AVFoundation and QuartzCore.

Finally, in the project build settings, add the -ObjC flag to Other Linker Flags and add the location of the GPUImage framework folder to Header Search Paths.

Now everything is ready, and we can start writing our own filter! We are going to create a polar pixellate shader and extend it by adding a curl and posterization (reducing the number of colors displayed on the screen).

Polar Pixellate Posterize Filter

Our filter will use the polar coordinate system to pixelate the incoming image.

The first thing to do is create a new class that inherits from GPUImageFilter. Let's call it GPUImagePolarPixellatePosterizeFilter.

GPUImageFilter

```objc
#import "GPUImageFilter.h"

// Class name must match the one used in the .m file and the view controller
@interface GPUImagePolarPixellatePosterizeFilter : GPUImageFilter
{
    GLint centerUniform, pixelSizeUniform;
}

// The center about which to apply the distortion, with a default of (0.5, 0.5)
@property(readwrite, nonatomic) CGPoint center;
// The amount of distortion to apply, from (-2.0, -2.0) to (2.0, 2.0), with a default of (0.05, 0.05)
@property(readwrite, nonatomic) CGSize pixelSize;

@end
```

This filter passes two uniform variables to its shader. The first, center, is the origin of the polar coordinate system. By default it is (0.5, 0.5), the center of the screen, and its uniform location is stored in centerUniform. Texture coordinates in OpenGL range from (0.0, 0.0) to (1.0, 1.0), with the origin in the lower left corner. The second, pixelSize, determines how large the "pixels" will be after the filter is applied. Since we work in polar coordinates, its x component is the step along the radius (the distance from center), and its y component is the angular step in radians.

Although GPUImageFilter takes care of configuring OpenGL and creating the necessary framebuffers, we still need to write the shader itself and pass it the uniform variables it needs.

Our first shader

Add the following code to the .m file, before the @implementation line:


```objc
NSString *const kGPUImagePolarPixellatePosterizeFragmentShaderString = SHADER_STRING
(
 varying highp vec2 textureCoordinate;

 uniform highp vec2 center;
 uniform highp vec2 pixelSize;
 uniform sampler2D inputImageTexture;

 void main()
 {
     highp vec2 normCoord = 2.0 * textureCoordinate - 1.0;
     highp vec2 normCenter = 2.0 * center - 1.0;

     normCoord -= normCenter;

     highp float r = length(normCoord); // to polar coords
     highp float phi = atan(normCoord.y, normCoord.x); // to polar coords

     r = r - mod(r, pixelSize.x) + 0.03;
     phi = phi - mod(phi, pixelSize.y);

     normCoord.x = r * cos(phi);
     normCoord.y = r * sin(phi);

     normCoord += normCenter;

     mediump vec2 textureCoordinateToUse = normCoord / 2.0 + 0.5;

     mediump vec4 color = texture2D(inputImageTexture, textureCoordinateToUse);
     color = color - mod(color, 0.1);

     gl_FragColor = color;
 }
);
```

The shader code is wrapped in the SHADER_STRING() macro, which turns it into an NSString.

A few words about data types and operations in GLSL. The main data types are int, float, vectors (vec2, vec3, vec4) and matrices (mat2, mat3, mat4). You can perform simple arithmetic on vectors and matrices, for example adding two vec2 values. You can also multiply a vector by a scalar of the matching type: for a float f and a vec2 v, f * v equals vec2(f * v.x, f * v.y). GLSL ES has no implicit int-to-float conversion, so an int must be converted first, as in float(i) * v. Swizzling is available too: vec4.xyz gives you a vec3. A full list of supported types can be found here.

Let's see what is going on here. The varying textureCoordinate of type vec2 comes from GPUImage's default vertex shader. The uniforms center and pixelSize are values we pass in from our filter class. Finally, inputImageTexture, of type sampler2D, is a uniform set by the superclass, GPUImageFilter. It is the two-dimensional texture holding the image we want to process.

You may have noticed that we mostly use the highp qualifier. It tells GLSL what precision to use for a variable. Higher precision gives more accurate results, but it is not always needed: for simple on-screen rendering, lower precision is often enough and lets calculations run a little faster. The precision qualifiers are lowp, mediump and highp. You can learn more about precision and its actual limits here.

Every shader has a main() function. The output of a fragment shader is the color of the fragment being processed. In our case, this color is sampled from the original image at textureCoordinateToUse. We compute that coordinate from textureCoordinate so that the image appears pixellated in the polar coordinate system.

The first thing we do is switch to polar coordinates. textureCoordinate lies in the range (0.0, 0.0) to (1.0, 1.0), and so does the center uniform. To describe the screen in polar coordinates, we need coordinates from (-1.0, -1.0) to (1.0, 1.0), and the first two lines perform this conversion. The third line subtracts normCenter from normCoord, which moves the origin of the coordinate system to the chosen center.

Next we compute the radius r and the angle phi and snap each one down to a multiple of its step. r - mod(r, pixelSize.x) rounds the radius down to a multiple of pixelSize.x (the extra 0.03 pushes it slightly outward), and phi - mod(phi, pixelSize.y) does the same for the angle. As a result, all fragments in the same ring segment sample the same point.

Finally, we convert back to Cartesian coordinates, shift the origin back to where it was and map the result into the (0.0, 0.0) to (1.0, 1.0) range needed for the texture lookup. The lookup itself is done by texture2D(), which takes two parameters: a two-dimensional texture (in our case inputImageTexture) and coordinates (textureCoordinateToUse).

Finally, we reduce the number of levels for red, green and blue (and alpha, but alpha is always 1.0 in our case) from 256 values per component (16.8 million colors) to 10 (1,000 colors).

That is the whole fragment shader, and it runs very fast. Doing the same work on the CPU would take much longer. In many cases, the GPU makes real-time video filtering possible where the CPU simply could not keep up.

Finishing the filter

Now that the shader is written, all that is left is the initializer and the setters for the uniform variables. During initialization, we pass the shader source to the superclass, look up the locations of the uniform variables and set some default values.

Add the following code after @implementation:


```objc
@synthesize center = _center;
@synthesize pixelSize = _pixelSize;

#pragma mark -
#pragma mark Initialization and teardown

- (id)init
{
    if (!(self = [super initWithFragmentShaderFromString:kGPUImagePolarPixellatePosterizeFragmentShaderString]))
    {
        return nil;
    }

    // Look up the locations of our uniforms in the compiled program
    pixelSizeUniform = [filterProgram uniformIndex:@"pixelSize"];
    centerUniform = [filterProgram uniformIndex:@"center"];

    // Defaults, so the filter works without any user input
    self.pixelSize = CGSizeMake(0.05, 0.05);
    self.center = CGPointMake(0.5, 0.5);

    return self;
}
```

When initWithFragmentShaderFromString: is called, our shader goes through the appropriate checks and is compiled, so that it is ready to run on the GPU. A custom vertex shader could be supplied in the same way (GPUImageFilter also has initWithVertexShaderFromString:fragmentShaderFromString:), and it would go through the same steps.

We must call [filterProgram uniformIndex:] for each uniform variable in our shader. This method returns the uniform's location as a GLint (an index, not a pointer), which we later use to set the variable's value.

Finally, we set some default values during the initialization phase so that our filter works without user interaction.

The last thing we need to do is implement the setters for our uniform variables (the getters are generated by @synthesize):


```objc
- (void)setPixelSize:(CGSize)pixelSize
{
    _pixelSize = pixelSize;
    [self setSize:pixelSize forUniform:pixelSizeUniform program:filterProgram];
}

- (void)setCenter:(CGPoint)newValue
{
    _center = newValue;
    [self setPoint:newValue forUniform:centerUniform program:filterProgram];
}
```

Create an application

Now let's build a simple video application. Open the view controller class that was created from the template earlier and change it as follows:


```objc
#import "JGViewController.h"
#import "GPUImage.h"
#import "GPUImagePolarPixellatePosterizeFilter.h"

@interface JGViewController ()
{
    GPUImageVideoCamera *vc;
    GPUImagePolarPixellatePosterizeFilter *ppf;
}
@end

@implementation JGViewController

- (void)viewDidLoad
{
    [super viewDidLoad];

    // Camera source: 640x480, back camera, portrait output
    vc = [[GPUImageVideoCamera alloc] initWithSessionPreset:AVCaptureSessionPreset640x480
                                             cameraPosition:AVCaptureDevicePositionBack];
    vc.outputImageOrientation = UIInterfaceOrientationPortrait;

    // Our custom filter, fed by the camera
    ppf = [[GPUImagePolarPixellatePosterizeFilter alloc] init];
    [vc addTarget:ppf];

    // On-screen output; it becomes the controller's root view
    GPUImageView *v = [[GPUImageView alloc] init];
    [ppf addTarget:v];
    self.view = v;

    [vc startCameraCapture];
}

// Map the touch position to pixelSize: x sets the radial step and
// y the angular step, each in the range 0.0-0.5
- (void)updatePixelSizeWithTouches:(NSSet *)touches
{
    CGPoint location = [[touches anyObject] locationInView:self.view];
    CGSize pixelS = CGSizeMake(location.x / self.view.bounds.size.width * 0.5,
                               location.y / self.view.bounds.size.height * 0.5);
    [ppf setPixelSize:pixelS];
}

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event
{
    [self updatePixelSizeWithTouches:touches];
}

- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event
{
    [self updatePixelSizeWithTouches:touches];
}

@end
```

We create a GPUImageVideoCamera with a specific resolution and camera position, create our GPUImagePolarPixellatePosterizeFilter, and connect the camera to the filter.

We then make a GPUImageView the root view of our view controller.

Our pipeline therefore looks like this: camera video → polar pixellation and posterization (GPUImagePolarPixellatePosterizeFilter) → GPUImageView, which displays the video on the phone screen.

At this point we can already run the application and see the filter working. But it would be nice to add some interactivity! For that we use the touchesBegan:withEvent: and touchesMoved:withEvent: methods, which track the user's touches and update the pixelSize uniform of our filter.

To get the smallest "pixels", touch the upper left corner of the image; for the largest, touch the lower right. Now you can experiment with the filter yourself and get very different results.
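The touch handlers map the touch position linearly to pixelSize. Writing the same arithmetic in plain C makes the ranges easy to check. `pixel_size_for_touch` and its `min_step` floor are our own additions, not part of the original code. The floor is there because a pixelSize of exactly 0.0, which you get by touching the very top or left edge, makes `mod(r, pixelSize.x)` undefined in GLSL.

```c
#include <assert.h>

typedef struct { float width, height; } pixel_size;

/* Same mapping as the touch handlers: x / width * 0.5 and
 * y / height * 0.5, so the top-left corner gives (0, 0) and the
 * bottom-right (0.5, 0.5). min_step is a hypothetical safeguard
 * (not in the original) that keeps both steps above zero. */
pixel_size pixel_size_for_touch(float x, float y, float view_width,
                                float view_height, float min_step)
{
    assert(view_width > 0.0f && view_height > 0.0f);
    pixel_size s = { x / view_width * 0.5f, y / view_height * 0.5f };
    if (s.width < min_step) s.width = min_step;
    if (s.height < min_step) s.height = min_step;
    return s;
}
```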

Congratulations! You wrote your first shader!

Other examples of image processing using shaders

Reducing the levels of red and green in the image, increasing the blue:


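```glsl
// Body of main() in a fragment shader that declares
// textureCoordinate and inputImageTexture as in our filter
lowp vec4 color = texture2D(inputImageTexture, textureCoordinate);
lowp vec4 alter = vec4(0.1, 0.5, 1.5, 1.0);
gl_FragColor = color * alter;
```

Brightness reduction: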


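```glsl
lowp vec4 textureColor = texture2D(inputImageTexture, textureCoordinate);
// Subtract 0.5 from each color channel, keep alpha unchanged
gl_FragColor = vec4((textureColor.rgb + vec3(-0.5)), textureColor.w);
```

A popular image blur (five equally weighted samples along the diagonal):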


```glsl
// inputTextureCoordinate is a vertex-shader attribute; in the fragment
// shader we sample around the textureCoordinate varying instead
mediump float texelWidthOffset = 0.01;
mediump float texelHeightOffset = 0.01;

mediump vec2 firstOffset = vec2(1.5 * texelWidthOffset, 1.5 * texelHeightOffset);
mediump vec2 secondOffset = vec2(3.5 * texelWidthOffset, 3.5 * texelHeightOffset);

mediump vec2 oneStepLeftTextureCoordinate = textureCoordinate - firstOffset;
mediump vec2 twoStepsLeftTextureCoordinate = textureCoordinate - secondOffset;
mediump vec2 oneStepRightTextureCoordinate = textureCoordinate + firstOffset;
mediump vec2 twoStepsRightTextureCoordinate = textureCoordinate + secondOffset;

// Five samples, each weighted 0.2 (the weights sum to 1.0)
mediump vec4 fragmentColor = texture2D(inputImageTexture, textureCoordinate) * 0.2;
fragmentColor += texture2D(inputImageTexture, oneStepLeftTextureCoordinate) * 0.2;
fragmentColor += texture2D(inputImageTexture, oneStepRightTextureCoordinate) * 0.2;
fragmentColor += texture2D(inputImageTexture, twoStepsLeftTextureCoordinate) * 0.2;
fragmentColor += texture2D(inputImageTexture, twoStepsRightTextureCoordinate) * 0.2;

gl_FragColor = fragmentColor;
```

Getting started with GPUImage is fairly easy, and the framework is powerful enough to bring your ideas to life. On top of that, GPUImage offers a dizzying number of filters, color adjustments, blend modes and visual effects that you may have only dreamed of (or never knew existed). You will find plenty of examples that use modern filters, including edge detection, fisheye and a ton of other cool stuff.
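To see what the five-tap blur snippet does to actual pixel values, here is a CPU sketch in C. It is our own simplification, not GPUImage code. `diagonal_blur` and `clamp_index` are hypothetical names, offsets are whole pixels (-2 to 2 along the diagonal) rather than the shader's 1.5 and 3.5 multiples of a normalized offset, and reads outside the image are clamped to the nearest edge pixel.

```c
#include <assert.h>
#include <stddef.h>

/* Clamp a possibly out-of-range index to [0, n - 1] (clamp-to-edge). */
static size_t clamp_index(long i, size_t n)
{
    if (i < 0) return 0;
    if ((size_t)i >= n) return n - 1;
    return (size_t)i;
}

/* Each output pixel is the average of itself and four neighbors along
 * the diagonal (two up-left, two down-right), each weighted 0.2.
 * src and dst are single-channel images of width * height floats. */
void diagonal_blur(const float *src, float *dst, size_t width, size_t height)
{
    assert(src != NULL && dst != NULL && width > 0 && height > 0);
    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            float sum = 0.0f;
            for (long k = -2; k <= 2; k++) {
                size_t sx = clamp_index((long)x + k, width);
                size_t sy = clamp_index((long)y + k, height);
                sum += 0.2f * src[sy * width + sx];
            }
            dst[y * width + x] = sum;
        }
    }
}
```

Because the five weights sum to 1.0, a flat image passes through essentially unchanged. A single bright pixel, however, is smeared into a diagonal streak of five pixels at 0.2 each.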

Sources

An introduction to OpenGL ES 2.0 (GLSL) and how the graphics pipeline works: one, two, three.

This article is a free adaptation and translation of: source 1, source 2

Source: https://habr.com/ru/post/181794/
