Overview of the new Violin flash storage system, which runs at close to DRAM speed

The manufacturer made three bold marketing statements:

- The system doesn't care whether it is reading or writing: performance is the same.

- On top of that, response time stays stable at 250-500 microseconds, even after a month of constant load.

- You can hot-swap any component, and nothing bad will happen.

To begin with, we divided the space into several dozen virtual volumes and launched a dozen applications writing 4 KB blocks in a 20/80 read/write mix (80% writes), then kept the module under load for 5 days. It turned out that marketing had lied: write latency was nowhere near the 1 ms stated in the presentation and averaged only 0.4 ms (with a 40/60 mix it got down to 0.25 ms).

Then came a test drive at our office for IT directors, and that is where the real problems began. In the invitation I had mentioned that once, during a Disaster Recovery demonstration, we had cut power to a live rack in the data center, so there was simply no chance of this event ending peacefully. The audience wanted blood, and I had to call in a service engineer with a screwdriver.

At 450k IOPS, I started by pulling out two fans. That barely impressed the audience, and besides, I wanted to get to one of the two controllers and see what Violin would say to that. With two fans gone, the system roared (it automatically spun up the remaining ones), so I only half-heard someone swear when the engineer simply pulled out one of the two controllers, and the hardware lost only about a third of its speed.


Traffic warning: diagrams and screenshots below the cut.

To explain what was going on here and where this speed comes from, I have to start from afar. If you know the architecture of modern flash storage systems well, skip straight to the system architecture. Otherwise stay here, especially if, as a representative of one large hosting company put it, "I know that it is fast, but I don't even know what it does."

Introduction

Once upon a time, there was no separate data storage system as such: the server simply had a hard disk on which all information was recorded. As the amount of data to be written kept growing, separate storage systems appeared, built on magnetic tape, disk arrays and, later, flash arrays.

Processor speeds grew much faster than drive speeds, and disk arrays gradually became a bottleneck. Given the physical limits of hard disks themselves, the industry started building huge, fast RAID arrays with extra redundancy and optimizing the algorithms that run before data is actually written. But you cannot jump higher than your head, and for several years speed did not fundamentally improve.

Then came the first flash drives. Here is a very interesting point: given the existing infrastructure, each flash drive looked to the rest of the architecture just like a regular hard disk, only faster. In other words, flash was plugged in where old hard drives used to be, and the entire technology stack up to the drive controller kept thinking it was working with a regular magnetic disk. This immediately meant a huge overhead of operations that flash does not need at all. For example, the storage cache with its optimization algorithms is completely unnecessary and only adds latency (and even when it is turned off, the data still passes through its chips). The classic sector-based write scheme also caused an entirely unnecessary slowdown. At the same time, the flash drives themselves used a different write scheme, so the work had to be done three times: translating the HDD interface to flash, writing in the flash way, and running a garbage-collector equivalent on every write. The approach did not change as SSDs spread through the market: the same problems with the bus, cache and write algorithms remained.

At some point, the Violin team looked at this picture and asked a simple question: if a flash memory chip runs at a speed closer to DRAM than to a classic HDD, and is non-volatile as well, why screw it into the existing storage architecture in place of classic disks? The only reason was the huge legacy base of low-level protocols and handlers. The catch was that the whole system would have to be developed from scratch, which meant a serious investment of time and money.

This did not stop Violin's engineers: they signed a contract with flash memory manufacturer Toshiba that gave them access to the chip architecture. After a lot of work, they managed to build a storage system that delivered huge access speeds at fantastically low latency, even compared to SSDs.

They had to get rid of the very concept of a disk in order to remove the whole part of the stack that still worked the old way. The result is something that cannot be called a flash drive: what we have on the table are bare chips that look more like DRAM.

Limitations of flash memory

First, the number of write cycles per cell is limited. I have seen early server flash drives die after a month or two of heavy work. To be fair, in some cases this was even better than with hard drives: those could fail at an unpredictable moment because of their mechanics, whereas flash drives and chips always know exactly how close they are to failure and can warn in advance.
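Because flash wear is counted rather than random, a controller can see failure coming. A minimal sketch, assuming a per-block erase counter, a hypothetical rated endurance of 3,000 program/erase cycles and a warning at 90% of that (the article gives no figures):

```python
from __future__ import annotations

# Hypothetical endurance figures for illustration; the article gives none.
RATED_PE_CYCLES = 3000                      # assumed rated program/erase cycles per block
WARN_AT_CYCLES = RATED_PE_CYCLES * 9 // 10  # assumed warning threshold: 90% of rating


class WearMonitor:
    """Counts erases per block and flags blocks that are nearing end of life."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks

    def record_erase(self, block: int) -> None:
        self.erase_counts[block] += 1

    def blocks_to_warn_about(self) -> list[int]:
        # Wear is tracked explicitly, so the warning comes before the failure.
        return [i for i, n in enumerate(self.erase_counts) if n >= WARN_AT_CYCLES]

    def remaining_fraction(self) -> float:
        # Rated life left on the most-worn block, between 0.0 and 1.0.
        worst = max(self.erase_counts)
        return max(0.0, 1 - worst / RATED_PE_CYCLES)


if __name__ == "__main__":
    monitor = WearMonitor(num_blocks=4)
    for _ in range(2800):
        monitor.record_erase(2)
    print(monitor.blocks_to_warn_about())  # [2]
```

A real controller would also weigh error-correction statistics, but the principle is the same: the wear budget is known, so the warning can be issued early.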

Second, unlike a classic magnetic disk, flash cannot overwrite old data with new data; you can only write to an empty cell. So besides the classic read and write there is a third operation: erase. This may seem harmless, since you only need to clear the cell before overwriting it, but here lies the main danger: unlike reading and writing, erasing is very slow.
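The write-once rule can be shown with a toy model. This is a sketch, not any vendor's firmware; it also reflects a detail the article simplifies: in real NAND, pages are programmed individually, but erase works on a whole block of pages at once, which is part of why erasing is so expensive.

```python
from __future__ import annotations

from enum import Enum


class PageState(Enum):
    ERASED = "erased"
    PROGRAMMED = "programmed"


class FlashBlock:
    """Toy NAND block: pages are written one at a time, but erased only all together."""

    def __init__(self, pages_per_block: int = 4) -> None:
        self.states = [PageState.ERASED] * pages_per_block
        self.data: list[bytes | None] = [None] * pages_per_block

    def program(self, page: int, payload: bytes) -> None:
        # No in-place overwrite: a page must be erased before it can be written again.
        if self.states[page] is not PageState.ERASED:
            raise ValueError(f"page {page} must be erased before it is rewritten")
        self.states[page] = PageState.PROGRAMMED
        self.data[page] = payload

    def read(self, page: int) -> bytes | None:
        return self.data[page]

    def erase(self) -> None:
        # The slow operation: resets every page in the block at once.
        n = len(self.states)
        self.states = [PageState.ERASED] * n
        self.data = [None] * n


if __name__ == "__main__":
    block = FlashBlock()
    block.program(0, b"old")
    try:
        block.program(0, b"new")
    except ValueError as err:
        print(err)  # page 0 must be erased before it is rewritten
    block.erase()
    block.program(0, b"new")
    print(block.read(0))  # b'new'
```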

Third, even if the first two restrictions are worked around, a third one appears: the controller that has to manage all of this and prepare data for reading and writing can become a bottleneck.

If these restrictions are not worked around, an ordinary SSD gives only a 20-40 percent performance gain. If they are, the numbers can be fantastic. Violin solved these problems in quite an interesting way.

Let's look at the system architecture

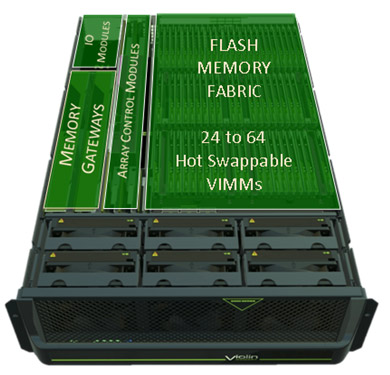

Here is the whole system

In the area labelled "flash memory fabric" you can see a huge pile of chips cooled by large black fans. This is the flash memory, mounted on cards like this:

A single brick of the flash fabric.

Each "brick" module carries 16 flash memory chips, several DRAM modules and one FPGA. A fabric holds at most 64 bricks. All data operations within a board are performed locally on its FPGA, so the external controllers are not burdened with them.

Next to them are 4 virtual RAID (vRAID) controllers, which ensure data integrity with parity, using the same algorithm as RAID 3 (4+1). By default, the controllers divide the memory boards into "responsibility" groups of 5, plus 1 hot spare per controller. However, this split is to some extent "virtual": the array's switching fabric always gives every controller the same access speed to every module. In classic storage terms, the back end is truly active-active.
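The numbers fit together neatly: 64 bricks shared by 4 controllers is 16 bricks each, which is exactly three groups of 5 plus one hot spare. The mapping below is our own illustration of that arithmetic, not Violin's documented layout; the function name and the contiguous numbering are assumptions, and since the split is "virtual" it describes responsibility rather than wiring.

```python
from __future__ import annotations

NUM_BRICKS = 64            # maximum bricks per fabric (from the article)
NUM_CONTROLLERS = 4        # vRAID controllers (from the article)
GROUP_SIZE = 5             # 4 data + 1 parity, RAID 3-style
SPARES_PER_CONTROLLER = 1  # one hot spare per controller (from the article)


def responsibility_plan(num_bricks: int = NUM_BRICKS,
                        controllers: int = NUM_CONTROLLERS) -> dict[int, dict]:
    """Assign brick indices to controllers as 5-brick groups plus hot spares.

    Illustrative only: assumes bricks are split evenly and contiguously.
    """
    if num_bricks % controllers:
        raise ValueError("bricks must divide evenly between controllers")
    per_ctrl = num_bricks // controllers
    in_groups = per_ctrl - SPARES_PER_CONTROLLER
    if in_groups <= 0 or in_groups % GROUP_SIZE:
        raise ValueError("bricks per controller must be whole groups plus spares")
    plan = {}
    for ctrl in range(controllers):
        bricks = list(range(ctrl * per_ctrl, (ctrl + 1) * per_ctrl))
        groups = [bricks[i:i + GROUP_SIZE] for i in range(0, in_groups, GROUP_SIZE)]
        plan[ctrl] = {"groups": groups, "spares": bricks[in_groups:]}
    return plan


if __name__ == "__main__":
    plan = responsibility_plan()
    print(len(plan[0]["groups"]), plan[0]["spares"])  # 3 [15]
```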

The integrity protection mechanisms preserve data even if several memory boards fail one after another, as long as two boards of the same RAID group do not "die" at the same time; rebuilds on flash are also orders of magnitude faster. There is one quirk, though: in a standard replacement, the system detects the broken module and powers it off automatically (you can also power off any module from the interface), then the module is pulled out, and a new one is installed and activated in the interface. Best of all, everything can be swapped hot. During the tests, we created up to hundreds of volumes with different applications and then pulled out 4 modules in a row at 20-minute intervals (one as an emergency pull, three after shutting them down through the interface), and everything worked like clockwork.
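The replacement procedure reads like a small state machine. A sketch with state names of our own choosing (they are not Violin's interface terms); the direct ACTIVE to REMOVED step models the emergency pull from the test.

```python
from __future__ import annotations

from enum import Enum, auto


class Slot(Enum):
    ACTIVE = auto()
    FAILED = auto()
    POWERED_OFF = auto()
    REMOVED = auto()
    INSTALLED = auto()  # new module seated but not yet activated


# Allowed steps in the replacement procedure described in the article.
TRANSITIONS = {
    Slot.ACTIVE: {Slot.FAILED, Slot.POWERED_OFF, Slot.REMOVED},  # REMOVED = emergency pull
    Slot.FAILED: {Slot.POWERED_OFF},    # the array powers a failed module off itself
    Slot.POWERED_OFF: {Slot.REMOVED},
    Slot.REMOVED: {Slot.INSTALLED},
    Slot.INSTALLED: {Slot.ACTIVE},      # activated from the management interface
}


class ModuleSlot:
    def __init__(self) -> None:
        self.state = Slot.ACTIVE
        self.history = [self.state]

    def move(self, new_state: Slot) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise RuntimeError(f"illegal step {self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append(new_state)

    def detect_failure(self) -> None:
        # On failure the module is marked and powered off automatically.
        self.move(Slot.FAILED)
        self.move(Slot.POWERED_OFF)


if __name__ == "__main__":
    slot = ModuleSlot()
    slot.detect_failure()
    for step in (Slot.REMOVED, Slot.INSTALLED, Slot.ACTIVE):
        slot.move(step)
    print([s.name for s in slot.history])
```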

The array has no dedicated protection against loss of external power, such as batteries or supercapacitors in the power supply units. That does not mean it is unprotected against sudden outages: the power supply units store enough energy to flush the last transaction to flash, with a large margin. After the power goes out, the residual energy is enough to write all in-flight data to flash more than 40 times over.

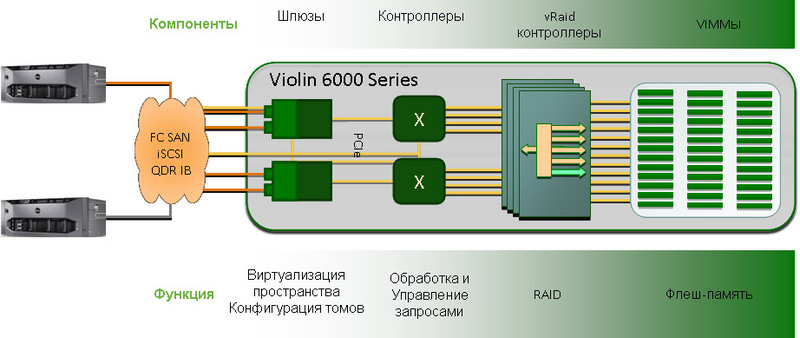

Now let's go up a level and look at how the array communicates with the outside world:

System architecture

Data arrives from the servers over standard SAN interfaces, which provide the required speed and scalability for working with an external unit. It then reaches the gateways, where the payload is handed over to the PCIe x8-based transport infrastructure (nothing slower could maintain the required latency at these speeds) and delivered to the vRAID controllers in 4 KB blocks. There, parity is calculated and the data is written to one of the groups of five memory boards, 1 KB per board.
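The write path maps onto simple XOR parity. A sketch in the spirit of RAID 3 (dedicated parity unit, every block spans the whole group), splitting each 4 KB block into four 1 KB data units plus one parity unit as described above; the function names are hypothetical and this is not Violin's controller code.

```python
from __future__ import annotations

from functools import reduce

BLOCK_SIZE = 4096                     # host block reaching a vRAID controller
DATA_UNITS = 4                        # data boards per group; a fifth holds parity
UNIT_SIZE = BLOCK_SIZE // DATA_UNITS  # 1 KB per board


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def stripe(block: bytes) -> list[bytes]:
    """Split a 4 KB block into four 1 KB data units plus one XOR parity unit."""
    if len(block) != BLOCK_SIZE:
        raise ValueError("expected a 4 KB block")
    data = [block[i * UNIT_SIZE:(i + 1) * UNIT_SIZE] for i in range(DATA_UNITS)]
    return data + [reduce(xor_bytes, data)]


def reassemble(units: list[bytes | None]) -> bytes:
    """Rebuild the 4 KB block when at most one of the five units is lost (None)."""
    missing = [i for i, u in enumerate(units) if u is None]
    if len(missing) > 1:
        raise ValueError("single parity survives only one lost board per group")
    units = list(units)
    if missing:
        # XOR of all five units is zero, so the lost one is the XOR of the other four.
        units[missing[0]] = reduce(xor_bytes, [u for u in units if u is not None])
    return b"".join(units[:DATA_UNITS])


if __name__ == "__main__":
    block = bytes(range(256)) * 16
    boards = stripe(block)
    boards[2] = None  # one board of the group fails
    print(reassemble(boards) == block)  # True
```

This is also why two failed boards in the same group are the one case the scheme cannot survive.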

The transport infrastructure (the Virtual Matrix in Violin terminology) is completely passive and is managed by 2 redundant ACM modules. These modules are purely control elements: they take no part in data processing, they manage the power supply and monitor the health of the system. The transport infrastructure provides all-to-all communication within the Violin chassis even if some components fail, and it has no single point of failure, except perhaps the passive backplane itself, whose failure is about as likely as, say, a fire in the data center.

Now for the most interesting and difficult part: how to deal with flash's write limitation? The solution is not complicated in principle, but according to Violin's developers this simplicity cost them the most effort. The problem is solved by cleaning cells in advance, as soon as the data in them becomes obsolete. Here is why that matters. As is well known, the actual raw capacity of each flash chip is much larger than its declared capacity, so that cells wear evenly regardless of the load profile. At the physical level, each write to the chip goes to a new cell, and the old one is marked as available for writing. However, the old data stays where it was, so very quickly we reach a state where no empty cells are left and a cell must be erased before every write; as a result, speed drops dramatically.
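The "cliff" follows directly from this bookkeeping. A toy simulation with arbitrarily chosen page counts (and per-page erase for brevity, whereas real NAND erases whole blocks): while spare clean pages remain, writes are fast; once every page has been written once, each new write must first erase a stale page.

```python
from __future__ import annotations

from collections import deque


class NaiveFTL:
    """Toy flash translation layer: out-of-place writes, no background cleanup."""

    def __init__(self, logical_pages: int, spare_pages: int) -> None:
        if spare_pages < 1:
            raise ValueError("need at least one spare page beyond the logical capacity")
        self.logical_pages = logical_pages
        self.erased = deque(range(logical_pages + spare_pages))  # clean pages
        self.stale: deque[int] = deque()   # obsolete copies still holding old data
        self.mapping: dict[int, int] = {}  # logical page -> physical page
        self.inline_erases = 0             # writes that had to wait for an erase

    def write(self, lpn: int) -> None:
        if not 0 <= lpn < self.logical_pages:
            raise IndexError("logical page out of range")
        if not self.erased:
            # No clean page left: erase a stale one on the write path (the slow case).
            self.erased.append(self.stale.popleft())
            self.inline_erases += 1
        old = self.mapping.get(lpn)
        self.mapping[lpn] = self.erased.popleft()
        if old is not None:
            self.stale.append(old)  # old data stays in place, only marked obsolete


if __name__ == "__main__":
    ftl = NaiveFTL(logical_pages=100, spare_pages=28)
    for step in range(300):
        ftl.write(step % 100)
    print(ftl.inline_erases)  # 172: every write after the first 128 paid for an erase
```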

This is what it looks like: the "cliff" on SSD performance graphs.

Violin works a little differently: the FPGA on each module tracks whether the data in each cell is still current, and once data is no longer needed, a special background process called the garbage collector erases it. Thus every write always goes to an empty cell, which avoids the performance drop and lets flash chips be used as efficiently as possible not only for reading but also for writing.
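The idea of background pre-erasure can be sketched by adding an idle-time collection step to a toy flash translation layer. This illustrates the general technique, not Violin's FPGA logic; the `gc_budget` parameter and the assumption that there is idle time between host writes are ours, and erase is again per page for brevity.

```python
from __future__ import annotations

from collections import deque


class BackgroundGCFTL:
    """Toy flash translation layer whose stale pages are erased off the write path."""

    def __init__(self, logical_pages: int, spare_pages: int, gc_budget: int = 1) -> None:
        if spare_pages < 1:
            raise ValueError("need at least one spare page")
        self.logical_pages = logical_pages
        self.gc_budget = gc_budget  # assumed: stale pages erasable per idle period
        self.erased = deque(range(logical_pages + spare_pages))
        self.stale: deque[int] = deque()
        self.mapping: dict[int, int] = {}
        self.inline_erases = 0

    def write(self, lpn: int) -> None:
        if not 0 <= lpn < self.logical_pages:
            raise IndexError("logical page out of range")
        if not self.erased:
            # Only reached if background collection has fallen behind.
            self.erased.append(self.stale.popleft())
            self.inline_erases += 1
        old = self.mapping.get(lpn)
        self.mapping[lpn] = self.erased.popleft()
        if old is not None:
            self.stale.append(old)  # data no longer current: hand it to the collector

    def collect_garbage(self) -> None:
        # Background step: pre-erase obsolete pages so future writes find clean ones.
        for _ in range(min(self.gc_budget, len(self.stale))):
            self.erased.append(self.stale.popleft())


if __name__ == "__main__":
    ftl = BackgroundGCFTL(logical_pages=100, spare_pages=28)
    for step in range(300):
        ftl.write(step % 100)
        ftl.collect_garbage()  # idle time between host writes
    print(ftl.inline_erases)  # 0
```

If the collector never gets idle time, this model degrades to the "cliff" behaviour; the benefit depends entirely on erasing keeping pace with writes.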

Let's go over the architecture again:

- Controllers receive the data and transmit it further.

- The control modules interconnect all components over PCIe x8 and monitor and manage them.

- The flash fabric distributes data across individual modules, balances the load during peaks, and ensures even wear and redundant internal paths.

Everything that can be implemented in hardware runs on FPGAs, which gives a fundamentally different level of interaction and processing speed than usual.

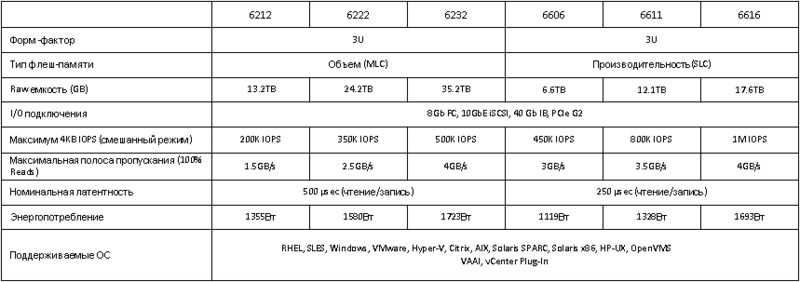

Hardware lineup

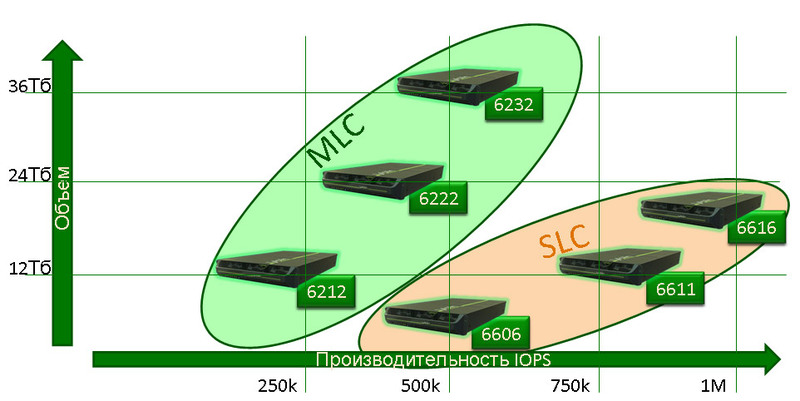

Now let's go down to the level of an individual memory chip. There are two technologies for writing to the die: SLC and MLC. SLC stores 1 bit per cell, MLC stores more. As a result, on the same physical die SLC gives higher speed and longer module life, while MLC gives more capacity.

The trade-off used to be linear: SLC gave half the capacity for twice the speed. However, thanks to Violin's smart software (they spent almost two years digging into low-level data processing), their MLC differs from SLC in write speed by only about 50%. The endurance of the MLC chips has also been improved: according to Violin's statistics, even under maximum write load the memory modules will last at least 4-5 years.
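To sanity-check a lifetime claim like this, the usual back-of-envelope endurance estimate divides the total data the flash can absorb by the physical write rate. Every input is yours to supply: the article gives no P/E rating or sustained write rate, and write amplification (extra internal writes per host write) is an assumed parameter the article does not quantify.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def lifetime_years(capacity_tb: float, pe_cycles: int, write_mb_s: float,
                   write_amplification: float = 1.0) -> float:
    """Back-of-envelope flash endurance, assuming perfectly even wear leveling.

    Total data the flash can absorb = capacity x rated P/E cycles.
    Physical write rate = host write rate x write amplification.
    """
    total_bytes = capacity_tb * 1e12 * pe_cycles
    bytes_per_year = write_mb_s * 1e6 * write_amplification * SECONDS_PER_YEAR
    return total_bytes / bytes_per_year
```

The estimate ignores spare capacity and uneven wear, so it is only a rough cross-check against vendor figures.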

Here is the new-generation lineup in terms of capacity and speed:

And here are the differences in table form:

This is how it looks in the data center:

All arrays come in a 3U chassis with two 480 W power supplies, and they generate about as much heat as a powerful 3U server.

Interface and Administration

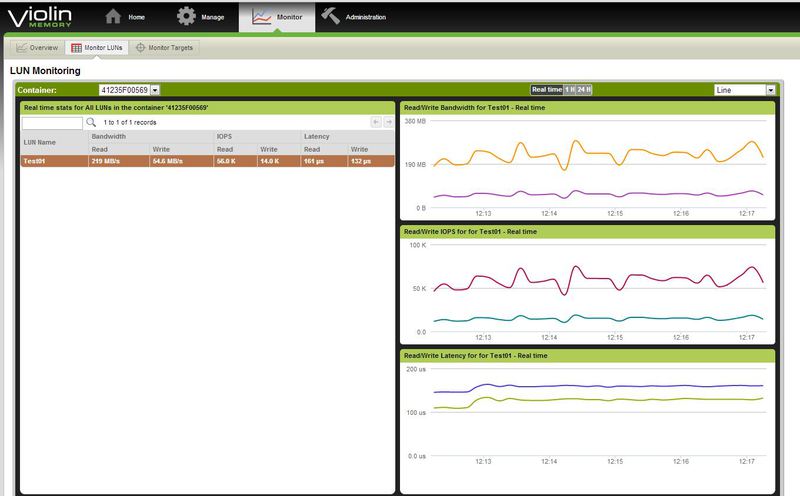

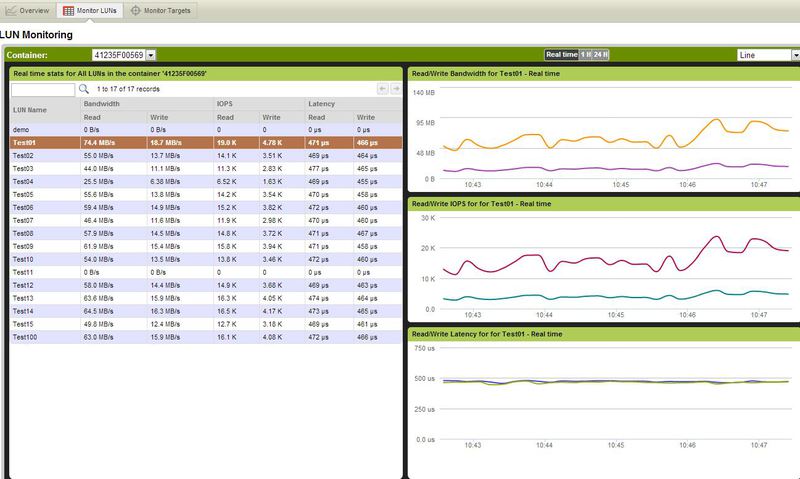

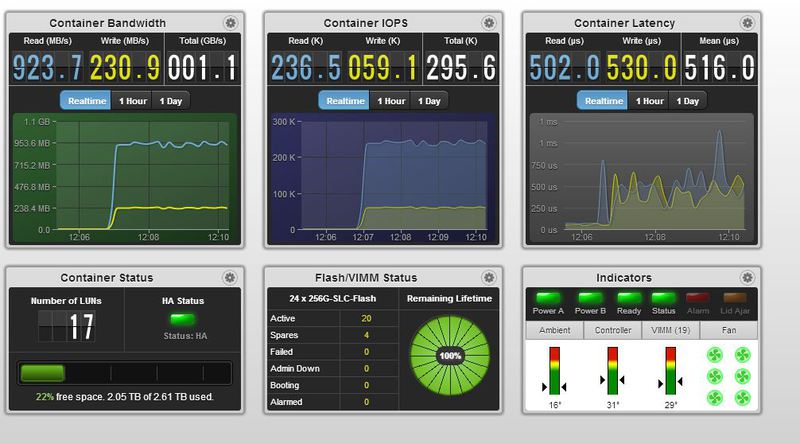

IOPS statistics for the array: the maximum load that a single IBM x3850 X5 server was able to generate.

The same statistics in megabytes per second.

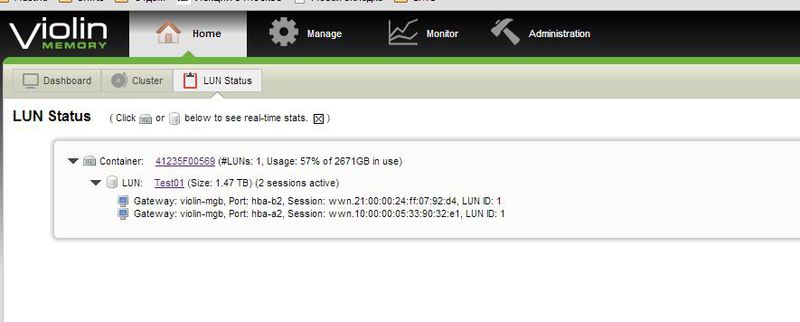

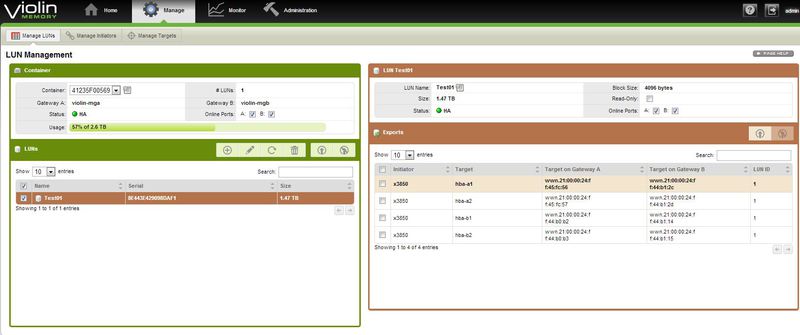

Volume parameters view.

The system is simple and easy to manage: Violin pays great attention to the interface, both to its convenience and ease of use and to its responsiveness and clarity.

Statistics for an individual LUN.

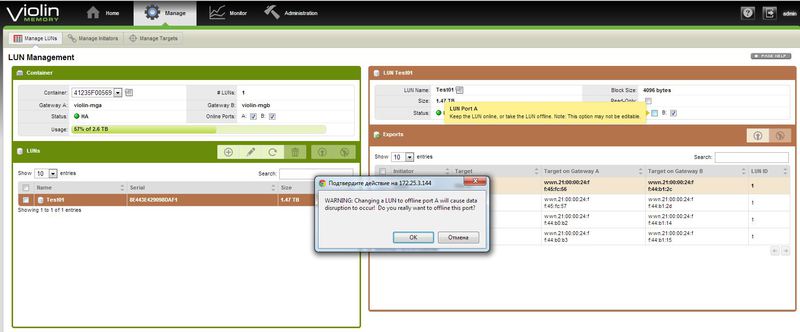

Foolproofing in action: any action that could interrupt access or lose data requires additional confirmation.

The state of the array ports.

General array statistics window.

Statistics window for volumes separately.

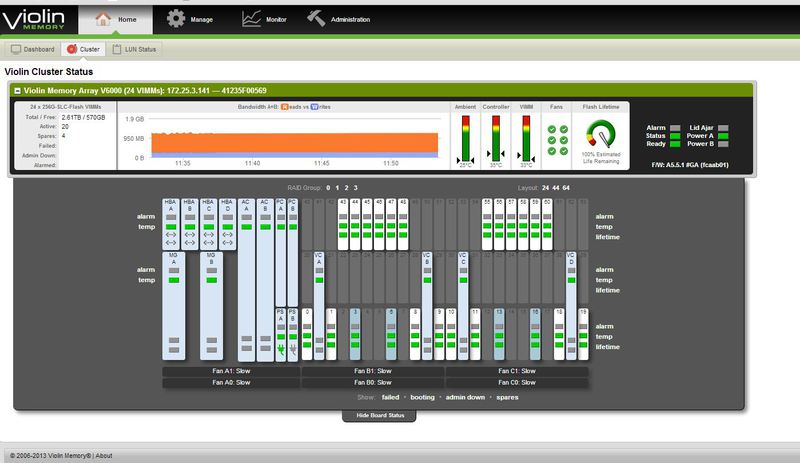

Hardware component monitoring interface.

Volume management page.

Port load monitoring page.

The main monitoring page is fully customizable and lets the administrator display whatever array status information he considers most important.

This hardware in Russia

How did Violin come about? As I said, quite simply: when it became clear that SSD performance was still not enough for modern tasks and that spreading applications across a heap of disks was not the best idea, they partnered with Toshiba and started working on software controllers at a low level, close to the physics of the processes. They built their first line of systems in 2005, showed how good they were, won various IT awards, became the "start-up of the year" in Silicon Valley, and then turned the storage world upside down. Today, many leading server and software vendors use Violin systems to unlock the potential of their platforms in benchmarks (TPC-C, VMmark and others).

Violin came to Russia literally "yesterday", and CROC received an exclusive one-year partnership. For our part, we have already set up local spare-parts warehouses and trained service engineers.

As for deployments, there have been several dozen major installations of the previous-generation hardware and several of the new one. For example, one very large company used to spend 72 hours generating its monthly billing report for millions of customers; now it takes only 22 hours. In a VDI deployment, the ultra-short response time eliminated idle waiting for the storage system, so the cores could be loaded with 25 users each instead of the old 10, with similar performance; this also saves on licenses and compute hardware.

By the way, many thanks to Violin's marketing: thank God, they do not make particularly loud statements and quote typical figures rather than peak ones. We are integrators, and the last thing we need is a client, charmed by someone else's marketing genius, asking why things did not turn out that way. Thanks to this approach, the client ends up getting more than expected.

Still, many people do not believe in the fantastic speed of flash systems, so for Violin we offer not only test drives, where you can poke the hardware with a finger, but also calculations and pilot deployments. For example, when a client needed storage for a 1,000-user VDI infrastructure with 50,000 IOPS and 50 TB of data, two options were considered: two storage systems with 7 x 200 GB SSD cache and 180 x 600 GB SAS drives, versus a Violin 6212 (12 TB raw = 7 TB usable) for "hot" data plus 60 x 600 GB SAS and 18 x 3 TB SATA drives for "slow" data. The result was almost twofold savings, with performance up from the required 50k IOPS to 200,000 IOPS.

If you are interested in the details, I can work out how flash storage would solve your problem: write to vbolotnov@croc.ru. I recommend having such a project on file just in case, so that if you suddenly need a sharp speed-up, you can pull it out and show the calculation.

Source: https://habr.com/ru/post/181494/
