Optimal parallelization of unit tests, or 17,000 tests in 4 minutes

Today we will talk about a utility we developed that optimizes testing of PHP code with PHPUnit and TeamCity. Keep in mind that our project is not only a website but also mobile applications, a WAP site, a Facebook application and much more, and development is done not only in PHP but also in C, C++, HTML5, etc.

The methods we describe can easily be adapted to any language, any testing system and any environment. Therefore, our experience may be useful not only to PHP web developers but also to people working in other areas of development. In addition, we plan to release our system as Open Source in the near future, without necessarily tying it to TeamCity and PHPUnit; surely it will be useful to someone.

Why do you need it?

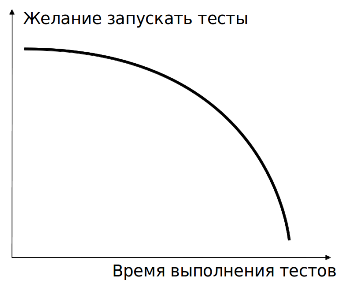

Unit testing is a mandatory component (we really do believe this!) of any serious project that dozens of people work on. And the bigger the project, the more unit tests it has. The more unit tests, the longer they take to run. And the longer they take, the more often developers and testers decide to "gently ignore" running them.

Naturally, this hurts both the quality and the speed of testing tasks. The full test suite is run only just before the release, and at the last minute the discussions begin along the lines of "Why did this test fail?", which often end with "Let's deploy as is and fix it later!". And it's good if the problem is in the test. It is much worse if it is in the code under test.

The best way out of this situation is to reduce test run time. But how? When testing each task, you could run only the tests covering the code it touches. But even this is unlikely to give a 100% guarantee that everything is in order: in a project consisting of many thousands of classes, it is sometimes hard to trace the subtle connections between them (unit tests, if you believe the books, should NEVER go beyond the class under test, or better still, the method under test. But have you seen many such tests?). Therefore, ALL tests need to be run, in parallel, in several threads.

The simplest solution for the non-lazy is, for example, to manually create several PHPUnit XML configuration files and run them as separate processes. But this will only work for a short time: the configs need constant maintenance, the likelihood of missing a test or a whole package grows, and the execution time is far from optimal.

The easiest solution for the lazy is to write a simple script that divides the tests equally between the threads. But at some point this stops being enough: the number of tests keeps growing and they all run at different speeds, so the threads end up with different execution times. Consequently, we need more effective and controllable ways of dealing with them.
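
To make the imbalance concrete, here is a minimal Python sketch of such a "simple script" (not code from the article): it deals test files out round-robin so every thread gets the same number of files, then sums the expected run time per thread. The file names and durations are made up for illustration.

```python
from typing import Dict, List


def split_equally(files: List[str], threads: int) -> List[List[str]]:
    """Naive split: every thread gets the same number of files (round-robin)."""
    buckets: List[List[str]] = [[] for _ in range(threads)]
    for i, name in enumerate(files):
        buckets[i % threads].append(name)
    return buckets


def thread_times(buckets: List[List[str]], durations: Dict[str, float]) -> List[float]:
    """Expected wall time of each thread = sum of its files' durations."""
    return [sum(durations[name] for name in bucket) for bucket in buckets]


# Hypothetical data: six quick files and two slow ones.
durations = {"a": 2, "b": 2, "c": 2, "d": 2, "e": 2, "f": 2, "g": 60, "h": 30}
buckets = split_equally(sorted(durations), 2)
print(buckets, thread_times(buckets, durations))
```

Both threads get four files, yet one is expected to run for 66 time units and the other for 36, and the whole run has to wait for the slower one.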

Conclusion: we need an easy-to-manage system that automatically and evenly distributes the tests across threads and keeps track of test coverage of the code on its own.

Search

Since PHPUnit is a very common system, we thought that probably someone had already done something like this, and we began to search.

The first results did not disappoint: there were many solutions of varying maturity and functionality. These were bash scripts, PHP scripts, wrappers around PHPUnit, and even quite complex and extensive patches to PHPUnit itself. We started trying some of them, digging through the code and trying to adapt them to our project, and ran into a huge number of problems, ambiguities and logical errors. It was surprising: is there really still no fully proven solution?

Most of the options were built on one of two schemes:

- Find all the necessary test files and divide them equally between the threads;

- Start a number of threads that take tests one by one from a shared queue and "feed" them to PHPUnit.
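
The second scheme boils down to a worker pool over a shared queue. Below is a minimal Python sketch of it, where `run_one` is a hypothetical callback standing in for starting one PHPUnit process per test. Note that the order in which workers pick up and finish tests depends on thread scheduling, so it can differ from run to run.

```python
import queue
import threading
from typing import Callable, List


def run_from_shared_queue(tests: List[str], workers: int,
                          run_one: Callable[[str], None]) -> None:
    """Scheme 2: each worker repeatedly takes the next test from a shared queue."""
    pending: "queue.Queue[str]" = queue.Queue()
    for test in tests:
        pending.put(test)

    def worker() -> None:
        while True:
            try:
                test = pending.get_nowait()
            except queue.Empty:
                return  # queue drained: this worker is done
            # In a real setup this would be one PHPUnit start-up per test,
            # paying the start-up cost every single time.
            run_one(test)

    pool = [threading.Thread(target=worker) for _ in range(workers)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
```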

The disadvantage of the first method is obvious: the tests are not distributed optimally. The run is, of course, faster than in a single thread, but often one thread runs 3-4 times longer than the others.

The disadvantage of the second method is less obvious and stems from interesting features of PHPUnit's internals. First, every start of a PHPUnit process involves a lot of work: setting up the test environment, collecting information about the number and structure of test classes, and so on. Second, when running a large number of tests, PHPUnit collects all data providers for all tests in a clever way that minimizes disk and database access (again: truly, truly correct unit tests do not exist!), but this advantage is lost if tests are fed to it one by one. In our case, this method caused a huge number of inexplicable test failures that were incredibly hard to catch and reproduce, because the order of the tests changed almost every run.

We also found that the PHPUnit developers intend to support multi-threading "out of the box". The problem is that these promises have been around for more than a year, progress updates are scarce, and there is not even a rough timeline, so it is too early to count on this.

Having spent several days studying all these options, we concluded that none of them fully solved the problem we had set, and that we needed to write something of our own, adapted as closely as possible to the realities of our project.

The pangs of creation

The very first idea was right on the surface. We noticed that most test classes run for roughly the same time, but some run tens or hundreds of times longer. So we decided to separate these two kinds of tests (marking the "slow" ones ourselves) and run them apart from each other. For example, all "fast" tests run in the first five threads and all "slow" tests in the other three. The result was quite good: with the same number of threads, the tests ran roughly twice as fast as with the double-stream option, but the spread in run time between threads was still quite noticeable. So we needed to collect test run-time data in order to distribute the tests as evenly as possible.
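
A minimal Python sketch of this first idea (an illustration, not the authors' code): files from a manually maintained "slow" set go to their own group of threads, everything else goes to the rest, and each group is split round-robin. The default 5 + 3 layout mirrors the example above; the function and parameter names are hypothetical.

```python
from typing import List, Set


def split_fast_slow(files: List[str], slow: Set[str],
                    fast_threads: int = 5, slow_threads: int = 3) -> List[List[str]]:
    """Keep manually marked slow files away from the fast ones.

    Threads 0..fast_threads-1 get fast files, the remaining threads get slow
    files; inside each group the files are dealt out round-robin.
    """
    fast_files = [f for f in files if f not in slow]
    slow_files = [f for f in files if f in slow]
    buckets: List[List[str]] = [[] for _ in range(fast_threads + slow_threads)]
    for i, name in enumerate(fast_files):
        buckets[i % fast_threads].append(name)
    for i, name in enumerate(slow_files):
        buckets[fast_threads + i % slow_threads].append(name)
    return buckets
```

This separates the two kinds of tests, but inside each group it is still an equal-count split, which is why the spread between threads remains.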

Our next thought was to save test run times after each run and use this information for the next distribution. However, this idea also had flaws. For example, to make the statistics accessible from everywhere, they would have to be stored in an external repository, such as a database. This leads to two problems: first, the write itself takes noticeable time; second, there would be conflicts when several users access the repository simultaneously (of course, these can be solved with transactions, locks, etc., but we are doing all this for speed, and any conflict resolution slows the process down). We could save the data only under some special condition or at a certain frequency, but these are all just hacks.

It followed that the statistics had to be collected in a separate, isolated place that everyone would only read from, with the writing done by something separate. That was when we remembered TeamCity, but more on that a bit later.

There is one more problem: test run time can always fluctuate, both locally (for example, when a server's performance drops sharply) and globally (when the server load increases or decreases; we run tests on the same machines used for development, so any developer running a resource-intensive script noticeably affects test run time). This means that to keep the statistics as accurate as possible, they need to be accumulated over time and averaged.

How should this utility be configured? In the first implementation, everything was controlled by command-line parameters: we ran the utility with parameters for PHPUnit, and it passed them on to the processes it started, adding its own, for example the complete list of tests to run (the resulting PHPUnit command lines were an astonishing thousands of characters long). Clearly this is inconvenient, forces you to think about every parameter you pass, and invites new errors. After many experiments, the simplest solution finally came to us: use standard PHPUnit XML configuration files, changing their settings and adding a few XML tags of our own to the structure. This way the same configuration files can be used to run both plain PHPUnit and our utility with the same result (apart, of course, from the speed gain in our case).
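
Here is a minimal Python sketch of reading such a config with the standard library's `xml.etree.ElementTree`. `<testsuites>`, `<testsuite>`, `<directory>`, `<file>` and the `bootstrap` attribute are standard PHPUnit configuration elements. The `<launcher>` section with `<slow>` and `<isolated>` is a hypothetical stand-in for the authors' own tags, whose real names the article does not give.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List


@dataclass
class LauncherConfig:
    directories: List[str] = field(default_factory=list)
    files: List[str] = field(default_factory=list)
    slow: List[str] = field(default_factory=list)      # hypothetical extension
    isolated: List[str] = field(default_factory=list)  # hypothetical extension


def read_config(xml_text: str) -> LauncherConfig:
    root = ET.fromstring(xml_text)
    cfg = LauncherConfig()
    # Standard PHPUnit part: <testsuites>/<testsuite>/<directory|file>
    for suite in root.iter("testsuite"):
        cfg.directories += [(d.text or "").strip() for d in suite.findall("directory")]
        cfg.files += [(f.text or "").strip() for f in suite.findall("file")]
    # Hypothetical launcher-only section (tag names are illustrative only).
    launcher = root.find("launcher")
    if launcher is not None:
        cfg.slow = [(e.text or "").strip() for e in launcher.findall("slow")]
        cfg.isolated = [(e.text or "").strip() for e in launcher.findall("isolated")]
    return cfg


SAMPLE = """<phpunit bootstrap="bootstrap.php">
  <testsuites>
    <testsuite name="all">
      <directory>tests/unit</directory>
      <file>tests/legacy/BigTest.php</file>
    </testsuite>
  </testsuites>
  <launcher>
    <slow>tests/legacy/BigTest.php</slow>
    <isolated>tests/unit/GlobalStateTest.php</isolated>
  </launcher>
</phpunit>"""
```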

Solution

So, after many hours of planning, developing, hunting serious bugs and catching minor ones, we arrived at the system we use today.

Our Multithread Launcher (or, as we affectionately call it, the "launcher") consists of three independent classes:

- A class for collecting and saving statistics on test execution time;

- A class for reading these statistics and distributing the tests evenly;

- A class for generating PHPUnit configuration files and running PHPUnit processes.

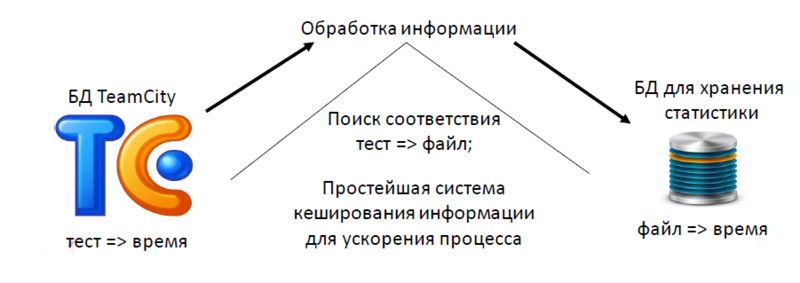

The first class works with TeamCity. TeamCity regularly runs a build that, among other things, runs absolutely all tests and then starts our statistics collector. This cooperation has both advantages and disadvantages. On the one hand, TeamCity itself collects statistics on all the tests it runs; on the other hand, the TeamCity API provides no means of working with this information (neither the native API nor the more advanced REST API), so we have to query the database TeamCity runs on directly.

This caused a small problem. Our initial architecture used a file with a test class as the unit for distributing tests across threads (since this is the most natural unit for PHPUnit configuration files). TeamCity, however, keeps statistics on individual tests without linking them to files. Therefore, our collector reads test statistics from the database, maps tests to classes and classes to files, and stores the statistics in this form.
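
The aggregation step can be sketched in Python as follows. It assumes test names of the form `Class::method` (optionally followed by a data-set suffix) and an already known class-to-file map; both are assumptions about the data, not TeamCity's actual database schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_file_durations(test_rows: Iterable[Tuple[str, float]],
                       class_to_file: Dict[str, str]) -> Dict[str, float]:
    """Roll per-test durations (in seconds) up to per-file totals.

    Assumes names like "FooTest::testBar" or "FooTest::testBar with data set #0".
    """
    totals: Dict[str, float] = defaultdict(float)
    for test_name, seconds in test_rows:
        class_name = test_name.split("::", 1)[0]  # test -> class
        totals[class_to_file[class_name]] += seconds  # class -> file
    return dict(totals)
```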

This may seem rather resource-intensive, but we wrote a simple cache of "class => file" mappings, so the collector only does the heavy work when new classes appear, and there are never many of those per run.
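
A minimal sketch of such a cache in Python, not the authors' implementation: `resolve` is a hypothetical expensive lookup (in a PHP project it could, for example, rely on `ReflectionClass::getFileName()`). It is called only for classes not yet in the cache, and the cache can be saved to and loaded from JSON between collector runs.

```python
import json
from typing import Callable, Dict, Optional


class ClassFileCache:
    """Cache of "class => file" mappings; the expensive lookup runs only on a miss."""

    def __init__(self, resolve: Callable[[str], str],
                 known: Optional[Dict[str, str]] = None) -> None:
        self._resolve = resolve
        self._map: Dict[str, str] = dict(known or {})
        self.misses = 0  # how many times the expensive resolver was called

    def file_of(self, class_name: str) -> str:
        if class_name not in self._map:
            self.misses += 1
            self._map[class_name] = self._resolve(class_name)
        return self._map[class_name]

    def dump(self) -> str:
        """Serialize the cache so the next collector run can reuse it."""
        return json.dumps(self._map, sort_keys=True)

    @classmethod
    def load(cls, resolve: Callable[[str], str], text: str) -> "ClassFileCache":
        return cls(resolve, json.loads(text))
```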

The statistics are stored in a separate database in seven copies, one for each of the last seven days. They are collected anew every day, but distribution uses the data for the whole past week, and the more recent the statistics, the higher their "weight" when the average time is calculated. Thus, a one-off increase in a test's run time does not significantly affect the statistics, while permanent changes are picked up fairly quickly.
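
The weighted average can be sketched in Python as below. The linear weights (7 for today down to 1 for six days ago) are an assumption: the article only says that newer statistics weigh more. Files missing from some daily snapshots are averaged over the days on which they were seen.

```python
from typing import Dict, List


def weighted_average(daily: List[Dict[str, float]]) -> Dict[str, float]:
    """Weighted mean run time per file over up to seven daily snapshots.

    daily[0] is today's snapshot, daily[1] yesterday's, and so on.
    Hypothetical linear weights: 7 for today down to 1 for six days ago.
    """
    totals: Dict[str, float] = {}
    weights: Dict[str, float] = {}
    for age, snapshot in enumerate(daily[:7]):
        w = 7 - age
        for name, seconds in snapshot.items():
            totals[name] = totals.get(name, 0.0) + w * seconds
            weights[name] = weights.get(name, 0.0) + w
    # Files absent on some days are averaged over the days they were seen.
    return {name: totals[name] / weights[name] for name in totals}
```

With these weights the seven days carry 7 + 6 + ... + 1 = 28 units in total, so today's snapshot accounts for 7/28 = 25% of the average. A file that normally takes 3 s but took 10 s today gets (7 * 10 + 21 * 3) / 28 = 4.75 s, while a change that persists for three days already carries 18/28 (about 64%) of the weight.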

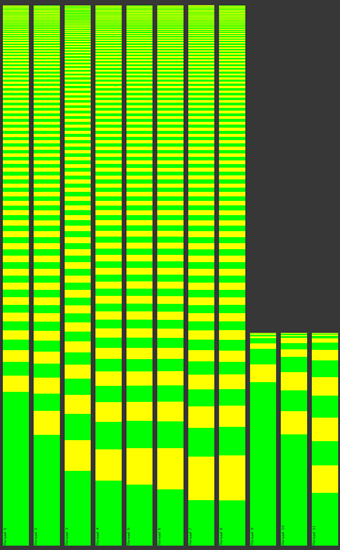

The second class builds the file list for each thread as simply as possible. It reads the statistics from the database, sorts all files by run time from longest to shortest, and assigns them to threads following the rule "each next file goes to the least loaded thread". Because there are a lot of tests, and far more small ones than large ones, the expected run times of all threads differ by only a couple of seconds (the actual difference is, of course, somewhat larger, but still not very significant).

In the picture above, the bars are the threads, and each "brick" is a separate test whose height is proportional to its run time.
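
Sorting files from longest to shortest and giving each next file to the least loaded thread is the classic greedy longest-processing-time (LPT) heuristic. Here is a minimal Python sketch of it using a `heapq` min-heap keyed by current thread load. Ties are broken by file name so the result is deterministic, and the data in the check is hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def distribute(durations: Dict[str, float], threads: int) -> List[List[str]]:
    """Greedy LPT: longest file first, each into the currently least loaded thread."""
    heap: List[Tuple[float, int]] = [(0.0, i) for i in range(threads)]  # (load, thread)
    buckets: List[List[str]] = [[] for _ in range(threads)]
    for name in sorted(durations, key=lambda n: (-durations[n], n)):
        load, i = heapq.heappop(heap)  # least loaded thread
        buckets[i].append(name)
        heapq.heappush(heap, (load + durations[name], i))
    return buckets
```

With one 60-unit file, one 30-unit file and six 2-unit files on two threads, this gives loads of 60 and 42. The largest file alone takes 60, so no assignment can finish sooner.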

The third class does the most interesting work. In the main mode, it accepts a standard PHPUnit configuration file that specifies test directories and individual files. A test can be marked as "slow" (the run time of some tests depends heavily on external factors, so they should be run separately from the others) or as "isolated" (such tests are run in separate threads after all the others have finished). The launcher then generates its own PHPUnit config with all the necessary parameters, such as the required TestListener, in which each test suite is the list of files for one thread. After that, it is enough to start several PHPUnit processes with this config, each pointed at its own test suite. Besides the main mode, there are options for debugging tests: running with the latest or any other previously generated config, using PHPUnit's standard TestListener instead of ours, running the tests in a different order, etc.
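
Here is a sketch of the config-generation step in Python, not the authors' code. It writes one `<testsuite>` per thread and an optional listener, then builds one `phpunit --configuration <file> --testsuite <name>` command per thread. Both options are standard PHPUnit command-line options. The `thread_N` suite names, the listener class and the helper names are hypothetical, and the `<listeners>` element only applies to PHPUnit versions that still support TestListener.

```python
import subprocess
import xml.etree.ElementTree as ET
from typing import List, Optional


def build_config(buckets: List[List[str]], bootstrap: Optional[str] = None,
                 listener_class: Optional[str] = None) -> str:
    """Generate a PHPUnit XML config with one <testsuite> per thread."""
    root = ET.Element("phpunit")
    if bootstrap:
        root.set("bootstrap", bootstrap)
    suites = ET.SubElement(root, "testsuites")
    for i, files in enumerate(buckets):
        suite = ET.SubElement(suites, "testsuite", {"name": f"thread_{i}"})
        for path in files:
            ET.SubElement(suite, "file").text = path
    if listener_class:
        listeners = ET.SubElement(root, "listeners")
        ET.SubElement(listeners, "listener", {"class": listener_class})
    return ET.tostring(root, encoding="unicode")


def commands(config_path: str, threads: int) -> List[List[str]]:
    """One PHPUnit command line per thread, each selecting its own suite."""
    return [["phpunit", "--configuration", config_path, "--testsuite", f"thread_{i}"]
            for i in range(threads)]


def run_parallel(cmds: List[List[str]]) -> List[int]:
    """Start all PHPUnit processes at once and wait for every exit code."""
    procs = [subprocess.Popen(cmd) for cmd in cmds]
    return [p.wait() for p in procs]
```

In this sketch, isolated tests could get suites of their own that are started only after `run_parallel` has returned.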

A word about our TestListeners (TestListener is the standard PHPUnit interface for reporting on executed tests). Initially, the launcher printed information in the same form as PHPUnit does by default. For convenience, we then wrote our own listener, which made the output more compact and readable and added new features, such as showing STDOUT and STDERR.

We also have a TestListener that reports to TeamCity in the format it expects (this format is so verbose that the text report for a single run eventually reaches five megabytes).
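
For reference, TeamCity's service messages look like `##teamcity[testStarted name='...']`. Attribute values are escaped with `|`: `||`, `|'`, `|n`, `|r`, `|[` and `|]`. The Python sketch below only formats such messages. The `report` helper is a hypothetical stand-in for what a PHP TestListener would print for one test, not the authors' listener.

```python
from typing import List, Optional

_ESCAPES = {"|": "||", "'": "|'", "\n": "|n", "\r": "|r", "[": "|[", "]": "|]"}


def escape(value: str) -> str:
    """Escape a value for a TeamCity service message attribute."""
    return "".join(_ESCAPES.get(ch, ch) for ch in value)


def message(kind: str, **attrs: object) -> str:
    """Format one service message, e.g. ##teamcity[testStarted name='...']."""
    body = " ".join(f"{key}='{escape(str(val))}'" for key, val in attrs.items())
    return f"##teamcity[{kind} {body}]"


def report(test_name: str, seconds: float, failure: Optional[str] = None) -> List[str]:
    """Lines a TeamCity-oriented listener might print for one finished test."""
    lines = [message("testStarted", name=test_name)]
    if failure is not None:
        lines.append(message("testFailed", name=test_name, message=failure))
    # TeamCity expects the testFinished duration in milliseconds.
    lines.append(message("testFinished", name=test_name, duration=round(seconds * 1000)))
    return lines
```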

Results

Now a few numbers.

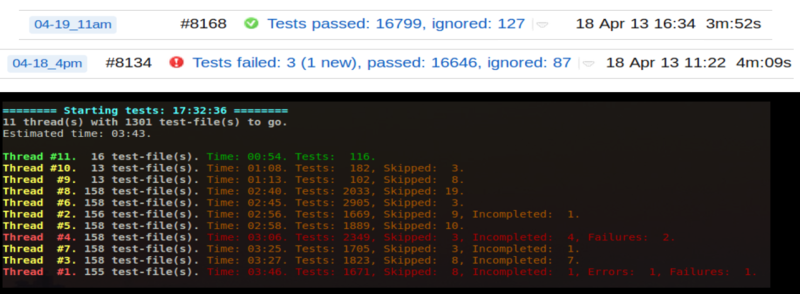

We run more than 17,000 tests in 8 threads (plus three additional threads for "slow" tests, which normally all finish together within a minute).

In the best case (with the usual load on the test servers), tests run with the launcher finish in 3.5-4 minutes, versus 40-50 minutes in a single thread and 8-15 minutes with an equal split.

When the servers are heavily loaded, the launcher quickly adapts to the situation and the tests finish in 8-10 minutes. With an equal split, they took at least 20-25 minutes... And we never even waited for a single-threaded run to finish.

As a result, we got a system that adapts on its own to changes in server load thanks to the statistics received from TeamCity, automatically distributes tests across threads, and is easy to manage and configure.

What did this speed-up give us?

- First, tasks are now tested faster.

- Second, because tests are run more often, the probability of running into fatal errors during the mass merge of task branches into the release branch has dropped significantly.

- Third, the load on the TeamCity agents dropped significantly: tests are no longer run in a single build, and the agents now actually have spare time for special tasks from QA engineers.

- Fourth, it became possible to run tests automatically when a task is sent for testing, so the tester already has some information on whether the task's code works when picking it up.

What's next?

We still have many ideas and plans for improving the system. There are several known problems: test isolation issues left over from the single-threaded days still occur sometimes, and the launcher cannot yet be moved to another project with a single click. So there is still a great deal of work ahead, even though the system already delivers quite impressive results.

In the near future (we are really hoping for June), the system will be completely refactored and revised so that it can be turned into an Open Source project.

We will also build utilities into the launcher that will be able to split a single test with a large number of data providers across several threads, find tests that break the environment, identify the commits that broke a particular test, etc.

In addition, the system architecture will change so that developers can decouple the launcher from TeamCity and tie it, for example, to Jenkins, or run it purely locally without any problems.

I do not claim that our Multithread Launcher is some kind of revolution or a new word in test automation, but in our project it has proven itself very well and, despite some shortcomings, works much more efficiently than any of the publicly available solutions.

Ilya Kudinov, QA Engineer.

Source: https://habr.com/ru/post/181488/
