Doom 3 BFG - source code review: Multithreading (part 2 of 4)

Part 1: Introduction

Part 1: IntroductionPart 2: Multithreading

Part 3: Rendering (Approx. Translation - in the process of translation)

Part 4: Doom classic - integration (Approx. Translation - in the process of translation)

The engine for Doom III was written between 2000 and 2004, at a time when most PCs were single-processor. Although the idTech4 engine architecture was developed with support for SMP, it ended up with support for multithreading at the last minute ( see the interview with John Carmack ).

Since then, much has changed, there is a good article from Microsoft " Programming for multi-core systems ":

For many years, processor performance has steadily increased, and games and other programs have benefited from this increase in power without the need for effort.

The rules have changed. The performance of single-core processors is currently growing very slowly, if at all. However, the computing power of personal computers and consoles continues to grow. The only difference is that basically this increase is now obtained due to the presence of multi-core processors.

The increase in processor power is just as impressive as before, but now developers have to write multi-threaded code in order to unlock the full potential of this power.

')

Doom III BFG multi-core target platforms:

- The Xbox 360 has one Xenon 3 core processor. Simultaneous multithreading platform is 6 logical cores.

- The PS3 has a PowerPC-based main unit (PPE) and eight synergistic cores (SPE).

- The PC often has a quad core processor. With Hyper-Threading, this platform gets 8 logical cores.

As a result, idTech4 was strengthened not only with multi-threading support, but also with the idTech5 “Job Processing System” component, which adds support for multi-core systems.

For your information: the Xbox One and PS4 specifications were announced not so long ago: both will have eight cores. Another reason for any game developer to understand multi-threaded programming.

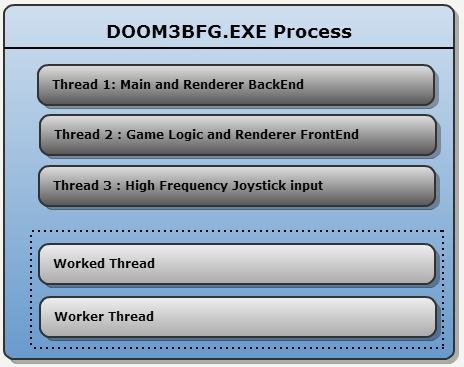

Doom 3 BFG Thread Model

On the PC, the game starts in three streams:

On the PC, the game starts in three streams:- Stream backend rendering interface (Sending GPU commands)

- The flow of game logic and rendering frontend interface

- Stream of high frequency joystick data entry (250Hz)

In addition, idTech4 creates two more worker threads. They are needed to help any of the three main streams. They are managed by the scheduler whenever possible.

main idea

Id Software announced the solution to the problems of multi-core programming in 2009 in the presentation " Beyond Programming Shaders ". Two main ideas here:

- Separate task processing for processing by different threads (“jobs” by “workers”)

- Avoid delegating synchronization to the operating system: do it yourself for atomic operations

System components

The system consists of 3 components:

- Tasks (Jobs)

- Handlers (Workers)

- Synchronization

Tasks are exactly what one would expect:

struct job_t { void (* function )(void *); // Job instructions void * data; // Job parameters int executed; // Job end marker...Not used. }; Note: According to the comments in the code, “the task must last at least a couple of 1000 cycles in order to outweigh the switching costs. On the other hand, a task should not last more than a few 100,000 cycles to maintain a good balance of workload between several processes.

A handler is a stream that will remain inactive pending a signal. When it is activated it tries to find the task. Handlers try to avoid synchronization using atomic operations, trying to get a task from the general list.

Synchronization is performed through three primitives: signals, mutexes, and atomic operations. The latter is preferable, as it allows the engine to maintain the focus of the CPU. Their implementation is described in detail at the bottom of this page .

Architecture

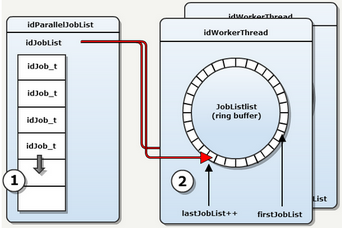

The brain of the subsystem is ParalleleJobManager. It is responsible for generating thread handlers and creating queues in which tasks are stored.

The brain of the subsystem is ParalleleJobManager. It is responsible for generating thread handlers and creating queues in which tasks are stored.And the first idea is to bypass the synchronization: divide the task lists into several sections, each of which is addressed only by one thread and, therefore, synchronization is not required. In the engine, such queues are called idParallelJobList.

There are only three sections in Doom III BFG:

- Render frontend-a

- Render backend-a

- Utilities

On a PC, two worker threads are created at startup, but probably more are created in XBox360 and PS3.

According to the 2009 presentation, more sections have been added to idTech5:

- Defect detection

- Animation processing

- Obstacle avoidance

- Texture processing

- Particle Transparency Processing

- Fabric simulation

- Water surface simulation

- Detailed generation of models

Note: The presentation also mentions the concept of a one-frame delay, but this part of the code does not apply to the Doom III BFG.

Distribution of tasks

Running handlers are constantly waiting for a job. This process does not require the use of mutexes or monitors: atomic increment distributes tasks without overlapping.

Running handlers are constantly waiting for a job. This process does not require the use of mutexes or monitors: atomic increment distributes tasks without overlapping.Using

Since tasks are divided into sections accessible only to one thread, synchronization is not necessary. However, giving the task to the system handler does imply a mutex:

//tr.frontEndJobList is a idParallelJobList object. for ( viewLight_t * vLight = tr.viewDef->viewLights; vLight != NULL; vLight = vLight->next ) { tr.frontEndJobList->AddJob( (jobRun_t)R_AddSingleLight, vLight ); } tr.frontEndJobList->Submit(); tr.frontEndJobList->Wait(); Methods:

- Adding task : no synchronization needed, tasks are added to the queue

- Sending : mutex synchronization, each handler replenishes total JobLists from its local JobLists.

- Synchronization signal (OS delegation) :

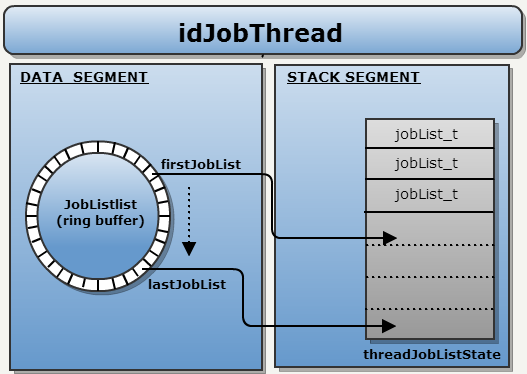

How the handler is executed

Handlers are executed in an infinite loop. In each iteration, the ring buffer is checked, and if the task is found, it is copied to the local stack.

Local stack: The stack of the thread is used to store the addresses of joblists to prevent the mechanism from stopping. If the thread cannot “block” the JobList, it falls into RUN_STALLED mode. This stop can be canceled by moving the stack from the local JobLists to the common list.

It is interesting that everything will be done without any reciprocal mechanisms: only atomic operations.

Endless cycle

int idJobThread::Run() { threadJobListState_t threadJobListState[MAX_JOBLISTS]; while ( !IsTerminating() ) { int currentJobList = 0; // fetch any new job lists and add them to the local list in threadJobListState {} if ( lastStalledJobList < 0 ) // find the job list with the highest priority else // try to hide the stall with a job from a list that has equal or higher priority currentJobList = X; // try running one or more jobs from the current job list int result = threadJobListState[currentJobList].jobList->RunJobs( threadNum, threadJobListState[currentJobList], singleJob ); // Analyze how job running went if ( ( result & idParallelJobList_Threads::RUN_DONE ) != 0 ) { // done with this job list so remove it from the local list (threadJobListState[currentJobList]) } else if ( ( result & idParallelJobList_Threads::RUN_STALLED ) != 0 ) { lastStalledJobList = currentJobList; } else { lastStalledJobList = -1; } } } Running Tasks

int idParallelJobList::RunJobs( unsigned int threadNum, threadJobListState_t & state, bool singleJob ) { // try to lock to fetch a new job if ( fetchLock.Increment() == 1 ) { // grab a new job state.nextJobIndex = currentJob.Increment() - 1; // release the fetch lock fetchLock.Decrement(); } else { // release the fetch lock fetchLock.Decrement(); // another thread is fetching right now so consider stalled return ( result | RUN_STALLED ); } // Run job jobList[state.nextJobIndex].function( jobList[state.nextJobIndex].data ); // if at the end of the job list we're done if ( state.nextJobIndex >= jobList.Num() ) { return ( result | RUN_DONE ); } return ( result | RUN_PROGRESS ); } Id Software Synchronization Tools

Id Software uses three types of synchronization mechanisms:

1. Monitors (idSysSignal):

| Abstraction | Operation | Implementation | Note |

| idSysSignal | Event objects | ||

| Raise | SetEvent | Sets the specified object event to an alarm state. | |

| Clear | ResetEvent | Sets the specified object event to a non-alarm state. | |

| Wait | WaitForSingleObject | Waits until the specified object is in a signal state or until the wait time has elapsed. |

2. Mutexes (idSysMutex):

| Abstraction | Operation | Implementation | Note |

| idSysMutex | Critical Section Objects | ||

| Lock | EnterCriticalSection | Waits to receive the specified critical section object. The function returns when the calling thread has received the property. | |

| Unlock | LeaveCriticalSection | Implements getting the specified object of the critical section. | |

3. Atomic operations (idSysInterlockedInteger):

| Abstraction | Operation | Implementation | Note |

| idSysInterlockedInteger | Interlocked variable | ||

| Increment | InterlockedIncrementAcquire | Incrementing the value of a given 32-bit variable as an atomic operation. The operation has the semantics “acquire”. | |

| Decrement | InterlockedDecrementRelease | Decrementing the value of a given 32-bit variable as an atomic operation. The operation has the release semantics. |

Source: https://habr.com/ru/post/181081/

All Articles