Advantages of hosting in the Netherlands: the EvoSwitch Data Center

In this post I would like to talk a little about the advantages of hosting in the Netherlands, about EvoSwitch, the Dutch data center where our servers are located, and about the pros and cons of working with it.

Why the Netherlands?

In terms of legislation, the Netherlands is the most liberal country in Europe. Its convenient geographical position makes it possible to build good connectivity to America and Europe at the same time, including Ukraine and Russia, the main consumers of Russian-language Internet traffic. In the Netherlands you can also legally host adult sites, which many other countries prohibit or restrict because of imperfect legislation. In Germany, for example, such sites are allowed almost everywhere if they sit behind an age gate with paid access, but not in open access. For some reason, even politicians there do not consider that minors, if they want to, can use their parents' card or simply visit a site hosted in another jurisdiction without such restrictions.

Adult content makes up a huge share of all Internet traffic, over 30% worldwide on average, so it is foolish and short-sighted to prohibit hosting it. Countries that do so end up paying dearly for external traffic, because forbidden content is in even higher demand. Russia illustrates this: the share of foreign adult traffic there is estimated at up to 54%. As a result, the internal networks of such countries lag behind, since local data centers lose up to half of the market. As the old Soviet saying went, "there is no sex here", and legislators introducing such bans apparently still believe it. Only now the idea applies to the Internet, and hosting adult content in Ukraine or Russia can even carry criminal liability (in Russia the situation is somewhat better, but it is still officially prohibited and punishable).

Banning adult sites is, alas, not the only problem. Lately the situation in Ukraine and Russia has been deteriorating both in connectivity between the two countries (there are very few quality channels; Russia and Ukraine do not develop links with each other and apparently have no interest in exchanging traffic) and in the legal sphere. Servers can be seized at any time without a court order as "evidence" on various far-fetched pretexts. Streaming video cannot be delivered with equally high quality to both countries at once unless you invest heavily in building proper peering, and apparently even VKontakte cannot manage it: watching VKontakte videos in Ukraine is sometimes quite sluggish. Poor connectivity and packet loss caused by constant congestion mean that a single stream between Russia and Ukraine sometimes gets only 2 Mbit/s or less, which is not always enough for streaming video, let alone HD.

Traffic between Ukraine and Russia very often takes a convoluted route, for example via Budapest, Frankfurt and London.

The problem is not limited to cross-border links; the internal networks of both countries suffer too. For the same reason, a site hosted abroad sometimes responds faster than one hosted in a data center in the capital when tested from the regions. This is often the case with Ukrtelecom, Ukraine's Internet monopolist: a user in a region may reach a site hosted in Kiev via Germany or even through several European countries. In Russia the situation is on the whole no better, and sometimes worse. As a result, a guaranteed gigabit Internet channel with more or less acceptable connectivity to Russian and Ukrainian users at the same time costs considerably more there than a similar channel in the Netherlands.

A logical question arises: do we really need all these problems with hosting servers in Ukraine and Russia, and for big money at that? In my opinion, no. It is much easier to place servers in a reliable data center in a country with sensible laws and provide excellent connectivity from there.

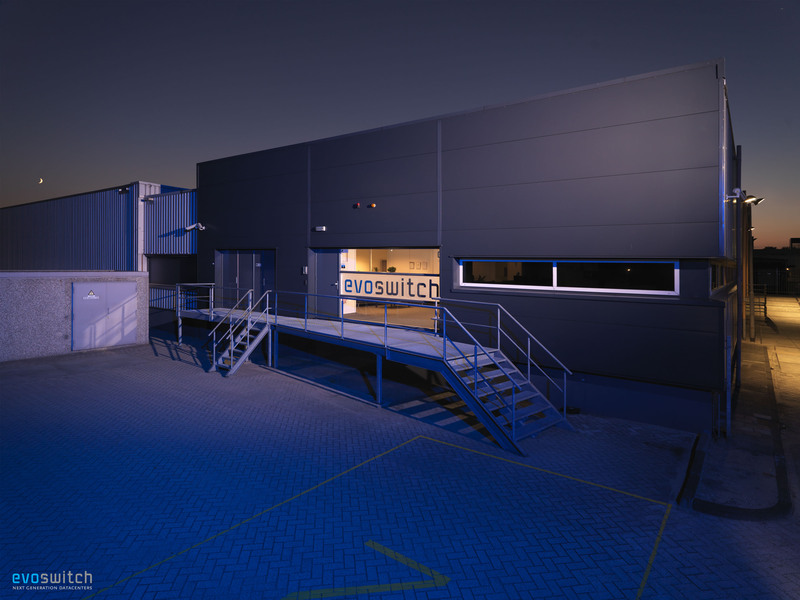

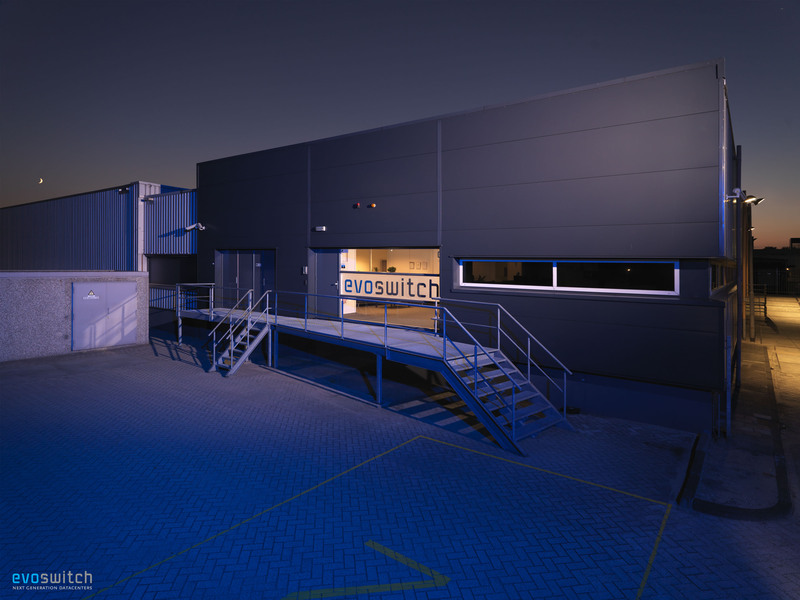

Data Center "EvoSwitch"

EvoSwitch is located in Haarlem, 19 km west of the center of Amsterdam. It was founded in 2006 and commissioned in 2007, with an expansion plan for 5 halls and 2 switching rooms. The currently occupied area is over 5,000 square meters, with room to grow to 10,000 square meters and, in the long term, to 40,000 square meters. Because it sits in an area with few large electricity consumers, EvoSwitch can expand its power consumption up to 60 MW; over 30 MW is currently in use.

EvoSwitch runs on 100% "green" energy and is highly efficient: its PUE (power usage effectiveness) is about 1.2. It was the first data center in the Netherlands whose operation produces almost no carbon dioxide emissions. The only remaining source is employees' vehicles, and the data center offsets those emissions through contributions to an environmental fund.

Cooling follows the hot aisle/cold aisle principle, with a raised floor.

In each hall, servers are placed in isolated modules with adiabatic cooling. Heat from the modules is released into the hall and removed from the hall by industrial air conditioners.

Each module holds 32 server cabinets, and the equipment in each cabinet may draw up to 25 kW.
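To put these figures together, here is a rough power-budget sketch in Python. It assumes that the quoted PUE of about 1.2 (total facility power divided by IT equipment power) applies uniformly to a single module, which is a simplification: PUE is normally measured for the facility as a whole. The function names are illustrative.

```python
# Rough power budget for one module, using the figures quoted in the article.
# Assumption: the facility-wide PUE of about 1.2 applies to this module as well.
CABINETS_PER_MODULE = 32    # cabinets per isolated module (from the article)
MAX_KW_PER_CABINET = 25.0   # maximum equipment load per cabinet, kW (from the article)
PUE = 1.2                   # power usage effectiveness, approximate (from the article)


def module_it_load_kw(cabinets: int = CABINETS_PER_MODULE,
                      kw_per_cabinet: float = MAX_KW_PER_CABINET) -> float:
    """Upper bound on IT equipment load for a fully loaded module."""
    return cabinets * kw_per_cabinet


def facility_draw_kw(it_load_kw: float, pue: float = PUE) -> float:
    """Total power needed to support a given IT load, since PUE = total / IT."""
    return it_load_kw * pue


def overhead_share(pue: float = PUE) -> float:
    """Fraction of total power spent on non-IT loads such as cooling and losses."""
    return 1.0 - 1.0 / pue


it_kw = module_it_load_kw()
print(f"IT load per module: {it_kw:.0f} kW")                             # 800 kW
print(f"Facility draw at PUE {PUE}: {facility_draw_kw(it_kw):.0f} kW")   # 960 kW
print(f"Non-IT overhead share: {overhead_share():.1%}")                  # 16.7%
```

In other words, at a PUE of 1.2 roughly one watt in six goes to cooling and other overhead rather than to the servers themselves.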

In addition, EvoSwitch holds ISO 27001 (information security) and ISO 9001 (quality management) certificates, as well as PCI DSS (Payment Card Industry Data Security Standard) and SAS-70 certification. This confirms the data center's high reliability and allows even banks to host there.

Connectivity

EvoSwitch has excellent connectivity with both Russia and Ukraine; as mentioned above, it is sometimes better than connectivity between regions within Russia or Ukraine. To back up my words, here are some routes to Russia (Moscow and St. Petersburg):

[root@evoswitch.ua-hosting ~] # tracert mail.ru

traceroute to mail.ru (94.100.191.241), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.665 ms 0.663 ms 0.661 ms

2 xe4-0-0.hvc1.evo.leaseweb.net (82.192.95.209) 0.253 ms 0.266 ms 0.267 ms

3 xe1-3-0.jun.tc2.leaseweb.net (62.212.80.121) 0.918 ms 0.930 ms 0.932 ms

4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.932 ms 0.934 ms 0.935 ms

5 ae0-1.RT.M9P.MSK.RU.retn.net (87.245.233.2) 44.981 ms 44.993 ms 44.994 ms

6 GW-NetBridge.retn.net (87.245.229.46) 52.378 ms 52.101 ms 52.095 ms

7 ae37.vlan905.dl4.m100.net.mail.ru (94.100.183.53) 52.093 ms 52.086 ms 52.081 ms

8 mail.ru (94.100.191.241) 41.870 ms 42.195 ms 42.181 ms

[root@evoswitch.ua-hosting ~] #

[root@evoswitch.ua-hosting ~] # tracert selectel.ru

traceroute to selectel.ru (188.93.16.26), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.494 ms 0.775 ms 0.793 ms

2 xe2-2-2.hvc2.leaseweb.net (82.192.95.238) 0.233 ms 0.239 ms 0.241 ms

3 xe1-3-2.jun.tc2.leaseweb.net (62.212.80.93) 0.767 ms 0.771 ms 0.772 ms

4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.782 ms 0.996 ms 0.999 ms

5 ae5-6.RT.KM.SPB.RU.retn.net (87.245.233.133) 34.562 ms 34.573 ms 34.575 ms

6 GW-Selectel.retn.net (87.245.252.86) 41.945 ms 41.735 ms 41.728 ms

7 188.93.16.26 (188.93.16.26) 42.686 ms 42.469 ms 42.448 ms

[root@evoswitch.ua-hosting ~] #

Now here is connectivity to my home provider in Ukraine:

[root@evoswitch.ua-hosting ~] # tracert o3.ua

traceroute to o3.ua (94.76.107.4), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.578 ms 0.569 ms 0.569 ms

2 xe2-2-2.hvc2.leaseweb.net (82.192.95.238) 0.253 ms 0.259 ms 0.260 ms

3 62.212.80.140 (62.212.80.140) 0.894 ms 0.895 ms 0.894 ms

4 ams-ix.vlan425-br01-fft.topnet.ua (195.69.144.218) 7.186 ms 7.192 ms 7.192 ms

5 freenet-gw2-w.kiev.top.net.ua (77.88.212.150) 35.204 ms 35.402 ms 35.404 ms

6 94.76.105.6.freenet.com.ua (94.76.105.6) 39.237 ms 39.285 ms 39.279 ms

7 94.76.104.22.freenet.com.ua (94.76.104.22) 38.184 ms 38.304 ms 38.289 ms

8 noc.freenet.com.ua (94.76.107.4) 39.080 ms 39.200 ms 39.190 ms

[root@evoswitch.ua-hosting ~] #

This excellent connectivity lets me work with my servers in the Netherlands faster than with servers in Kiev at Ukrtelecom's Utel data center. Sad but true: from Kiev, connectivity to a data center in Kiev is worse than connectivity to the Dutch data center. Those are the realities. The reason is that EvoSwitch has not 2-3 uplinks, as Ukrainian and Russian data centers often do, but several dozen, plus various peering arrangements and connections to the main traffic exchange points. The total number of uplinks and peerings exceeds 30, and total connectivity exceeds 3 Tbit/s. This may well be the best-connected data center in the world.
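When reading traces like the ones above, the useful question is where the latency is actually added. Below is a minimal Python sketch, assuming the Linux traceroute output format shown above, that parses hop lines and finds the pair of consecutive hops with the largest jump in round-trip time. The function names are illustrative. It deliberately uses the best (minimum) RTT of each hop, because routers often deprioritize replies to probes addressed to themselves; that is why a later hop can show a lower RTT than an earlier one, as hops 7 and 8 in the mail.ru trace do.

```python
import re
from typing import List, NamedTuple, Tuple

# Matches a hop line such as:
#   5 ae0-1.RT.M9P.MSK.RU.retn.net (87.245.233.2) 44.981 ms 44.993 ms 44.994 ms
# Simplification: assumes one responding host per hop; "* * *" lines are skipped.
HOP_RE = re.compile(r"^\s*(\d+)\s+(\S+)\s+\(([\d.]+)\)\s+(.*)$")
RTT_RE = re.compile(r"([\d.]+)\s*ms")


class Hop(NamedTuple):
    number: int
    host: str
    ip: str
    rtts_ms: List[float]

    @property
    def best_ms(self) -> float:
        # The minimum of the probes is least affected by queuing delay.
        return min(self.rtts_ms)


def parse_traceroute(text: str) -> List[Hop]:
    """Extract hops from Linux traceroute output, ignoring headers and prompts."""
    hops = []
    for line in text.splitlines():
        m = HOP_RE.match(line)
        if not m:
            continue
        rtts = [float(x) for x in RTT_RE.findall(m.group(4))]
        if rtts:
            hops.append(Hop(int(m.group(1)), m.group(2), m.group(3), rtts))
    return hops


def largest_jump(hops: List[Hop]) -> Tuple[Hop, Hop]:
    """Consecutive hop pair with the biggest increase in best RTT (needs >= 2 hops)."""
    return max(zip(hops, hops[1:]), key=lambda pair: pair[1].best_ms - pair[0].best_ms)


# The mail.ru trace from above, with the blank lines removed.
SAMPLE = """traceroute to mail.ru (94.100.191.241), 30 hops max, 40 byte packets
1 hosted.by.leaseweb.com (95.211.156.254) 0.665 ms 0.663 ms 0.661 ms
2 xe4-0-0.hvc1.evo.leaseweb.net (82.192.95.209) 0.253 ms 0.266 ms 0.267 ms
3 xe1-3-0.jun.tc2.leaseweb.net (62.212.80.121) 0.918 ms 0.930 ms 0.932 ms
4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.932 ms 0.934 ms 0.935 ms
5 ae0-1.RT.M9P.MSK.RU.retn.net (87.245.233.2) 44.981 ms 44.993 ms 44.994 ms
6 GW-NetBridge.retn.net (87.245.229.46) 52.378 ms 52.101 ms 52.095 ms
7 ae37.vlan905.dl4.m100.net.mail.ru (94.100.183.53) 52.093 ms 52.086 ms 52.081 ms
8 mail.ru (94.100.191.241) 41.870 ms 42.195 ms 42.181 ms"""

hops = parse_traceroute(SAMPLE)
prev, nxt = largest_jump(hops)
print(f"Largest jump: hop {prev.number} ({prev.host}) -> hop {nxt.number} ({nxt.host}), "
      f"+{nxt.best_ms - prev.best_ms:.1f} ms")
```

For this trace, the jump lands between hops 4 and 5, the RETN link from Amsterdam to Moscow (about 44 ms). Almost all of the end-to-end latency is that single long-haul segment, with no detours through third countries.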

External routes enter the building through 3 separate entry points, and optical cables are routed securely to the optical cross-connect in the switching room. All cables are anonymized and controlled by EvoSwitch, and information about the cable infrastructure is kept up to date in a dedicated database. Fiber from the following operators runs near the data center: BT, Colt, Ziggo, Enertel, Eurofiber, FiberRing, Global Crossing, KPN, MCI/Verizon, Priority, Tele2 and UPC. The main uplinks in use are BT, Cogent, Colt, Deutsche Telekom, FiberRing, Eurofiber, Global Crossing, Interoute, KPN, Relined, Tele2, Telecom Italia, Teleglobe/VSNL and TeliaSonera.

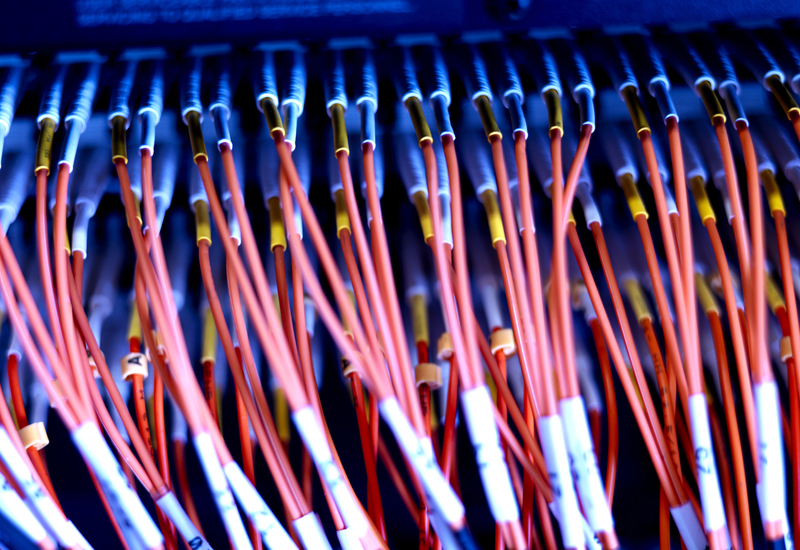

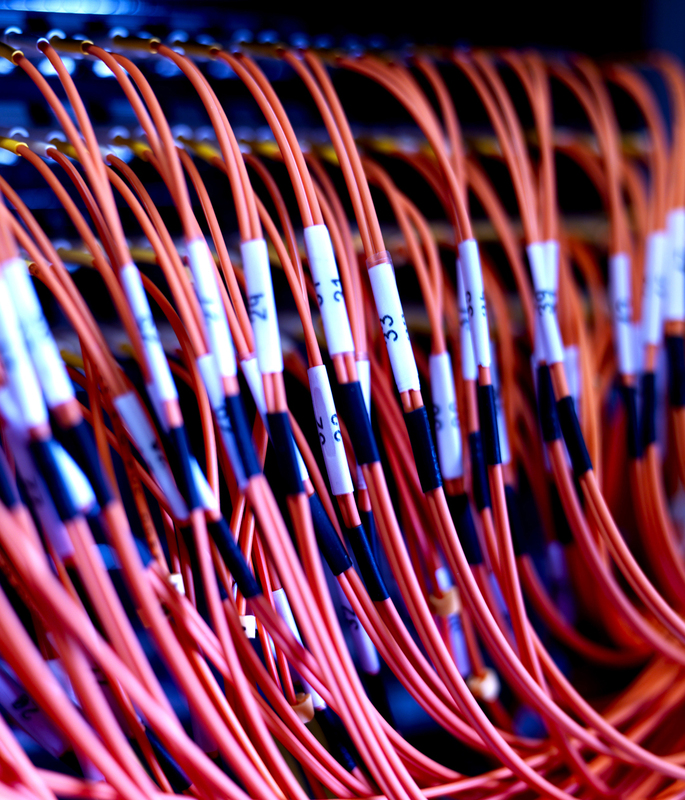

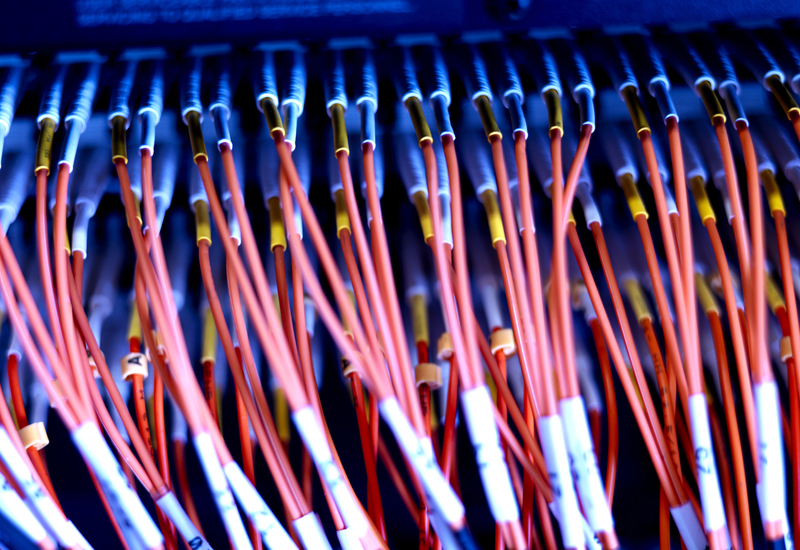

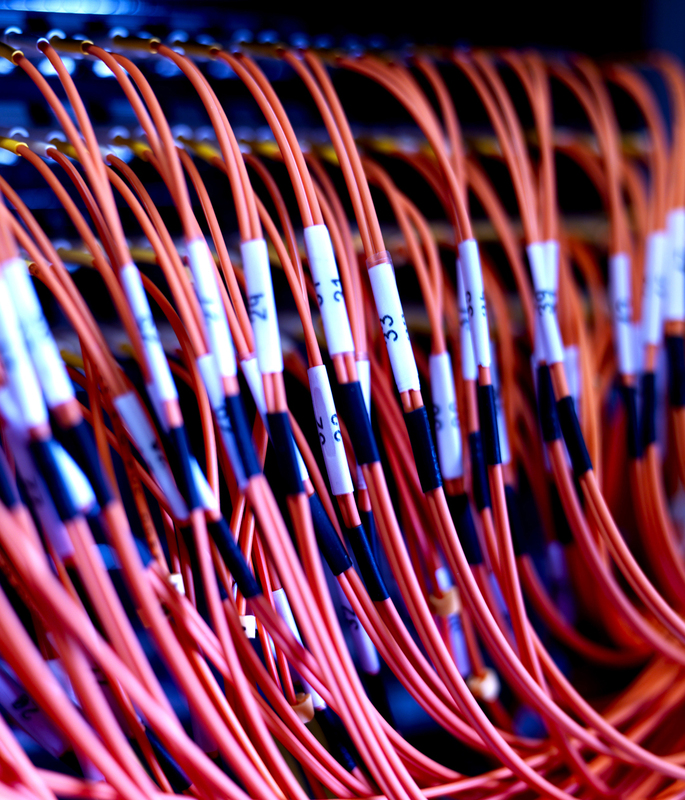

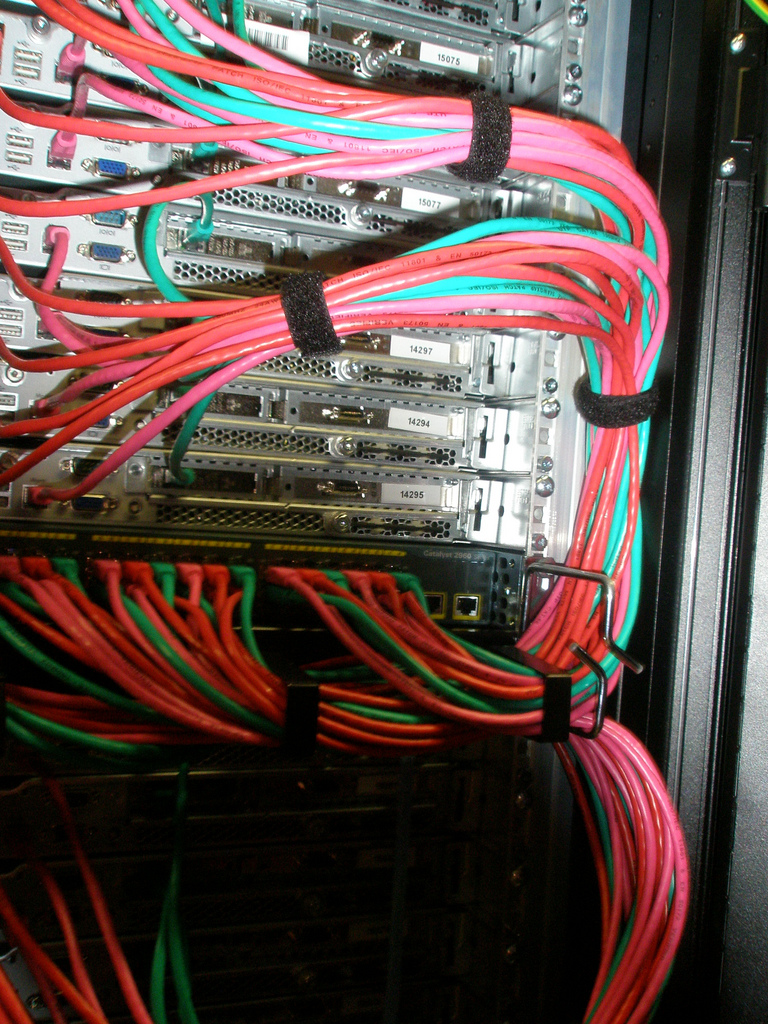

In the data center's switching room you can see a spaghetti of hundreds of yellow optical cables (10 Gbit/s each) and far fewer orange ones (1 Gbit/s each).

Switching room:

This is the data center's network core, which cost more than 1 million euros; the core is duplicated.

Internet exchange points the data center is connected to:

- MSK-IX: 10 Gbit/s
- FRANCEIX: 10 Gbit/s
- AMSIX: 140 Gbit/s
- DE-CIX: 60 Gbit/s
- LINX: 40 Gbit/s
- BNIX: 10 Gbit/s
- EXPANIX: 10 Gbit/s
- NLIX: 10 Gbit/s
- DIX: 1 Gbit/s
- NIX1: 1 Gbit/s
- NETNOD: 10 Gbit/s
- SWISSIX: 10 Gbit/s
- MIX: 1 Gbit/s
- NIX: 1 Gbit/s
- VIX: 1 Gbit/s
- SIX: 1 Gbit/s
- BIX: 1 Gbit/s
- INTERLAN: 1 Gbit/s
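As a sanity check on the scale of these exchange connections, the short Python sketch below adds up the port capacities listed above, counting each exchange once, and compares the sum with the 3 Tbit/s total quoted earlier. The dictionary simply restates the list. The comparison assumes the 3 Tbit/s figure covers all uplinks and peerings, so the remainder would presumably be transit uplinks and private peering, which the article does not break down.

```python
# IX port capacities in Gbit/s, as listed in the article (each exchange counted once).
IX_PORTS_GBPS = {
    "MSK-IX": 10, "FRANCEIX": 10, "AMSIX": 140, "DE-CIX": 60, "LINX": 40,
    "BNIX": 10, "EXPANIX": 10, "NLIX": 10, "DIX": 1, "NIX1": 1, "NETNOD": 10,
    "SWISSIX": 10, "MIX": 1, "NIX": 1, "VIX": 1, "SIX": 1, "BIX": 1, "INTERLAN": 1,
}
QUOTED_TOTAL_GBPS = 3000  # "total connectivity exceeds 3 Tbit/s" (lower bound)


def total_ix_capacity(ports: dict) -> int:
    """Sum of all exchange port capacities."""
    return sum(ports.values())


def share_of_total(ports: dict, name: str) -> float:
    """Fraction of the summed IX capacity provided by one exchange."""
    return ports[name] / total_ix_capacity(ports)


total = total_ix_capacity(IX_PORTS_GBPS)
print(f"Total IX capacity: {total} Gbit/s")                              # 318 Gbit/s
print(f"AMSIX share of IX capacity: {share_of_total(IX_PORTS_GBPS, 'AMSIX'):.0%}")  # 44%
print(f"IX ports vs quoted total: {total / QUOTED_TOTAL_GBPS:.1%}")      # 10.6%
```

Using a dictionary keyed by exchange name means an exchange accidentally listed twice is only counted once.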

The data center's customer base is currently growing quickly, largely thanks to partners who order servers for their own clients: more than a hundred new servers are installed every day. For this reason, there are specific rules for placing and provisioning servers.

There are standard cabinets with so-called "automatic" servers, which are pre-installed and provisioned automatically. Some cabinets are designed to give each server a guaranteed 100 Mbit/s flat fee (no traffic limits), others a guaranteed 1 Gbit/s, and still others host servers with metered traffic.

Configurations are also standardized: each cabinet is filled with servers of the same type, which may differ only in the number of disks and the amount of RAM.

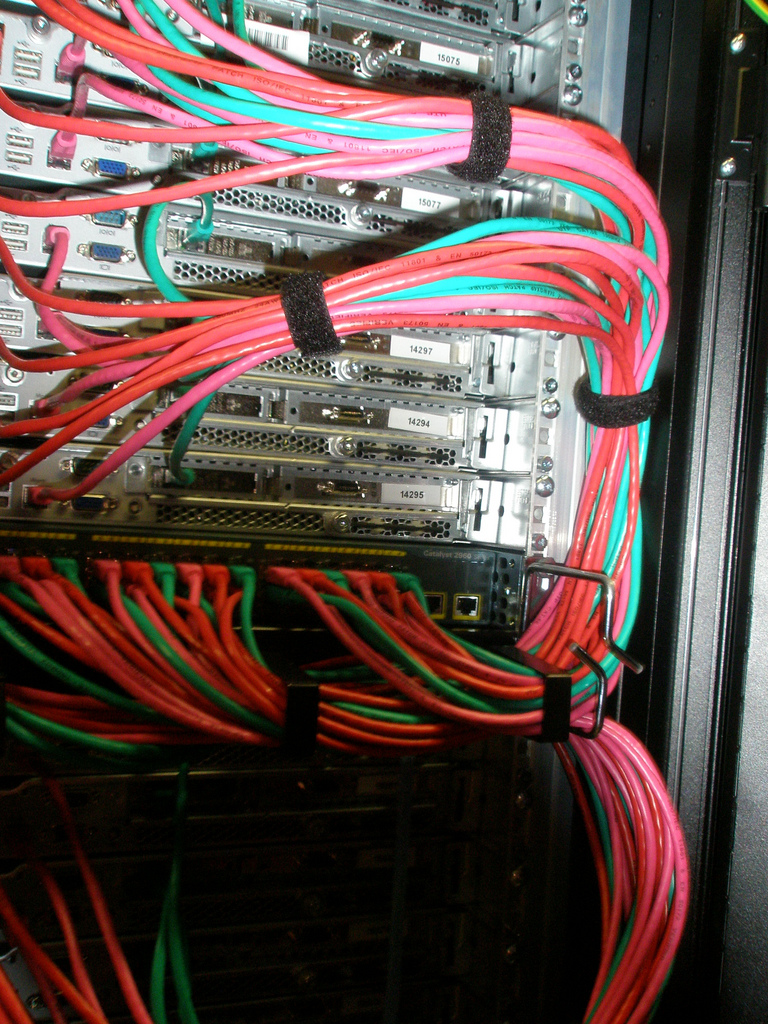

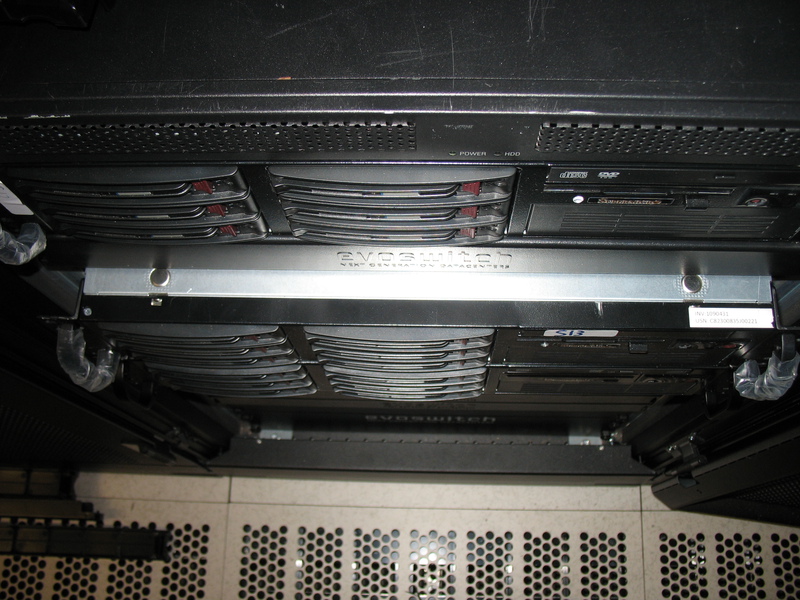

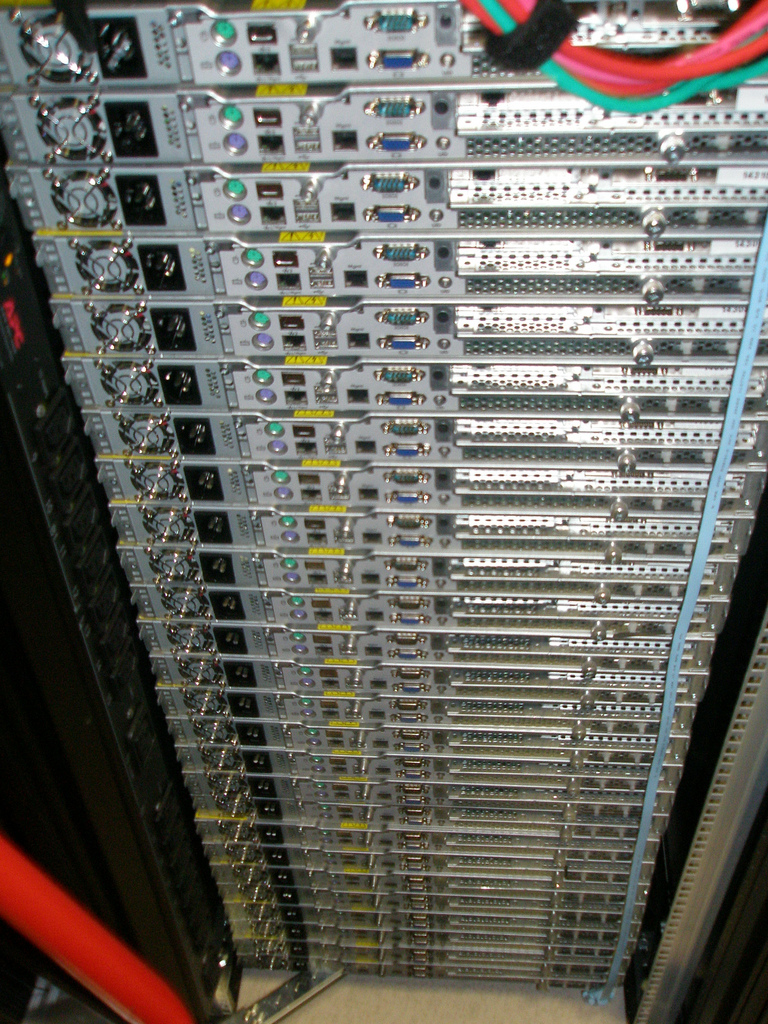

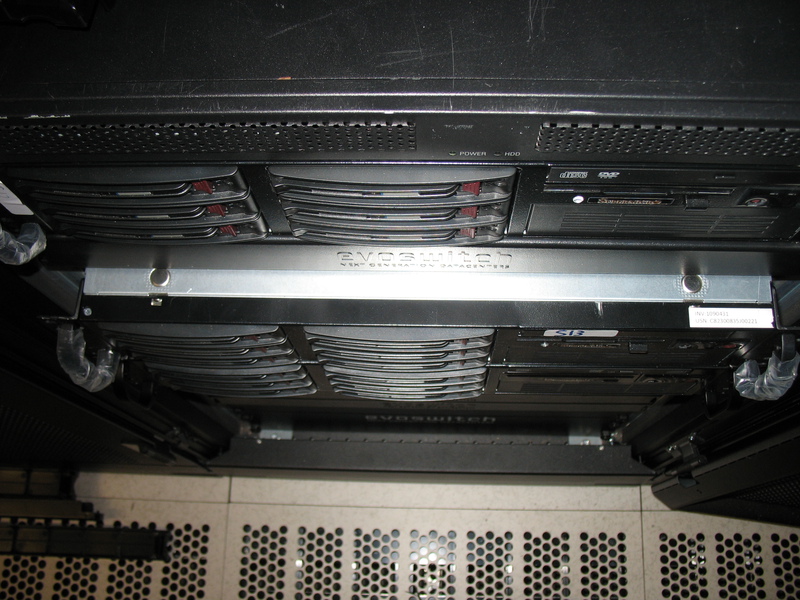

Here is a new batch of 40 HP DL120 servers waiting to be installed in a cabinet:

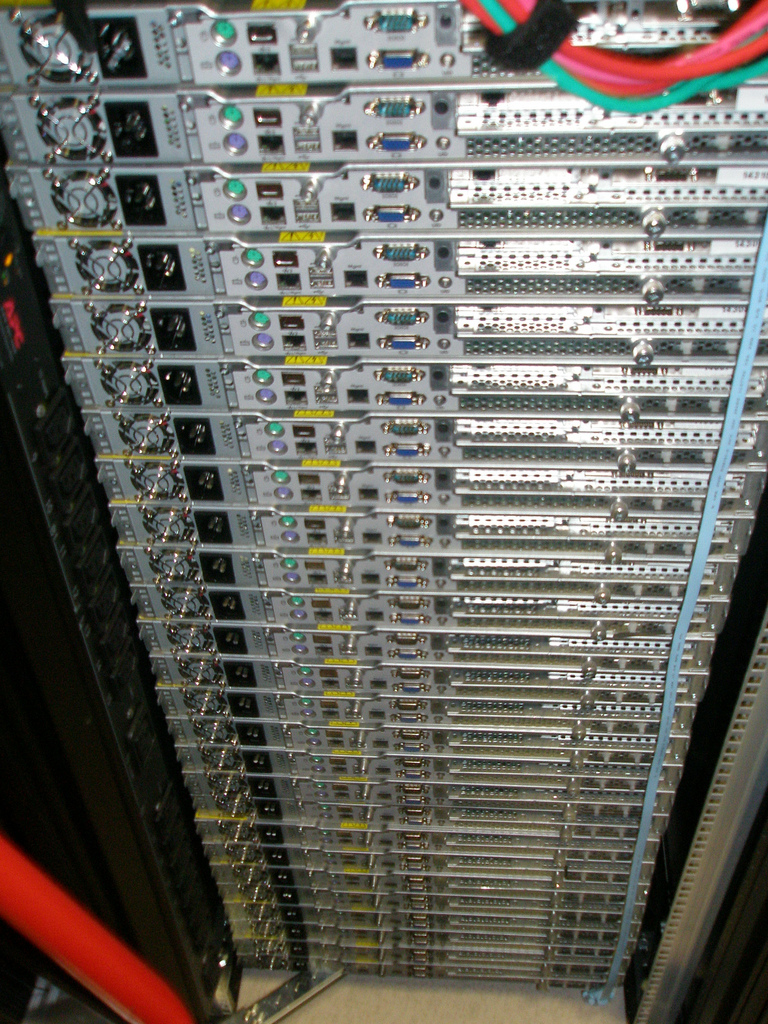

Already installed servers:

Each server is connected not only to the Internet but also to a management system that lets you power-cycle the server, shut down its switch port, and even reinstall the operating system with a single click.

This standardization has disadvantages as well as advantages. The advantage is that servers are provisioned automatically: the system can sometimes deliver a server, configured to your specifications, within minutes of payment. The downside is limited flexibility in hardware configuration and initial setup.

For example, if you need different types of disks in one server (say, SATA and SSD), even though the server hardware supports using them together, you will often be told to order a Custom Built (non-automatic) server. These cost considerably more than automatic ones (sometimes twice as much) because they are installed manually and placed in cabinets where you can connect a channel of any bandwidth and raise it at any time to the level you need. Sometimes, of course, the data center meets you halfway and agrees to add disks after installation (if the disks are the only issue), provided you pay for the engineer's time: 50 euros per half hour, with a minimum charge of half an hour.
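A minimal Python sketch of what such a job might cost. The rate and minimum come from the paragraph above. Rounding up to whole started half-hour blocks is an assumption on my part; the data center's actual billing granularity may differ. `remote_hands_cost` is a hypothetical helper name.

```python
import math

RATE_EUR_PER_HALF_HOUR = 50   # engineer time, from the article
MIN_BILLABLE_MINUTES = 30     # minimum charge of half an hour, from the article
BLOCK_MINUTES = 30            # assumption: billed in whole half-hour blocks


def remote_hands_cost(minutes_worked: float) -> int:
    """Estimated cost in euros for an engineer job of the given length."""
    if minutes_worked <= 0:
        return 0  # no work done, nothing billed
    billable = max(minutes_worked, MIN_BILLABLE_MINUTES)
    blocks = math.ceil(billable / BLOCK_MINUTES)  # round up to started half hours
    return blocks * RATE_EUR_PER_HALF_HOUR


for minutes in (10, 30, 31, 75):
    print(f"{minutes} min -> {remote_hands_cost(minutes)} EUR")
```

Under this assumption, a quick 10-minute disk swap costs the same 50 euros as a full half hour, and a job that overruns by one minute doubles the bill.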

In standard cabinets, however, where servers are connected at 100 Mbit/s, 1 Gbit/s or with metered traffic, you cannot change the traffic plan or increase bandwidth. Nor can you get an extra switch port for permanent IPMI access, because as a rule no ports are reserved for this.

Another drawback is that automatic reinstallation does not support RAID. To get the RAID level you need, you often have to contact an engineer again (unless hardware RAID was configured before the server was installed) or rent a KVM device for 50 euros per day (minimum one day) and do the installation manually yourself.

In most cases, though, you do not need IPMI or an engineer: whether your server is automatic or not, the server management system in the Self Service Center lets you not only reinstall and power-cycle it but also boot it into rescue mode.

Rescue mode lets you boot a small Linux or BSD environment into the server's RAM over the data center network with a single click. In rescue mode you can access files on the server's hard drives, repair the bootloader and perform many other operations. With sufficient administration skills you can even reinstall the operating system with the software RAID level you need, although configuring hardware RAID still requires an engineer. As a result, operations requiring physical intervention are extremely rare.

Some rescue mode specifics: after you click the boot button, the password for the rescue environment should be displayed within 10 minutes. If it is not, restart the server using the button in the panel and wait another 5-10 minutes. If rescue mode still fails to load, contact an engineer. To exit rescue mode, simply restart the server.
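The procedure above is essentially a wait-retry-escalate loop. Here is a minimal Python sketch of it. `request_rescue`, `poll_password` and `reboot` are hypothetical callbacks standing in for the corresponding actions in the Self Service Center; I am not describing a real EvoSwitch API. The 10-minute windows and the 30-second polling interval are parameters you can adjust.

```python
import time
from typing import Callable, Optional

PasswordPoll = Callable[[], Optional[str]]


def _wait_for_password(poll: PasswordPoll, timeout_s: float, interval_s: float,
                       sleep: Callable[[float], None]) -> Optional[str]:
    """Poll until a password appears or the timeout elapses."""
    waited = 0.0
    while waited < timeout_s:
        password = poll()
        if password:
            return password
        sleep(interval_s)
        waited += interval_s
    return None


def boot_rescue(request_rescue: Callable[[], None],
                poll_password: PasswordPoll,
                reboot: Callable[[], None],
                first_wait_s: float = 10 * 60,
                retry_wait_s: float = 10 * 60,
                interval_s: float = 30,
                sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Rescue-mode procedure described in the article (hypothetical callbacks).

    Returns the rescue password, or None if it never appears, at which
    point the article's advice is to contact an engineer.
    """
    request_rescue()                 # press the rescue-mode button
    password = _wait_for_password(poll_password, first_wait_s, interval_s, sleep)
    if password:
        return password
    reboot()                         # restart via the panel, then wait again
    return _wait_for_password(poll_password, retry_wait_s, interval_s, sleep)
```

Passing `sleep` as a parameter lets you test the logic without actually waiting 20 minutes, for example by supplying `sleep=lambda s: None` together with fake callbacks.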

The self-service system has one weakness: if an attacker gains access to your SSC account, they can reinstall all your servers with the corresponding button, wiping your data. This is especially dangerous if the account holds several servers. A solution would be an optional password on the reinstall function, both for individual servers and for groups of servers. We have notified the SSC developers and I hope this will be implemented soon.
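To illustrate the proposed safeguard, here is a minimal Python sketch of a second password checked before a reinstall. This is a hypothetical design, not an existing SSC feature. It stores only a salted PBKDF2 hash per server or group id and compares digests in constant time. `ReinstallGuard` and the iteration count are illustrative choices.

```python
import hashlib
import hmac
import os
from typing import Dict, Tuple

PBKDF2_ITERATIONS = 100_000  # illustrative work factor


def _derive(password: str, salt: bytes) -> bytes:
    """Salted, deliberately slow hash of the reinstall password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)


class ReinstallGuard:
    """Hypothetical extra password in front of the "reinstall" button.

    A password can be set per server id or per group id; only the salt
    and hash are stored, never the password itself.
    """

    def __init__(self) -> None:
        self._secrets: Dict[str, Tuple[bytes, bytes]] = {}

    def set_password(self, target_id: str, password: str) -> None:
        salt = os.urandom(16)
        self._secrets[target_id] = (salt, _derive(password, salt))

    def authorize(self, target_id: str, password: str) -> bool:
        secret = self._secrets.get(target_id)
        if secret is None:
            return False  # fail closed: no reinstall password configured
        salt, expected = secret
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(_derive(password, salt), expected)
```

The sketch fails closed, refusing a reinstall when no password is set. A real panel would more likely make the protection opt-in, so that existing workflows keep working until the customer enables it. The key point is that stealing the account login alone would no longer be enough to wipe every server.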

An SLA at EvoSwitch may be marketing nonsense, but it is also a vital necessity, and here is why. The data center is so large, and its staff sometimes so slow, that without a paid support level an engineer may get to your server in 3 hours, or you may wait half a day (so far there have been no cases of longer waits). Without a paid SLA there is nothing you can do about it. Sometimes, though, the customer causes the delay by not stating the subject of the request precisely. Support has several lines: the first reviews requests and assigns priorities, the second resolves them. You can try to set the priority yourself by choosing the right topic; for example, requests filed under Service Unavailable get a faster response than the rest, which is logical. But do not abuse this: the first line still checks each request's priority, and you can end up on an unofficial "blacklist" and then wait a very long time for answers even to critical requests.
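The two-line support model described above maps naturally onto a priority queue. Below is a minimal Python sketch of it, assuming a hypothetical topic-to-priority table: the article only says that Service Unavailable is handled faster than other topics, and the other topics and numbers are made up for illustration. The customer's topic sets the initial priority, first-line review can override it, and the second line always takes the most urgent, oldest ticket.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Hypothetical topic-to-priority mapping (0 = most urgent).
TOPIC_PRIORITY = {"Service Unavailable": 0, "Hardware": 1, "Network": 1, "General": 3}
DEFAULT_PRIORITY = TOPIC_PRIORITY["General"]


class TriageQueue:
    """Line 1 assigns priority, line 2 works the queue in priority order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO within the same priority

    def submit(self, subject: str, topic: str, reviewed_priority: Optional[int] = None) -> None:
        # The customer's chosen topic sets the initial priority...
        priority = TOPIC_PRIORITY.get(topic, DEFAULT_PRIORITY)
        # ...but first-line review can override a mislabelled request.
        if reviewed_priority is not None:
            priority = reviewed_priority
        heapq.heappush(self._heap, (priority, next(self._seq), subject))

    def next_ticket(self) -> Optional[str]:
        # Second line takes the most urgent (and, within that, oldest) request.
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


q = TriageQueue()
q.submit("disk is noisy", "General")
q.submit("site is down", "Service Unavailable")
q.submit("invoice typo", "Service Unavailable", reviewed_priority=3)  # abuse of the topic
print(q.next_ticket())  # site is down
```

The sequence counter matters: without it, two tickets with equal priority would be compared by subject text, and queue order would no longer reflect arrival time.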

Now let's look at the SLA levels:

- Best Effort (data center engineers make every effort to resolve the issue as soon as possible; sure, they will come running to your server): this free SLA level guarantees nothing, and resolving issues can take a very long time;

- SLA Bronze (24x7xNBD);

- SLA Silver (24x7x12);

- SLA Gold (24x7x4);

- SLA Platinum (24x7x1).

As we can see, the paid levels guarantee that an engineer responds by the next business day, within 12 hours, within 4 hours and within 1 hour, respectively. Note that a response is not a solution to the problem, which is why many consider SLAs in European data centers to be marketing nonsense. For a guaranteed next-business-day response you have to pay over 50 euros per month extra per server, and for a one-hour response 180 euros per month. That is sometimes 3 times the cost of renting the server itself, and at that price it is cheaper to build a cluster. Many customers are certainly not ready to pay that much, and the customer base would shrink noticeably if there were no other way to work. You can get a paid SLA for free if you work through partners rather than directly; more on this scheme later.
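To make the SLA levels concrete, here is a minimal Python sketch that computes when a *response* (not a fix) is due for each level. The fixed windows come from the list above. Treating NBD (next business day) as "the same clock time on the next Monday-Friday date, ignoring public holidays" is my assumption; actual contract terms may define it differently, for example as the end of the next business day.

```python
from datetime import datetime, timedelta
from typing import Optional

# Paid levels with a fixed response window (from the SLA list above).
FIXED_WINDOWS = {
    "Silver": timedelta(hours=12),
    "Gold": timedelta(hours=4),
    "Platinum": timedelta(hours=1),
}


def next_business_day(t: datetime) -> datetime:
    """Assumption: same clock time on the next Monday-Friday date, holidays ignored."""
    d = t + timedelta(days=1)
    while d.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        d += timedelta(days=1)
    return d


def response_deadline(opened: datetime, level: str) -> Optional[datetime]:
    """Latest time a response is due, or None for Best Effort (no guarantee)."""
    if level == "Best Effort":
        return None
    if level == "Bronze":  # 24x7xNBD
        return next_business_day(opened)
    return opened + FIXED_WINDOWS[level]


friday_evening = datetime(2024, 1, 5, 18, 0)  # 5 January 2024 was a Friday
print(response_deadline(friday_evening, "Bronze"))    # 2024-01-08 18:00:00
print(response_deadline(friday_evening, "Platinum"))  # 2024-01-05 19:00:00
```

The Friday-evening example shows why Bronze is weaker than it sounds: a request filed at the start of a weekend may legitimately get its first response only on Monday.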

The high cost of an SLA for private customers with only a few servers who work with the data center directly is one of the harsh realities of this premium-class data center. Why high-quality support cannot be organized without extra charges, I still do not understand. Perhaps the high salaries (compared to Russia and Ukraine) lower efficiency, and people are not used to exerting themselves, even when serving a high-tech facility like EvoSwitch. This is my opinion, based on experience with Ukrainian data centers, which are often less a technology facility than a warehouse. Yet in a Ukrainian data center, 2 technical support staff (paid 400 euros per month each; compare that with the cost of an SLA in the Netherlands!) can serve 10,000 units and, without any paid SLA, manage within 15 minutes to provide KVM access to every customer who needs it and replace failed drives, while, as our admin puts it, "still having time to smoke, drink tea and sit in chat rooms"... It is very strange that this is impossible in the Netherlands, with so many more employees and much higher salaries. Then again, who knows: maybe there they only do the part our admin mentioned? Why does support not work efficiently without extra payment (and sometimes not even with it)? Perhaps it is because, for most EvoSwitch servers, free KVM is not available, so engineers have to resolve the issue and restore availability themselves whenever a customer cannot boot into rescue mode. Perhaps... Although such cases are not very frequent, there is apparently still a shortage of staff and low efficiency in their work. So without a paid SLA, you will not always get a prompt response.

Nevertheless, EvoSwitch management is receptive to criticism, and service efficiency is already starting to improve.

The data center has set up a partner program for large hosting providers, which reduces the load on its staff and increases sales and quality of service. Partner providers get data center services at a discount and, depending on volume, a certain SLA level (Remote Hands) for all their servers free of charge, along with various other perks. Free SLA for partners works because a provider can resolve up to 90% of customer requests on its own, without contacting the data center's engineers. When customers work with the data center directly, those 90% fall on EvoSwitch's technical support staff, and, as you know, salaries there are not small. It is much more profitable for the data center to give a partner an SLA for free (with a reasonable monthly cap on engineer time) and let the partner's staff solve up to 90% of problems at no cost to the data center than to hire more staff. The scheme is attractive and effective for all parties, including the customer, who ultimately gets an SLA up to the highest level without huge payments. The statistics make this clear: for every hundred servers we manage, we filed no more than 100 requests with the data center per year, while we ourselves receive 500 or more requests per year per hundred servers.
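Taking these statistics at face value (at most 100 escalations against at least 500 incoming requests per hundred servers per year), and assuming every escalation stems from a customer request, the share of requests the partner resolves without the data center's engineers is bounded as follows:

$$
\text{resolved by partner} \;=\; 1 - \frac{\text{escalated}}{\text{received}} \;\ge\; 1 - \frac{100}{500} \;=\; 0.8
$$

That is at least 80% in our own case, which is consistent with the "up to 90%" figure.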

Here is the full list of reasons why it is more advantageous for a customer to work through a partner rather than directly:

- support in the customer's native language;

- the maximum SLA level free of charge;

- more flexibility in configuring and installing servers, and the ability to order a custom solution at a lower price;

- faster server provisioning after ordering, sometimes instant;

- a control panel or Windows license as a free bonus;

- faster problem resolution, and the option of ordering administration support at the lowest prices;

- more time to respond to abuse complaints;

- a lower price per server than when buying directly, and a variety of payment methods.

Most importantly, partner-program providers are major customers of the data center, so their requests and needs get more attention than those of a customer with one or even several dozen servers. Working through a partner pays off even when you need to host a large infrastructure of hundreds of servers: together with a partner you get a bigger discount than you could get by ordering on your own, and you spare yourself unnecessary administrative and technical problems, which the provider has more experience solving.

Separately from sales and technical support, the data center has a team of professionals who handle only abuse complaints. Since the data center is large, it receives a lot of complaints every day. The abuse department analyzes them and, if a complaint seems justified, forwards it to the customer with an assigned priority. Unfounded complaints are not passed on to customers. Depending on the priority, the customer has between 1 and 72 hours to respond. If the customer does not respond within the allotted time, the IP address of the server the complaint concerns is temporarily blocked. So it is essential to keep track of complaints.

The greatest risk of ending up with an unreachable server comes from URGENT complaints, which have a 1-hour response window. Responding to an urgent complaint means confirming within that hour that you have started working on the problem. This is why it is better not to order servers directly from the data center: an ordinary customer without their own technical department often cannot respond within an hour. Fortunately, such complaints are very rare. They cover high-volume spam, child pornography and all sorts of perversions (forbidden even in the liberal Netherlands, and rightly so), and phishing sites (imitating banking systems, etc.). If intense spam complaints recur and there is no response within an hour, the server's IP address may be blocked and unblocked only after the server is reinstalled.

Nevertheless, 99% of complaints come with a reasonable response window of 24, 48 or even 72 hours.
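Because an unanswered complaint leads to a blocked IP address, it helps to track each complaint's deadline explicitly. Below is a minimal Python sketch using the response windows mentioned above (1, 24, 48 and 72 hours). Only the URGENT category is named in the article. The names HIGH, MEDIUM and LOW, and how they map to the longer windows, are hypothetical.

```python
from datetime import datetime, timedelta
from enum import Enum


class ComplaintPriority(Enum):
    """Response windows from the article; only URGENT is a name the article uses."""
    URGENT = timedelta(hours=1)
    HIGH = timedelta(hours=24)    # hypothetical name
    MEDIUM = timedelta(hours=48)  # hypothetical name
    LOW = timedelta(hours=72)     # hypothetical name


def response_due(received: datetime, priority: ComplaintPriority) -> datetime:
    """Deadline for acknowledging the complaint."""
    return received + priority.value


def time_left(received: datetime, priority: ComplaintPriority, now: datetime) -> timedelta:
    """Remaining time to respond (negative once overdue)."""
    return response_due(received, priority) - now


def block_risk(received: datetime, priority: ComplaintPriority,
               now: datetime, acknowledged: bool) -> bool:
    """True if the complaint is still unanswered after its window, so the IP may be blocked."""
    return not acknowledged and now > response_due(received, priority)


received = datetime(2024, 1, 5, 12, 0)
print(time_left(received, ComplaintPriority.URGENT, datetime(2024, 1, 5, 12, 30)))  # 0:30:00
```

A monitoring script built on this would sort open complaints by `time_left` and alert on anything URGENT immediately, since an hour leaves little room for email delays.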

As mentioned, EvoSwitch holds ISO 27001 (information security) and ISO 9001 (quality management) certificates, as well as PCI DSS and SAS-70 certification, which allows even banks to host there. Physical access to the data center is by pass only, including biometric checks.

In some areas of the data center where banks' servers are located, access is restricted even for data center staff.

Although the data center's 30+ MW is supplied from several different sources, it also has 16 × 1540 kVA paired diesel generators with N+1 redundancy (expandable). The data center always keeps enough fuel for 72 hours of generator operation and has a contract with a fuel supplier that, under its SLA, must deliver additional fuel within 4 hours of a request.

In addition, there is an uninterruptible power supply system of static UPS units, 1 × 1600 kVA + 3 × 2400 kVA, also expandable.

VESDA (Very Early Smoke Detection Apparatus):

www.evoswitch.com/en/news-and-media/video

Why the Netherlands?

The Netherlands is the most loyal country in terms of legislation among the rest of Europe. A convenient geographical position makes it possible to build good connectivity, both with America and with Europe at the same time, including with Ukraine and Russia - the main consumers of the Russian-speaking Internet traffic segment. And in the Netherlands you can place legal sites for adults, which is impossible in many other countries due to imperfect legislation or possibly with restrictions. For example, in Germany, almost the entire territory, if there is a stub and paid access, it is allowed, but in open access it is not. For some reason, even there, politicians do not think that minors, if necessary, are able to use their parents' card or go to a site located in another region where there are no restrictions.

')

Adult makes up a huge share of all Internet traffic - on average, over 30% of the world, so it’s stupid and not far-sighted to prevent its placement, such countries have to pay dearly for external traffic, because when it’s impossible - the demand is even higher. This can be confirmed by Russia, where the share of adult foreign traffic is estimated at up to 54%. As a result, the development of internal networks of such countries leaves much to be desired, as local Data Centers lose up to half of the market. But as they said before, there is no sex in Russia and Ukraine, as the legislators apparently believe, introducing such bans. Only now this concept is relevant for the Internet, for which even criminal liability is foreseen, if God forbid you have placed a strawberry in Ukraine or Russia (in the Russian Federation, the situation is better with this, but also officially prohibited and punishable).

Banning adult sites is, alas, not the only problem. Unfortunately, in Ukraine and Russia, the sad situation has been developing lately, both in terms of connectivity between countries (there are very few quality channels, Russia and Ukraine do not develop connectivity with each other, apparently there is no interest in traffic exchange) and in law . Servers can be withdrawn without a court decision as a “proof” for various far-fetched motives at any time, and viewing streaming video cannot be made equally high quality for both countries at the same time, unless it is spent thoroughly on building normal peering. This is not capable, apparently, even Vkontakte. Watching videos from Vkontakte sometimes pretty dumb in Ukraine. Poor connectivity and losses on the channels, which are always present due to network congestion, lead to the fact that the speed of the stream between Russia and Ukraine sometimes reaches only 2 Mbit / s, sometimes lower, which is sometimes not enough to watch streaming video and the more HD video quality.

Very often, traffic between Ukraine and Russia goes on a very difficult route, for example via Budapest, Frankfurt, London:

But sadness is not only in this, but also in the internal networks of countries. For a similar reason, sometimes a site from abroad will respond better than a site located in the Data Center of the capital, if checked from the regions. In the same Ukrtelecom, which is an Internet monopolist in Ukraine, this is often observed. A user from a region can access a site hosted in Kiev, through Germany or even through several European countries. In Russia as a whole, the situation is no better, and sometimes even worse. For this reason, guaranteed gigabit on the Internet, which will have more or less acceptable connectivity, both with Russian and Ukrainian users at the same time, will cost considerably more money than a similar channel in the Netherlands.

A logical question arises, do we really need all these problems with hosting servers in Ukraine and Russia, and even for big money? In my opinion - no. It would be much easier to place the servers in a reliable Data Center and adequate to the country and provide excellent connectivity from there.

Data Center "EvoSwitch"

Located in Haarlem, 19 km west of the center of Amsterdam, founded in 2006 and commissioned in 2007, with an expansion plan for 5 halls and 2 switching rooms. The current occupied area is over 5000 square meters. m with the possibility of growth up to 10 000 square meters. m, and in the long term - up to 40 000 square meters. Due to its favorable location in an area with small electricity consumers, the EvoSwitch has the ability to expand consumption up to 60 MW of power, currently over 30 MW is used.

EvoSwitch uses 100% "green" energy, and the most efficient, energy efficiency ratio is about 1.2. This is the first Data Center in the Netherlands, as a result of which there are no emissions of carbon dioxide into the atmosphere, almost absent. The only sources are the vehicles of employees whose emissions Data Center compensates by contributions to the environmental fund.

Cooling is designed on the principle of hot and cold corridors, raised floor is used:

In each hall, servers are placed in isolated modules with adiabatic cooling, heat from the modules is emitted into the hall, and from the hall is removed by industrial air conditioners:

The capacity of one module is 32 server cabinets; in each of the cabinets, equipment consumption of up to 25 kW is allowed.

In addition, the Data Center EvoSwitch is the holder of ISO27001 and 9001 security certificates, PCI DSS (Payment Card Industry Data Security Standard) and SAS-70, which confirms the high reliability of the Data Center and allows even banks to be placed.

Connectivity

The EvoSwitch Data Center has excellent connectivity, both with Russia and Ukraine, as already indicated above, it is sometimes better than connectivity between different regions of Russia and Ukraine within the country. To confirm my words, here are some routes to Russia (Moscow and St. Petersburg):

[root@evoswitch.ua-hosting ~] # tracert mail.ru

traceroute to mail.ru (94.100.191.241), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.665 ms 0.663 ms 0.661 ms

2 xe4-0-0.hvc1.evo.leaseweb.net (82.192.95.209) 0.253 ms 0.266 ms 0.267 ms

3 xe1-3-0.jun.tc2.leaseweb.net (62.212.80.121) 0.918 ms 0.930 ms 0.932 ms

4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.932 ms 0.934 ms 0.935 ms

5 ae0-1.RT.M9P.MSK.RU.retn.net (87.245.233.2) 44.981 ms 44.993 ms 44.994 ms

6 GW-NetBridge.retn.net (87.245.229.46) 52.378 ms 52.101 ms 52.095 ms

7 ae37.vlan905.dl4.m100.net.mail.ru (94.100.183.53) 52.093 ms 52.086 ms 52.081 ms

8 mail.ru (94.100.191.241) 41.870 ms 42.195 ms 42.181 ms

[root@evoswitch.ua-hosting ~] #

[root@evoswitch.ua-hosting ~] # tracert selectel.ru

traceroute to selectel.ru (188.93.16.26), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.494 ms 0.775 ms 0.793 ms

2 xe2-2-2.hvc2.leaseweb.net (82.192.95.238) 0.233 ms 0.239 ms 0.241 ms

3 xe1-3-2.jun.tc2.leaseweb.net (62.212.80.93) 0.767 ms 0.771 ms 0.772 ms

4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.782 ms 0.996 ms 0.999 ms

5 ae5-6.RT.KM.SPB.RU.retn.net (87.245.233.133) 34.562 ms 34.573 ms 34.575 ms

6 GW-Selectel.retn.net (87.245.252.86) 41.945 ms 41.735 ms 41.728 ms

7 188.93.16.26 (188.93.16.26) 42.686 ms 42.469 ms 42.448 ms

[root@evoswitch.ua-hosting ~] #

And here is the connectivity to my home provider in Ukraine:

[root@evoswitch.ua-hosting ~] # tracert o3.ua

traceroute to o3.ua (94.76.107.4), 30 hops max, 40 byte packets

1 hosted.by.leaseweb.com (95.211.156.254) 0.578 ms 0.569 ms 0.569 ms

2 xe2-2-2.hvc2.leaseweb.net (82.192.95.238) 0.253 ms 0.259 ms 0.260 ms

3 62.212.80.140 (62.212.80.140) 0.894 ms 0.895 ms 0.894 ms

4 ams-ix.vlan425-br01-fft.topnet.ua (195.69.144.218) 7.186 ms 7.192 ms 7.192 ms

5 freenet-gw2-w.kiev.top.net.ua (77.88.212.150) 35.204 ms 35.402 ms 35.404 ms

6 94.76.105.6.freenet.com.ua (94.76.105.6) 39.237 ms 39.285 ms 39.279 ms

7 94.76.104.22.freenet.com.ua (94.76.104.22) 38.184 ms 38.304 ms 38.289 ms

8 noc.freenet.com.ua (94.76.107.4) 39.080 ms 39.200 ms 39.190 ms

[root@evoswitch.ua-hosting ~] #
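Routes like these can be compared by parsing the hop lines instead of reading them by eye. The following minimal Python sketch assumes the classic Linux traceroute line format shown above: a hop number, a hostname, an IP in parentheses, then up to three RTTs in ms. It does not handle lines with `*` timeouts or several responding hosts per hop.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# hop number, hostname, (IP), then the rest of the line holding the RTTs
HOP_RE = re.compile(r"^\s*(\d+)\s+(\S+)\s+\(([\d.]+)\)\s+(.*)$")
RTT_RE = re.compile(r"([\d.]+)\s*ms")

@dataclass
class Hop:
    number: int
    host: str
    ip: str
    rtts_ms: List[float]

    @property
    def best_ms(self) -> Optional[float]:
        return min(self.rtts_ms) if self.rtts_ms else None

def parse_hop(line: str) -> Optional[Hop]:
    m = HOP_RE.match(line)
    if not m:
        return None  # header, shell prompt, blank or unsupported line
    number, host, ip, rest = m.groups()
    return Hop(int(number), host, ip, [float(x) for x in RTT_RE.findall(rest)])

def parse_trace(text: str) -> List[Hop]:
    return [hop for hop in map(parse_hop, text.splitlines()) if hop]

sample = """traceroute to mail.ru (94.100.191.241), 30 hops max, 40 byte packets
4 ae4-30.RT.TC2.AMS.NL.retn.net (87.245.246.17) 0.932 ms 0.934 ms 0.935 ms
5 ae0-1.RT.M9P.MSK.RU.retn.net (87.245.233.2) 44.981 ms 44.993 ms 44.994 ms
8 mail.ru (94.100.191.241) 41.870 ms 42.195 ms 42.181 ms"""

hops = parse_trace(sample)
print(hops[-1].host, hops[-1].best_ms)  # prints: mail.ru 41.87
```

Most of the latency in this trace is added on a single hop, between Amsterdam (hop 4) and Moscow (hop 5) on the RETN network.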

Connectivity this good lets me work with my servers in the Netherlands faster than with servers at Ukrtelecom's Utel data center in Kiev. Sad but true: from Kiev, connectivity to a Kiev data center is worse than to the Dutch one. Such are the realities. The reason is that EvoSwitch has several dozen uplinks, not 2-3 as is common at Ukrainian and Russian sites. It also has numerous peerings and connections to the major traffic exchange points. In total there are more than 30 uplinks and peerings, and total connectivity exceeds 3 Tbit/s. This may well be the best-connected data center in the world.

External cabling enters the building through 3 separate entry points. Optical cables are routed securely to the optical cross-connect in the switching room. All cables are anonymous and managed by EvoSwitch, and information about the cable infrastructure is kept up to date in a dedicated database. Fibers of the following operators run near the data center: BT, Colt, Ziggo, Enertel, Eurofiber, FiberRing, Global Crossing, KPN, MCI / Verizon, Priority, Tele2, UPC. As a result, the main uplinks used include BT, Cogent, Colt, Deutsche Telekom, FiberRing, Eurofiber, Global Crossing, Interoute, KPN, Relined, Tele2, Telecom Italia, Teleglobe / VSNL and TeliaSonera.

In the switching room you can see a spaghetti of hundreds of yellow optical cables (10 Gbit/s each) and far fewer orange ones (1 Gbit/s each).

Switching room:

This is the data center's network core, which cost more than 1 million euros, and it is duplicated:

These are the exchange points the data center is connected to, with their port capacities:

MSK-IX - 10 Gbit/s

France-IX - 10 Gbit/s

AMS-IX - 140 Gbit/s

DE-CIX - 60 Gbit/s

LINX - 40 Gbit/s

BNIX - 10 Gbit/s

ESPANIX - 10 Gbit/s

NL-ix - 10 Gbit/s

DIX - 1 Gbit/s

NIX1 - 1 Gbit/s

Netnod - 10 Gbit/s

SwissIX - 10 Gbit/s

MIX - 1 Gbit/s

NIX - 1 Gbit/s

VIX - 1 Gbit/s

SIX - 1 Gbit/s

BIX - 1 Gbit/s

InterLAN - 1 Gbit/s
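The port capacities in this list can be summed to get a sense of scale. The following small Python sketch counts DE-CIX only once, since it appears twice in the original list. It treats each figure as a single aggregate port capacity, which is an assumption about how the numbers were quoted.

```python
# Port capacity per exchange point in Gbit/s, as listed in the article.
IX_PORTS_GBPS = {
    "MSK-IX": 10, "France-IX": 10, "AMS-IX": 140, "DE-CIX": 60,
    "LINX": 40, "BNIX": 10, "ESPANIX": 10, "NL-ix": 10,
    "DIX": 1, "NIX1": 1, "Netnod": 10, "SwissIX": 10,
    "MIX": 1, "NIX": 1, "VIX": 1, "SIX": 1, "BIX": 1, "InterLAN": 1,
}

total = sum(IX_PORTS_GBPS.values())
largest = max(IX_PORTS_GBPS, key=IX_PORTS_GBPS.get)
# prints: 18 exchanges, 318 Gbit/s total, largest: AMS-IX
print(f"{len(IX_PORTS_GBPS)} exchanges, {total} Gbit/s total, largest: {largest}")
```

If both figures are accurate, exchange points account for roughly 0.3 Tbit/s. Most of the 3 Tbit/s total quoted earlier would then come from transit uplinks and private peerings.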

Standardization and self-service system

The data center's client base is currently growing fast. Partly thanks to partners who order servers for their own clients, more than a hundred new servers are installed every day. For this reason, there are fixed rules for placing and provisioning servers.

Standard cabinets hold so-called "automatic" servers, which are pre-installed and provisioned automatically. These cabinets give each server either a guaranteed 100 Mbit/s flat-fee channel (no traffic limits) or a guaranteed 1 Gbit/s channel. There are also cabinets for servers with capped traffic.

Configurations are standardized as well. Each cabinet is filled with servers of the same type, which may differ only in the number of disks and the amount of RAM.

Here is a new batch of 40 HP DL120 servers waiting to be installed in a cabinet:

Already installed servers:

Each server is connected not only to the Internet but also to a management system. With a single click, this system lets you power-cycle the server, disable its switch port, and even reinstall its operating system.

This standardization has disadvantages as well as advantages. The advantage is that the servers are automatic. The system can sometimes hand one over, configured to your wishes, within minutes of payment. The downside is limited flexibility in hardware configuration and initial setup.

For example, you may need different types of disks in one server, say SATA and SSD. Even though the server hardware supports using them together, you will often be told to order a Custom Built (non-automatic) server. These cost considerably more than automatic servers, sometimes twice as much. The reason is that they are installed manually and placed in cabinets where you can connect a channel of any bandwidth and raise it at any time. Sometimes, of course, the data center meets you halfway and agrees to add disks after installation, if disks are the only issue. You then pay for the engineer's time: 50 euros per half hour, with a minimum charge of half an hour.

In standard cabinets, however, servers are connected to a 100 Mbit/s, 1 Gbit/s or traffic-capped channel, and you cannot change the traffic plan or increase throughput. Nor can you get an additional switch port for permanent IPMI access, because as a rule no ports are reserved for this.

Another drawback is that automatic reinstallation does not support RAID. To get the RAID level you want, you often have to contact an engineer again, unless hardware RAID was configured before the server was installed. The alternative is to order a temporary KVM device for 50 euros per day (minimum one day) and do the installation yourself.
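The two paid fallbacks mentioned here, engineer time and a temporary KVM, are easy to budget. The following minimal Python sketch assumes that engineer time is billed per started half hour and KVM rental per started day. The article only states 50 euros per half hour with a half-hour minimum, and 50 euros per day with a one-day minimum.

```python
import math

ENGINEER_EUR_PER_HALF_HOUR = 50
KVM_EUR_PER_DAY = 50

def engineer_cost_eur(minutes: float) -> int:
    """Engineer time: 50 EUR per half hour, minimum one half hour.
    ASSUMPTION: billed per started half hour."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    half_hours = max(1, math.ceil(minutes / 30))
    return half_hours * ENGINEER_EUR_PER_HALF_HOUR

def kvm_cost_eur(hours: float) -> int:
    """Temporary KVM: 50 EUR per day, minimum one day.
    ASSUMPTION: billed per started day."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    days = max(1, math.ceil(hours / 24))
    return days * KVM_EUR_PER_DAY

print(engineer_cost_eur(10), engineer_cost_eur(45), kvm_cost_eur(3))  # prints: 50 100 50
```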

In most cases, however, you need neither IPMI nor an engineer. Whatever server you have, automatic or not, the server management system in the Self Service Center (SSC) lets you reinstall and power-cycle it. It also lets you boot the server into recovery (rescue) mode.

Rescue mode lets you boot a small Linux or BSD environment into the server's RAM over the data center network with a single button. In rescue mode you can access the files on the server's disks, repair the bootloader and perform many other operations. With adequate administration skills you can even reinstall the operating system with the software RAID level you need. Configuring hardware RAID, however, still requires an engineer. As a result, operations that need physical intervention are extremely rare.

A few details about rescue mode. After you press the boot button, the password for the requested environment should appear within 10 minutes. If it does not, restart the server with the panel button and wait another 5-10 minutes. If rescue mode still fails to load, contact an engineer. To leave rescue mode, simply restart the server.
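This waiting procedure can be scripted if you automate rescue boots. The following Python sketch uses three hypothetical callbacks: `request_rescue`, `poll_password` and `power_reboot`. They stand in for whatever you use to press the SSC buttons and read the panel, because the article does not describe any SSC programming interface. The timings follow the text: wait up to 10 minutes, then perform one power restart and wait up to another 10 minutes (the upper end of the quoted 5-10). After that, escalate to an engineer.

```python
import time
from typing import Callable, Optional

def boot_rescue(request_rescue: Callable[[], None],
                poll_password: Callable[[], Optional[str]],
                power_reboot: Callable[[], None],
                first_wait_s: float = 600,
                retry_wait_s: float = 600,
                poll_interval_s: float = 30,
                sleep: Callable[[float], None] = time.sleep) -> str:
    """Request rescue mode and return its password, following the manual procedure.
    All three callbacks are HYPOTHETICAL stand-ins for panel actions."""

    def wait_for_password(timeout_s: float) -> Optional[str]:
        waited = 0.0
        while waited < timeout_s:
            password = poll_password()
            if password:
                return password
            sleep(poll_interval_s)
            waited += poll_interval_s
        return poll_password()  # one last look at the deadline

    request_rescue()  # press the rescue-mode button
    password = wait_for_password(first_wait_s)
    if password:
        return password
    power_reboot()  # no password after the first wait: restart via the panel
    password = wait_for_password(retry_wait_s)
    if password:
        return password
    raise RuntimeError("rescue mode did not load; contact a data center engineer")
```

Injecting `sleep` lets the logic be exercised without real waits.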

The self-service system has one flaw. If an attacker gains access to your SSC account, they can reinstall all your servers by pressing the corresponding button. This causes data loss, which is especially painful when the account holds several servers. One solution would be an option to set a separate password on the reinstallation function, for a single server or for a group of servers. We have passed this suggestion to the SSC developers, and I hope it will be implemented soon.
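The proposed fix is a separate password guarding the reinstall action for one server or a group of servers. It could look roughly like the following hypothetical Python sketch. This is not SSC code, and the names `ReinstallGuard` and `reinstall_servers` are ours. The sketch stores only a salted PBKDF2 hash of the reinstall password and compares hashes in constant time.

```python
import hashlib
import hmac
import os
from typing import Callable, Iterable

class ReinstallGuard:
    """HYPOTHETICAL separate secret for destructive actions, one per server or group."""

    def __init__(self, password: str, iterations: int = 100_000) -> None:
        self._salt = os.urandom(16)
        self._iterations = iterations
        self._digest = self._derive(password)  # only the hash is kept

    def _derive(self, password: str) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                   self._salt, self._iterations)

    def check(self, password: str) -> bool:
        return hmac.compare_digest(self._derive(password), self._digest)

def reinstall_servers(servers: Iterable[str], password: str,
                      guard: ReinstallGuard,
                      do_reinstall: Callable[[str], None]) -> int:
    """Reinstall every server in the group only if the reinstall password matches."""
    if not guard.check(password):
        raise PermissionError("reinstall password rejected")
    count = 0
    for server in servers:
        do_reinstall(server)
        count += 1
    return count
```

Because the guard's secret is separate from the account login, a stolen SSC password alone would no longer be enough to wipe every server.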

SLA (service-level agreement) and technical support features

At EvoSwitch an SLA may be marketing bullshit, but it is also a vital necessity. Here is why. The data center is so big, and the staff are sometimes so slow, that without a guaranteed support level someone may get to your server in 3 hours, or you may wait half a day. There have been no cases of longer waits, but there is no getting by without a paid SLA. Sometimes, though, subscribers themselves cause the delay by not stating the subject of their request precisely. The data center has several support lines: one reviews requests and assigns priorities, and another resolves them. You can also try to set the priority yourself by choosing the appropriate topic. For example, requests filed under Service Unavailable get a faster reaction than others, which is logical. But do not abuse this. The first support line still checks the priority of each request, and you may land on an unspoken "black list" and then wait a very long time for answers to critical requests.

Now consider the SLA levels:

- Best Effort (data center engineers make every effort to resolve the issue as soon as possible; yeah, they just run to the servers, no doubt): the free SLA level, which guarantees nothing, so resolving issues can take a very long time;

- SLA Bronze (24x7xNBD);

- SLA Silver (24x7x12);

- SLA Gold (24x7x4);

- SLA Platinum (24x7x1).

As you can see, the paid levels guarantee that an engineer will respond within 24, 12, 4 and 1 hours respectively. Note that a RESPONSE is not a solution to the problem, which is why many consider SLAs in European data centers to be marketing bullshit. For a guaranteed response within a day, you pay more than 50 euros/month extra per server. For a response within an hour, you pay 180 euros/month. That is sometimes 3 times the cost of renting the server itself, and building a cluster would be cheaper. Surely many customers are not willing to pay that kind of money, and there would be noticeably fewer of them if there were no alternative. You can get a paid SLA for free if you work through a partner rather than directly. More on that scheme later.
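These tiers can be expressed as a response deadline computed from the time a ticket is filed. The following minimal Python sketch uses the hours given in the text. NBD properly means "next business day," so treating it as a flat 24 hours, as the text does, is a simplification. Best Effort has no deadline at all.

```python
from datetime import datetime, timedelta
from typing import Optional

# Guaranteed time to first RESPONSE (not to resolution), per the article.
SLA_RESPONSE_HOURS = {
    "Best Effort": None,  # free, no guarantee
    "Bronze": 24,         # 24x7xNBD, approximated as 24 h
    "Silver": 12,         # 24x7x12
    "Gold": 4,            # 24x7x4
    "Platinum": 1,        # 24x7x1
}

def response_deadline(level: str, filed_at: datetime) -> Optional[datetime]:
    """Latest time by which an engineer must respond, or None if not guaranteed."""
    hours = SLA_RESPONSE_HOURS[level]
    return None if hours is None else filed_at + timedelta(hours=hours)

filed = datetime(2013, 5, 20, 10, 0)
print(response_deadline("Gold", filed))  # prints: 2013-05-20 14:00:00
```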

For private subscribers who work directly with the data center and have only a few servers, the high cost of an SLA is one of the harsh realities of this premium-class facility. I still do not understand why high-quality support cannot be organized without extra payments. Apparently the high salaries (compared to Russia and Ukraine) lower efficiency: people are not used to straining themselves, even when serving a high-tech platform like EvoSwitch. This is my opinion, based on experience with Ukrainian sites, which are often less a technology facility than a warehouse. Yet in a Ukrainian data center, 2 support staff can cover 10,000 units, earning 400 euros/month each (compare that with the cost of an SLA in the Netherlands!). Without any paid SLA, they provide KVM access within 15 minutes to every subscriber who needs it and replace failed drives. As our admin puts it, they "still have time to smoke, drink tea and sit in chat rooms"... It is very strange that this is impossible in the Netherlands, with such a large staff and much higher salaries. Then again, I do not know; perhaps only the part of the work the administrator spelled out gets done? Why does support not work efficiently without extra payments, and sometimes not even with them? Perhaps it is because most EvoSwitch servers cannot be given free KVM access. When a client cannot boot Rescue, the engineers themselves have to resolve the issue and restore the server in the client's place. Perhaps... But such cases are not very frequent, so apparently there is also a staff shortage and low work efficiency. That is why, without a paid SLA, you should not always expect prompt service.

That said, EvoSwitch management is receptive to criticism, and service efficiency is already showing signs of improvement.

Working through partners: why it benefits everyone

The data center runs a partner program for large hosting providers, which reduces the load on its staff and increases sales and service quality. Partner providers get data center services at a discount. Depending on volume, they also get a certain SLA (Remote Hands) level for all their servers free of charge, along with various other loyalty benefits. The free SLA for partners rests mainly on the fact that a provider can resolve up to 90% of customer requests itself, without involving data center engineers. When clients work with the data center directly, those 90% fall on EvoSwitch's support staff, and, as you know, salaries there are not small. The data center can give a partner a free SLA with a reasonable monthly cap on free engineer time, and the partner's own staff then resolve up to 90% of problems at no cost to the data center. This is far more profitable for the data center than hiring more people. The scheme is attractive and effective for everyone, including the client, who ends up with an SLA up to the maximum level without huge payments. The statistics make this clear. For every hundred servers we service, we filed no more than 100 requests with the data center over a year, while we ourselves receive 500 or more requests per year per hundred servers.
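The statistic at the end of this paragraph translates directly into the share of requests a partner absorbs. The following short Python sketch uses the article's figures: 500 incoming requests and at most 100 escalations per hundred servers per year. The function name is ours.

```python
def share_resolved_in_house(received: int, escalated: int) -> float:
    """Fraction of client requests the partner handles without the data center."""
    if received <= 0 or not 0 <= escalated <= received:
        raise ValueError("need received > 0 and 0 <= escalated <= received")
    return 1 - escalated / received

share = share_resolved_in_house(received=500, escalated=100)
print(f"{share:.0%} of requests never reach the data center's engineers")  # 80%
```

That is at least 80%, which is consistent with the "up to 90%" figure given above.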

Here is the full list of reasons why it pays for a client to work through a partner rather than directly:

- support in your native language;

- the maximum SLA level for free;

- flexibility in configuring and installing servers, and the option to order a custom solution at a lower price;

- faster server provisioning after ordering, sometimes instant;

- a control panel or Windows license as a free bonus;

- faster problem resolution and the option to order support at the lowest prices;

- more time to respond to complaints;

- a lower price per server than when buying directly, and a variety of payment methods.

Most importantly, partner providers are major data center customers, so their requests and needs get more attention than those of a client with one or even several dozen servers. Working through a partner pays off even when you need to host a large infrastructure of hundreds of servers. Together with a partner you get a bigger discount than you could by ordering on your own. You also spare yourself administrative and technical problems that the provider has more experience solving.

Complaint handling policy and response times

The data center has a team of specialists, separate from sales and technical support, that works exclusively on complaints. Since the data center is large, many complaints arrive every day. Abuse department staff analyze them. If they consider a complaint justified, they forward it to the client with an assigned priority. Customers are not bothered with unfounded complaints! Depending on the priority, the user has from 1 to 72 hours to respond. If the user does not respond within the allotted time, the IP address of the server the complaint concerns is temporarily blocked. So it is essential to keep track of complaints.

The greatest risk of ending up with an unreachable server comes from URGENT complaints, which have a 1-hour response time. Responding to an urgent complaint means stating within that hour that you have started fixing the problem. That is why it is better not to order servers directly from the data center: an ordinary person without a technical department of their own often cannot respond within an hour. Such complaints are very rare, though. They cover high-volume spamming, child pornography and all sorts of perversions (forbidden even in the permissive Netherlands, and rightly so), as well as phishing sites imitating banking systems and the like. If a complaint about intense spam is repeated and there is no response within an hour, the server's address may be blocked. It is then unblocked only after the server is reinstalled.

Still, 99% of complaints come with a reasonable deadline for responding: 24, 48 or even 72 hours.
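The complaint rules above boil down to a deadline check. The following minimal Python sketch assumes only what the text says: each forwarded complaint carries a response window of 1 (URGENT), 24, 48 or 72 hours, and the IP is blocked if no response arrives in time. The class and field names are hypothetical, not the data center's.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

ALLOWED_WINDOWS_H = (1, 24, 48, 72)  # 1 h is the URGENT category

@dataclass
class Complaint:
    ip: str
    received_at: datetime
    window_hours: int
    responded_at: Optional[datetime] = None

    def __post_init__(self) -> None:
        if self.window_hours not in ALLOWED_WINDOWS_H:
            raise ValueError(f"unexpected response window: {self.window_hours} h")

    @property
    def deadline(self) -> datetime:
        return self.received_at + timedelta(hours=self.window_hours)

    def should_block(self, now: datetime) -> bool:
        """True if the deadline has passed without a timely response."""
        if self.responded_at is not None and self.responded_at <= self.deadline:
            return False
        return now > self.deadline

t0 = datetime(2013, 5, 20, 9, 0)
urgent = Complaint("203.0.113.10", t0, window_hours=1)
print(urgent.deadline, urgent.should_block(t0 + timedelta(minutes=61)))
# prints: 2013-05-20 10:00:00 True
```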

Safety and reliability

EvoSwitch holds ISO 27001 and ISO 9001 certifications, is PCI DSS (Payment Card Industry Data Security Standard) compliant and has passed a SAS-70 audit. These confirm the data center's high reliability and make it suitable even for banks. Physical access to the data center is by pass only, including biometric checks:

In some areas of the data center, where banks' servers are located, access is restricted even for data center staff:

The data center draws its 30+ MW from several different sources, yet it also has 16 x 1540 kVA paired diesel generators with N+1 redundancy (expandable). It keeps enough fuel on site for 72 hours of generator operation. It also has a contract with a fuel supplier that, under its SLA, must deliver additional fuel within 4 hours of a request.

In addition, there is an uninterruptible power system of static UPS units, 1 x 1600 kVA + 3 x 2400 kVA, which is also expandable.
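The generator and UPS figures can be turned into totals with a little arithmetic. The following Python sketch assumes that all 16 generators form a single N+1 group, so that any 15 must carry the load. It ignores power factor, so the results stay in kVA rather than kW.

```python
GENERATOR_KVA = 1540
GENERATOR_COUNT = 16
UPS_KVA = [1600] + [2400] * 3  # 1 x 1600 kVA + 3 x 2400 kVA static UPS
FUEL_STOCK_H = 72
RESUPPLY_SLA_H = 4

def n_plus_1_capacity_kva(units: int, kva_each: float) -> float:
    """Capacity still available with one unit failed (N+1: one spare).
    ASSUMPTION: all units form one redundancy group."""
    return (units - 1) * kva_each

installed_kva = GENERATOR_COUNT * GENERATOR_KVA
guaranteed_kva = n_plus_1_capacity_kva(GENERATOR_COUNT, GENERATOR_KVA)
ups_total_kva = sum(UPS_KVA)
fuel_margin_h = FUEL_STOCK_H - RESUPPLY_SLA_H  # hours of fuel left if delivery takes the full 4 h

print(installed_kva, guaranteed_kva, ups_total_kva, fuel_margin_h)  # prints: 24640 23100 8800 68
```

The on-site fuel stock covers the supplier's 4-hour delivery window many times over.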

VESDA (Very Early Smoke Detection Apparatus):


Findings

Draw your own conclusions.

www.evoswitch.com/en/news-and-media/video

Source: https://habr.com/ru/post/180851/
