About ratings: who's who in the digital analytics market?

Among web development companies it is customary to measure "success". In addition to the primary indicators, such as the number of eminent clients and the thickness of the portfolio, secondary ratings have also been invented.

Over time it came down to counting followers and likes: banter that many take too seriously. There are, however, also traditional "case" ratings that everyone in the industry knows about.

By the way, Tagline, one of the largest Russian ratings of web development studios and Internet agencies, opens voting today.

Since the topic is timely, here is a small study. In order:

Who's who among Runet ratings?

Off the top of my head:

- Tagline . Conducted from the year

- Runet Rating (CMS Magazine). Conducted from

- 1C-Bitrix. Internal, for Bitrix partners; no comment.

- UMI.CMS. Likewise, for UMI.

- NetCat. Likewise, for NetCat.

- Rating of SEO companies (CMS Magazine).

- SEO News Rating.

- And these are only the global ones. There are also regional ratings, of which there are many more; a search will turn them up.

- Ruward. The self-proclaimed "rating of ratings", a combined rating built from 52 others.

The in-house ratings of commercial CMS vendors will not be used in this study. Overly specific ratings (for example, for developers of Flash sites) are left out as well. Ratings with only about 20 participants are not representative, so they are also excluded.

So let's go.

Study

We narrow the field to web developer ratings. The final list:

- Tagline;

- Runet Rating;

- Eastern European Guild of Web Developers rating;

- Wwwrating rating;

- BestWebDevs rating.

The basis of any rating is its criteria. Clearly, the criteria for web studios should differ from those for an SEO agency. This, by the way, is the first dig at "consolidated ratings", more on which later.

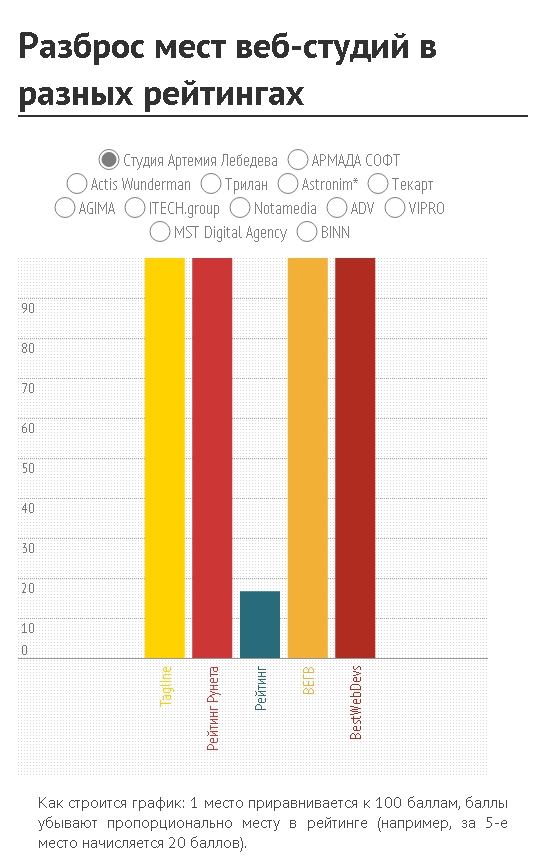

Hypothesis: for companies in the same field of activity, success indicators should be very close across different ratings. The spread of their places should therefore be insignificant.

We take the top twenty of Runet Rating as a basis. The first (and, in general, expected) finding: only 15 companies out of 20 are represented in more than one rating. This somewhat distorts the picture, but the most interesting part is ahead. See the chart.

The effect is as if a grenade had been thrown into the Runet rating system. The range of opinions is far too wide. How a customer facing the choice of a contractor for the first time is supposed to act is not at all clear.
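The comparison behind the chart can be reproduced with a few lines of Python. This is only a minimal sketch: the company names and places below are hypothetical placeholders, not data from the ratings, and the helper name `rank_spread` is invented for illustration. It keeps only companies that appear in at least two ratings and reports the gap between their best and worst place.

```python
# Hypothetical input: company -> {rating name: place in that rating}.
# The numbers are placeholders for illustration, not real rating data.
places = {
    "Studio A": {"Runet Rating": 1, "Tagline": 3, "BestWebDevs": 2},
    "Studio B": {"Runet Rating": 2, "Tagline": 15},
    "Studio C": {"Runet Rating": 3},  # present in a single rating only
}


def rank_spread(places, min_ratings=2):
    """Return {company: (best place, worst place, range)} for companies
    that appear in at least `min_ratings` ratings."""
    result = {}
    for company, by_rating in places.items():
        if len(by_rating) < min_ratings:
            continue  # one place alone says nothing about spread
        ranks = list(by_rating.values())
        best, worst = min(ranks), max(ranks)
        result[company] = (best, worst, worst - best)
    return result


spread = rank_spread(places)
for company, (best, worst, rng) in spread.items():
    print(f"{company}: best {best}, worst {worst}, range {rng}")
```

If the hypothesis held, the range would stay small for every company; a large range for many companies is what the "grenade" effect looks like in numbers.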

Let's move on to the reasons, that is, to the evaluation criteria.

First, three of the five ratings above have a closed evaluation system. It is physically impossible to criticize them in any way, except to point out this very closedness.

Second, the "transparent" evaluation system of Tagline and Runet Rating is in fact not so transparent. A detailed methodology is available, for example, on the site of the former.

Analysis

But opacity is only half the problem. Let's assume that the analytical departments of the rating compilers can be trusted, and that the "equalizing" coefficients do help create the necessary objectivity.

Reason number two for the failure of ratings: there are too many of them. While some announce themselves loudly in the media and attract as many participants as possible through their channels, others sleep at the bottom of the sea like Great Cthulhu and then suddenly publish their results. The principle of "first come, first served" in action. A studio physically cannot (and does not want to) make it into every one of them. Meanwhile, the spread grows.

Voluntary participation in ratings leads to incomplete information. Incompleteness makes the overall picture unreliable. Unreliability creates misconceptions for the customer. Misconceptions lead to the dark side.

Another reason: the desire to cover everything. Narrowly focused ratings are often published "quietly", while most of the attention (and PR effort) goes into pushing "general summary ratings", whose places are then fought over. In reality such a rating is a salad of contractors with completely different specializations. The client, of course, goes to the most popular rating and gets a distorted picture.

Perhaps the fanaticism of the participants is also a reason. Everyone understands that, knowing the criteria, you can "optimize the metric" well enough to get into the top. But all you get out of it is an off-the-charts ego and an increased flow of potential customers, who may be sobered by the level of the company's portfolio: it did not grow while you were busy optimizing metrics.

Simple conclusions

- The probability that a project will be completed successfully depends only weakly on the contractor's place in any particular rating.

- It is rational to use only "narrowly focused" ratings to build a list of contractors you can trust, and then to choose among them. Any aggregate ratings (web studios, Internet agencies and design studios all in one heap) are of no value.

- The most reliable selection criteria are still recommendations from colleagues and the level of the studio's work. Runet Rating, by the way, once wrote about this, backing it up with its own research.

- The more ratings there are, the less valuable participation in them becomes. The fewer companies are willing to participate, the less reliable the ratings themselves.

Those are the conclusions. Let's discuss them in the comments.

Source: https://habr.com/ru/post/180551/