Overview of data center network architectures

Part 1. The "God particle"

The year 2012 was rich in landmark scientific breakthroughs: the decoding of the Denisovan genome, the landing of the Curiosity rover on Mars, and a mouse grown from a stem cell. Yet the most important discovery of 2012 is widely acknowledged to be the event that took place in July at CERN (the European Organization for Nuclear Research): experimental confirmation of the existence of the Higgs boson, the “God particle”, as Leon Max Lederman dubbed it.

“So where does HP come in?” you ask. HP has worked closely with CERN for a long time, especially in networking technologies. For example, CERN openlab R&D is actively developing applications for an HP-based SDN controller, network security systems, and more. HP therefore had a direct hand in this discovery: quite literally, it has helped hunt the Higgs boson since the launch of the LHC, because the LHC data collection and processing network is built on HP equipment. To give a sense of the scale of the CERN network: about 50,000 active user devices, more than 10,000 kilometers of cable, and about 2,500 network devices. Each year this network digests approximately 15 petabytes of information (a huge volume of detector data: statistics from collisions of particle beams travelling at nearly the speed of light). All of this data is processed in a distributed data center network. It was there, in the data center, through the analysis of a huge volume of statistics, that the "God particle" was "sifted through a sieve".

Part 2. Where the "God particle" was sifted

For obvious reasons, I can't show the architecture of the CERN data center network here. Instead, I will briefly describe typical data center architectures that are built on HP equipment and technologies and have been implemented in various projects.

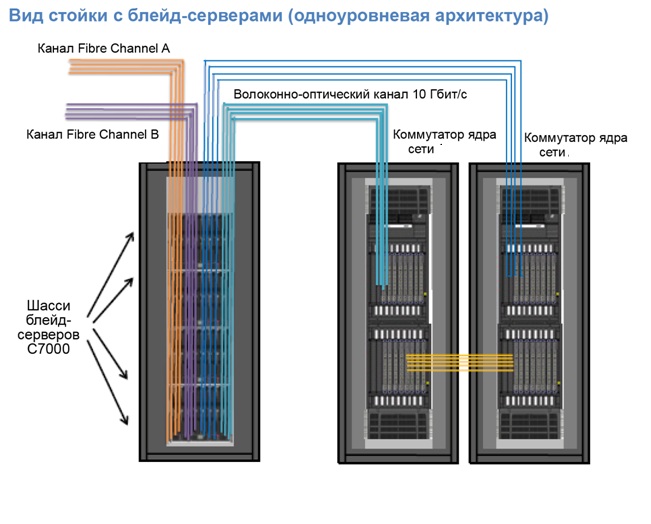

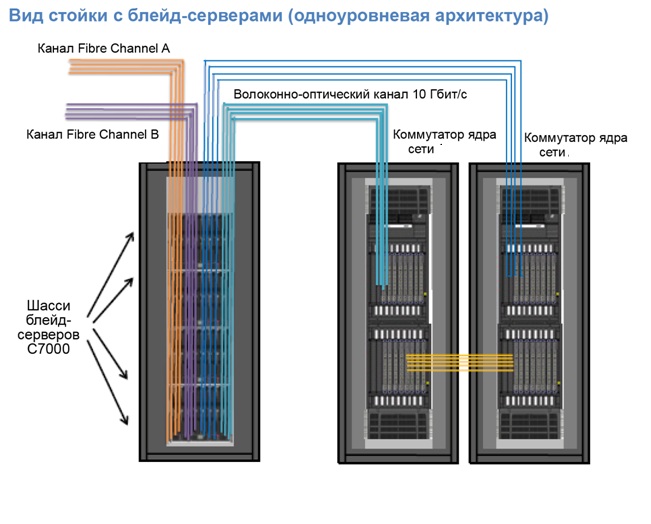

In short, a modern data center network can be built with one, two, or three tiers. Each option has its own purpose. A single-tier architecture implies a direct connection between the core/aggregation equipment and the servers. In terms of the physical cabling of the racks, it looks roughly like this:

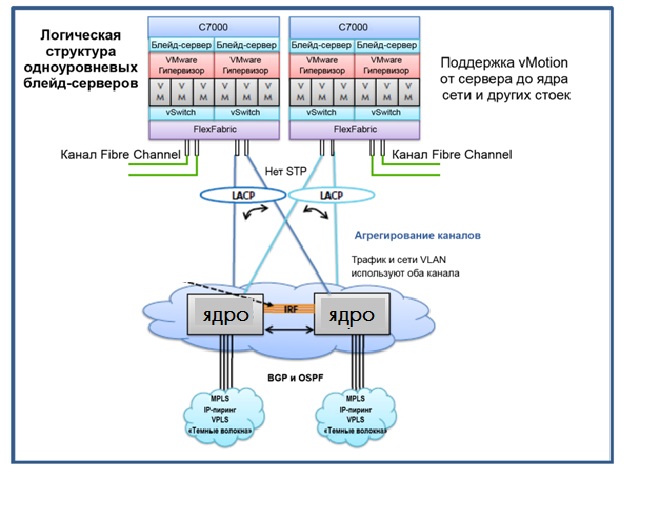

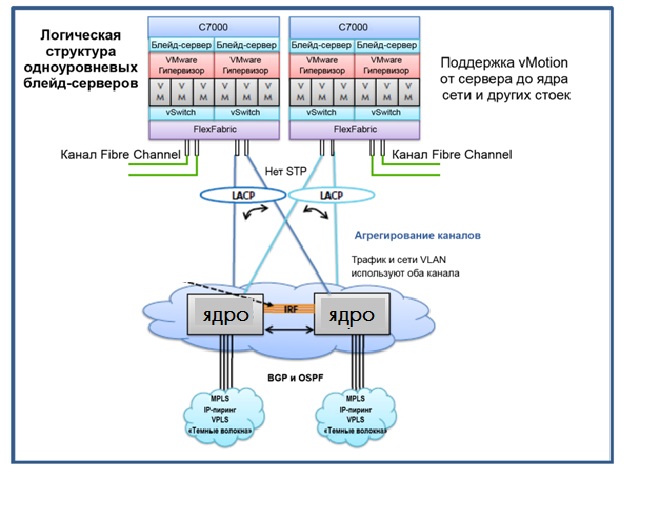

As a rule, it is used in small data centers that are built mainly on blade servers and focused on virtualization. In terms of logic and of the network protocols and technologies used, such a data center looks roughly like this:

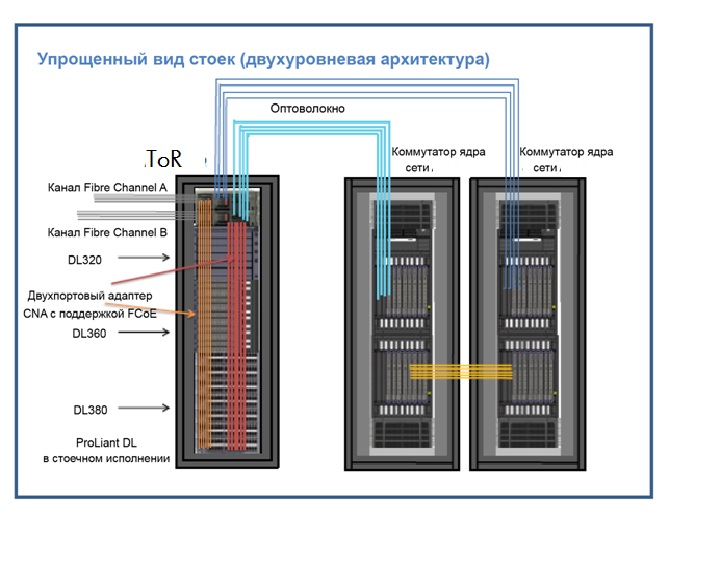

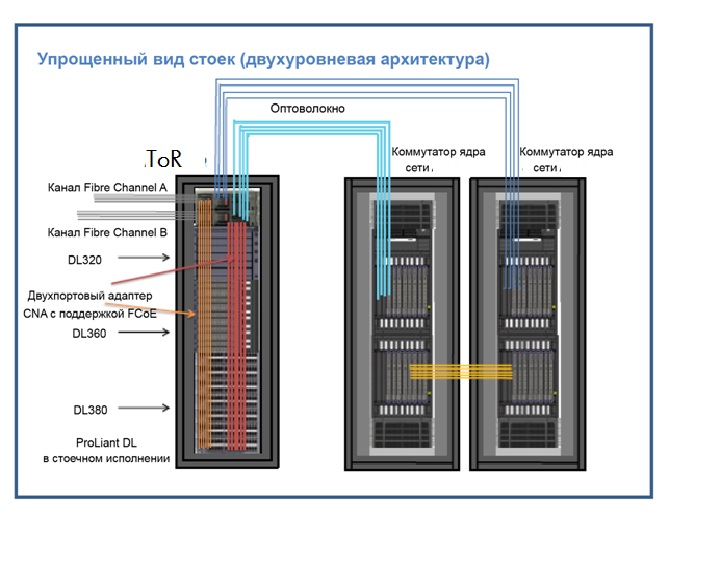

The next option is a two-tier architecture. It adds a level of ToR (Top-of-Rack) switches between the data center core/aggregation and the servers. This design works well when the data center needs additional port capacity, or when an additional level of client traffic termination is required (network policy settings and so on). The ToR design, as it is sometimes called, is most often used when most servers in the data center are rack-mounted. Sample diagrams are shown below:

The logical architecture in terms of technologies and protocols used looks like this:

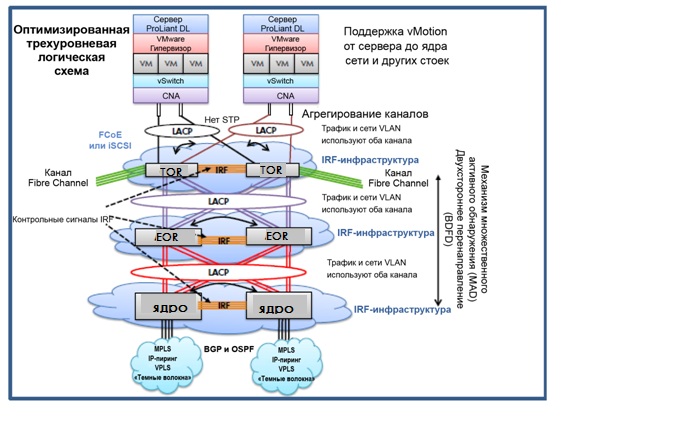

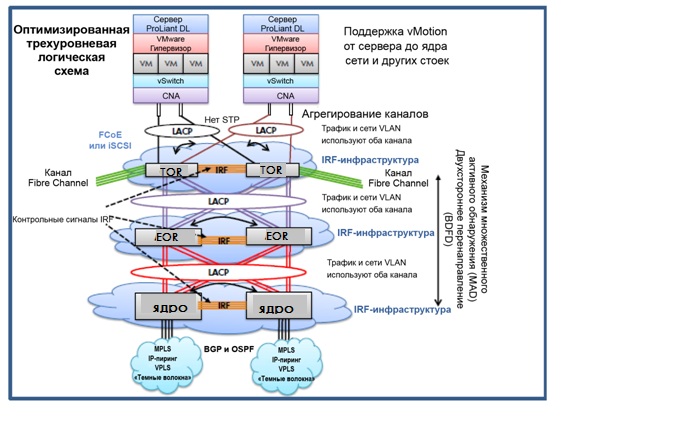

The third design option is three-tier, sometimes also called EoR (End-of-Row). It is most often implemented in data centers where total network capacity and the number of high-speed interfaces (10 Gbps and up) are key factors. Although the design described here is based on HP hardware, any equipment that follows current standards can be integrated into it. The physical architecture looks like this:

In the three-tier data center architecture, an additional level of aggregation switches adds flexibility in building network topologies and increases the potential port capacity of the solution (this design option lets you aggregate a very large number of ports), which offsets the potential drawbacks of such a design. In terms of network logic, it looks like this:

All of the options above provide the following:

• L2 flexibility and space - a flat L2 network is better suited to the needs of virtualization. If the ultimate purpose of the data center is to host virtual machines, a flat L2 network makes it easy to move virtual machines between hosts.

• No need for STP / RSTP / MSTP - together with virtual switches, this design provides loop protection without using STP.

• Fast convergence - after a failure, the network converges an order of magnitude faster than with traditional protocols of the STP family.

• Security -

- A single-tier network design allows IPS devices to be integrated into the data center core equipment at the points where VLANs are aggregated, which is often simpler and more convenient.

- Two- and three-tier designs let you distribute network protection elements across the tiers, which in turn naturally provides defense in depth.
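The point about moving virtual machines over a flat L2 network can be illustrated with a small sketch. It assumes a deliberately simplified rule: a VM can move between two hosts without changing its address only if its VLAN is available on both. The host names, VLAN numbers, and both functions are hypothetical and are not part of any HP tooling.

```python
from itertools import permutations


def can_migrate(vm_vlan, src_host, dst_host, host_vlans):
    """True if the VM's VLAN is present on both the source and the destination host."""
    return vm_vlan in host_vlans[src_host] and vm_vlan in host_vlans[dst_host]


def migratable_pairs(vm_vlan, host_vlans):
    """All ordered (source, destination) host pairs between which the VM can move."""
    return sorted(
        (src, dst)
        for src, dst in permutations(host_vlans, 2)
        if can_migrate(vm_vlan, src, dst, host_vlans)
    )


# Flat L2 network: every VLAN is reachable from every host (hypothetical values).
flat = {"host-a": {10, 20}, "host-b": {10, 20}, "host-c": {10, 20}}

# Segmented network: VLAN 10 is confined to two hosts (hypothetical values).
segmented = {"host-a": {10}, "host-b": {10}, "host-c": {20}}

print(len(migratable_pairs(10, flat)))       # all 6 ordered host pairs
print(migratable_pairs(10, segmented))       # only host-a <-> host-b
```

In the flat case every host is a valid target for the VM, while in the segmented case the choice shrinks to the hosts that share its VLAN.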

Which option to choose is decided by the network architect in each particular case, and it depends on many factors: the technical requirements for the data center, the wishes of the end users, forecasts for infrastructure growth and, after all, personal experience.
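One of those factors, port capacity, is easy to estimate roughly. The sketch below compares how many server-facing ports the one-, two-, and three-tier designs described above could offer. All port counts are made-up illustrative numbers, not the specifications of any HP switch, and the model ignores redundancy, link speeds, and oversubscription.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Switch:
    ports: int    # total ports on the switch (hypothetical value)
    uplinks: int  # ports reserved for links to the tier above

    def __post_init__(self):
        if not 0 < self.uplinks < self.ports:
            raise ValueError("uplinks must be between 1 and ports - 1")

    @property
    def downlinks(self) -> int:
        return self.ports - self.uplinks


def server_ports(core_ports, tiers=()):
    """Server-facing ports below a core that offers `core_ports` downlinks.

    `tiers` lists the switch model used at each extra level, top to bottom.
    """
    capacity = core_ports
    for switch in tiers:
        # Each switch takes `uplinks` ports from the level above
        # and exposes `downlinks` ports to the level below.
        capacity = (capacity // switch.uplinks) * switch.downlinks
    return capacity


CORE_PORTS = 96                      # assumed downlinks on the core
AGG = Switch(ports=48, uplinks=8)    # assumed aggregation switch
TOR = Switch(ports=48, uplinks=4)    # assumed Top-of-Rack switch

one_tier = server_ports(CORE_PORTS)
two_tier = server_ports(CORE_PORTS, [TOR])
three_tier = server_ports(CORE_PORTS, [AGG, TOR])
print(one_tier, two_tier, three_tier)  # 96 1056 5280
```

Even with these toy numbers, each additional tier multiplies the number of ports available to servers, which is the capacity argument made above for the ToR and EoR designs.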

Source: https://habr.com/ru/post/180537/
