Elastic redundant S3-compatible storage in 15 minutes

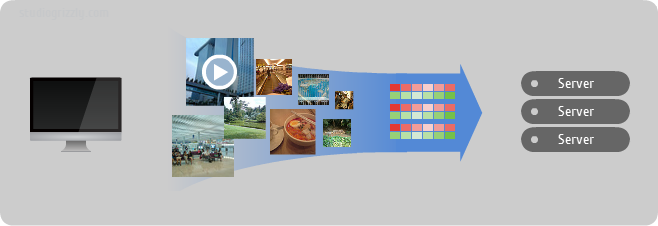

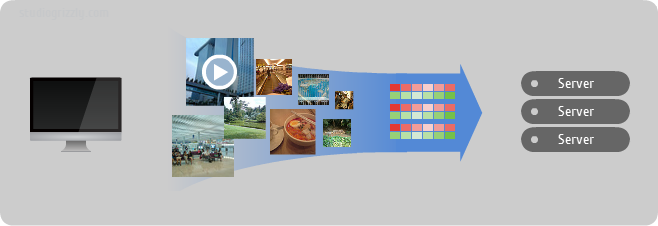

S3 will hardly surprise anyone today. It is used as backend storage for web services, as file storage in the media industry, and as an archive for backups.

Consider a small sample deployment of S3-compatible storage based on Ceph object storage.

Quick reference

Ceph is an open-source project for building resilient, highly scalable, petabyte-scale storage. At its core, it pools the disk space of several dozen servers into a single object store, which makes flexible, multi-copy, pseudo-random data placement possible. The Ceph developers complement this object store with three more projects:

- RADOS Gateway - an S3- and Swift-compatible RESTful interface

- RBD - a block device with thin provisioning and snapshot support

- Ceph FS - a distributed POSIX-compliant file system

Example Description

In my example, I continue to use 3 servers with 3 SATA disks each: /dev/sda for the system, and /dev/sdb and /dev/sdc for object storage data.

Any program, module, or framework that works with S3-compatible storage can act as a client. I have successfully tested DragonDisk, CrossFTP and S3Browser.

In this example, I use only one RADOS Gateway, on node01. The S3 interface will be available at s3.ceph.labspace.studiogrizzly.com.

It is worth noting that Ceph currently supports the S3 operations listed at http://ceph.com/docs/master/radosgw/s3/ .

Let's get started

Step 0. Preparing Ceph

As I continue to use the already deployed Ceph cluster, I only need to slightly adjust the configuration in /etc/ceph/ceph.conf: add a definition for RADOS Gateway

    [client.radosgw.gateway]
    host = node01
    keyring = /etc/ceph/keyring.radosgw.gateway
    rgw socket path = /tmp/radosgw.sock
    log file = /var/log/ceph/radosgw.log
    rgw dns name = s3.ceph.labspace.studiogrizzly.com
    rgw print continue = false

and update it on the other nodes

    scp /etc/ceph/ceph.conf node02:/etc/ceph/ceph.conf
    scp /etc/ceph/ceph.conf node03:/etc/ceph/ceph.conf

Step 1. Install Apache2, FastCGI and RADOS Gateway

    aptitude install apache2 libapache2-mod-fastcgi radosgw

Step 2. Apache configuration

Enable the required modules

    a2enmod rewrite
    a2enmod fastcgi

Create a VirtualHost for RADOS Gateway in /etc/apache2/sites-available/rgw.conf

    FastCgiExternalServer /var/www/s3gw.fcgi -socket /tmp/radosgw.sock

    <VirtualHost *:80>
        ServerName s3.ceph.labspace.studiogrizzly.com
        ServerAdmin tweet@studiogrizzly.com
        DocumentRoot /var/www

        RewriteEngine On
        RewriteRule ^/([a-zA-Z0-9-_.]*)([/]?.*) /s3gw.fcgi?page=$1&params=$2&%{QUERY_STRING} [E=HTTP_AUTHORIZATION:%{HTTP:Authorization},L]

        <IfModule mod_fastcgi.c>
            <Directory /var/www>
                Options +ExecCGI
                AllowOverride All
                SetHandler fastcgi-script
                Order allow,deny
                Allow from all
                AuthBasicAuthoritative Off
            </Directory>
        </IfModule>

        AllowEncodedSlashes On
        ErrorLog /var/log/apache2/error.log
        CustomLog /var/log/apache2/access.log combined
        ServerSignature Off
    </VirtualHost>

Enable the new VirtualHost and disable the default one

    a2ensite rgw.conf
    a2dissite default

Create the FastCGI script /var/www/s3gw.fcgi:

    #!/bin/sh
    exec /usr/bin/radosgw -c /etc/ceph/ceph.conf -n client.radosgw.gateway

and make it executable

    chmod +x /var/www/s3gw.fcgi

Step 3. Prepare RADOS Gateway

Create the necessary directory

    mkdir -p /var/lib/ceph/radosgw/ceph-radosgw.gateway

Generate a key for the new RADOS Gateway service

    ceph-authtool --create-keyring /etc/ceph/keyring.radosgw.gateway
    chmod +r /etc/ceph/keyring.radosgw.gateway
    ceph-authtool /etc/ceph/keyring.radosgw.gateway -n client.radosgw.gateway --gen-key
    ceph-authtool -n client.radosgw.gateway --cap osd 'allow rwx' --cap mon 'allow r' /etc/ceph/keyring.radosgw.gateway

and add it to the cluster

    ceph -k /etc/ceph/ceph.keyring auth add client.radosgw.gateway -i /etc/ceph/keyring.radosgw.gateway

Step 4. Launch

Restart Apache2 and RADOS Gateway

    service apache2 restart
    /etc/init.d/radosgw restart

Step 5. Create the first user

To use an S3 client, we need to obtain the access_key and secret_key for a new user.

    radosgw-admin user create --uid=i --display-name="Igor" --email=tweet@studiogrizzly.com

Check the output of the command and copy the keys to your client.
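Copying the keys by hand is error-prone, so here is a minimal sketch of pulling them out programmatically. It assumes the command prints a JSON document with a `keys` list, and the sample below is a shortened, hypothetical stand-in for real output with placeholder credentials. A JSON parser also undoes the `\/` escaping that can show up inside generated secrets, which is easy to miss when copying from the terminal.

```python
import json

# Hypothetical, shortened sample of what `radosgw-admin user create` prints.
# The key values are placeholders, not real credentials.
SAMPLE_OUTPUT = r"""
{
  "user_id": "i",
  "display_name": "Igor",
  "email": "tweet@studiogrizzly.com",
  "keys": [
    {"user": "i", "access_key": "EXAMPLEACCESSKEY", "secret_key": "example\/SecretKey"}
  ]
}
"""


def extract_keys(admin_output):
    """Return (access_key, secret_key) of the first key pair in the output."""
    user = json.loads(admin_output)
    first = user["keys"][0]
    return first["access_key"], first["secret_key"]


access_key, secret_key = extract_keys(SAMPLE_OUTPUT)
print("access_key:", access_key)
print("secret_key:", secret_key)  # the JSON escape "\/" is decoded to "/"
```

In practice the string would come from the command itself, for example by piping its output into a script like this one.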

Step 6. DNS

For buckets to work, we need a DNS server that resolves any subdomain of s3.ceph.labspace.studiogrizzly.com to the IP address of the host running RADOS Gateway.

For example, when creating a bucket called mybackups, the domain mybackups.s3.ceph.labspace.studiogrizzly.com. should point to the IP address of node01, which is 192.168.2.31.

In my case, I'll just add a CNAME record.

    * IN CNAME node01.ceph.labspace.studiogrizzly.com.

Afterword

In 15 minutes we managed to deploy an S3-compatible storage. Now try connecting your favorite S3 client.
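Before pointing a client at the gateway, it may help to see which hostname each S3 addressing style produces, since only the virtual-hosted style depends on the wildcard DNS record from Step 6. The sketch below is illustrative only: the function names are made up for this example, and `bucket_from_host` models the idea of matching the Host header against `rgw dns name`, not radosgw's actual code.

```python
from urllib.parse import quote

# Endpoint from the article; it matches `rgw dns name` in ceph.conf.
ENDPOINT = "s3.ceph.labspace.studiogrizzly.com"


def virtual_hosted_url(bucket, key, endpoint=ENDPOINT):
    """Bucket in the hostname: requires the wildcard DNS record."""
    return "http://{}.{}/{}".format(bucket, endpoint, quote(key))


def path_style_url(bucket, key, endpoint=ENDPOINT):
    """Bucket in the path: only the endpoint itself has to resolve."""
    return "http://{}/{}/{}".format(endpoint, bucket, quote(key))


def bucket_from_host(host, endpoint=ENDPOINT):
    """Recover the bucket name from a Host header, or None for path-style requests."""
    host = host.split(":")[0].rstrip(".").lower()
    suffix = "." + endpoint
    if host.endswith(suffix):
        return host[: -len(suffix)]
    return None


print(virtual_hosted_url("mybackups", "db/dump 1.sql"))
print(path_style_url("mybackups", "db/dump 1.sql"))
print(bucket_from_host("mybackups.s3.ceph.labspace.studiogrizzly.com"))
```

If the wildcard record is not in place yet, a client configured for path-style access should still be able to reach the gateway through the endpoint name alone.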

Bonus part

I asked sn00p to describe his experience running RADOS Gateway in production at 2GIS. His review follows:

General description

We run Varnish with 4 Apache + radosgw instances as its backends. The application goes to Varnish first; if that fails, it falls back to the Apache instances directly, round-robin. In synthetic JMeter tests replaying a month of access logs, this setup handles 20,000 rps without any problems. It holds half a million photos, and the real load on the frontend is about 300 rps.

Ceph currently runs on 5 machines, each with a dedicated disk for the OSD and a separate SSD for the journal. Replication is the default, 2. The system survives the simultaneous loss of two nodes without problems; beyond that, it depends on the combination. In six months, no error has yet been shown to a client.

There are no flexibility problems: storage size, inodes, directory layout are all a thing of the past.

Solution features

- Five HP Proliant Gen8 DL360e servers. On each server, one 300 GB 15k rpm SAS disk is allocated to Ceph. To improve performance significantly, the OSD daemons' journals are placed on Hitachi Ultrastar 400M SSDs.

- Two KVM virtual machines with apache2 and radosgw inside. I personally did not like how nginx works with FastCGI: on upload, nginx buffers the content before passing it to the backend. In theory, this can cause problems with large files or streams. Still, it is a matter of taste and circumstances; nginx works too.

- Apache2 is a modified build that can handle the 100-continue HTTP response. Ready-made packages are available here .

- Varnish points at both radosgw nodes. Any cache or balancer would do here. If it goes down, the application can query radosgw directly:

      backend radosgw1 {
        .host = "radosgw1";
        .port = "8080";
        .probe = {
          .url = "/";
          .interval = 2s;
          .timeout = 1s;
          .window = 5;
          .threshold = 3;
        }
      }

      backend radosgw2 {
        .host = "radosgw2";
        .port = "8080";
        .probe = {
          .url = "/";
          .interval = 2s;
          .timeout = 1s;
          .window = 5;
          .threshold = 3;
        }
      }

      director cephgw round-robin {
        { .backend = radosgw1; }
        { .backend = radosgw2; }
      }

- Each application has its own bucket. Various ACLs are supported, so access rights can be tuned flexibly for a bucket and for each object in it.

- To work with all of this, we use python-boto. Here is an example Python script (mind the indentation) that uploads everything from a file system directory into a bucket. This approach is convenient for automated batch processing of a pile of files. If you don't like Python, no problem: other popular languages work too.

      #!/usr/bin/env python3
      # Upload every file under a directory tree into a bucket,
      # skipping keys that already exist.
      import fnmatch
      import os
      import sys

      import boto
      import boto.s3.connection

      access_key = 'insert_access_key'
      secret_key = 'insert_secret_key'
      pidfile = "/tmp/copytoceph.pid"


      def check_pid(pid):
          """Return True if a process with this pid is still alive."""
          try:
              os.kill(pid, 0)  # signal 0 only checks that the process exists
          except OSError:
              return False
          return True


      # Refuse to start while a previous run is still in progress.
      if os.path.isfile(pidfile):
          with open(pidfile) as f:
              pid = int(f.read())
          if check_pid(pid):
              print("%s already exists, doing nothing" % pidfile)
              sys.exit()

      with open(pidfile, 'w') as f:
          f.write(str(os.getpid()))

      conn = boto.connect_s3(
          aws_access_key_id=access_key,
          aws_secret_access_key=secret_key,
          host='cephgw1',
          port=8080,
          is_secure=False,
          # Path-style requests, so no per-bucket DNS name is needed.
          calling_format=boto.s3.connection.OrdinaryCallingFormat(),
      )

      mybucket = conn.get_bucket('test')
      i = 0
      for root, dirnames, filenames in os.walk('/var/storage/photoes', followlinks=True):
          # '*' matches every file; narrow the pattern to upload a subset.
          for filename in fnmatch.filter(filenames, '*'):
              myfile = os.path.join(root, filename)
              # Keys are bare file names, so same-named files in different
              # directories map to the same key.
              key = mybucket.get_key(filename)
              i += 1
              if not key:
                  key = mybucket.new_key(filename)
                  key.set_contents_from_filename(myfile)
                  key.set_canned_acl('public-read')
                  print(key)
      print(i)
      os.unlink(pidfile)

- Out of the box, radosgw is very chatty and produces large log files even under normal load. With our load, we had to reduce the logging level:

      [client.radosgw.gateway]
      ...
      debug rgw = 2
      rgw enable ops log = false
      log to stderr = false
      rgw enable usage log = false
      ...

- For monitoring, we use a Zabbix template; its source code is available here .
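To make the `.window` and `.threshold` settings in the Varnish configuration above more concrete, here is a simplified model of the health check and the round-robin director. It is a sketch of the idea only, not Varnish's implementation: a backend counts as healthy when at least `threshold` of its last `window` probes succeeded, and the director skips unhealthy backends. The class names are invented for this example.

```python
from collections import deque
from itertools import cycle


class Backend:
    def __init__(self, name, window=5, threshold=3):
        self.name = name
        self.threshold = threshold
        # Keep only the most recent `window` probe results.
        self.results = deque(maxlen=window)

    def record_probe(self, ok):
        self.results.append(bool(ok))

    @property
    def healthy(self):
        # With no probes recorded yet, the backend counts as unhealthy.
        return sum(self.results) >= self.threshold


class RoundRobinDirector:
    def __init__(self, backends):
        self.backends = list(backends)
        self._ring = cycle(self.backends)

    def pick(self):
        """Return the next healthy backend, or None if all are down."""
        for _ in range(len(self.backends)):
            backend = next(self._ring)
            if backend.healthy:
                return backend
        return None  # the caller would then query radosgw directly


gw1, gw2 = Backend("radosgw1"), Backend("radosgw2")
director = RoundRobinDirector([gw1, gw2])

for _ in range(5):
    gw1.record_probe(True)
    gw2.record_probe(True)
print([director.pick().name for _ in range(4)])  # alternates between both

for _ in range(3):
    gw2.record_probe(False)  # radosgw2 now has only 2 good probes out of 5
print([director.pick().name for _ in range(4)])  # only radosgw1 is returned
```

With a 2 s probe interval, three failed probes mean a dead backend drops out of rotation after roughly six seconds in this model.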

All of this has been running for half a year now and needs no administrator intervention :)

Future plans

I am now trying to use Ceph to store and serve 15 million files of roughly 4-200 KB each. With S3 this is not very convenient: there are no bulk-copy operations, a bucket that contains data cannot be deleted, and the initial fill of the storage is painfully slow. We are investigating how to tune it.

But the main goal is a geo-distributed cluster: we are in Siberia and want to serve data from a point geographically close to the client. Content already reaches Moscow with a delay of up to 100 ms or more, which is no good. Well, the Ceph developers seem to have all of this in their plans .

Source: https://habr.com/ru/post/180415/
