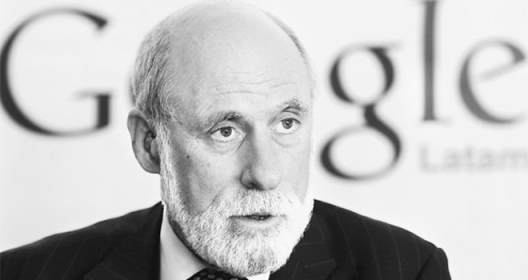

Google's chief Internet evangelist Vint Cerf talks about the interplanetary Internet

On the day when future Martian colonists can open a browser and watch a cat in a shark costume ride a Roomba robot vacuum while chasing a duck, they will have Vint Cerf to thank.

As Google's chief Internet evangelist, Cerf has spent plenty of time thinking about the future of networking technologies. He has every right to: it was Cerf who, together with Bob Kahn, was responsible for developing TCP/IP. But, not content with his role as a father of the Internet on this planet, Cerf has spent years trying to bring his brainchild into space.


Working with NASA and JPL, Cerf helped develop a new protocol stack for use in space, where the limits imposed by the speed of light and the complexity of orbital mechanics make networking far harder.

We talked with Cerf about the role of this interplanetary Internet in space exploration, its current problems, and how he sees the future of the technology.

Wired: Although the idea itself is not new, the concept of an interplanetary Internet is new to most people. How do you build a space network?

Vint Cerf: Indeed, the idea is far from new: this project began in 1998. It got started largely because 1997 marked roughly the 25th anniversary of the Internet's design. I asked myself what I should be working on that would still be needed 25 years from then. After consulting with colleagues at JPL, we concluded that we needed much richer networking than the world's space agencies had at the time.

Until then (and, frankly, to this day), all communication in space went over point-to-point radio links. We started to think about how TCP/IP could be used as a protocol for communication in space. It seemed to us that since it works well on Earth, it could work just as well on Mars. But the real question was, "Will it work for communication between the planets?" And, as it turned out, the answer was no.

There are two reasons for this. First, the speed of light is rather slow relative to interplanetary distances. A signal takes between three and a half and 20 minutes to travel from Earth to Mars, and the return trip takes just as long. Second, you have to account for the rotation of the planet. If you are trying to reach something on the surface, you lose contact once the planet rotates it out of view, and you have to wait until it comes back around. So we have long signal delays and interruptions, and TCP does not work well under those conditions.
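
To get a feel for these delays, here is a minimal Python sketch that converts a distance into one-way and round-trip signal time. The two Earth-Mars distances are rounded, assumed values that do not come from the interview, so the results only land in the same ballpark as the range quoted above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s, exact by definition


def one_way_delay_minutes(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


# Assumed, rounded Earth-Mars distances (illustrative only).
CLOSEST_KM = 55e6
FARTHEST_KM = 400e6

for label, km in (("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)):
    one_way = one_way_delay_minutes(km)
    print(f"{label}: one-way {one_way:.1f} min, round trip {2 * one_way:.1f} min")
```

Any protocol that waits for an acknowledgement before proceeding pays at least the round-trip figure on every exchange.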

One of the assumptions that TCP/IP relies on is that routers do not have enough memory to hold data. So if a packet arrives that needs to go out along a route you have, but you do not have enough room for it, the packet is simply dropped.

We developed a new protocol suite that we call the Bundle protocols. Bundles are somewhat like ordinary Internet packets in that they are chunks of information. They can be quite large, and each one is sent as a single unit. For transmission we use store and forward: each node can hold information for quite a long time, waiting until it has an opportunity to pass it on.
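
The contrast between dropping and storing can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not the actual Bundle protocol implementation: the class names, the single outgoing link, and the `set_link` method are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Bundle:
    destination: str
    payload: bytes


@dataclass
class DropNode:
    """Simplified router with no spare storage (assumption)."""
    link_up: bool = False
    delivered: list = field(default_factory=list)

    def receive(self, bundle: Bundle) -> None:
        if self.link_up:
            self.delivered.append(bundle)
        # Otherwise there is nowhere to keep it: the data is lost.


@dataclass
class StoreAndForwardNode:
    """Keeps each bundle until the outgoing link becomes available."""
    link_up: bool = False
    stored: deque = field(default_factory=deque)
    delivered: list = field(default_factory=list)

    def receive(self, bundle: Bundle) -> None:
        self.stored.append(bundle)
        self.flush()

    def set_link(self, up: bool) -> None:
        self.link_up = up
        self.flush()

    def flush(self) -> None:
        # Forward everything we hold, oldest first, while the link is up.
        while self.link_up and self.stored:
            self.delivered.append(self.stored.popleft())


bundle = Bundle("earth", b"telemetry")

dropper = DropNode()
dropper.receive(bundle)    # link is down, so the data is discarded
dropper.link_up = True     # too late, nothing left to send

keeper = StoreAndForwardNode()
keeper.receive(bundle)     # link is down, so the bundle is held
keeper.set_link(True)      # contact window opens, the bundle goes out

print(len(dropper.delivered), len(keeper.delivered))
```

The storing node trades memory for reliability, which only makes sense when a node can afford to hold data for the whole gap between contacts.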

Wired: How much harder is communication in space than on the traditional Internet?

Cerf: One of the most troublesome issues is that the domain name system cannot be used in its current form. Let me illustrate with an example. Imagine that you are on Mars and want to open an HTTP connection to a site on Earth. You have a URL, but to open a connection you need an IP address.

Naturally, you need to turn the URL into the right IP address. So you are on Mars, and the domain name you need lives on Earth. You do a DNS lookup. But to get an answer you have to wait at least 40 minutes, and there is no telling what the upper limit is: it depends on how many packets are lost, on whether the target is reachable given the rotation of the planet, and so on. And then the answer you receive may turn out to be wrong, because in the time it took to reach you the host could easily have moved to another IP address. And that is not the worst case. If you were sitting somewhere near Jupiter, all of this could take many hours.

So we need to abandon that scheme and move to what we call delayed binding. First you work out which planet you are going to, then you route the traffic to that planet, and only then do you perform the lookup.
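
The two-step idea can be illustrated with a small Python sketch. Everything here is hypothetical: the gateway names, the per-region name tables, and the function names are assumptions made for illustration, and the addresses come from ranges reserved for documentation.

```python
# Hypothetical routing table: the sender only needs to know the region.
REGION_GATEWAYS = {"earth": "gw-earth", "mars": "gw-mars"}

# Hypothetical per-region name tables, consulted only inside that region.
LOCAL_NAME_TABLES = {
    "earth": {"example.org": "192.0.2.10"},
    "mars": {"rover-base": "198.51.100.7"},
}


def first_hop(region: str) -> str:
    """Step 1, at the sender: choose a gateway from the region alone."""
    return REGION_GATEWAYS[region]


def resolve_locally(region: str, name: str) -> str:
    """Step 2, on arrival in the region: bind the name to an address."""
    return LOCAL_NAME_TABLES[region][name]


def send(region: str, name: str) -> tuple[str, str]:
    gateway = first_hop(region)              # no lookup across space
    # ... the data travels to the destination region here ...
    address = resolve_locally(region, name)  # lookup happens next to the host
    return gateway, address


print(send("earth", "example.org"))
```

Because the name is bound after the long trip rather than before it, the address is current at the moment it is used.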

Other problems arise when you think about managing the network itself under these conditions: what we use in networks on Earth will not work here. For example, there is a protocol called SNMP, the Simple Network Management Protocol. It is based on the idea that you can send a packet and get a response within a few milliseconds, or at most a few hundred milliseconds. If you know what a ping is, you will understand the problem: after pinging something, you expect the answer as quickly as possible. If you do not get it within a couple of minutes, it is reasonable to assume that something has gone wrong and that the node you are querying may be unavailable. But in space the signal needs a long time just to reach its target, let alone come back. That makes network management a much harder task.

Finally, we had to take care of security. The reasons are obvious: we really would not like to see headlines like "15-year-old seizes control of the Martian segment of the network." To prevent that, we took a number of measures: strong authentication, three-way handshakes, cryptographic keys, and so on.

Wired: Given that communication will take place over vast distances, the whole network must be gigantic, right?

Cerf: Well, physically, in terms of distance, it really is quite a big network. But the number of nodes is very small. At the moment these are mainly facilities on Earth, for example the DSN stations operated by JPL. They have large 70-meter dishes and smaller 35-meter dishes, capable of establishing point-to-point links. All this, in turn, is part of the TDRSS system (pronounced "tee-driss"), which NASA uses for a great deal of near-Earth communication. There are also several nodes on the ISS that support our protocol stack.

The orbiters around Mars run a prototype of this software, and almost all the information from Mars reaches us in exactly the way I described. The Spirit, Opportunity, and Curiosity rovers also use these protocols. Even the Phoenix lander used them before it shut down. [For more on how communication with Mars works and how the DSN stations operate, see this post. Translator's note.]

Finally, there is a spacecraft called EPOXI, in orbit around the Sun, which was also used to test the protocols.

We hope that in the future, assuming our protocols are standardized by the CCSDS, the committee that deals with standards for space communication, every spacecraft will use them, regardless of which country developed and launched it. That, in turn, would mean that each of these spacecraft, once it has completed its primary mission, could be used as a relay node in the network.

Wired: What are the next steps for expansion?

Cerf: First, we want to get all of this standardized. Not all parts of the protocols have been tested yet either, our authentication systems for example. Second, we need to understand exactly how well we can manage the network under difficult conditions.

Third, we need to think about real-time interactions, such as video and audio. We need to work out how best to move from real-time interactive chat to something more like an exchange of emails to which you can attach audio and video.

Delivering a bundle is somewhat like delivering an email. If there is a problem delivering your email, it is usually retried, and if that goes on too long it times out and is rejected. Our protocols behave similarly, so you have to keep in mind that a reply can take quite a while and that delivery time varies from case to case.

Wired: We often talk about how technologies developed for space can be applied on Earth. Can the technologies you talk about be used on our planet?

Cerf: Certainly. For example, DARPA funded tests of these highly resilient protocols for tactical military communications. The tests were very successful: under conditions hostile to data transmission, we were able to move three to five times more data than with TCP/IP.

In part, this is because the network nodes themselves can store the data in transit. So if something goes wrong, we do not need to retransmit from one end to the other. It is enough to resend from the last node that holds a copy. This approach has proved very effective. And, of course, it became possible mainly because memory has become so much cheaper.
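
A rough way to see why resending from a nearby node helps is to count link transmissions for a single lost hop. The Python sketch below is a toy model with assumed inputs (one loss, a fixed number of hops). It is not a description of the DARPA tests and does not reproduce the three-to-five-times figure.

```python
def _check(hops: int, failed_hop: int) -> None:
    if not 1 <= failed_hop <= hops:
        raise ValueError("failed_hop must be between 1 and hops")


def end_to_end_transmissions(hops: int, failed_hop: int) -> int:
    """Link transmissions when one loss at failed_hop (1-based) forces
    a resend from the original source."""
    _check(hops, failed_hop)
    first_attempt = failed_hop  # links used up to and including the loss
    retry = hops                # the whole path again
    return first_attempt + retry


def hop_by_hop_transmissions(hops: int, failed_hop: int) -> int:
    """Same loss, but the node just before failed_hop kept a copy."""
    _check(hops, failed_hop)
    return hops + 1             # every link once, plus one repeat


# Assumed example: a 4-hop path where the last hop fails once.
print(end_to_end_transmissions(4, 4), hop_by_hop_transmissions(4, 4))
```

In this model the saving grows with the number of hops already crossed before the loss, which is exactly where an interplanetary path is most expensive.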

There was another interesting project involving the European Commission. In the north of Sweden, in Lapland, there lives a people called the Sámi, who have been herding reindeer for over 8,000 years. The European Commission funded a research project in which we ran our protocols on laptops mounted on all-terrain vehicles. With their help, it was possible to set up WiFi hotspots in villages in northern Sweden. Roughly speaking, the all-terrain vehicle served as a kind of data mule, carrying information from village to village.

Wired: There was also an experiment called Mocup, which involved controlling a robot on Earth from the ISS. Were these protocols used there too?

Cerf: Exactly. We were all very excited about that experiment, because it showed that although our protocols were designed to work over long distances and with unpredictable delays, under good conditions they can also be used to transmit data in real time.

It seems to me that the field of communications as a whole will benefit from this work. For example, by applying these protocols to mobile phones, we would get a more robust communication platform than we have today.

Wired: So even if my phone had very poor reception at home, I could still call my parents?

Cerf: Rather, you could record a message, and they would eventually receive it. It would not be in real time, of course: if the network problems last long enough, the message arrives later. But either way, it gets delivered.

Source: https://habr.com/ru/post/179895/
