Some details about templates or fork-bomb compilation

Recently I became interested in instantiating plus templates. The term code bloat is used on the Internet . For c ++, this can mean an uncontrolled increase in the code generated by the compiler. The code is increased due to the fact that the instantiation of a new function has a higher priority than the conversion of arguments to a more convenient type. Those. template T foo (T a); for int and char, these are two different functions. You can get one function either by rejecting templates, or using explicit type conversion.

But let's turn the problem inside out and try to get the executable file of the maximum possible size from a minimum of lines of code.

The result was not very impressive - I only got 53Mb of 60 lines of code. And then only for one of the three tested compilers and at the cost of several hours of compilation. The maximum volume / line ratio is 2.3MB / line for a 14MB volume.

How and why it happened - under the cut.

Intel® Core (TM) i3-2330M CPU @ 2.20GHz processor

Linux OS 3.7.3-101.fc17.x86_64

and disabled swap partition.

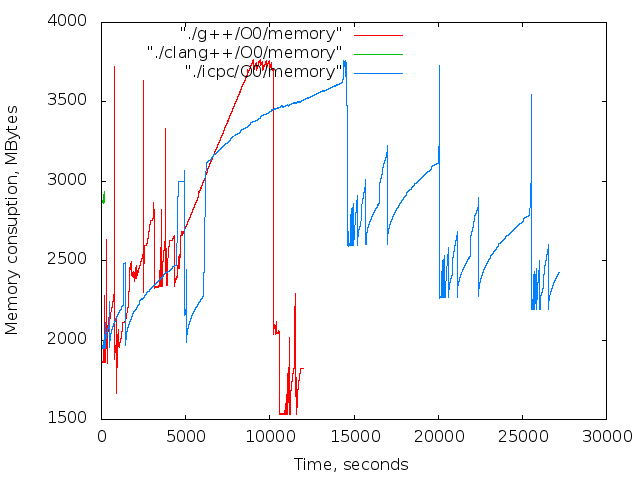

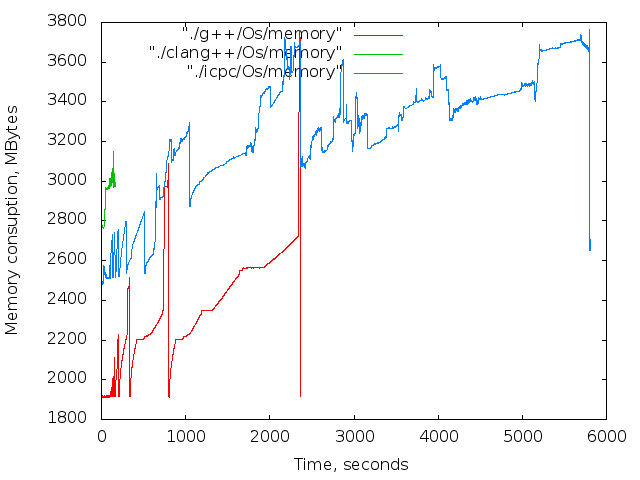

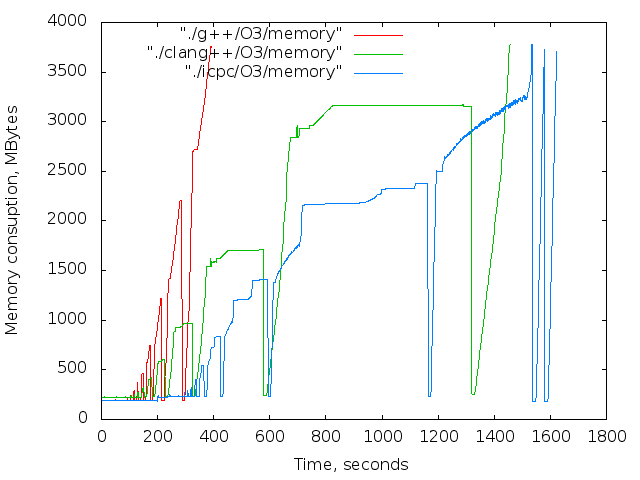

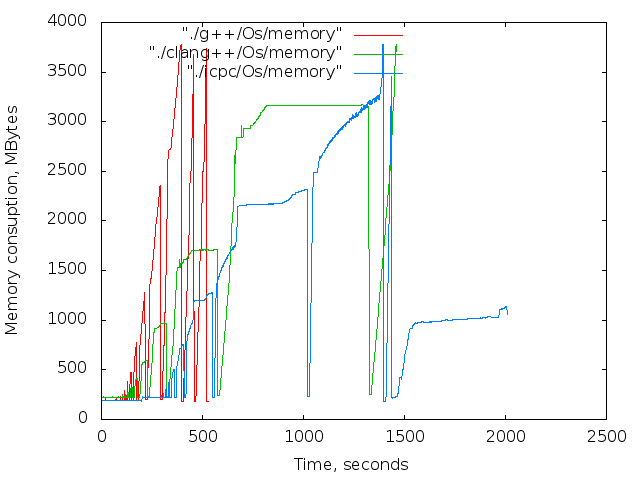

The swap had to be turned off for the same reason that a fork bomb appeared in the title of the post. With a sufficiently large volume of tasks, the compiler left all the memory and began to actively exchange with the disk, which tightly and permanently hung up the machine.

Compiler Versions:

As a result, the main () function should be filled with emptyuseless nop operations.

')

The size of the nop sequence is theoretically determined by the depth of the recursion. The g ++ mane states that the maximum depth is 17 and 1024 for c ++ 11.

4.7.1.14 for c ++ 03

4.7.1.15 for c ++ 11

Which was confirmed by experience. For a sufficiently large LVL compilers crashed. I was surprised by clang ++ which crashed to 2 13 , in contrast to g ++ and icpc reaching 2 17 .

The assembly was carried out by the teams:

It looks like the images are scaled correctly only in chrome.

For other browsers

Using a tree, we do not go beyond the maximum depth of recursion.

The source code takes 19 lines and looks like this:

The result is a tree, each node of which has its own type. Each type is characterized by a pair of numbers:

Each node within the same level is numbered from 0 to N.

I did not bother with the nop-s here, I used addition. Global long x - used to control the correctness of the assembly. As a result, returns 2 LVL + 1 .

The assembly was carried out by the teams:

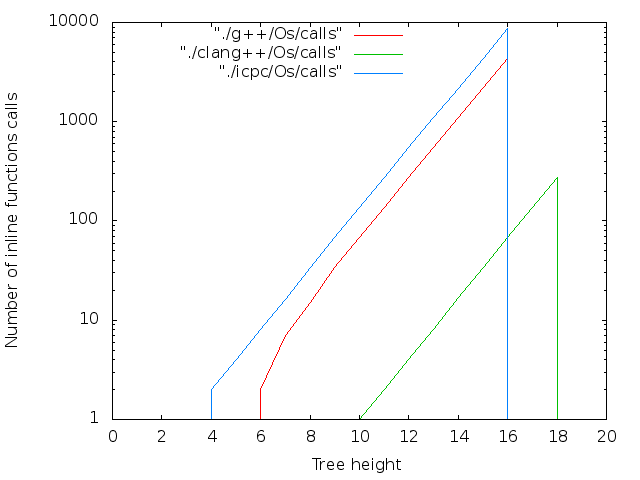

The maximum LVL turned out to be 18 for clang ++. For g ++ and icpc - 16, and regardless of whether the option - std = c ++ 11 was specified or not. The compilers did not have enough memory.

It looks like the images are scaled correctly only in chrome.

For other browsers

Maximum file size:

We modify the source code a little so that all template parameters are specified when describing it. We divide the source code into three files — the template description, the main function, and the partial instantiation of the subtrees:

and meaninglessness of the result.

The largest volume / number of lines = 2.3Mb / string was obtained for the array from the first part (icpc -O0 list.cc)

Metric is of course funny, but funny. 2.3 - the maximum that happened. I would be glad to know if someone gets more relevant.

Good luck to us all.

Upd : Strip did not, but it would be necessary. I thought there was a couple of kilobytes - but it turned out to be a percentage of the size, and quite large. After strip, the maximum size dropped to 37MB (from 53). and 8.6MB (p 14). Accordingly, the ratio is 1.43MB / string.

But let's turn the problem inside out and try to get the executable file of the maximum possible size from a minimum of lines of code.

The result was not very impressive - I only got 53Mb of 60 lines of code. And then only for one of the three tested compilers and at the cost of several hours of compilation. The maximum volume / line ratio is 2.3MB / line for a 14MB volume.

How and why it happened - under the cut.

Resources

One laptop with 4GB of memory,Intel® Core (TM) i3-2330M CPU @ 2.20GHz processor

Linux OS 3.7.3-101.fc17.x86_64

and disabled swap partition.

The swap had to be turned off for the same reason that a fork bomb appeared in the title of the post. With a sufficiently large volume of tasks, the compiler left all the memory and began to actively exchange with the disk, which tightly and permanently hung up the machine.

Compiler Versions:

- g ++ (GCC) 4.7.2 20120921 (Red Hat 4.7.2-2)

- Intel® C ++ Intel® 64 Compiler for Applications running on Intel® 64, Version 13.1.1.163 Build 20130313

- clang version 3.3 (trunk 179304)

Long arrays

The easiest way is to organize an array of the commerce step and string functions on it. Like this:template<long n> inline void nop(){nop<n-1>();asm("nop"); } template<> inline void nop<0>() {asm("nop");} int main(int argc, char ** argv) { nop<LVL>(); return 0; } As a result, the main () function should be filled with empty

')

The size of the nop sequence is theoretically determined by the depth of the recursion. The g ++ mane states that the maximum depth is 17 and 1024 for c ++ 11.

Quote from mana

In the standards of specific numbers, I did not find. There was only a point that the maximum depth of recursion is determined by the implementation:-ftemplate-depth = n

Set the maximum depth depth for the template classes to n. Depth limit is needed

to detect endless recursions during template class instantiation. ANSI / ISO C ++ conforming programs must not rely on a

maximum depth greater than 17 (changed to 1024 in C ++ 11). The default value is 900, as the compiler can run out of

stack space before hitting 1024 in some situations.

Set the maximum depth depth for the template classes to n. Depth limit is needed

to detect endless recursions during template class instantiation. ANSI / ISO C ++ conforming programs must not rely on a

maximum depth greater than 17 (changed to 1024 in C ++ 11). The default value is 900, as the compiler can run out of

stack space before hitting 1024 in some situations.

4.7.1.14 for c ++ 03

4.7.1.15 for c ++ 11

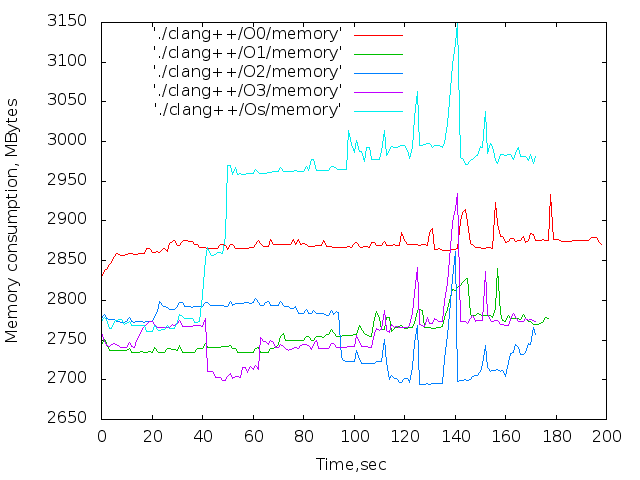

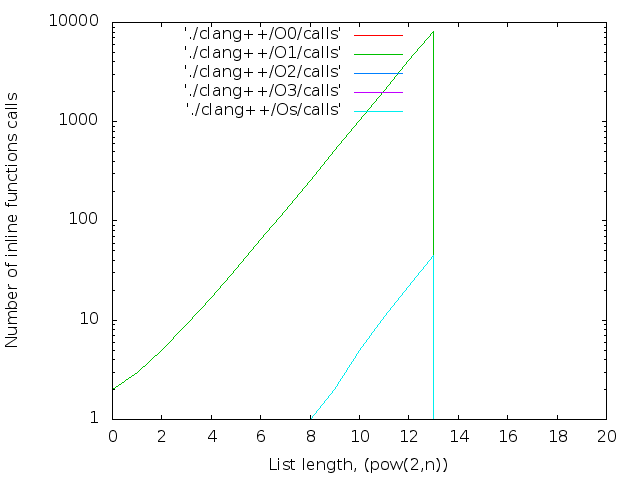

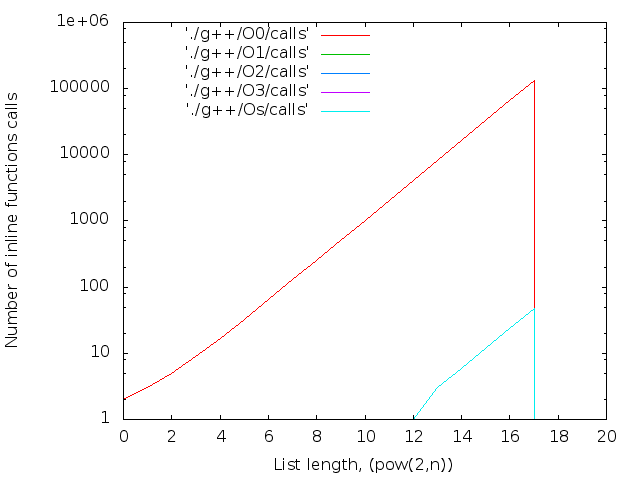

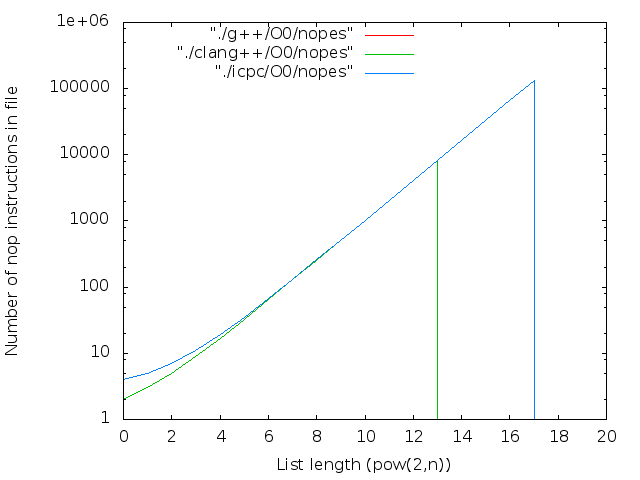

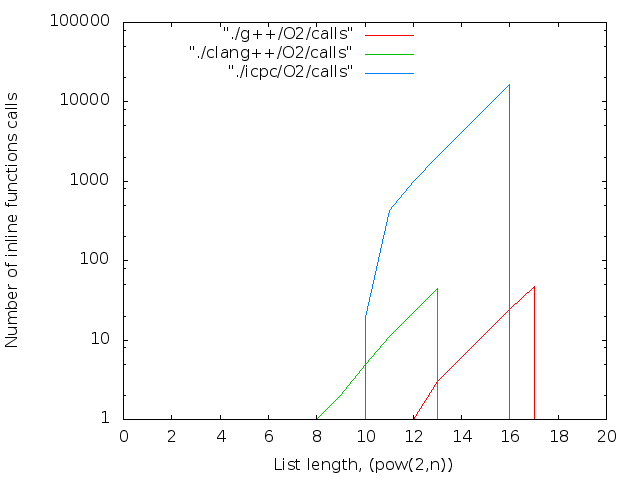

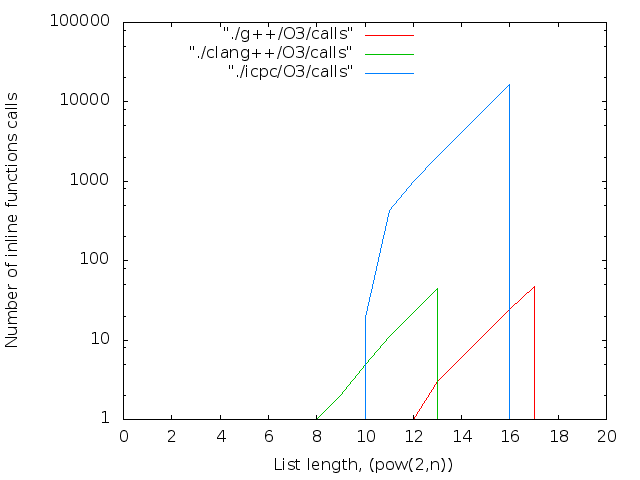

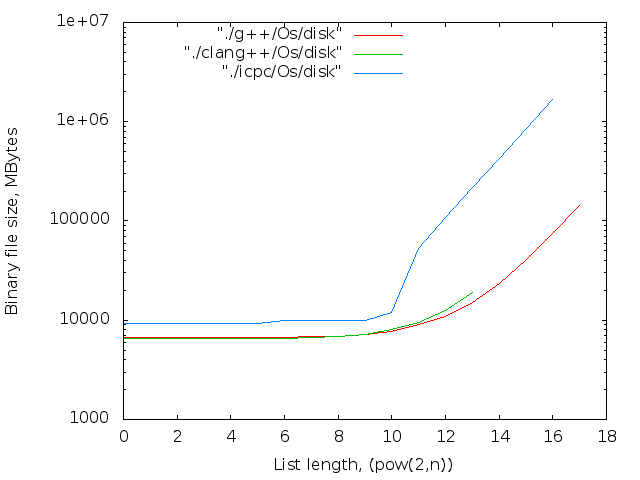

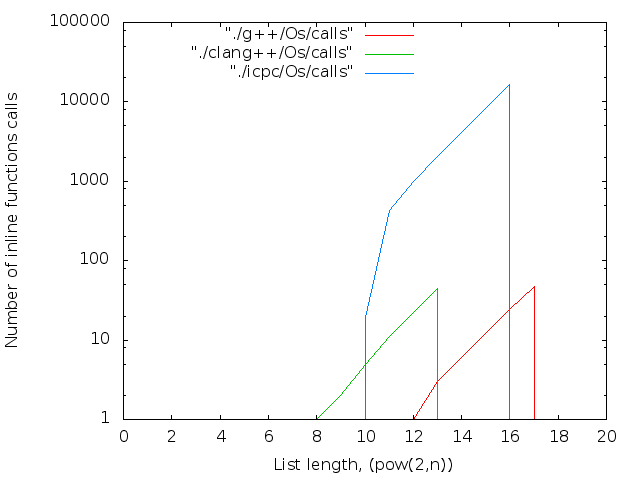

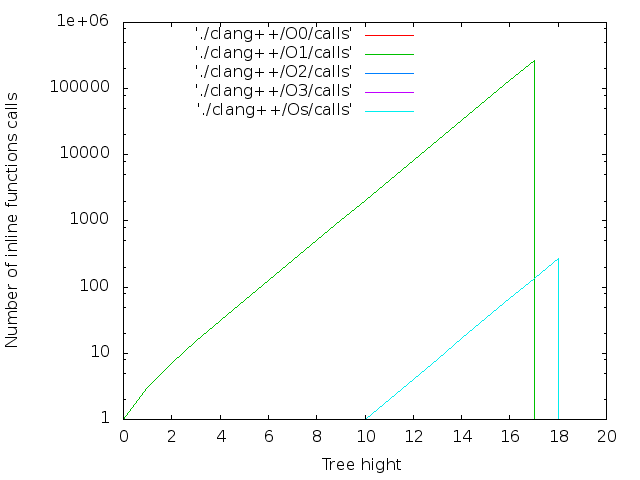

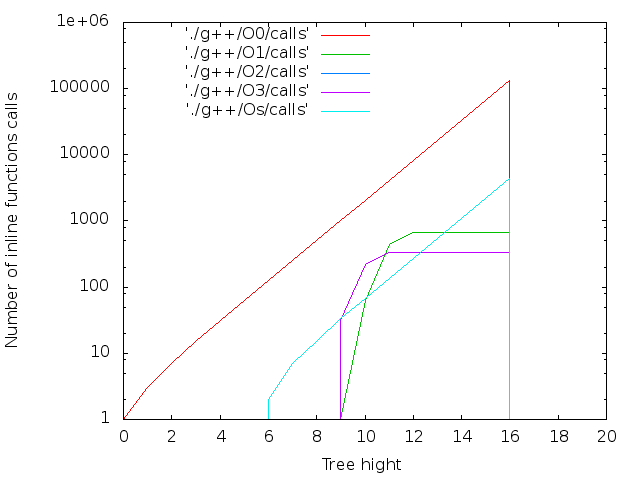

Which was confirmed by experience. For a sufficiently large LVL compilers crashed. I was surprised by clang ++ which crashed to 2 13 , in contrast to g ++ and icpc reaching 2 17 .

The assembly was carried out by the teams:

clang++ -DLVL=$(( 2**$n)) -o list$n ./list.cc -ftemplate-depth=3000000 -O$x g++ -DLVL=$(( 2**$n)) -o list$n ./list.cc -ftemplate-depth=3000000 -O$x icpc -DLVL=$(( 2**$n)) -o list$n ./list.cc -O$x withinbuild script

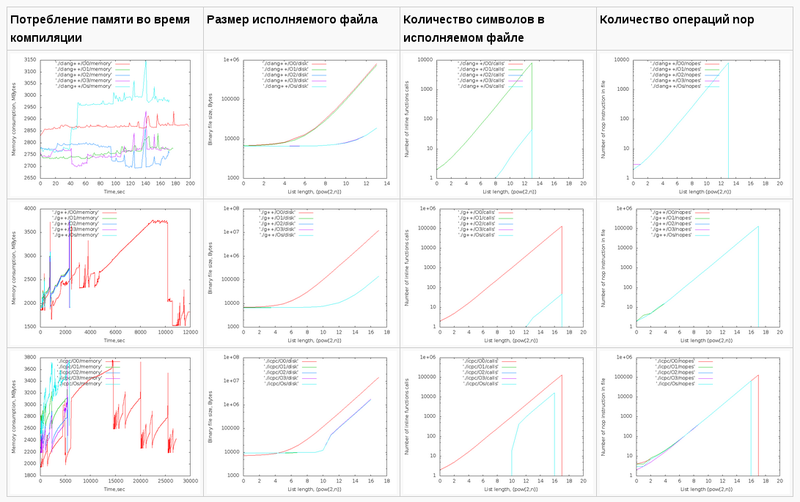

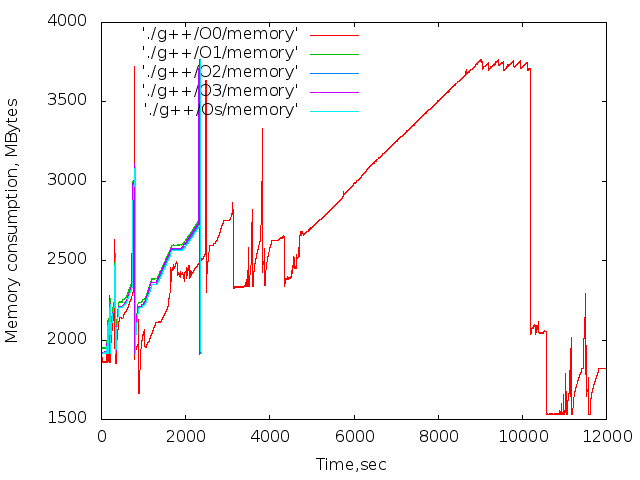

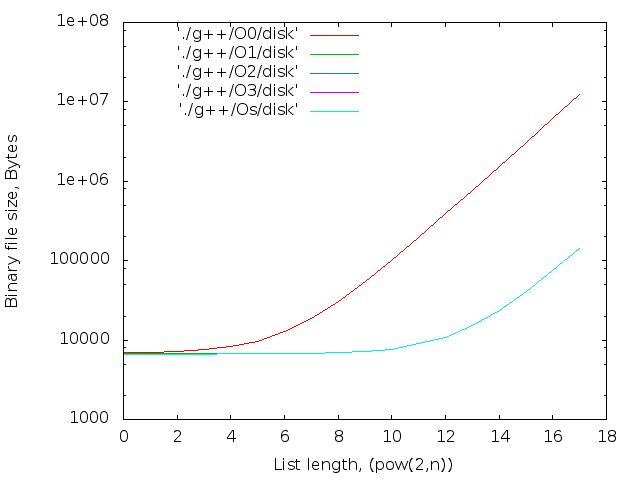

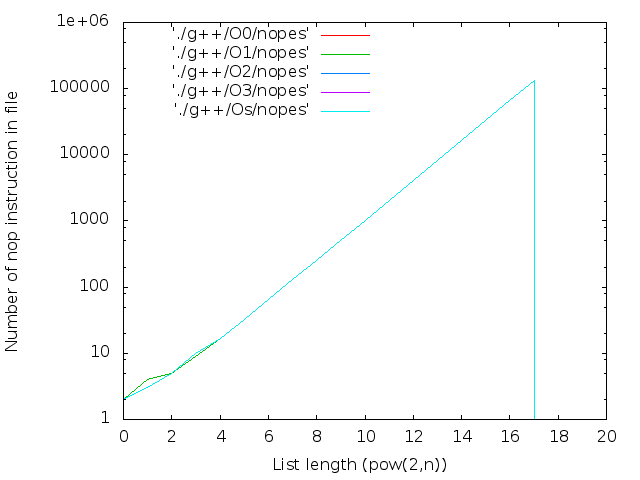

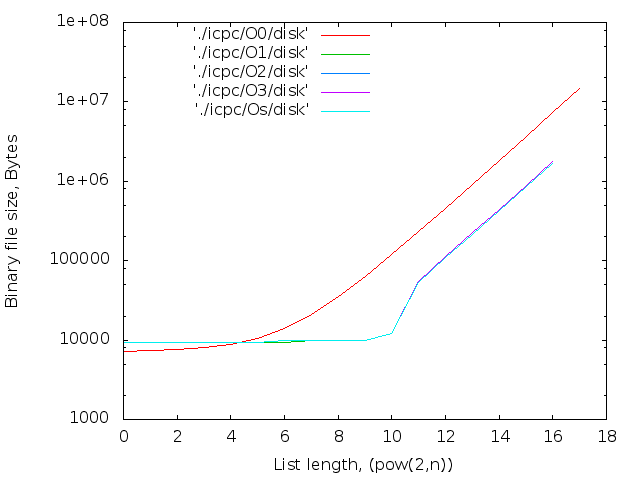

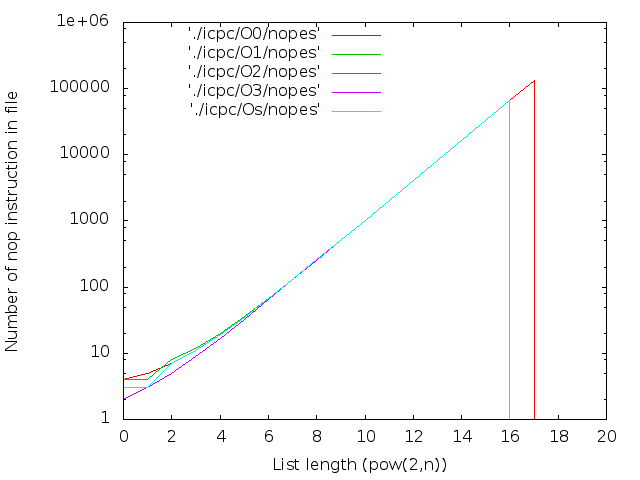

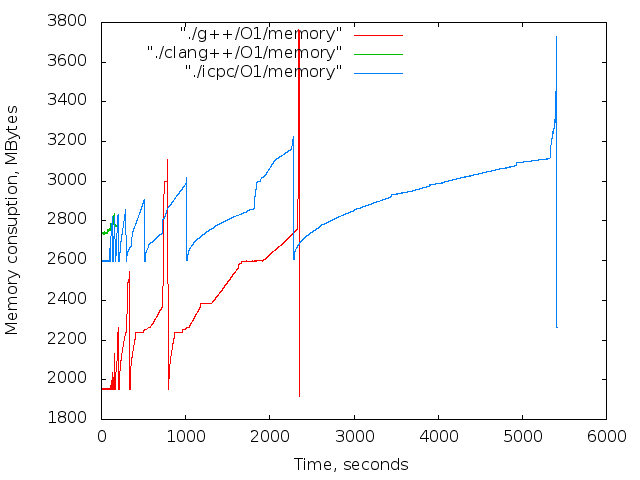

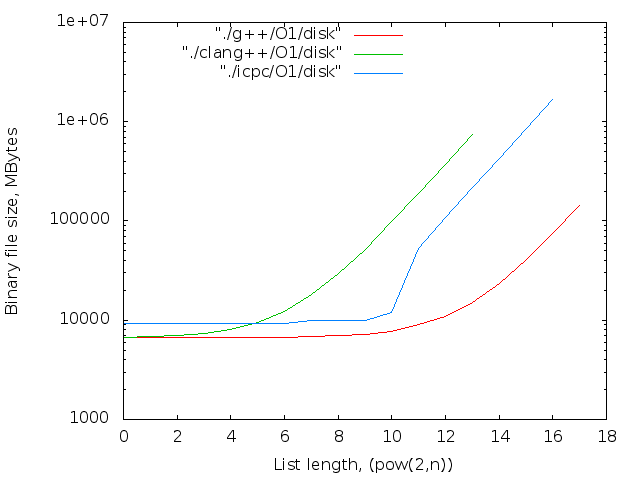

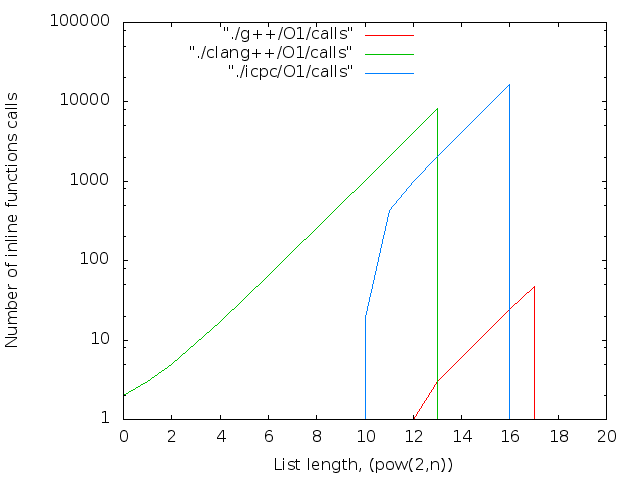

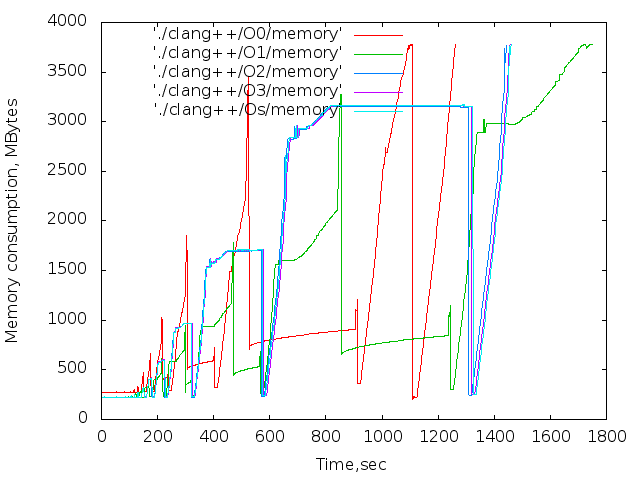

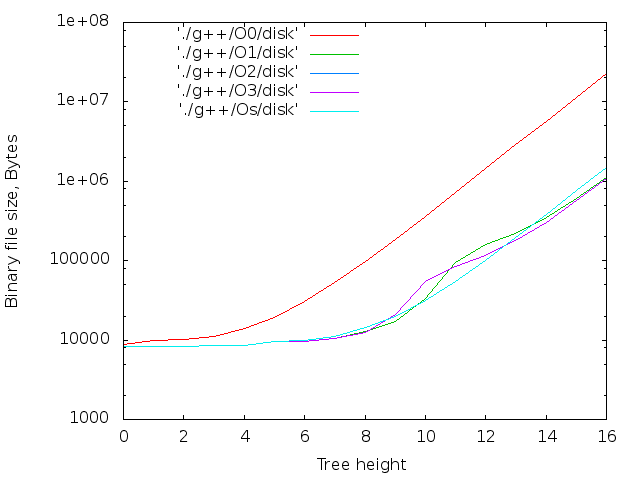

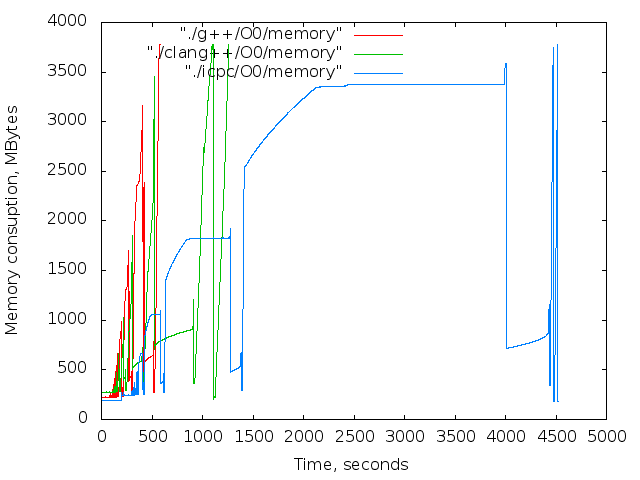

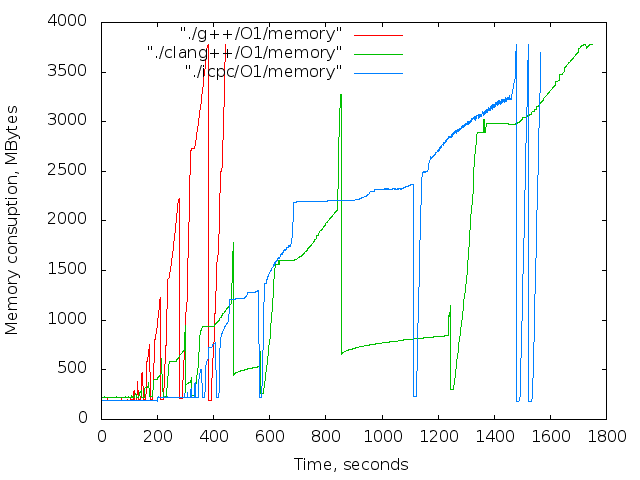

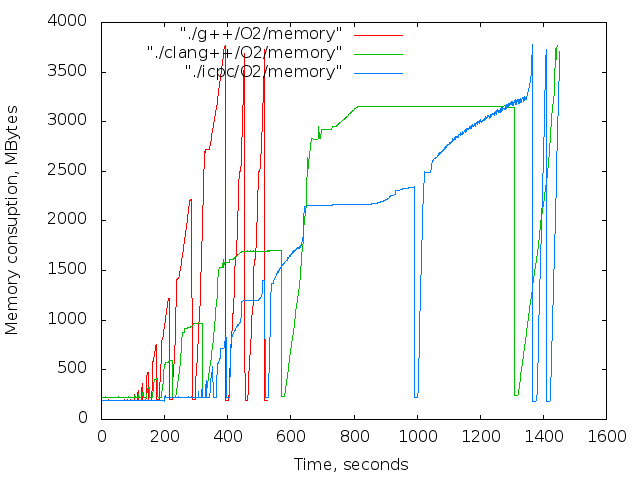

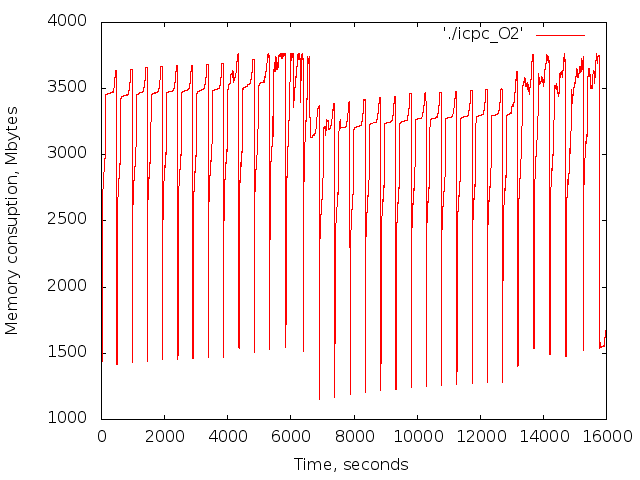

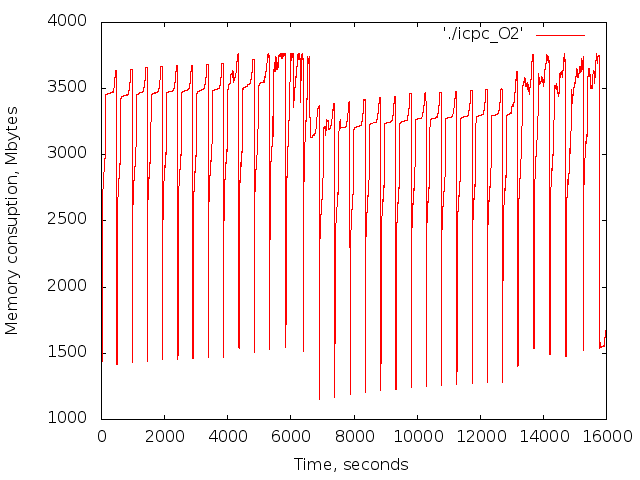

Binaries were collected consistently and for a long time. For each compiler, for each level of optimization. Assembly results are shown as graphs. Four columns of images: #/bin/bash MIN=0 MAX=19 for i in 2; do mkdir -p attempt$i cd attempt$i for CXX in clang++ g++ icpc do mkdir -p $CXX cd $CXX for O in O0 O1 O2 O3 Os do mkdir -p $O cd $O (while :;do free -m |grep Mem|awk '{print $3}' >> ./memory;sleep 1;done)& TIME=$! for i in $(seq $MIN $MAX) do # CMD="$CXX -$O ../../../fbomb.cc -DLVL=$i -o fbomb$i" #-save-temps=obj " CMD="$CXX -$O ../../../list.cc -DLVL=$(( 2 ** $i)) -o list$i -ftemplate-depth=3000000" #-save-temps=obj " echo $CMD $CMD sleep 5 sleep 5 done kill -9 $TIME cd .. done cd .. done cd .. done - The dependence of memory on time. These graphs are given for reference only, because at compile time, the X server and the browser worked, but the main trends are visible.

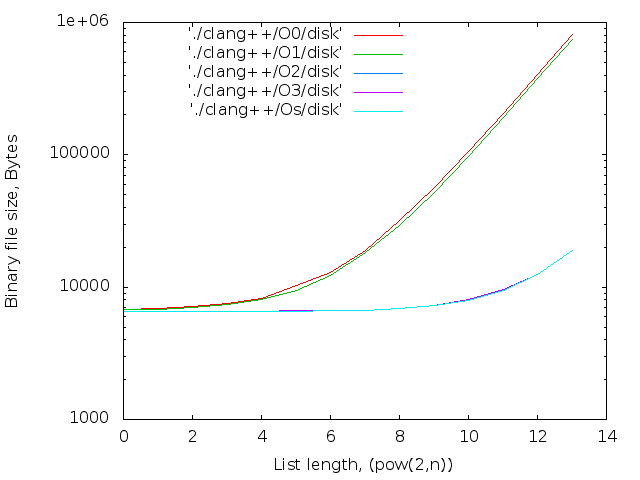

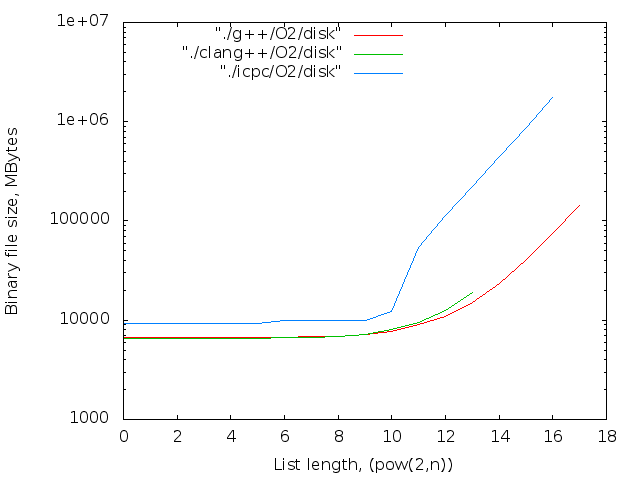

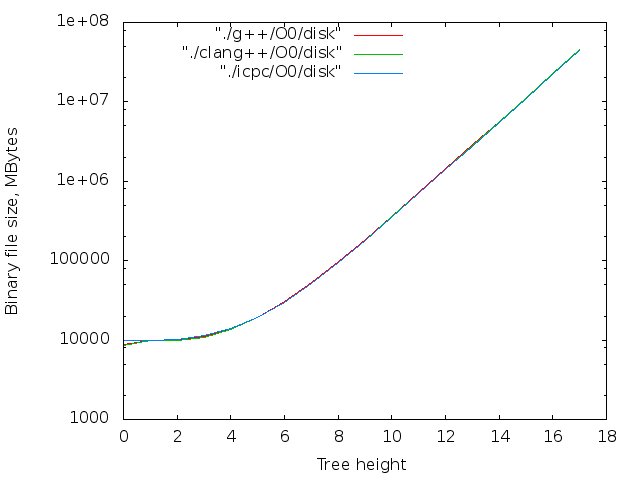

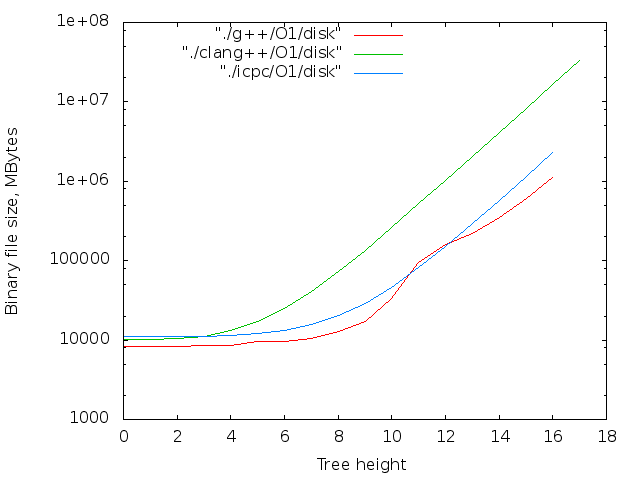

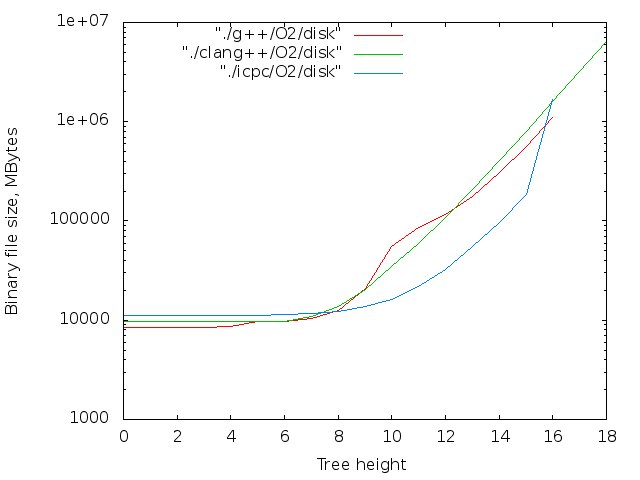

- The size of the binary file. Maximum turned out - 14MB

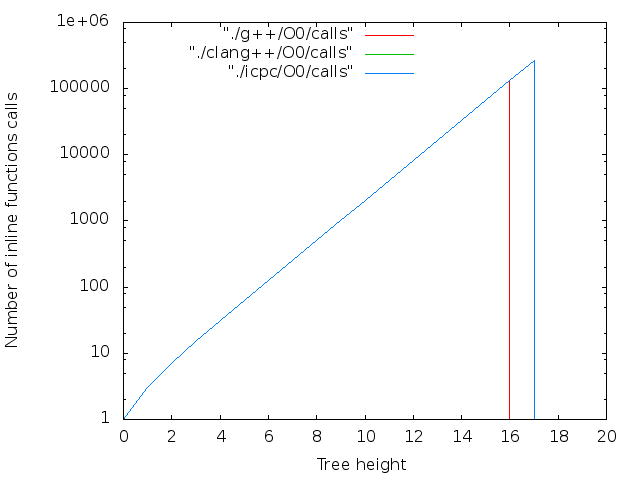

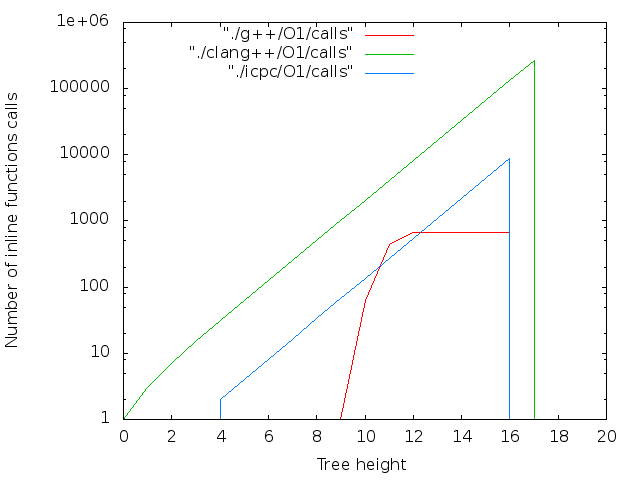

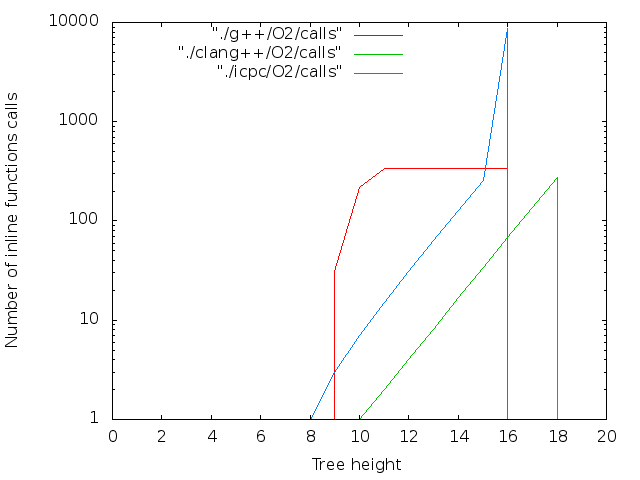

- Characters. The inline keyword is only recommending to the compiler, so for large N. The Nops are grouped into normal functions. They are calculated as follows:

nm --demangle ./list$i|grep nop|wc -l - The number of honest nop-s. Calculated from the disassembler:

objdump -d ./list$i|grep 'nop$'|wc -l

It looks like the images are scaled correctly only in chrome.

For other browsers

screenshot

and a link to the archive with all the images

Pictures

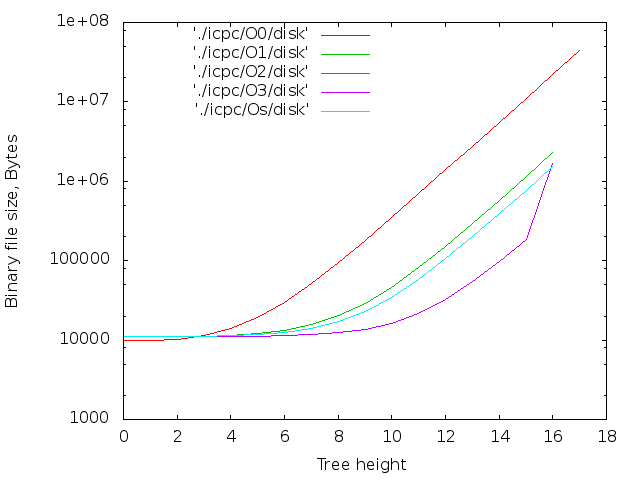

The maximum file size is 14MB for 2 17 icpc compilers. For g ++ - 12 MB. Both at O0. The O0 optimization level does not perform inline substitution, so the number of nop <long> characters is the same as the number of nop operations.Comparison of different levels of optimization for the same compilers

| Memory consumption at compile time | Executable file size | The number of characters in the executable file | Number of nop operations |

|---|---|---|---|

|  |  |  |

|  |  |  |

|  |  |  |

Comparing different compilers with different levels of optimization

| Memory consumption at compile time | Executable file size | The number of characters in the executable file | Number of nop operations |

|---|---|---|---|

|  |  |  |

|  |  |  |

|  |  |  |

|  |  |  |

|  |  |  |

Tall trees

For a linear data structure, the size of the generated file is limited by at least the maximum recursion depth of the default (256 for clang ++, 900 for g ++). To get around it, you can try to create a tree. The maximum theoretical depth of the compilation tree is (sizeof (long) -1) == 63. A 2 64 bytes will overflow any disk. The practical limit is much less.Using a tree, we do not go beyond the maximum depth of recursion.

The source code takes 19 lines and looks like this:

#ifndef LVL # define LVL 3 #endif long x = 0; template<int N=LVL, long I=0> struct foo{ inline static double bar(double m) {x++;return foo<N-1,I>::bar(m) + foo<N-1,((1<<(LVL-N))|I)>::bar(m) + I;}; }; template<long I> struct foo<0,I>{ inline static double bar(double m) {x++; return m;} }; #include <iostream> int main(int argc, char **argv){ double ret = foo<>::bar(argc); std::cout << x << " " << ret << std::endl; return int(ret); } The result is a tree, each node of which has its own type. Each type is characterized by a pair of numbers:

- N is the level number;

- I - node number level.

Each node within the same level is numbered from 0 to N.

I did not bother with the nop-s here, I used addition. Global long x - used to control the correctness of the assembly. As a result, returns 2 LVL + 1 .

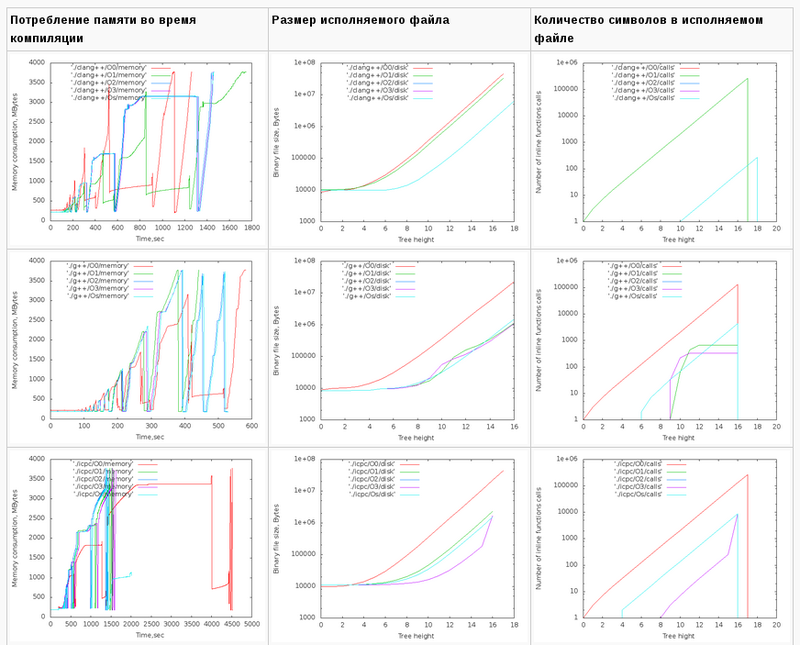

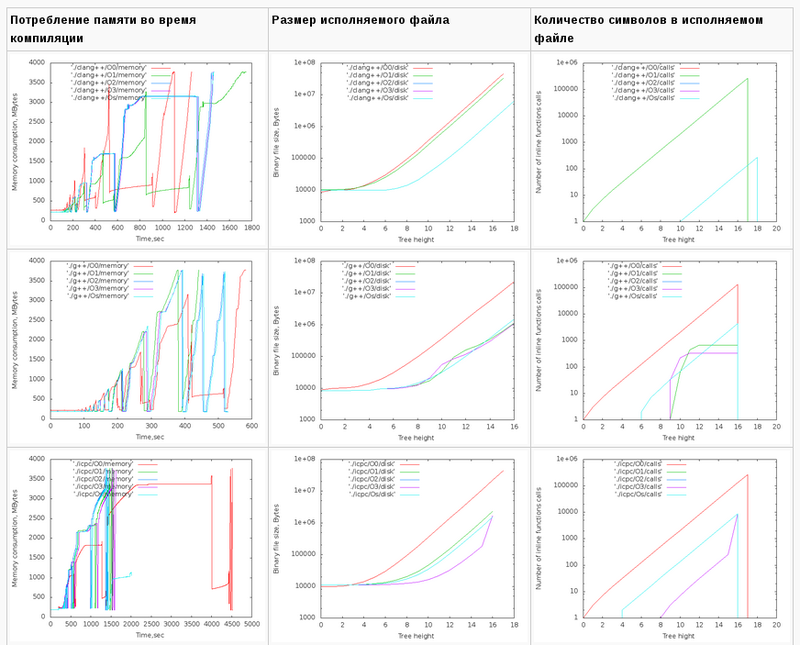

The assembly was carried out by the teams:

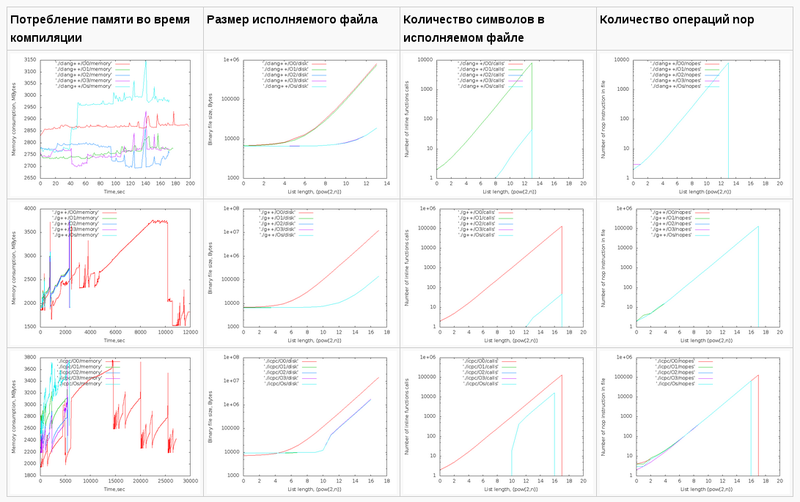

clang++ -DLVL=$n -o fbomb$n ./fbomb.cc -O$x g++ -DLVL=$n -o fbomb$n ./fbomb.cc -O$x icpc -DLVL=$n -o fbomb$n ./fbomb.cc -O$x in the same scenario as above.The maximum LVL turned out to be 18 for clang ++. For g ++ and icpc - 16, and regardless of whether the option - std = c ++ 11 was specified or not. The compilers did not have enough memory.

It looks like the images are scaled correctly only in chrome.

For other browsers

screenshot

and a link to the archive with all the images

Pictures for the tree

| Memory consumption at compile time | Executable file size | The number of characters in the executable file |

|---|---|---|

|  |  |

|  |  |

|  |  |

| Memory consumption at compile time | Executable file size | The number of characters in the executable file |

|---|---|---|

|  |  |

|  |  |

|  |  |

|  |  |

|  |  |

Maximum file size:

- 43MB for icpc -O0 -DLVL = 17;

- 42MB for clang ++ -O0 -DLVL = 17;

- 22MB for g ++ -O0 -DLVL = 16.

Explicit instantiation

43MB is not so little, but is it possible to make the file even more with a given amount of RAM? It turned out possible, but only one compiler out of three - icpc. To do this, use external templates and explicit instantiation.We modify the source code a little so that all template parameters are specified when describing it. We divide the source code into three files — the template description, the main function, and the partial instantiation of the subtrees:

fbomb.hh

extern long x; template<int L=LVL, int N=L, long I=0> struct foo{ inline static double bar(double m) {x++;return foo<L,N-1,I>::bar(m) + foo<L,N-1,((1<<(LN))|I)>::bar(m) + I;}; }; template<int L, long I> struct foo<L,0,I>{ inline static double bar(double m) {x++; return m;} }; main.cc

#include "fbomb.hh" //for i in $(seq 0 13);do echo "extern template struct foo<LVL,L,$i>;";done #define L (LVL-5) extern template struct foo<LVL,L,0>; extern template struct foo<LVL,L,1>; extern template struct foo<LVL,L,2>; extern template struct foo<LVL,L,3>; ... extern template struct foo<LVL,L,30>; extern template struct foo<LVL,L,31>; #include <iostream> long x = 0; int main(int argc, char **argv){ double ret = foo<LVL>::bar(argc); std::cout << x << " " << ret << std::endl; return int(ret); } part.cc

It turned out that g ++ -c main.cc -DLVL = 21 crashes due to lack of memory as well as with full instantiation, regardless of the version of the standard. The same situation for clang ++. Icpc compiles main.cc in less than a second. However, the compilation of subtrees took more than 4 hours: template class foo<LVL, _L, _I>; for i in $(seq 0 31);do echo -n "$i:";date; icpc -O2 -c ./part.cc -o part21_16_$io -DLVL=21 -D_L=16 -D_I=$i;sleep 10; done Memory consumption during subtree compilation

Linker took less than a minute. The result is a 53MB file. This file was built with -O2. -O0 would give a larger size, but I didn’t reassemble it several times because of the time

The largest volume / number of lines = 2.3Mb / string was obtained for the array from the first part (icpc -O0 list.cc)

Metric is of course funny, but funny. 2.3 - the maximum that happened. I would be glad to know if someone gets more relevant.

Good luck to us all.

Upd : Strip did not, but it would be necessary. I thought there was a couple of kilobytes - but it turned out to be a percentage of the size, and quite large. After strip, the maximum size dropped to 37MB (from 53). and 8.6MB (p 14). Accordingly, the ratio is 1.43MB / string.

Source: https://habr.com/ru/post/179137/

All Articles