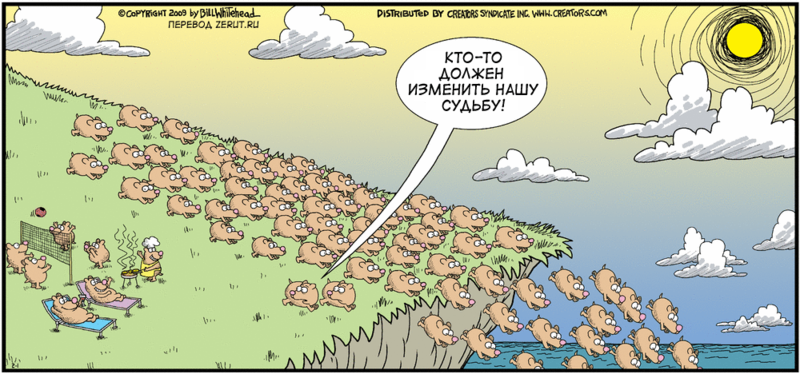

Click-to-visit problems: how many lemmings drown along the way?

In a conversation with colleagues from AdRiver, it came up that not every click on an advertisement turns into a visit to the target site. I would have considered a loss of 5-10% of visitors normal (quite natural numbers, well within the overall measurement error). But, as it turned out, losses can reach 50%. So we decided to find out where the leaks occur and why ordinary, healthy clicks never become visits.

Obstacle 1: on this shore

Almost always, before moving from the site that shows the ad to the target site, the user passes through a click-accounting page that is invisible to the average user. This page does nothing except record the click on the advertisement and redirect the user onward. Not every ad network behaves this way: it matters mainly when clicks (CPC) or actions (CPA) are billed and every user action has to be counted strictly. But advertising sold by CPM (a fixed cost per thousand impressions) is sometimes tracked the same way: between the publisher site (where the ad is shown and the click is made) and the target site (where the user should end up), the user passes through the ad server, which exists only to register the click and which later uses the visit data to build analytical reports.

By itself this obstacle does not cause click losses: the user takes no extra action between the click being recorded and the move to the target site. But under certain conditions this step (especially when there is a chain of several redirects rather than one) can take significant time, from 1 second and up, which increases losses at the following steps. During the redirects the user sees a white screen in the browser and does not know whether the target site will appear after N seconds of waiting or not.
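The click-accounting step described above can be sketched as a tiny HTTP handler. This is only an illustration under stated assumptions, not how AdRiver or any particular ad network implements it: the names `ClickHandler`, `start_click_server`, `CLICK_LOG` and the `ad` and `to` query parameters are hypothetical, and a real ad server would look the target up by ad id rather than trust a URL from the query string (which would be an open redirect).

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical in-memory click log; a real ad server would use durable storage.
CLICK_LOG = []


class ClickHandler(BaseHTTPRequestHandler):
    """Records the click, then redirects the browser to the target site."""

    def do_GET(self):
        query = parse_qs(urlparse(self.path).query)
        target = query.get("to", [None])[0]
        if target is None:
            self.send_error(400, "missing 'to' parameter")
            return
        # Step 1: record the click (the only real job of this page).
        CLICK_LOG.append({"ad": query.get("ad", ["?"])[0], "target": target})
        # Step 2: send the user onward with a single redirect.
        self.send_response(302)
        self.send_header("Location", target)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the example quiet


def start_click_server(port=0):
    """Start the server on a background thread; port=0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), ClickHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Every extra hop of this kind adds at least one more DNS lookup, connection and round trip before the user sees anything, which is why a chain of several such redirects is what makes the white screen long.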


What can be done: if user actions can be tracked through JavaScript (by sending invisible pixels to the statistics server for those users whose browsers support it), that is the way to go. In addition, reduce the DNS lookup time for the statistics server to zero (it usually accounts for most of the waiting time during redirects). You can do this by requesting a transparent pixel from that domain when the advertisement loads (or by serving the ads from the same domain that counts the clicks): the browser makes a normal request to the site, caches the DNS response and can reuse it shortly afterwards. And, of course, both the statistics server and the ad-serving servers need round-the-clock availability and health monitoring, not least to keep response times at the minimum (10-20 ms).

Losses: up to 1.5% of clicks; these are almost always non-targeted visits. If such losses occur, the advertising network does not record them in any way, since they happen before the click itself is recorded.

Obstacle 2: local inaccessibility

This is the best-known problem, and the one people try to blame for all troubles and losses. The point is that a specific user cannot always connect to a specific site, even when both the site and the user are online. If both the site and the user are in Moscow, the situation is more or less stable and losses usually do not exceed 0.001%. But if the site is hosted somewhere in Europe (on the currently popular German hosting platforms) and the users are beyond the Urals, losses are no longer so negligible and can reach 3% of clicks.

This category covers not only network accessibility of web resources but also long server response times, which network problems can make worse (for users, by and large, it makes no difference why the browser could not open the site: packets lost along the way or the browser giving up on waiting for the server). A normal server response time is up to 200 ms including all network delays, so if your users are spread across Russia, the server should be in Moscow and return pages in no more than 100 ms; in that case users will not notice any server problems. Even for large resources, up to 1% of users do not wait long enough for the server to respond (a typical case: a mobile user moves from one base station to another, and the request has to be resent if the response did not arrive quickly enough).

And, of course, the site itself may be unavailable because of high traffic or server problems (we check how sites hold up under load with loadimpact.com). But for high-quality, reliable sites the share of such problems is usually small, up to 0.1%.

What can be done: diagnose the problems target users have connecting to the site. This can be done by monitoring availability from multiple locations (better still, use several monitoring services to maximize coverage). Then either move the site to another hosting provider (one with significantly fewer availability problems) or write the measured share of losses off the advertising budget straight away: those users will not reach the site.
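As a rough sketch of such a diagnosis, the snippet below times a single request and summarizes a batch of measurements against the 200 ms figure mentioned earlier. The function names `probe` and `summarize` are hypothetical, and one machine running this script is not a substitute for monitoring from several locations; it only shows what is being measured.

```python
import time
import urllib.request


def probe(url, timeout_s=5.0):
    """Return the time to the first response byte in ms, or None on failure."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            resp.read(1)
    except OSError:  # covers URLError, HTTPError, timeouts, refused connections
        return None
    return (time.perf_counter() - start) * 1000.0


def summarize(samples_ms, slow_ms=200.0):
    """Share of failed and slow probes, in percent of all probes."""
    if not samples_ms:
        raise ValueError("no samples to summarize")
    total = len(samples_ms)
    failed = sum(1 for s in samples_ms if s is None)
    slow = sum(1 for s in samples_ms if s is not None and s > slow_ms)
    return {"failed_pct": 100.0 * failed / total,
            "slow_pct": 100.0 * slow / total}
```

In use, `probe` would be called on a schedule from each monitoring location and the collected values passed to `summarize`; the measured time includes the DNS lookup, the connection and the server's own response time, which is what the user actually experiences.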

Losses: 1-10% of clicks.

Obstacle 3: on the road

You have probably heard about the next category of obstacles but never thought of it this way: as a thief stealing money from your pocket. It is the white screen in the browser of users who are opening your site. This is a typical problem of slow sites, and the remedies are just as typical: compressing data, combining and minifying style files, and moving client logic (JavaScript) to the bottom of the page or making it as light as possible if it has to load at the top. According to the WEBO Software study of the speed of online stores, the white-screen stage on popular sites now lasts no more than 1.5 seconds for most users. This is a good value, and it secures a visit for the vast majority of users (clicks turn into visits), but if users have connection problems (the regions, mobile Internet), the waiting time grows significantly.

The most interesting part is that practically no counter records users dropping off at this stage of page loading: the counters themselves have not loaded yet, so they cannot count anything.

What can be done: apply as many front-end optimization (FEO) techniques as possible to cut the white-screen time (the time before the DOMready event) to 1-1.5 s on a 5 Mbit/s connection. You can check the result with www.webpagetest.org.
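To get a feel for what the 5 Mbit/s budget means in bytes, and for how much compression alone can buy, here is a minimal sketch. It is an idealized model: `transfer_seconds` ignores latency, TCP slow start and packet loss, both function names are hypothetical, and the sample markup is synthetic and far more repetitive than a real page, so real savings will be smaller.

```python
import gzip


def transfer_seconds(n_bytes, mbit_per_s=5.0):
    """Idealized transfer time: ignores latency, TCP slow start, packet loss."""
    return n_bytes * 8 / (mbit_per_s * 1_000_000)


def gzip_saving(payload):
    """Fraction of bytes saved by gzip at the default compression level."""
    return 1.0 - len(gzip.compress(payload)) / len(payload)


# Synthetic, highly repetitive markup (hypothetical); real pages compress less.
html = b"<li class='item'><a href='/catalog/item'>Item</a></li>\n" * 500

packed = len(gzip.compress(html))
print(f"raw:  {len(html)} bytes, {transfer_seconds(len(html)):.3f} s at 5 Mbit/s")
print(f"gzip: {packed} bytes, {transfer_seconds(packed):.3f} s at 5 Mbit/s")
```

In this model 625,000 bytes take exactly 1 second at 5 Mbit/s, so a 1-1.5 s white screen leaves room for well under a megabyte of blocking resources even before network latency is counted.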

Losses: 2-10% of clicks. In most cases these are non-targeted visits: users close the browser before their arrival at the target site is recorded.

Obstacle 4: almost across

The problem here is how far down the page (in load order) the counter sits and how long it takes to send its data. If the full page load takes several seconds or even tens of seconds, the user may not wait for the counter and will close the page (or follow a link) before it fires. This is where the loss occurs. By and large, losses at this stage make up the very bounce rate that everyone tries to reduce (although, as this article shows, it is only the tip of the iceberg of real site availability problems). Naturally, as the site gets faster, the part of the bounce rate caused purely by speed (page load time) goes down. A realistic reduction in the bounce rate is 2-10% (when the site becomes significantly faster and users are already inclined to stay on it, i.e. site speed no longer influences their decision to visit).

For slow sites (overloaded with graphics or interactive elements) there will always be a significant number of speed-related bounces. But with the right approach, up to 80% of them can be eliminated.

What can be done: apply all known front-end optimization techniques, from compressing text and optimizing images to deferred loading of secondary content blocks (widgets). For “heavy” sites it makes sense to develop a “light” version (for regional or mobile users) that users can be switched to (for example, dynamically at the white-screen stage, without additional redirects), thereby minimizing the bounce rate. The goal is to minimize the full page load time (onload). This is the time that Google Analytics reports as site speed and that Google Webmasters takes into account; for measurements you can use the service mentioned in the previous section, www.webpagetest.org.

Losses: 3-20% of clicks.

Obstacle 5: was there a gopher?

Another well-known problem that people like to cite when explaining click losses is the discrepancy between counters. Even when one statistics system records both clicks and visits, minor deviations are possible (collected data out of sync, accounting errors). When different systems record the clicks and count the visits, the discrepancies can be even larger. In our experience, data from different counters can differ by up to 20%. Why does this happen?

Counters use different geo databases (different mappings of IP addresses to cities and countries). As a result, users from Russia are counted as users from other countries, or vice versa: Russian IP addresses turn out to be used by foreign users. Geo databases are in especially poor shape for Russian cities, where accuracy is about 80%. Counters also use different user-accounting schemes with many nuances: how cookies are set (from the same domain via JavaScript or from a third party via HTTP headers); how users sharing one IP address (corporate users) are told apart (by browser or by cookie); and how user devices (especially mobile ones) handle cookies (accept them or not).

What can be done: use a single counter (database) to record both clicks and visits. This minimizes potential discrepancies.

Losses: 1-10% of clicks.

Conclusion

As we can see, seemingly insignificant and barely noticeable problems can add up to a significant drop in the effectiveness of online advertising campaigns (up to 50% of the advertising budget lost for various reasons). Before launching an advertising campaign (or connecting a new channel for attracting users), audit every step of loading the site and make sure there are no significant problems.
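How the per-obstacle figures add up can be checked with a short calculation. The sketch below takes the loss ranges quoted in the five sections and compounds them, assuming (this is an assumption of the example, not a claim from the measurements) that the obstacles act one after another and independently, and that "up to 1.5%" has a lower bound of 0. The names `LOSS_RANGES` and `combined_loss_pct` are hypothetical.

```python
from math import prod

# Per-obstacle loss ranges in percent of clicks, as quoted in this article.
# The 0.0 lower bound for the redirect step is an assumption ("up to 1.5%").
LOSS_RANGES = {
    "redirect": (0.0, 1.5),
    "local inaccessibility": (1.0, 10.0),
    "white screen": (2.0, 10.0),
    "counter not reached": (3.0, 20.0),
    "counter discrepancy": (1.0, 10.0),
}


def combined_loss_pct(losses_pct):
    """Compound sequential, independent losses given in percent."""
    surviving = prod(1.0 - p / 100.0 for p in losses_pct)
    return 100.0 * (1.0 - surviving)


best = combined_loss_pct(low for low, _ in LOSS_RANGES.values())
worst = combined_loss_pct(high for _, high in LOSS_RANGES.values())
print(f"combined loss: {best:.1f}% .. {worst:.1f}%")
```

Under these assumptions the combined loss runs from about 7% in the best case to about 43% in the worst; simply summing the upper bounds gives 51.5%. Both are consistent with the "5-10% is normal, up to 50% is possible" picture from the introduction.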

Source: https://habr.com/ru/post/178209/
