How to hold a two-day online developer conference for $10?

We have just finished DotNetConf, our online community conference for developers who love the .NET platform and open source projects.

All the conference talks are already available via the link on the official website.


Conference platform

It feels a little funny to call the software the conference ran on a "platform"; that sounds far too "enterprise" and official. In the past, we ran the aspConf and mvcConf conferences with sponsors who covered the costs. We used Channel 9's resources and studio, and broadcast video from Seattle or over Live Meeting.

This year, however, we wanted to run the conference as simply and cheaply as possible, and in a more distributed way, so that we could invite speakers from any time zone. How cheap was it? About $10. We will know the exact bill later; the idea was to spin up capacity, run the event, and then throw the resources away.

Video streaming and screen sharing

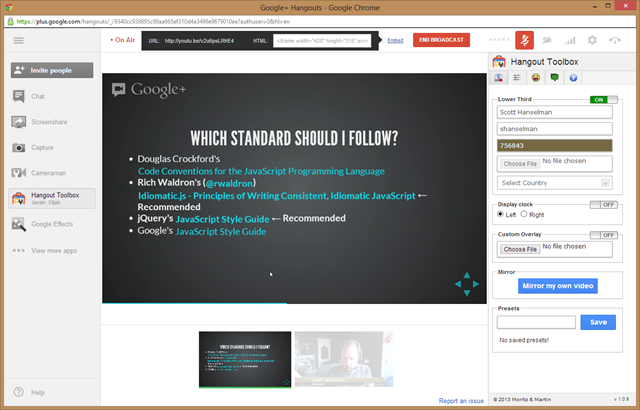

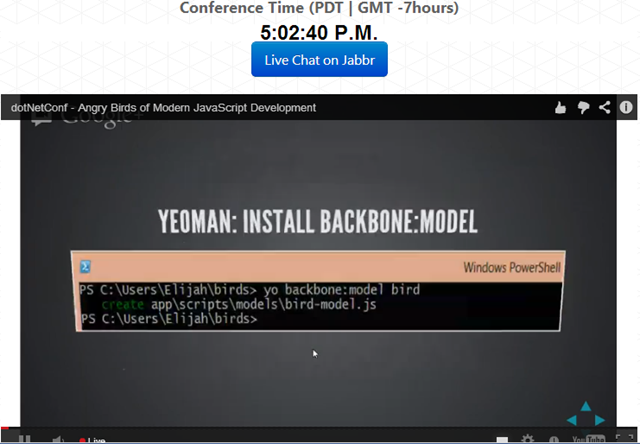

- This year we used Google Hangouts and its "Hangouts On Air" feature. The dotnetconf Google account invites the speaker to a Hangout and turns on the "On Air" option before the broadcast starts. We then use the Hangout Toolbox to overlay graphics and the speaker's name on the video. All speakers set their screen resolution to 1280x768, and the live stream may be scaled down to 480p;

- As soon as you click "Start Broadcast", a YouTube link for the live stream is generated. When you click "End Broadcast", the recording is ready to watch in your YouTube account within a few minutes. The video chat organizers (Javier and I) click "Hide in Broadcast" and fade out, as if we had left the air. You can see in the screenshot how I "left": I am still present, just invisible, and I reappear only when needed. After that, only the speaker remains on the air and their video expands to full screen, which is exactly what we need;

- Important note: instead of running one Hangout for 8 hours straight, we started and stopped a new Hangout for each speaker. This means our recordings are already split into separate videos on the YouTube page. YouTube lets you trim a video at the beginning and end, so no further video editing was needed.

Database

Surprise! We didn't use a database. We didn't need one. We built a two-page site with ASP.NET Web Pages, written in WebMatrix. The site runs in the Windows Azure cloud, and since our data (speakers, schedule, video streams, and so on) rarely changes, we put it in XML files. Of course, that is still a database, just a poor man's database. Why pay for what we don't need?

How did we update the "database" during the conference? Brace yourself: the data lives in Dropbox. (Yes, it could just as well live on SkyDrive or at any URL, but we used Dropbox.)

Our web application fetches the data from Dropbox and caches it. It works very well.
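The post does not show the caching code (the real site is ASP.NET). As a rough illustration of the idea only, here is a minimal JavaScript sketch of a time-to-live cache in front of remote files. `TtlCache` is a hypothetical name, and the loader is a function you supply (for example one built on `fetch`); each file's download is reused until its TTL expires, so most page views never touch Dropbox.

```javascript
// Minimal time-to-live cache for remote files (a sketch; TtlCache is a hypothetical name).
// The loader is supplied by the caller, e.g. url => fetch(url).then(r => r.text()).
class TtlCache {
  constructor(loader, ttlMs, now = () => Date.now()) {
    this.loader = loader;     // (url) => string | Promise<string>
    this.ttlMs = ttlMs;       // how long a downloaded copy stays fresh
    this.now = now;           // injectable clock, handy for tests
    this.entries = new Map(); // url -> { value: Promise<string>, expires: number }
  }

  get(url) {
    const hit = this.entries.get(url);
    if (hit && hit.expires > this.now()) {
      return hit.value; // still fresh: reuse the cached promise, no download
    }
    // Cache the promise itself so concurrent requests share one download.
    const value = Promise.resolve(this.loader(url));
    this.entries.set(url, { value, expires: this.now() + this.ttlMs });
    // If the download fails, drop this entry (unless a newer one replaced it) so the next call retries.
    value.catch(() => {
      if (this.entries.get(url)?.value === value) this.entries.delete(url);
    });
    return value;
  }

  // Force the next get() to re-download, e.g. right after editing the file.
  invalidate(url) {
    this.entries.delete(url);
  }
}
```

With something like `new TtlCache(url => fetch(url).then(r => r.text()), 60000)`, an edit to Schedule.xml in Dropbox would reach the site within about a minute. The one-minute TTL is an assumption; the post does not say how long the real site cached the files.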

```xml
<appSettings>
  <!-- Public Dropbox links to the XML "database" files -->
  <add key="url.playerUrl" value="https://dl.dropboxusercontent.com/s/fancypantsguid/VideoStreams.xml" />
  <add key="url.scheduleUrl" value="https://dl.dropboxusercontent.com/s/fancypantsguid/Schedule.xml" />
  <add key="url.speakerUrl" value="https://dl.dropboxusercontent.com/s/fancypantsguid/Speakers.xml" />
  <!-- Windows Azure Service Bus connection string -->
  <add key="Microsoft.ServiceBus.ConnectionString" value="Endpoint=sb://[your namespace].servicebus.windows.net;SharedSecretIssuer=owner;SharedSecretValue=[your secret]" />
</appSettings>
```

The code is simple, as code should be. Want to see the schedule markup? Yes, it's a `<table>`. It's a table of the schedule. Nyah!

```cshtml
@{
    // Look the zone up once; on Windows, "Pacific Standard Time" covers both PST and PDT.
    var pstZone = TimeZoneInfo.FindSystemTimeZoneById("Pacific Standard Time");
}
@foreach (var session in schedule)
{
    // Session times are stored as Pacific wall-clock times; convert each one to UTC for display.
    var confTime = session.Time;
    var attendeeTime = TimeZoneInfo.ConvertTimeToUtc(confTime, pstZone);
    <tr>
        <td>
            <p>@confTime.ToShortTimeString() (PDT)</p>
            <p>@attendeeTime.ToShortTimeString() (GMT)</p>
        </td>
        <td>
            <div class="speaker-info">
                <h4>@session.Title</h4>
                <span class="company-name"><a class="speaker-website" href="/speakers.cshtml?speaker=@session.Twitter">@session.Name</a></span>
                <p>@session.Abstract</p>
            </div>
        </td>
    </tr>
}
```

Scaling
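The Razor loop converts each Pacific session time to UTC on the server. For comparison, a browser-side JavaScript sketch could keep each session start as a UTC instant and render it in any zone with the standard `Intl.DateTimeFormat` API. `formatSessionTime` and `sessionTimes` are hypothetical helpers, and storing UTC instants is a different choice from the original, which stores Pacific wall-clock times.

```javascript
// Sketch: format one instant as "HH:MM" in a given IANA time zone.
function formatSessionTime(instant, timeZone) {
  const parts = new Intl.DateTimeFormat("en-US", {
    timeZone,
    hour: "2-digit",
    minute: "2-digit",
    hourCycle: "h23", // 24-hour clock, 00-23
  }).formatToParts(instant);
  const hour = parts.find(p => p.type === "hour").value;
  const minute = parts.find(p => p.type === "minute").value;
  return `${hour}:${minute}`;
}

// Show the same session start in Pacific time (DST-aware) and in UTC.
function sessionTimes(instant) {
  return {
    pacific: formatSessionTime(instant, "America/Los_Angeles"),
    utc: formatSessionTime(instant, "UTC"),
  };
}
```

Because the zone database handles daylight saving, the same code prints PDT in spring and PST in winter without any hard-coded offset.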

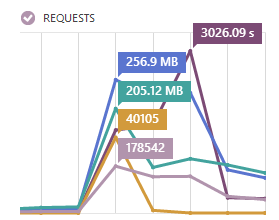

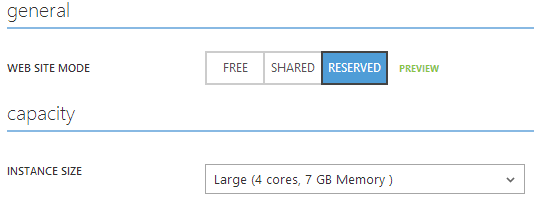

We started with a single Extra Small Windows Azure Web Site instance, then scaled up to two Large instances (and eventually scaled back to two Medium instances, since Large turned out to be overkill).

We scaled up (and therefore paid more) only during the conference; once it was over, we scaled the site back down to small instances. There's no need to spend money when you don't have to.

Real-time site update with SignalR

Because the YouTube stream link changes with each speaker, attendees would have had to refresh the page in their browser to get the new URL. There are plenty of solutions you might think of, such as a meta refresh tag or polling on a timer, but none of them update the page on demand. We also wanted to show intro videos between speakers: while one of us was getting the next speaker ready, viewers would see and watch short intros.

We noticed this problem around 10 p.m. the night before the conference. Javier and I got on Skype and came up with the following hack.

What if every viewer had a SignalR client (SignalR is a library for real-time communication between an HTML/JS client and the server) running while watching the video? Then we could push the next video to viewers directly from an admin console.

Let me explain. There's the viewer (you), the admin (or admins), and the server (the host). The viewer loads the main page, which runs the following JavaScript using the SignalR library:

```javascript
$(function () {
    // Proxy for the server-side YouTubeHub (SignalR camel-cases the hub name)
    var youtube = $.connection.youTubeHub;
    $.connection.hub.logging = true;

    // Called by the server with the short ID of the next YouTube video
    youtube.client.updateYouTube = function (message) {
        $("#youtube").attr("src", "http://www.youtube.com/embed/" + message + "?autoplay=1");
    };

    $.connection.hub.start();

    // If the connection drops, try to reconnect after 5 seconds
    $.connection.hub.disconnected(function () {
        setTimeout(function () {
            $.connection.hub.start();
        }, 5000);
    });
});
```

The viewer listens, or rather waits, for a SignalR message from the server carrying the short ID of a YouTube video. When the message arrives, we swap the iframe's `src`. Simple, and it works.
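The viewer code builds the iframe URL by plain string concatenation. A small guard is cheap insurance against a typo or stray text pushed from the admin console. This sketch uses a hypothetical `youTubeEmbedUrl` helper, assumes YouTube video IDs are 11 characters from `[A-Za-z0-9_-]`, and uses https, whereas the original snippet uses http.

```javascript
// Sketch: turn a pushed YouTube video ID into an iframe embed URL.
// Assumption: YouTube IDs are 11 characters from [A-Za-z0-9_-]; anything else is rejected.
const YOUTUBE_ID = /^[A-Za-z0-9_-]{11}$/;

function youTubeEmbedUrl(videoId) {
  if (typeof videoId !== "string" || !YOUTUBE_ID.test(videoId)) {
    return null; // refuse to put arbitrary text into the iframe src
  }
  const url = new URL(`https://www.youtube.com/embed/${videoId}`);
  url.searchParams.set("autoplay", "1");
  return url.toString();
}
```

In the viewer's `updateYouTube` callback, the concatenation could then become `var src = youTubeEmbedUrl(message); if (src) { $("#youtube").attr("src", src); }`, so a bad message leaves the current video playing instead of breaking the player.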

Below is the admin console markup we used to send the new YouTube video ID to the waiting viewers (this is Razor from ASP.NET Web Pages and WebMatrix, so HTML and JS are mixed together):

```cshtml
<div id="container">
    <input type="text" id="videoId" name="videoId">
    <input type="password" id="password" name="password" placeholder="password">
    <button id="playerUpdate" name="playerUpdate">Update Player</button>
</div>

@section SignalR {
    <script>
        $(function () {
            var youtube = $.connection.youTubeHub;
            $.connection.hub.logging = true;

            // Wire up the button only once the connection is established
            $.connection.hub.start().done(function () {
                $('#playerUpdate').click(function () {
                    // Ask the hub to push this video ID to every connected viewer
                    youtube.server.update($('#videoId').val(), $('#password').val());
                });
            });

            // Reconnect 5 seconds after a dropped connection
            $.connection.hub.disconnected(function () {
                setTimeout(function () {
                    $.connection.hub.start();
                }, 5000);
            });
        });
    </script>
}
```

We send the short video ID and a password. This must be really complicated, right? What does the mighty SignalR backend, running in the cloud on top of the Windows Azure Service Bus, look like? Surely the code is far too big to show in a blog post? Relax, friends.
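Both the viewer and the admin page use the same recovery rule: when the connection drops, wait five seconds and call `start()` again. Stripped of SignalR, the rule fits in a few lines. In this sketch, `reconnectOnDrop` is a hypothetical helper and `connection` is a hypothetical object with `start()` and `onDisconnected(callback)`, not the SignalR API; the timer function is injectable so the logic can be checked without actually waiting.

```javascript
// Sketch of the fixed-delay reconnect rule used by both pages.
// `connection` is a hypothetical object exposing start() and onDisconnected(callback).
function reconnectOnDrop(connection, delayMs = 5000, schedule = setTimeout) {
  connection.onDisconnected(() => {
    // Wait a fixed delay, then try to connect again.
    schedule(() => connection.start(), delayMs);
  });
  // Initial connection attempt.
  return connection.start();
}
```

A fixed delay keeps things simple for a one-day event; if the server goes down entirely, every client retries on the same five-second rhythm.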

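```csharp
using System;
using Microsoft.AspNet.SignalR;

public class YouTubeHub : Hub
{
    // Called from the admin console as youtube.server.update(...)
    public void update(string message, string password)
    {
        // Case-insensitive check that also tolerates a missing (null) password
        if (string.Equals(password, "itisasecret", StringComparison.OrdinalIgnoreCase))
        {
            // Push the new video ID to every connected viewer...
            Clients.All.updateYouTube(message);
            // ...and record it as the current player URL (ConfContext is the site's own helper)
            ConfContext.SetPlayerUrl(message);
        }
    }
}
```

This is both a purist's nightmare and a pragmatist's dream. Either way, we ran this code during the conference and it worked. Between sessions we pushed links to pre-recorded intro videos, and just before each talk we pushed the link to that speaker's stream.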

We also updated the video stream URLs in the Dropbox files, so that newly arriving visitors would get the current video right away instead of waiting for the next push.
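Stripped of SignalR, the hub plus the "remember the current stream" trick is an in-memory publish/subscribe channel that also keeps the last message for late joiners. Here is a framework-free JavaScript sketch of that idea. `PlayerHub`, `connect`, and the callback shape are hypothetical, and the shared password mirrors the original's deliberately pragmatic approach rather than a recommendation.

```javascript
// Sketch of the hub's behavior: password-checked broadcast plus "last value" for newcomers.
class PlayerHub {
  constructor(secret) {
    this.secret = secret.toLowerCase(); // the C# hub compares case-insensitively
    this.clients = new Set();           // callbacks of currently connected viewers
    this.current = null;                // last video ID pushed (stands in for the stored stream URL)
  }

  // A viewer connects: remember its callback and immediately send the current video, if any.
  connect(onVideo) {
    this.clients.add(onVideo);
    if (this.current !== null) onVideo(this.current);
    return () => this.clients.delete(onVideo); // call to disconnect
  }

  // Admin console: push a new video ID to everyone; wrong or missing passwords are ignored.
  update(videoId, password) {
    if (typeof password !== "string" || password.toLowerCase() !== this.secret) {
      return false;
    }
    this.current = videoId;
    for (const send of this.clients) send(videoId);
    return true;
  }
}
```

Keeping the last value next to the broadcast is what spares a newcomer from staring at a blank player until the next push.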

What about scaling out? We had a couple of machines in the web farm, so SignalR had to push the updated video links to viewers connected to any of them. That took another 10 minutes.

Scaling with SignalR using the Windows Azure Service Bus

We used SignalR 1.1 Beta with Azure Service Bus Topics to scale out, so we added Service Bus to our Windows Azure account. Our application's startup code changed: we added a call to the UseServiceBus() method:

```csharp
using System.Web.Routing;
using Microsoft.AspNet.SignalR;

// Startup code (SignalR 1.1): relay hub messages between servers through Service Bus Topics
string connectionString = "Endpoint=sb://dotnetconf-live-bus.servicebus.windows.net/;SharedSecretIssuer=owner;SharedSecretValue=g57totalsecrets=";
GlobalHost.DependencyResolver.UseServiceBus(connectionString, "dotnetconf");
RouteTable.Routes.MapHubs();
```

SignalR now uses Service Bus Topic subscriptions as a publish/subscribe mechanism to relay messages between the two web servers. I can push a message from server Web 1, and it will be delivered over the real-time SignalR connection to everyone connected to Web 1 as well as to Web 2 (or Web N).
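The backplane idea is easy to model: every web server publishes outgoing messages to a shared topic and delivers whatever arrives from that topic to its own local connections. This in-memory JavaScript sketch uses hypothetical `Bus` and `WebServer` classes to stand in for Service Bus Topics and the SignalR servers; it illustrates the fan-out only and is not the Service Bus API.

```javascript
// Sketch: a shared topic that fans every message out to all subscribed servers.
class Bus {
  constructor() {
    this.subscribers = [];
  }
  subscribe(handler) {
    this.subscribers.push(handler);
  }
  publish(message) {
    for (const handler of this.subscribers) handler(message);
  }
}

// Each server only knows its own connected viewers; the bus links the servers together.
class WebServer {
  constructor(bus) {
    this.bus = bus;
    this.viewers = new Set();
    bus.subscribe(message => this.deliverLocally(message)); // one subscription per server
  }
  connect(onMessage) {
    this.viewers.add(onMessage);
  }
  // Called by the hub on this server: publish to the bus instead of only local viewers.
  broadcast(message) {
    this.bus.publish(message);
  }
  deliverLocally(message) {
    for (const send of this.viewers) send(message);
  }
}
```

Without the bus, a broadcast would reach only the viewers on whichever server happened to handle the admin's request, which is exactly the problem a second web server introduces.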

We'll delete this Service Bus topic as soon as we're done with the conference. I really don't want to keep getting a bill for a few pennies. ;) Here is the Windows Azure pricing for this service:

432,000 Service Bus messages cost 432,000 / 10,000 × $0.01 = 43.2 × $0.01 ≈ $0.43 per day, or 44 × $0.01 = $0.44 per day if the partial block of 10,000 messages is billed as a whole one.
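The same arithmetic as a small JavaScript function, assuming the $0.01 per 10,000 messages rate quoted above. `dailyServiceBusCost` and its `roundUpBlocks` flag are hypothetical; the flag only switches between a proportional charge and billing a partial block as a whole one. The price is kept in cents until the last step to keep the numbers tidy.

```javascript
// Sketch: daily Service Bus messaging cost at $0.01 per 10,000 messages (the rate quoted in the post).
const MESSAGES_PER_BLOCK = 10000;
const CENTS_PER_BLOCK = 1;

function dailyServiceBusCost(messagesPerDay, roundUpBlocks = false) {
  const blocks = messagesPerDay / MESSAGES_PER_BLOCK;
  const billedBlocks = roundUpBlocks ? Math.ceil(blocks) : blocks;
  return (billedBlocks * CENTS_PER_BLOCK) / 100; // convert cents to dollars
}
```

For a sense of scale, a steady five messages per second is 5 × 86,400 = 432,000 messages a day, so even constant chatter between two web servers stays well under a dollar.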

I'm not sure how many messages we actually sent, but I'm confident they won't cost us much.

Thanks to the community!

- Many thanks to designer Jin Yang, who created the dotnetConf logo and design. Thank him on Twitter at @jzy. Thanks to Dave Ward for turning the design into HTML!

- Thanks and respect to Javier Lozano for his coding, his organizing, his brainstorming, and his tireless hard work. Thanks to him for spending a few late-night hours with me while we wrote the SignalR code and updated the DotNetConf.net website;

- Thanks to David Fowler for the line "adding Service Bus only takes 10 minutes";

- Thanks to Eric Hexter and Jon Galloway for their organizational skills and the time they spent on all the *Conf conferences!

- But most of all, I want to thank the speakers who gave their time and delivered the talks, and the community that tuned in to the broadcast and joined the conversation!

Source: https://habr.com/ru/post/178193/
