Cisco UCS Manager. Management Interface Overview

Following up on our post about unpacking the Cisco UCS that arrived at our Competence Center, this time we will talk about Cisco UCS Manager, which is used to configure the entire system. We did this work while preparing to test FlexPod (Cisco UCS + NetApp in MetroCluster mode) for one of our customers.

Here are the main advantages of Cisco UCS that led us to choose this particular system:

- Cisco UCS is a unified system, not a scattered group of servers with single management bolted on;

- a unified fabric with embedded management, a universal transport, no switching layer in the chassis or at the hypervisor level (thanks to the Virtual Interface Card), and simple cabling;

- a single converged fabric for the whole system (unified management of up to 320 servers), whereas competitors have a separate fabric for each chassis (up to 16 servers);

- a single point of management and quick configuration through policies and profiles, which reduces risk during configuration, deployment, and replication and provides high scalability.

Traditional blade systems have the following disadvantages:

- from a management point of view, each server blade is conceptually the same rack or tower server, only in a different form factor;

- each server is a complex system with a large number of components and a large number of powerful management tools;

- each chassis, each server, and in most cases each switch must be configured separately, most often manually;

- “umbrella” “unified” management systems exist, but in most cases they are just a set of links to individual utilities;

- “upward” integration of these systems is possible, but limited to “standard” simple interfaces.

Unlike competitors, Cisco UCS has a well-thought-out management system: UCS Manager. This product has the following features:

- it does not require a separate server, DBMS, OS, or licenses for them, because it is part of UCS;

- it does not require installation and configuration: just turn on the system and set the IP address;

- it has built-in fault tolerance;

- it has efficient and simple configuration backup tools;

- it is updated with a few “clicks”;

- it exposes all of its functionality “upward” through an open XML API;

- it costs $0.00.
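To give a feel for what working through the XML API looks like, here is a minimal sketch of the first step, authentication. It only builds the request body and parses a reply; it does not contact a real system. The method name `aaaLogin`, the attributes `inName`, `inPassword`, and `outCookie`, and the `/nuova` endpoint path are given as we understand the API and should be checked against the programmer's guide for your version. The host address and credentials are placeholders.

```python
import xml.etree.ElementTree as ET


def login_url(host: str) -> str:
    # Assumption: the XML API is served at the /nuova path on the
    # UCS Manager address. Verify this for your UCS Manager version.
    return f"https://{host}/nuova"


def build_login_request(username: str, password: str) -> bytes:
    """Build the XML body of an aaaLogin request (nothing is sent)."""
    element = ET.Element(
        "aaaLogin", {"inName": username, "inPassword": password}
    )
    return ET.tostring(element)


def extract_cookie(response_body: bytes) -> str:
    """Pull the session cookie out of an aaaLogin response."""
    root = ET.fromstring(response_body)
    return root.attrib["outCookie"]


if __name__ == "__main__":
    # 192.0.2.10 is a documentation address, not a real Fabric Interconnect.
    print(login_url("192.0.2.10"))
    print(build_login_request("admin", "secret").decode())
```

The cookie returned by the login call is then passed with subsequent requests, so an external tool gets the same single point of management that the GUI uses.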

Cisco UCS Manager itself runs on both Fabric Interconnects, so all settings, policies, and so on are also stored on them.

Up to 20 blade chassis can be connected to one pair of Fabric Interconnects. Every chassis is managed from a single point, UCS Manager. This is possible because the Fabric Interconnect is built as a distributed switch that includes the Fabric Extenders. Each chassis has two Fabric Extenders, each of which can connect 4 or 8 links of 10 Gb/s.
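The link counts above translate into nominal chassis bandwidth with simple arithmetic. The sketch below uses only the figures from the text (two Fabric Extenders per chassis, 4 or 8 links each, 10 Gb/s per link); the function name is ours, and the result is a nominal upper bound, not measured throughput.

```python
LINK_SPEED_GBPS = 10   # each Fabric Extender link is 10 Gb/s
FEX_PER_CHASSIS = 2    # two Fabric Extenders per chassis


def chassis_uplink_gbps(links_per_fex: int) -> int:
    """Nominal total bandwidth from one chassis to the Fabric Interconnects."""
    if links_per_fex not in (4, 8):
        raise ValueError("the text describes 4 or 8 links per Fabric Extender")
    return FEX_PER_CHASSIS * links_per_fex * LINK_SPEED_GBPS


if __name__ == "__main__":
    for links in (4, 8):
        print(f"{links} links per FEX: {chassis_uplink_gbps(links)} Gb/s per chassis")
```

With 4 links per Fabric Extender a chassis gets 80 Gb/s in total, and with 8 links it gets 160 Gb/s.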


We start with the basics: connect the console cable and set the initial IP address. Then we open a browser and connect. (Java is required, since the UCS Manager GUI is implemented in it.) The pictures below show what the program looks like after you enter the login and password and log in to UCS Manager.

LAN tab

We start the setup on the “LAN” tab. Configuring this tab, together with the “SAN” tab, is where configuration of the entire system begins. This is especially true for diskless servers, which is exactly our case.

Everything related to the network is configured here: which VLANs are allowed on which Fabric Interconnect, which ports connect the Fabric Interconnects to each other, which ports work in which mode, and much more.

This tab is also where templates for virtual network interfaces (vNICs) are configured; they are later used in the service profiles described below. By the way, a host can have up to 512 vNICs when two Cisco UCS VIC cards are installed. Each vNIC is bound to a specific VLAN or group of VLANs, and the physical host communicates over the network through these vNICs.

This many vNICs are possible thanks to the Virtual Interface Card (VIC). There are two types of VIC in the Cisco UCS blade server family: 1240 and 1280.

Each of them supports up to 256 vNICs or vHBAs. The main differences are that the 1240 is a LOM module while the 1280 is a mezzanine card, and that they differ in bandwidth.

The 1240 on its own can aggregate up to 40 Gb/s, expandable to 80 Gb/s with an Expander Card.

The 1280 can pass up to 80 Gb/s from the start.

VICs allow you to dynamically assign bandwidth to each vNIC or vHBA, and also support hardware failover.

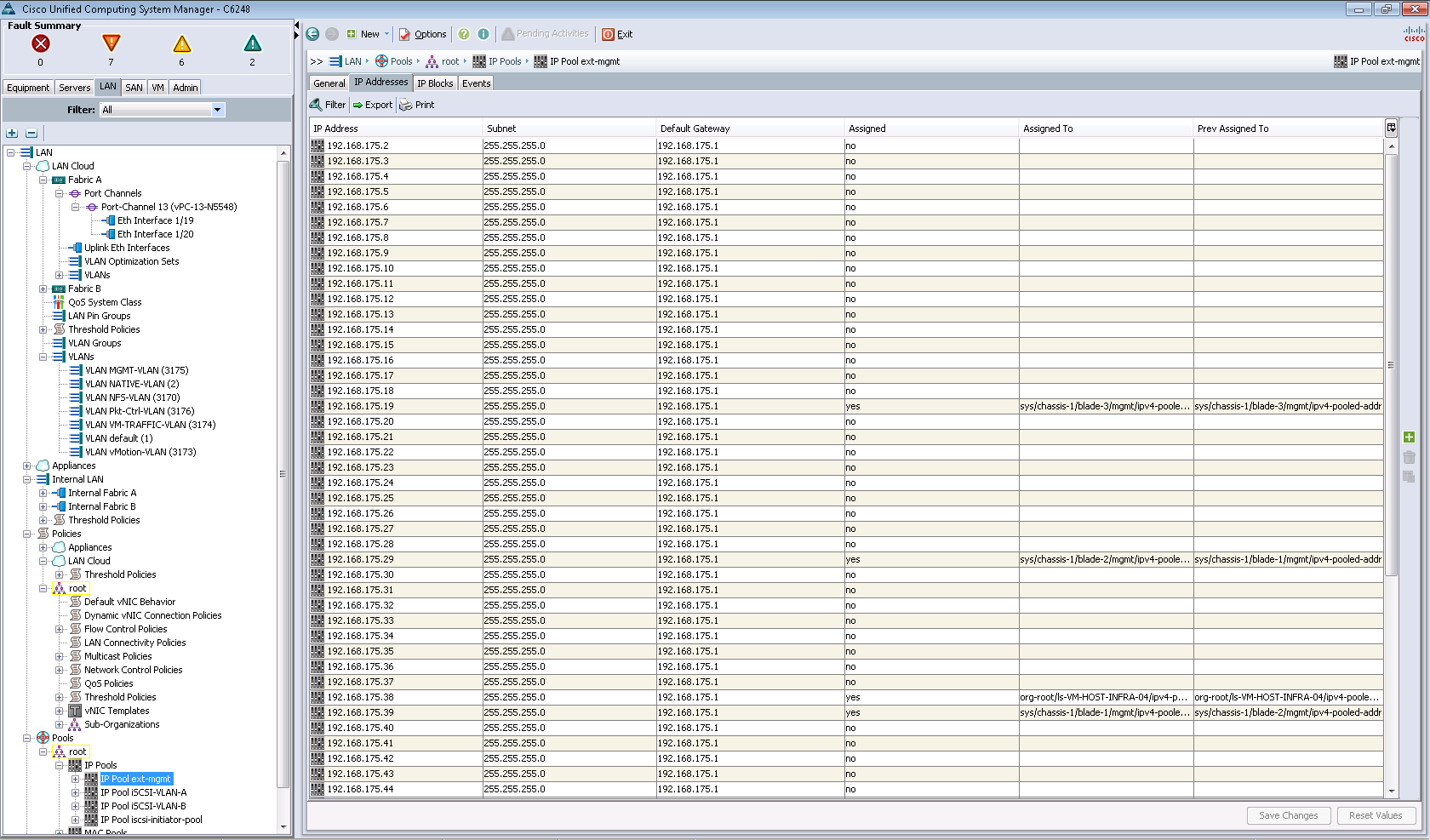

Below you can see several IP address pools that we created; addresses from these pools are assigned to network interfaces in particular VLANs (similar to HP's EBIPA). The same goes for MAC addresses, which are taken from MAC pools.

In general, the concept is simple: we create the VLAN(s), configure ports on the Fabric Interconnect, configure vNICs, give the vNICs MAC/IP addresses from pools, and put them into a service profile for future use.
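The pool idea can be illustrated with a small model. This is only our sketch of the concept, not how UCS Manager implements pools: the class name, the vNIC names, and the starting address are hypothetical example values.

```python
from dataclasses import dataclass, field
from typing import Dict


def format_mac(value: int) -> str:
    """Render a 48-bit integer as a colon-separated MAC address."""
    raw = f"{value:012X}"
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


@dataclass
class MacPool:
    """A contiguous block of MAC addresses handed out one at a time."""
    first: int                      # first address of the block, as an integer
    size: int                       # number of addresses in the block
    assigned: Dict[str, str] = field(default_factory=dict)

    def allocate(self, vnic_name: str) -> str:
        # A vNIC keeps the address it was given earlier.
        if vnic_name in self.assigned:
            return self.assigned[vnic_name]
        if len(self.assigned) >= self.size:
            raise RuntimeError("MAC pool exhausted")
        mac = format_mac(self.first + len(self.assigned))
        self.assigned[vnic_name] = mac
        return mac


if __name__ == "__main__":
    # Example block only; pick a prefix that suits your environment.
    pool = MacPool(first=0x0025B5000000, size=4)
    for vnic in ("vnic-mgmt-a", "vnic-mgmt-b"):
        print(vnic, pool.allocate(vnic))
```

The point of the model is that an address belongs to the vNIC definition rather than to a piece of hardware, so it stays the same wherever that definition is later applied.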

Important note: as you know, there are validated Cisco designs with major players in the storage market, FlexPod being one example. The FlexPod design includes Cisco server and network equipment, with NetApp acting as the storage. Why, you may ask, is network equipment included as well? The thing is that in UCS Manager versions 1.4 and 2.0 the Fabric Interconnect itself did not implement FC zoning. This required an upstream switch (for example, Cisco Nexus) connected to the Fabric Interconnect through uplink ports. UCS Manager 2.1 added support for local zoning, making it possible to connect storage directly to the Fabric Interconnect. Possible designs can be found here. Even so, you should not leave FlexPod without a switch, since FlexPod is a self-contained data center building block.

Go to the next tab: "SAN"

Everything related to the storage network is here: iSCSI and FCoE. The concept is the same as for the vNIC network adapters, but with its own specifics: WWN, WWPN, IQN, and so on.

Go to the tab "Equipment"

Through this interface, you can see information about the computing infrastructure that we deployed for testing. One chassis (Chassis 1) and four blade servers (Server1, Server2, Server3, Server4) were used. This information is available from the tree on the left side (Equipment) or in graphical form (Physical Display).

In addition to the number of chassis and servers, you can view information about auxiliary components (fans, power supplies, etc.) and about the various types of alerts. The total number of alerts is displayed in the “Fault Summary” block. Information on each node can be viewed by clicking on it in the tree on the left. The colored frames around node names on the left show which nodes have alerts. Attentive readers will also notice small icons in the graphical view.

Our Fabric Interconnects are configured to work in failover mode (if one unit fails, the other immediately takes over its work). This is shown at the very bottom of the tree by the primary and subordinate labels.

Next, go to the tab "Servers"

Here you will find the various policies, service profile templates, and the service profiles themselves. For example, we can create several organizations, each with its own boot device selection policy, access to particular VLANs/VSANs, and so on.

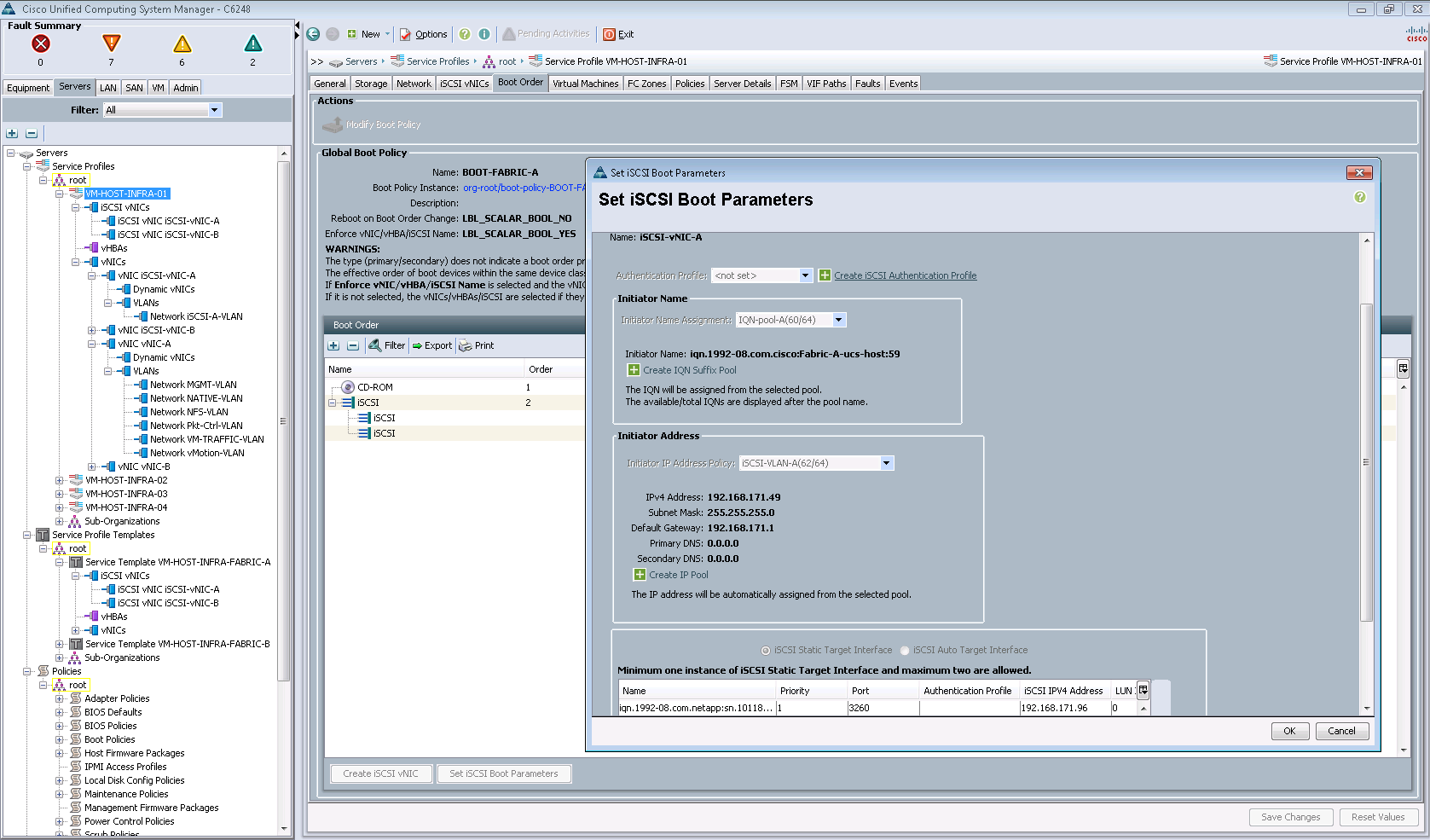

The following figure shows an example of setting up an iSCSI boot LUN.

The overall idea is this: with all policies and templates configured in advance, you can quickly bring new servers into operation using one service profile or another.
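The relationship between a template and the profiles created from it can be modeled in a few lines. This is a conceptual sketch only: the class names, field names, and policy names are hypothetical, and a real service profile carries far more settings than the two shown here.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ServiceProfileTemplate:
    """Settings defined once and shared by every profile made from the template."""
    name: str
    boot_policy: str
    vlans: Tuple[str, ...]


@dataclass(frozen=True)
class ServiceProfile:
    """A concrete profile associated with one physical server."""
    name: str
    template: str
    boot_policy: str
    vlans: Tuple[str, ...]
    server: str


def instantiate(template: ServiceProfileTemplate,
                servers: Sequence[str]) -> List[ServiceProfile]:
    """Create one service profile per server, copying the template settings."""
    return [
        ServiceProfile(
            name=f"{template.name}-{index}",
            template=template.name,
            boot_policy=template.boot_policy,
            vlans=template.vlans,
            server=server,
        )
        for index, server in enumerate(servers, start=1)
    ]


if __name__ == "__main__":
    template = ServiceProfileTemplate(
        name="esxi-iscsi",                # hypothetical template name
        boot_policy="iscsi-boot",         # hypothetical policy name
        vlans=("mgmt", "vmotion"),        # hypothetical VLAN names
    )
    blades = [f"Chassis 1/Server{n}" for n in range(1, 5)]
    for profile in instantiate(template, blades):
        print(profile.name, "->", profile.server)
```

In this model, adding a fifth blade means one more call with the same template, with no per-server settings entered by hand.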

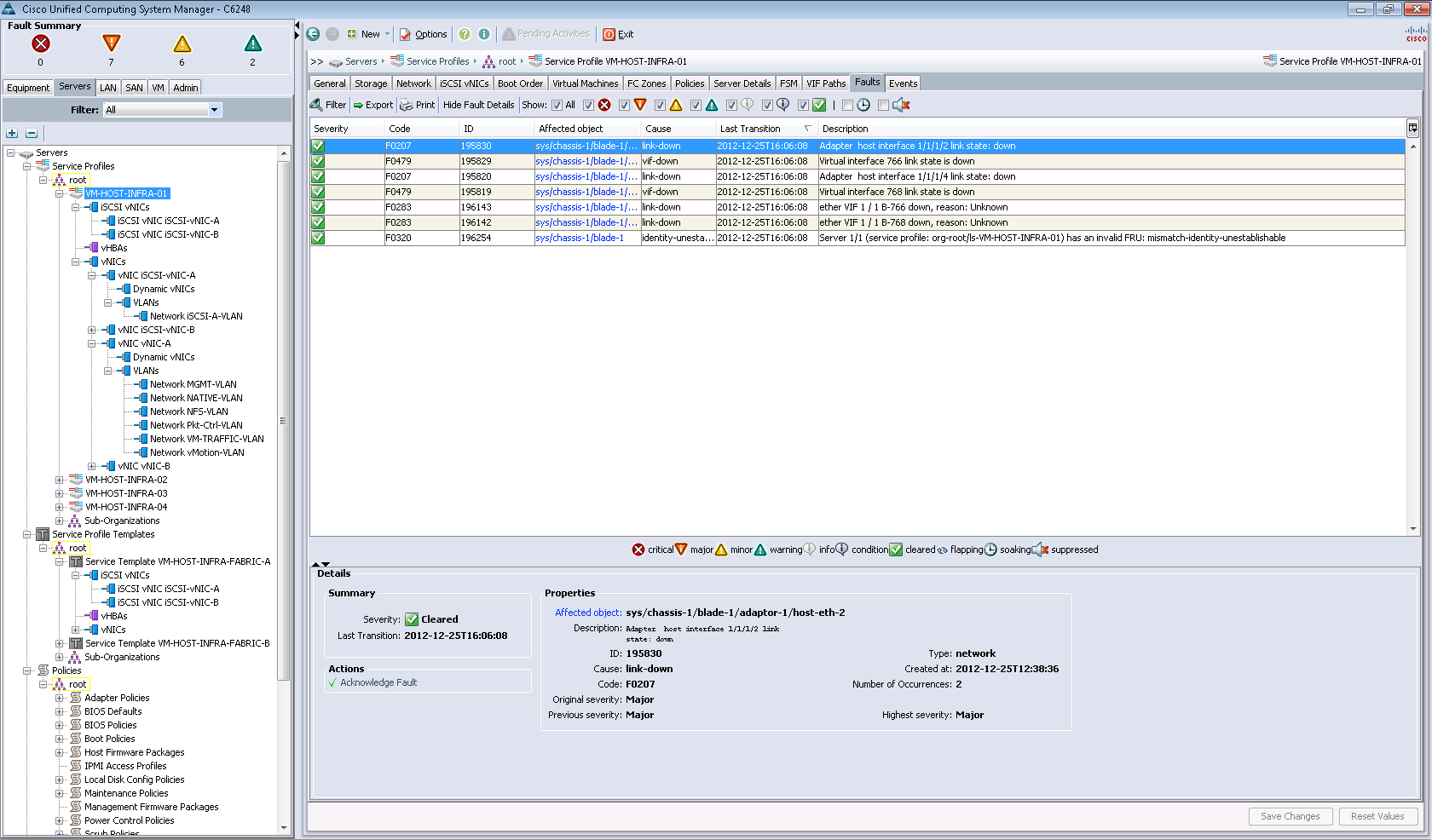

The service profile, which contains the settings above, can be applied to one specific server or to a group of servers. If any errors or messages occur during deployment, we can view them on the corresponding “Faults” tab.

After that, users of these organizations can deploy and configure a server for themselves with the chosen operating system and a set of pre-installed software. This can be done through the UCS Manager interface or through automation, for example with VMware vCloud Automation Center or Cisco Cloupia.

Go to the tab "VM"

You can install plugins for Cisco UCS Manager in VMware vCenter. We installed one and used it to connect a virtual data center named "FlexPod_DC_1". It runs Cisco Nexus 1000v (nexus-dvs), and we can view its settings.

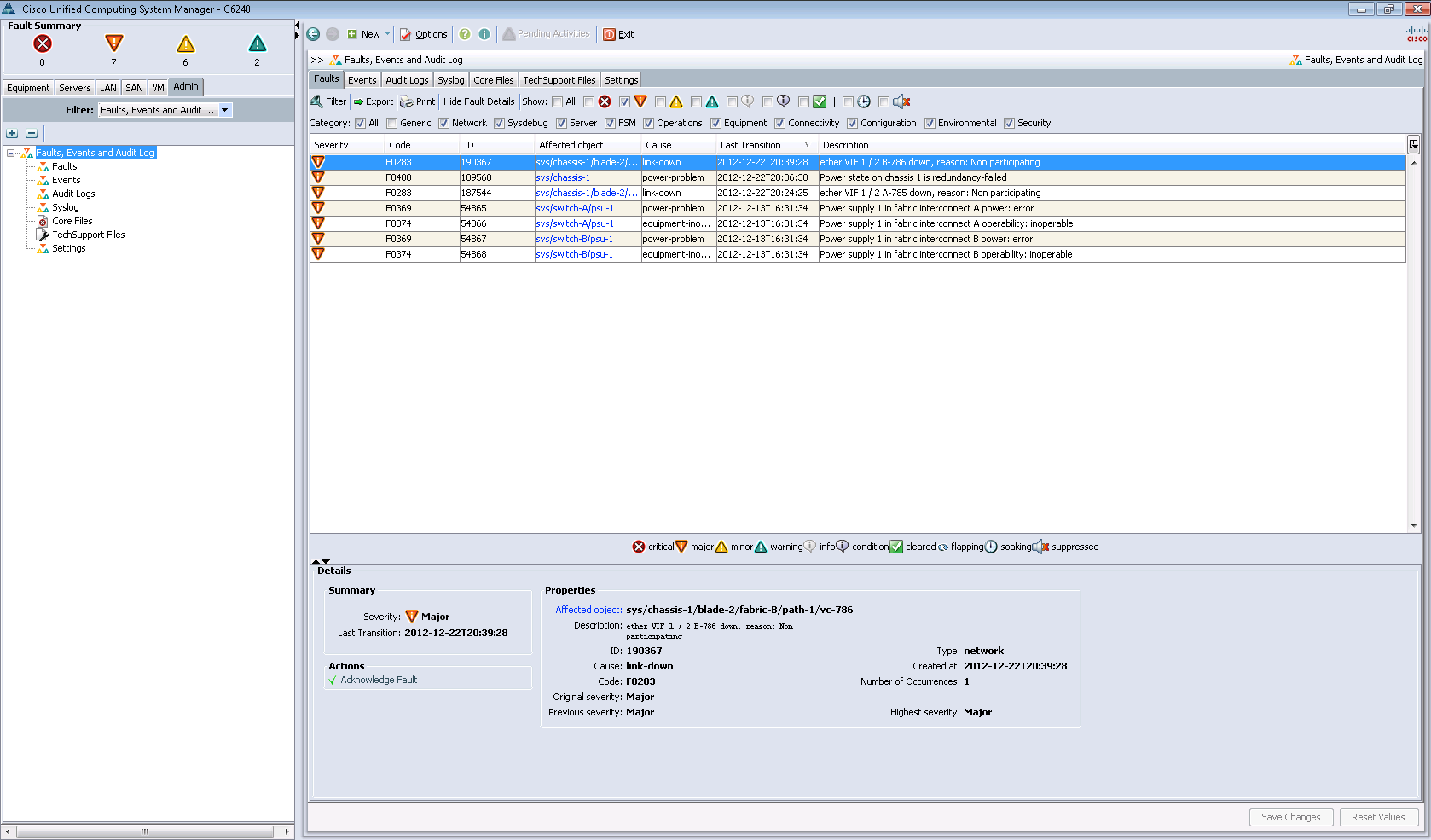

Well, the last tab: "Admin"

Here we can see global events: errors and warnings. We can also collect various data needed when contacting Cisco TAC support; in particular, it is available on the "TechSupport Files" and "Core Files" tabs.

The screenshot shows the errors caused by the loss of power. As part of testing, we switched off the power supply units in turn (Power supply 1 in Fabric Interconnect A and B) to demonstrate to the customer that the system stays stable in a simulated power outage on one power feed.

Conclusion

We would like to note that the entire process of configuring and testing FlexPod, of which configuring and testing Cisco UCS is a part, took a whole week. The lack of documentation for the latest version, UCS Manager 2.1, made the task a bit more complicated. We had to use the documentation for 2.0, which differs in a number of places. Just in case, we are posting a link to the documentation. Perhaps someone will find it useful.

In the following posts we will continue to cover the topic of FlexPod. In particular, we plan to talk about how we, together with the customer, tested NetApp in MetroCluster mode as part of FlexPod. Stay tuned! :)

Source: https://habr.com/ru/post/177283/
