Testing Canvas applications: recipes from testing the Yandex.Maps API

Although HTML5 is still under development, it is already showing up in web interfaces. One of the main innovations of this version of HTML is the Canvas element, which is used to render two-dimensional graphics. For example, everything you see and interact with in Mozilla's MMORPG or in good old Command and Conquer is drawn and processed using Canvas. The most inventive developers even implement full-fledged shapes on Canvas, or an interactive model of the solar system.


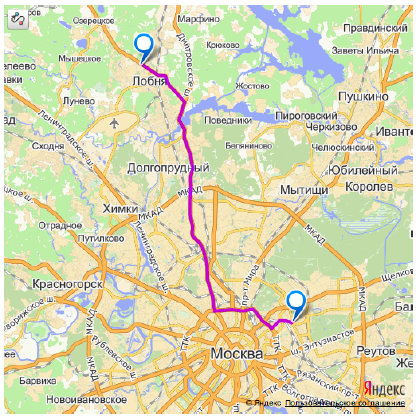


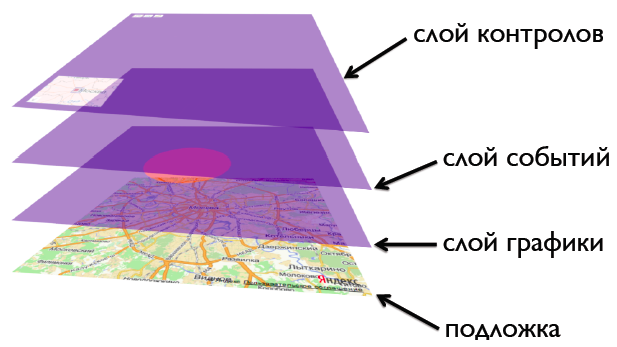

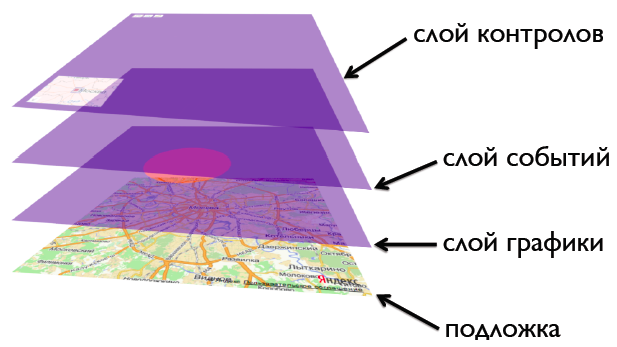


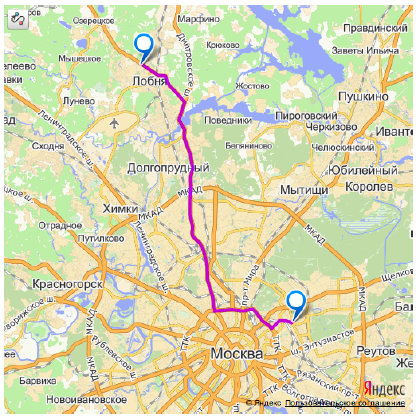


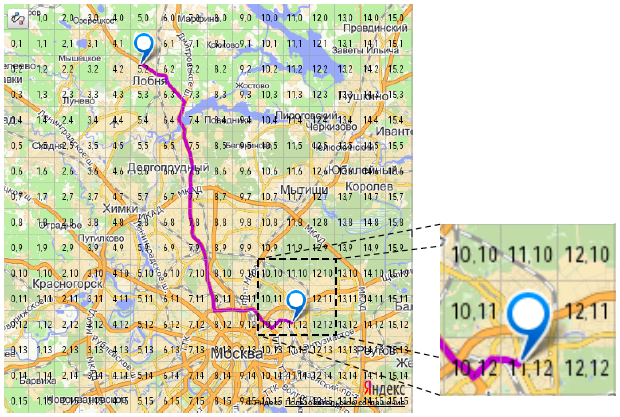

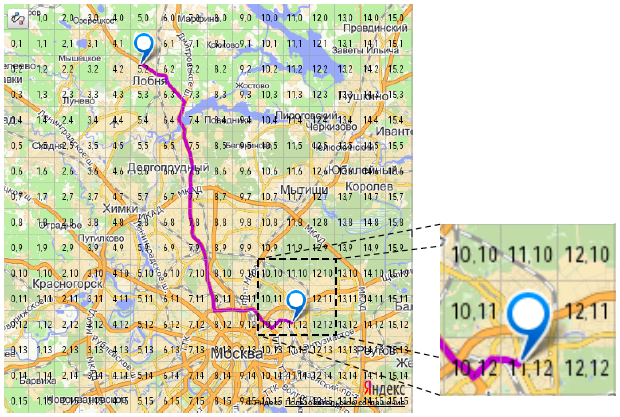

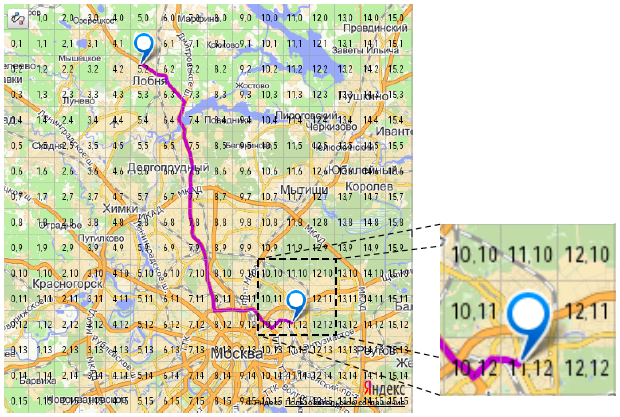

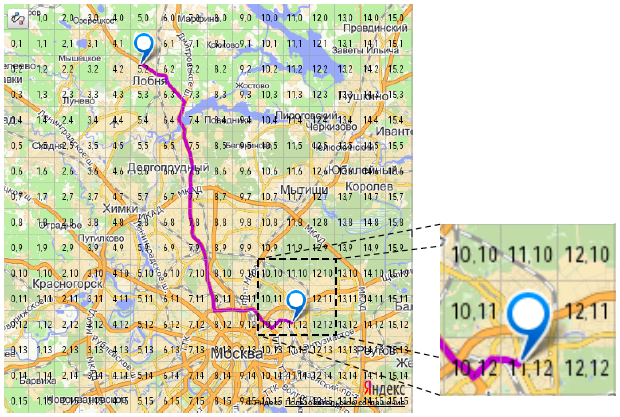


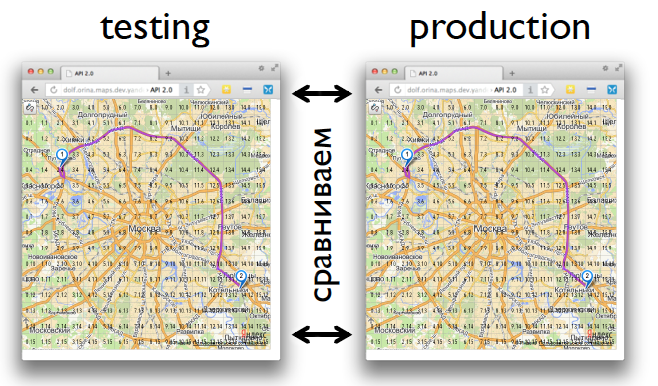


Frameworks for working with this element are springing up like mushrooms after rain, and a huge number of articles explain how to start programming with Canvas. But one topic, apparently because it is so narrow, is discussed rarely and briefly: testing Canvas applications. It is a real problem for a test engineer who is used to locating elements on a page by CSS or XPath selectors and then performing actions on them. With Canvas this approach does not work, because there is a single DOM element that contains many objects.


Below, using test automation of the Yandex.Maps API as an example, I will explain how we solved this problem at Yandex.

How web interfaces are tested

The engineer analyzes the service under test and writes Page Objects for its pages (optionally with the HtmlElements library). For nicer reports, the Thucydides framework can be used. Then, following the existing test scenarios, automated tests are written with the WebDriver API. The tester runs the resulting tests on a browser farm through Selenium Grid and looks for errors in the reports that arrive by email.

This is all simple and elegant as long as the tests do not need to interact with interactive graphics. But what if you need to click a circle on the map or drag a square from one place to another? Suppose we can locate the Canvas, but we need a specific circle. How do we click it?

Once we ran into graphics, we realized that the classic Page Object approach does not work here. We did not want to give up WebDriver, though, because it offers important advantages: the ability to run tests in all popular browsers and, for example, to execute arbitrary JavaScript on the page (extremely useful when testing a JavaScript API). The tool is also supported by a large developer community.

So our approach has to be based on WebDriver while being able to interact with every element on the page, whether or not it is represented in the DOM tree. We also need to be able to verify the result of an interaction and catch any JavaScript errors.

Interacting with elements on the page

As mentioned above, we will look at interface testing in the context of the Yandex.Maps API. So let's see what exactly the tests have to interact with.

The API produces a map that resembles a layer cake. The first, bottom layer is the map of the terrain. Above it is the graphics layer: routes, ruler lines, and labels, which can also be rendered with DOM elements other than Canvas. The third is the event layer, and on top of it sit the map controls (buttons, drop-down menus, input fields, sliders, and so on).

In this “cake” we are interested in the graphics layer, since the rest of the interface consists of separate DOM elements that are easy to click with WebDriver. Users of the Yandex.Maps API might say: "But you have an API for every graphic object, so interact with the elements on the Canvas through it."

Many engineers do use this approach to work with objects on Canvas. But it has one problem: it is far from what a real user does. An ordinary person does not open the console and call the click() method of the JavaScript object responsible for displaying a route on the map. They simply click the image with the mouse. A working click() method does not guarantee that a real click is handled correctly. So we went our own, alternative way.

To understand how best to interact with Canvas, you need to know how the program itself determines which object the user clicked. The Yandex.Maps API uses active areas technology for this. Something similar is used wherever there are interactive elements on a Canvas.

The general algorithm of active areas technology:

- The program stores information about all elements drawn on the Canvas, including their pixel coordinates.

- It catches mouse events that occur over graphic objects. This can be done directly on the Canvas. In our case, a special transparent event layer sits above the graphics layer and covers all of it.

- The coordinates of the mouse event are compared with the coordinates of the objects, and if the event occurred over an object, the corresponding handler is called for it.
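The three steps above can be sketched in a few lines. This is only an illustration of the idea, not the Yandex.Maps implementation: `createActiveAreas`, its methods, and the rectangular bounds are all assumptions made for the example (real objects such as routes would need more precise hit testing).

```javascript
// Hypothetical registry of active areas: each entry is a bounding box plus handlers.
function createActiveAreas() {
  const areas = [];
  return {
    // Step 1: remember an object drawn on the Canvas together with its pixel bounds.
    add(id, x, y, width, height, handlers) {
      areas.push({ id, x, y, width, height, handlers });
    },
    // Steps 2 and 3: take the coordinates of a mouse event, find the topmost
    // area under that point, and call its handler for this event type.
    dispatch(type, px, py) {
      for (let i = areas.length - 1; i >= 0; i--) {
        const a = areas[i];
        const inside =
          px >= a.x && px < a.x + a.width &&
          py >= a.y && py < a.y + a.height;
        if (inside) {
          const handler = a.handlers[type];
          if (handler) handler({ type, x: px, y: py, target: a.id });
          return a.id;
        }
      }
      return null; // the event did not hit any object
    },
  };
}

const clicked = [];
const activeAreas = createActiveAreas();
activeAreas.add("label", 352, 352, 32, 32, { click: (e) => clicked.push(e.target) });
activeAreas.dispatch("click", 368, 368); // inside the label: handler is called
activeAreas.dispatch("click", 10, 10);   // empty part of the map: nothing happens
```

The point for testing is that the program only ever sees coordinates, so a test that supplies the right coordinates exercises the same code path as a real click.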

So we do not need a specific graphic object: we only need to choose the coordinates of the event and dispatch it to the Canvas or to an event layer. But that is not so easy. Suppose we need to click a label. Determining its coordinates by eye is no easy task.

If we work in pixels, a 512 by 512 Canvas gives us 512x512 interaction points. That is too many. To make life easier, we divide the Canvas into a grid of squares and, for convenience, draw them with a semi-transparent background over the Canvas so that the test engineer can see them. We chose a square side of 32 pixels.
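To put numbers on this: a 512 by 512 Canvas has 262,144 pixels, but only 16 x 16 = 256 squares with a 32-pixel side. Below is a minimal sketch of computing the squares for such an overlay. The function name `gridCells` and the shape of the returned objects are assumptions for illustration, and the actual drawing of the semi-transparent overlay is left out.

```javascript
// Split an area of the given size into squares and return their positions.
// Each cell carries its grid coordinates and the pixel offset of its top-left corner.
function gridCells(width, height, cellSize) {
  const cols = Math.ceil(width / cellSize);
  const rows = Math.ceil(height / cellSize);
  const cells = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      cells.push({
        col,
        row,
        left: col * cellSize,
        top: row * cellSize,
        label: `${col},${row}`, // text to show inside the square
      });
    }
  }
  return cells;
}

const overlayCells = gridCells(512, 512, 32);
console.log(overlayCells.length); // 256
```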

Now it is clear: to click the label, you click the center of the square with coordinates [11, 11]. Knowing the side of the square, these coordinates are easily converted into ordinary pixel coordinates, at which the click on the Canvas is triggered.

    x = 11 * 32 + 32 / 2;
    y = 11 * 32 + 32 / 2;
    click(x, y); // click(368, 368);

Note that we use this approach for map controls as well, even though they can be accessed through the DOM tree. We did this so that all elements on the map are addressed in the same style. Unfortunately, WebDriver cannot dispatch an event at an arbitrary point of the window, only at a specific element of the DOM tree. So before triggering an event we determine the element to interact with, using the elementFromPoint(x, y) method of the document object. If a button is at that point, the event is dispatched to the button; if it is graphics, to the Canvas.

Verifying interaction results

When we press a button, its appearance changes: a click animation plays. In a test, this animation can be checked by querying the attributes of the DOM element responsible for the button's appearance. If the expected class has appeared, the animation took place. With objects drawn on Canvas things are different. We can no longer query a class or a position on the page. Only the Canvas itself has these attributes, not the objects drawn on it, because they are not in the DOM tree. So how do we check that a line has the right color, or that a polygon has moved after we dragged it with the mouse?

On the one hand, you can query the color and position from the JavaScript objects displayed on the Canvas. But, as noted above, nothing guarantees that the API is telling us the truth. The code may contain a bug, so JavaScript reports that the line is red while we see with our own eyes that it is blue.

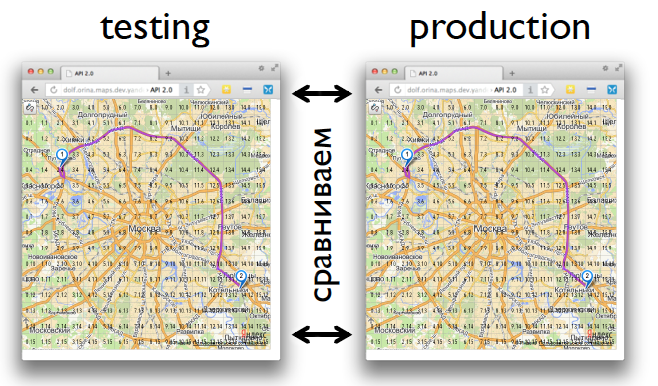

But there is a way out. It is enough to compare the appearance of a stable version of the interface with the version under test. In other words, we compare screenshots of two browser windows. We do it as follows:

- Open both versions of the interface at the same time;

- Open both in the same browser version, in different windows;

- Perform the same actions on the interface in both windows;

- At the right moment, take screenshots of the windows and compare them pixel by pixel.

This procedure removes several problems with comparing interface appearance. First, the tests do not depend on information that changes over time. Second, they are resistant to browser-dependent layout. Third, there is no need to store a reference image.
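The pixel-by-pixel comparison itself can be quite small. The sketch below assumes both screenshots have already been decoded into RGBA byte arrays of the same dimensions (decoding the image files is omitted); the function name `countDifferentPixels` is hypothetical and this is not the comparison code used at Yandex.

```javascript
// Count the pixels that differ between two RGBA buffers of the same size.
// Each pixel occupies 4 consecutive bytes: red, green, blue, alpha.
function countDifferentPixels(a, b, width, height) {
  const expected = width * height * 4;
  if (a.length !== expected || b.length !== expected) {
    throw new Error("both buffers must contain width * height * 4 bytes");
  }
  let different = 0;
  for (let i = 0; i < expected; i += 4) {
    if (
      a[i] !== b[i] ||
      a[i + 1] !== b[i + 1] ||
      a[i + 2] !== b[i + 2] ||
      a[i + 3] !== b[i + 3]
    ) {
      different++;
    }
  }
  return different;
}

// A 2x1 "screenshot": the stable version shows a red and a blue pixel,
// the tested version shows two red pixels.
const stableShot = Uint8ClampedArray.from([255, 0, 0, 255, 0, 0, 255, 255]);
const testedShot = Uint8ClampedArray.from([255, 0, 0, 255, 255, 0, 0, 255]);
console.log(countDifferentPixels(stableShot, testedShot, 2, 1)); // 1
```

A test would treat any non-zero result as a failure, or allow a small threshold if minor rendering noise is expected.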

Tracking JavaScript errors

The final part of our approach is catching JavaScript errors. At first glance it is simple: use the onerror handler of the window object. In theory all is well, but in practice this approach has one big problem. If the error occurred in a script from a host other than the one opened in the browser, we cannot read its text. What can be done?

There are two options:

- Add a specific header to the server's response (does not work in all browsers).

- Use a Firefox plugin that collects errors at the level of the browser console.

Which one to choose is up to you. Both options are viable.
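For reference, here is a minimal sketch of the window.onerror approach and of how the cross-host problem shows up. Browsers typically report an error from a script on another origin with the generic message "Script error." and no line number. The helper names `collectErrors` and `isMaskedCrossOriginError` are hypothetical; on a real page the function would be called with `window`, and here a plain object stands in for it.

```javascript
// Install a collector on a window-like object.
// Returns the array that fills up as errors are reported.
function collectErrors(win) {
  const errors = [];
  const previous = win.onerror;
  win.onerror = function (message, source, lineno, colno, error) {
    errors.push({ message: String(message), source, lineno, colno });
    // Preserve a handler that was installed earlier, if any.
    if (typeof previous === "function") {
      return previous.apply(this, arguments);
    }
    return false; // false: the browser still logs the error to the console
  };
  return errors;
}

// True when the browser hid the details of an error from another origin.
function isMaskedCrossOriginError(entry) {
  return entry.message === "Script error." && !entry.lineno;
}

const fakeWindow = {}; // stand-in for the real window object
const collected = collectErrors(fakeWindow);
fakeWindow.onerror("Script error.", "", 0, 0, null);
fakeWindow.onerror("TypeError: x is undefined", "http://localhost/app.js", 10, 5, null);
console.log(collected.filter(isMaskedCrossOriginError).length); // 1
```

A test can read the collected array back through WebDriver's JavaScript execution and fail if it is not empty; masked entries are the ones that need one of the two options above.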

What is the result?

As it turned out, testing a web interface built on Canvas can be done quite successfully with plain WebDriver. But we have decided not to stop there and are looking at ways to improve how tests interact with interfaces. Right now we dispatch JavaScript events to DOM elements; in the future we would like tests to act the same way a user does. We plan to control the mouse and keyboard for real, using awt.Robot. Stay tuned!

Source: https://habr.com/ru/post/177163/
