How does LTE cope with inter-cell interference?

For some reason, all Russian-language posts about LTE discuss only the principles of the underlying physical-layer technologies: OFDMA [1], SC-FDMA [2], a bit of MIMO [3], [4], some aspects of the architecture [5] and VoLTE [6]. All of this is certainly important and useful, but it is not everything! Beyond these topics, LTE is packed with very interesting solutions: the allocation of time-frequency resources in the uplink and downlink (various scheduler algorithms), the adaptation of modulation, coding and bandwidth to radio conditions, medium-access procedures, new types of handover, and more, all built on non-trivial approaches. But there is one more interesting question that the Habr community has somehow ignored: how does an LTE network work at all without frequency-territorial planning (Frequency Reuse Factor = 1!)? Consider older networks, say GSM (see below):

The entire frequency range was divided into sub-bands, and the main planning rule was to use different sub-bands in neighboring cells; otherwise, signals from neighboring cells would interfere with each other and spoil each other's happy life. In UMTS (WCDMA), things were somewhat more complicated: all base stations (NodeB) used the same time-frequency resource and relied on various orthogonal or pseudo-orthogonal sequences to separate signals from different cells, or signals from different subscribers within the same cell.

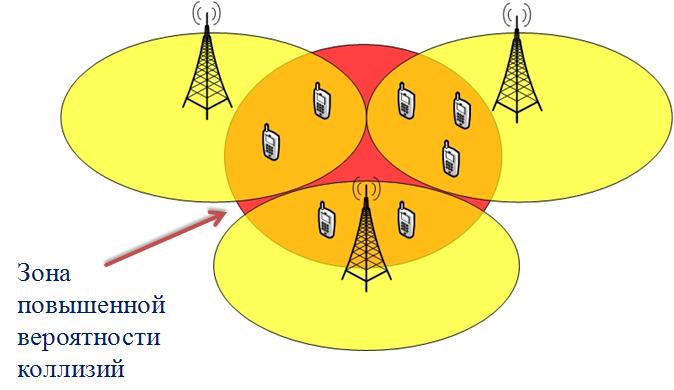

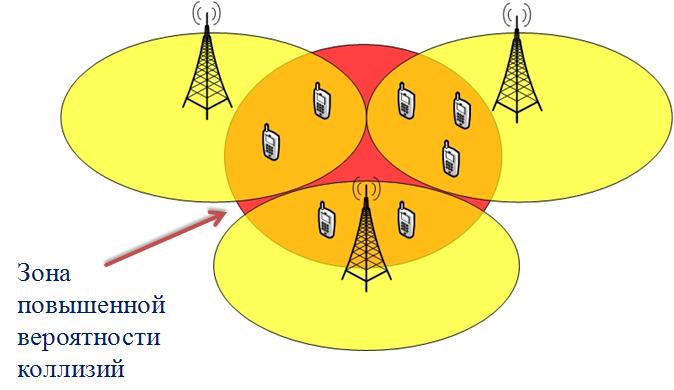
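To make the idea of separating signals with orthogonal sequences concrete, here is a minimal Python sketch. It spreads two users' bits with two orthogonal Walsh codes (built by the Sylvester-Hadamard construction, similar in spirit to WCDMA channelisation codes), adds the two chip streams as if they shared the same band and time, and recovers each user by correlating with its own code. It assumes perfectly synchronized, noise-free chips and omits WCDMA scrambling codes; the function names are hypothetical, chosen only for illustration.

```python
def walsh_codes(n):
    """Rows of an n x n Hadamard matrix (n must be a power of 2)."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h

def spread(bits, code):
    """Map bit 0 -> +1 and bit 1 -> -1, then multiply the symbol by every chip."""
    chips = []
    for b in bits:
        symbol = 1 if b == 0 else -1
        chips.extend(symbol * c for c in code)
    return chips

def despread(signal, code):
    """Correlate each code-length chunk with the code; the sign gives the bit."""
    n = len(code)
    bits = []
    for i in range(0, len(signal), n):
        corr = sum(s * c for s, c in zip(signal[i:i + n], code))
        bits.append(0 if corr > 0 else 1)
    return bits

codes = walsh_codes(4)
user_a, user_b = [0, 1, 1, 0], [1, 1, 0, 0]
tx_a = spread(user_a, codes[1])
tx_b = spread(user_b, codes[2])
channel = [a + b for a, b in zip(tx_a, tx_b)]  # both users share band and time

print(despread(channel, codes[1]))  # recovers user_a
print(despread(channel, codes[2]))  # recovers user_b
```

Because the two codes are orthogonal, the other user's contribution sums to zero in each correlation, which is exactly why UMTS could reuse the same frequency in every cell without the GSM-style sub-band split.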

One way or another, inter-cell interference (ICI) was not such an acute problem in GSM and UMTS networks. What do we see in LTE? Not only is the same frequency band used in every cell, but there is also (in general) no separation of signals by orthogonal sequences. What does this mean? If two neighboring base stations (eNBs) allocate resource blocks to their subscribers in the same frequency band at the same time, then with some probability these subscribers will interfere with each other. The worst situation occurs at the cell edges:


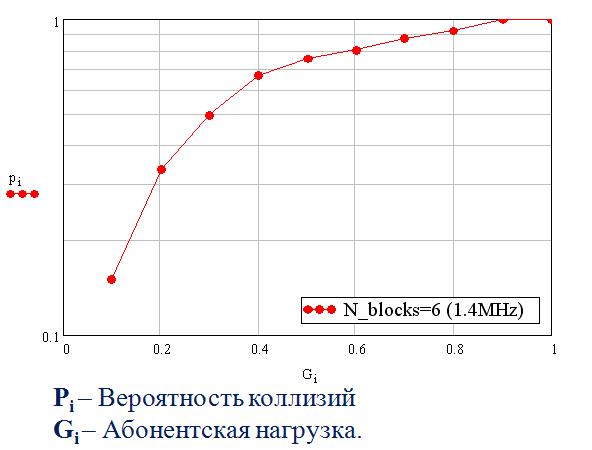

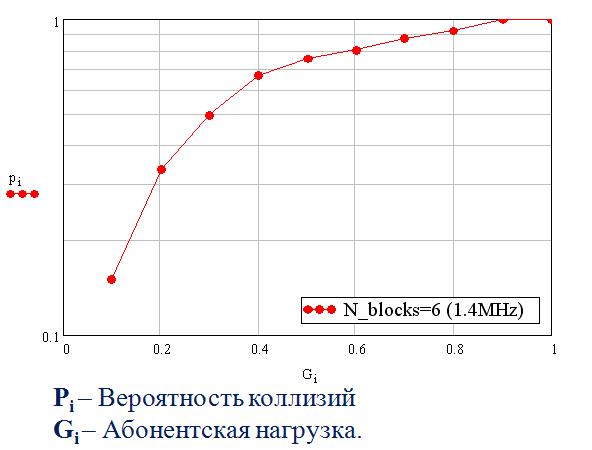
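Why the cell edge is the worst place can be shown with a quick signal-to-interference-plus-noise (SINR) calculation. The Python sketch below assumes an edge UE that hears its serving eNB at -100 dBm and the neighboring eNB at -101 dBm, with thermal noise of about -116 dBm per resource block; all three numbers are illustrative assumptions, not measurements. Powers are summed in the linear (mW) domain before converting back to dB.

```python
import math

def dbm_to_mw(p_dbm):
    """Convert a power level from dBm to milliwatts."""
    return 10 ** (p_dbm / 10)

def sinr_db(signal_dbm, interferers_dbm, noise_dbm):
    """SINR in dB: wanted power over (sum of interference + noise), in linear units."""
    interference_mw = sum(dbm_to_mw(p) for p in interferers_dbm)
    return 10 * math.log10(dbm_to_mw(signal_dbm) / (interference_mw + dbm_to_mw(noise_dbm)))

NOISE_DBM = -116.0  # assumed noise floor over one resource block (illustrative)

# Neighbor leaves this resource block idle: only noise limits the link
print(round(sinr_db(-100.0, [], NOISE_DBM), 1))
# Neighbor schedules the same resource block to its own user at the same time
print(round(sinr_db(-100.0, [-101.0], NOISE_DBM), 1))
```

With these assumed levels, the SINR drops from about 16 dB to below 1 dB as soon as the neighbor reuses the same resource block, which is enough to force a much more robust modulation and coding scheme or to corrupt the packet.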

The probability of collision here (the probability that a packet is corrupted because two or more base stations allocate the same resource to their users at the same time) clearly depends on two factors. 1) How far the subscribers are from each other, in other words, how close each of them is to its base station: if subscribers are close to the BS, the power control mechanism will most likely make the phone lower its transmit power, and as a result the overall level of inter-cell interference drops. 2) The cell load, also quite an obvious factor: the higher the load, the more likely it is that the same resource block is allocated simultaneously to subscribers at the edges of neighboring cells. If you simulate such a primitive scheduler, one that knows nothing about the load in neighboring cells, and derive the probability of packet collisions between cells (effectively an indirect measure of the inter-cell interference level), you get this dependency:

A load of one (maximum load) corresponds to the situation where all blocks of the time-frequency resource are allocated. Calling these packet corruption probabilities huge is an understatement. This is a blatantly bad interference picture, and of course hardly anyone would have released LTE with such characteristics.
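The article does not describe its simulation model, so the following is only a minimal Python sketch under an assumed model: every cell schedules a fraction `load` of `n_rb` resource blocks uniformly at random, independently of its neighbors. Under that assumption, the probability that the resource block given to an edge user is also occupied by at least one of `n_neighbours` neighbors is 1 - (1 - load)^n, and the Monte Carlo estimate should land close to it. The function name and parameters are hypothetical, and the sketch makes no attempt to reproduce the original plot.

```python
import random

def collision_probability(load, n_rb=50, n_neighbours=1, trials=20000, seed=1):
    """Monte Carlo estimate of the chance that an edge user's resource block
    is also used by at least one neighbor cell, assuming each cell schedules
    round(load * n_rb) blocks uniformly at random with no coordination."""
    rng = random.Random(seed)
    used = round(load * n_rb)  # resource blocks allocated per cell at this load
    if used == 0:
        return 0.0
    hits = 0
    for _ in range(trials):
        my_rb = rng.randrange(n_rb)  # block the serving cell gives to its edge user
        # each neighbor picks its own blocks at random, unaware of the others
        if any(my_rb in rng.sample(range(n_rb), used) for _ in range(n_neighbours)):
            hits += 1
    return hits / trials

for load in (0.2, 0.5, 0.8, 1.0):
    simulated = collision_probability(load, n_neighbours=2)
    analytic = 1 - (1 - load) ** 2
    print(f"load={load:.1f}  simulated={simulated:.3f}  analytic={analytic:.3f}")
```

Even in this simplified model, half load with two interfering neighbors already gives about a 75% chance that an edge user's block is hit, which illustrates why an uncoordinated scheduler is unacceptable.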

So what has been done in LTE to avoid this catastrophic inter-cell interference without going back to frequency reuse?

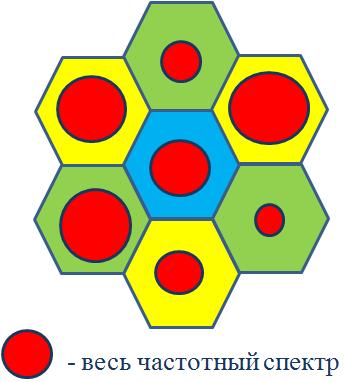

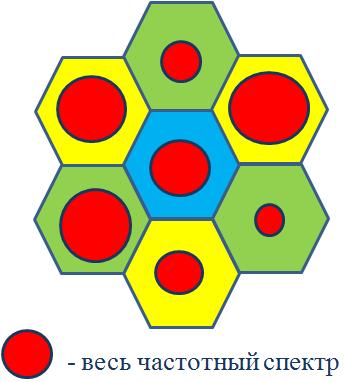

Firstly, LTE has a mechanism called ICIC (Inter-Cell Interference Coordination), and quite an interesting one, I must say. For those interested, a detailed description with all the calculations can be found in section 12.5 of the excellent book listed at the end of this article. The gist of the feature is that neighboring eNBs (base stations) exchange information about their load in the form of an Overload Indicator (OI) over the X2 interface. This effectively lets them agree among themselves which of them will use which subband at which moment. The resulting frequency-territorial distribution looks like this:

That is, the eNB can give any resource blocks to subscribers close to the antenna, while for distant subscribers the choice depends on the OI. This is by no means classic frequency reuse. It is adaptive resource allocation that follows the load in neighboring cells, and it is the main way to reduce inter-cell interference (reduce, not eliminate completely, of course).

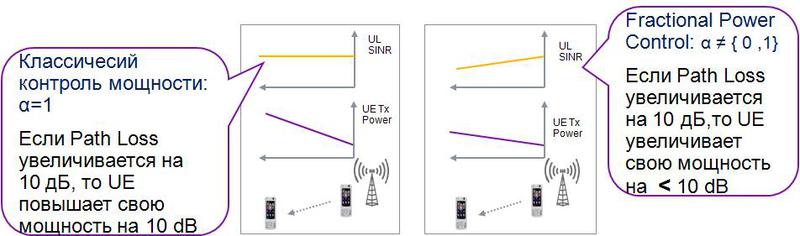

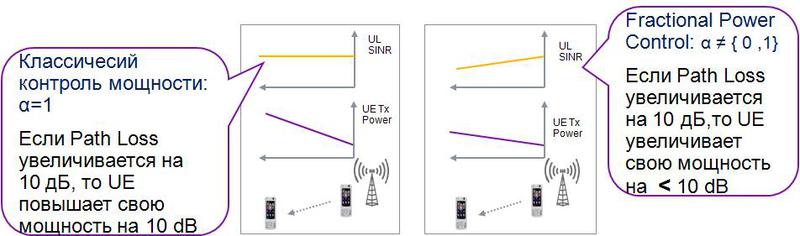
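The allocation rule just described (any block for cell-center users, OI-dependent blocks for cell-edge users) can be sketched as a toy scheduler in Python. This is a hypothetical illustration, not a real eNB algorithm: the `UE` class, the `allocate` function and the `overloaded_by_neighbours` set are invented names, and the neighbors' OI reports are simplified to a set of resource-block indices they flag as overloaded.

```python
from dataclasses import dataclass

@dataclass
class UE:
    ue_id: int
    cell_edge: bool  # hypothetical flag, e.g. derived from measured path loss

def allocate(ues, n_rb, overloaded_by_neighbours):
    """Toy ICIC-style allocation of one resource block per UE:
    cell-edge UEs only get blocks no neighbor has flagged as overloaded,
    cell-center UEs may get any free block."""
    free = list(range(n_rb))
    clean = [rb for rb in free if rb not in overloaded_by_neighbours]
    allocation = {}
    # serve edge UEs first so they get first pick of the "clean" blocks
    for ue in sorted(ues, key=lambda u: not u.cell_edge):
        if ue.cell_edge:
            pool = clean
        else:
            # center UEs tolerate interference: use flagged blocks first
            pool = sorted(free, key=lambda rb: rb not in overloaded_by_neighbours)
        if not pool:
            continue  # no suitable block left; this UE waits for the next TTI
        rb = pool[0]
        allocation[ue.ue_id] = rb
        free.remove(rb)
        if rb in clean:
            clean.remove(rb)
    return allocation

ues = [UE(1, True), UE(2, False), UE(3, True), UE(4, False)]
# assume the neighbors' OI flags blocks 0-2 as overloaded
print(allocate(ues, n_rb=6, overloaded_by_neighbours={0, 1, 2}))
```

Letting center UEs consume the flagged blocks first is a deliberate choice in this sketch: their short path loss makes them robust to the neighbor's interference, and it keeps the clean blocks available for edge users.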

In addition to such targeted mechanisms, LTE also provides indirect ways of reducing interference, for example Fractional Power Control. Whereas classic power control aimed to fully compensate for signal loss during propagation (path loss compensation), fractional power control compensates for such losses only partially.
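For reference, the uplink (PUSCH) power control in 3GPP TS 36.213 has the following general form, where $M_{\mathrm{PUSCH}}$ is the number of allocated resource blocks, $P_{\mathrm{O\_PUSCH}}$ the nominal target received power, $PL$ the UE's downlink path loss estimate, $\Delta_{\mathrm{TF}}$ an MCS-dependent offset and $f(i)$ the closed-loop correction. The factor $\alpha$ selects full ($\alpha = 1$) or fractional ($\alpha < 1$) path loss compensation:

$$
P_{\mathrm{PUSCH}}(i) = \min\Big\{P_{\mathrm{CMAX}},\; 10\log_{10} M_{\mathrm{PUSCH}}(i) + P_{\mathrm{O\_PUSCH}}(j) + \alpha(j)\cdot PL + \Delta_{\mathrm{TF}}(i) + f(i)\Big\}\ \text{[dBm]}
$$

Setting $\Delta_{\mathrm{TF}} = f = 0$ and ignoring the $P_{\mathrm{CMAX}}$ cap, the power arriving at the serving eNB is

$$
P_{\mathrm{rx}} = P_{\mathrm{PUSCH}} - PL = 10\log_{10} M_{\mathrm{PUSCH}} + P_{\mathrm{O\_PUSCH}} - (1-\alpha)\,PL ,
$$

so with $\alpha < 1$ distant UEs deliberately arrive $(1-\alpha)\,PL$ dB below the target, which is what limits the interference they cause in neighboring cells.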

The parameter that sets the path loss compensation factor is called alpha in the standard and takes values from 0 to 1. For example, an alpha of 0.8 (80% compensation of path loss) reduces inter-cell interference by 10-20%! At the same time, subscribers at the cell edge experience no noticeable problems caused by the incomplete path loss compensation.
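A small numeric sketch shows the effect of alpha. It implements only the open-loop part of LTE PUSCH power control, P = min(P_CMAX, 10·log10(M) + P0 + alpha·PL), assuming the MCS offset and the closed-loop correction are zero. The P0 values (-100 dBm for alpha = 1, -78 dBm for alpha = 0.8, chosen so both settings give the same power at a 110 dB reference path loss) and the 23 dBm maximum are illustrative assumptions; in the standard, the PUSCH alpha is actually chosen from the discrete set {0, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1}.

```python
import math

def pusch_tx_power_dbm(p0_dbm, alpha, pathloss_db, n_rb=1, p_cmax_dbm=23.0):
    """Open-loop LTE PUSCH power: min(P_CMAX, 10*log10(M) + P0 + alpha*PL).
    The MCS offset (delta_TF) and closed-loop term f(i) are assumed to be zero."""
    return min(p_cmax_dbm, 10 * math.log10(n_rb) + p0_dbm + alpha * pathloss_db)

# Illustrative settings (assumptions): P0 is raised for alpha = 0.8 so that both
# configurations transmit the same power at a reference path loss of 110 dB.
configs = {"full (alpha=1.0)": (-100.0, 1.0), "fractional (alpha=0.8)": (-78.0, 0.8)}

for name, (p0, alpha) in configs.items():
    for pl in (90.0, 120.0):  # cell-center UE vs cell-edge UE
        tx = pusch_tx_power_dbm(p0, alpha, pl)
        print(f"{name:24s} PL={pl:5.1f} dB  tx={tx:6.1f} dBm  rx={tx - pl:7.1f} dBm")
```

With these assumed settings, the cell-edge UE (PL = 120 dB) transmits at 18 dBm instead of 20 dBm, while the cell-center UE (PL = 90 dB) transmits at -6 dBm instead of -10 dBm. Power shifts away from the users whose signal leaks most strongly into neighboring cells, at the cost of a slightly lower received level for those edge users.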

There are many more parameters that can be adjusted so that the cells interfere less, but ICIC and Fractional Power Control are perhaps the two most powerful mechanisms.

A very useful book on LTE:

Stefania Sesia (ST-Ericsson, France), Issam Toufik (ETSI, France), Matthew Baker (Alcatel-Lucent), The UMTS Long Term Evolution: From Theory to Practice


Source: https://habr.com/ru/post/177097/
