Experience building a SAN on SAS

Clouds and virtualization are by now familiar terms, firmly embedded in our lives. They have also brought new problems for IT. One of the main ones: how do you ensure adequate performance of shared disk storage in a cloud (read: a virtualization cluster)?

While building our own virtualization platform, we ran tests to find the best answer to this question. Today I want to share the results.

A proper system integrator will tell you that once you install InfiniBand and a couple of 3PAR cabinets, you will never have to think about SAN performance again. But not everyone has room in the equipment room for extra cabinets of disks. What then? First of all, you need to find the bottleneck that keeps virtual machines from enjoying all 2000 IOPS that the disk shelf promises.


On our test bench, where we first learned to build a cluster, IOMeter running in a single virtual machine loaded the 2x1GbE iSCSI link to 100% and showed excellent results: almost the promised 2000 IOPS. But once there were several dozen VMs, all actively using the disk system, an unpleasant effect appeared: the network was less than half loaded, the shelf controller and its IOPS still had headroom, yet everything in the virtual machines slowed down. It turned out that under these conditions the bottleneck is latency in the storage network. And for a SAN built on 1GbE iSCSI, that latency is simply huge.
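A rough way to see why latency, and not bandwidth, becomes the limit: when a VM keeps a fixed number of requests in flight, its achievable IOPS is bounded by that number divided by the round-trip latency (Little's law). The sketch below only illustrates this relation. The function name and the latency figures are hypothetical and are not measurements from our bench.

```python
def max_iops(outstanding_ios: int, latency_ms: float) -> float:
    """Upper bound on IOPS from Little's law: throughput = concurrency / latency."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return outstanding_ios / (latency_ms / 1000.0)


# Hypothetical round-trip latencies, chosen only to show the trend.
for label, latency_ms in [("low-latency link", 0.5), ("congested link", 5.0)]:
    bound = max_iops(outstanding_ios=4, latency_ms=latency_ms)
    print(f"{label}: at most {bound:.0f} IOPS with 4 outstanding I/Os")
```

With the same queue depth, a tenfold increase in latency cuts the IOPS ceiling tenfold, no matter how much bandwidth is left on the link.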

So which protocol should you build a SAN on if the scale does not call for connecting hundreds of storage systems and thousands of servers, and everything is going to fit into two or three racks in one data center? 10GbE iSCSI? 8GFC Fibre Channel? There is a better option: SAS. And the strongest argument for it is the price/quality ratio.

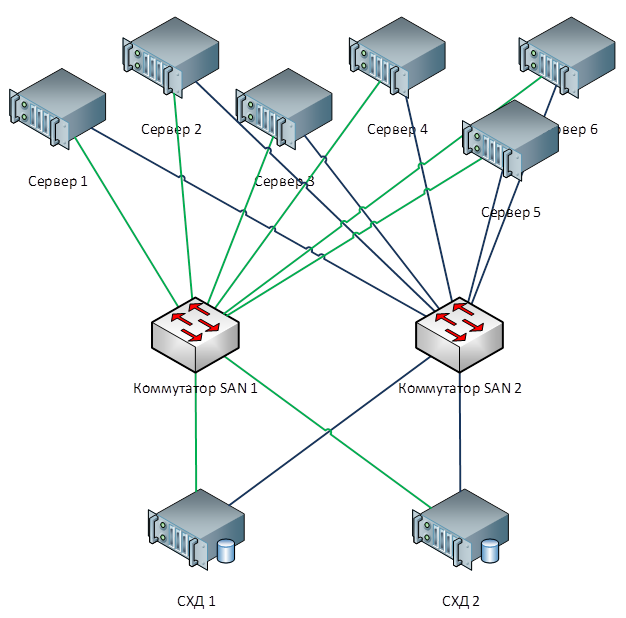

Let's compare the costs and the results of building a modest SAN with 2 storage systems and 6 client servers.

SAN Requirements:

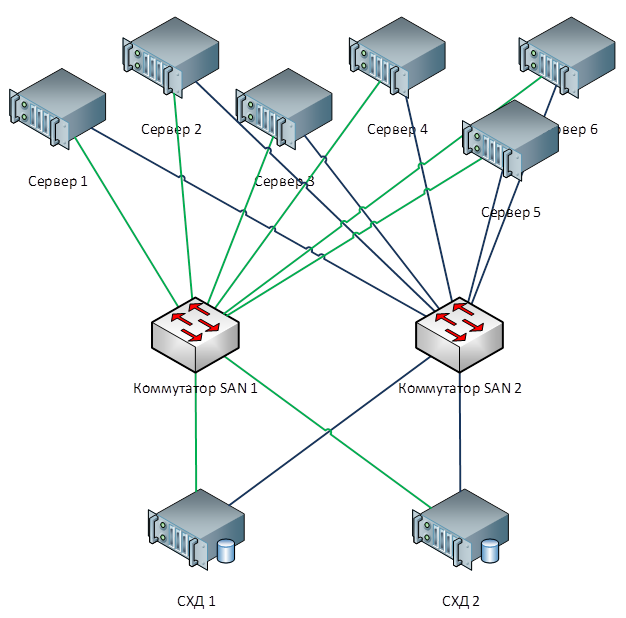

The classic solution to this problem looks like this:

Assume that the cost of storage with two iSCSI 10 Gbe, FC or SAS controllers is the same. The remaining components are listed in the table (you can pick on prices - but this is the order):

For the price solution based on SAS looks great! In fact, it is close to the cost of a solution on iSCSI 1Gbe.

This approach was used to build the first stage of the IaaS platform of the Cloud Library.

What did the tests show?

On a virtual machine running in a release SAN among 200 others, IOMeter was launched.

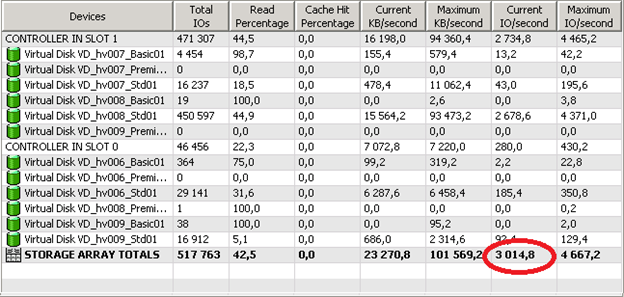

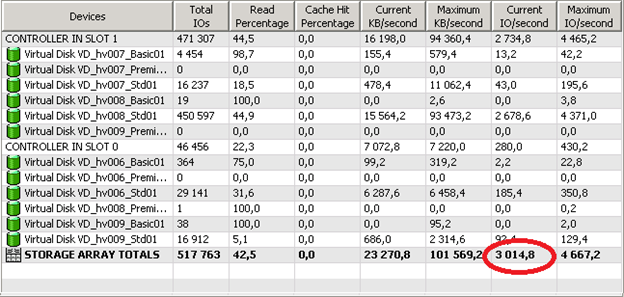

The load on the storage before the test:

The load on the storage system during the test:

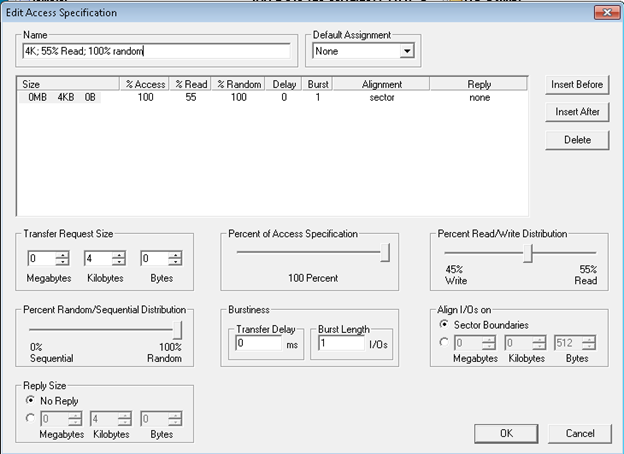

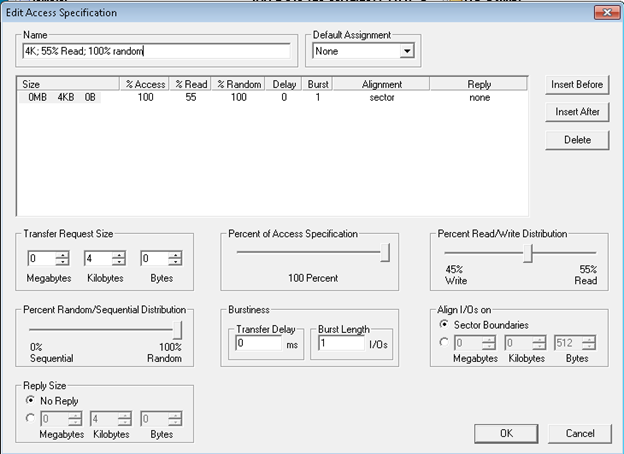

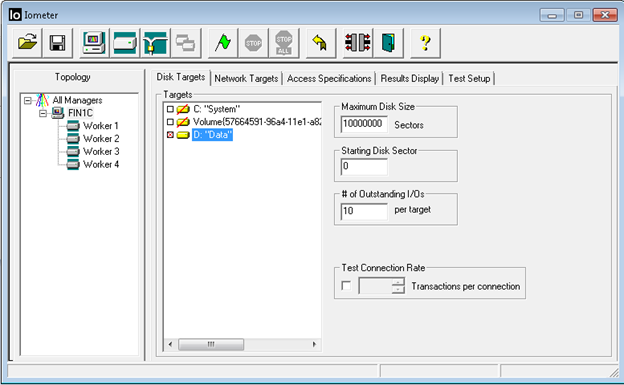

IOMeter test settings:

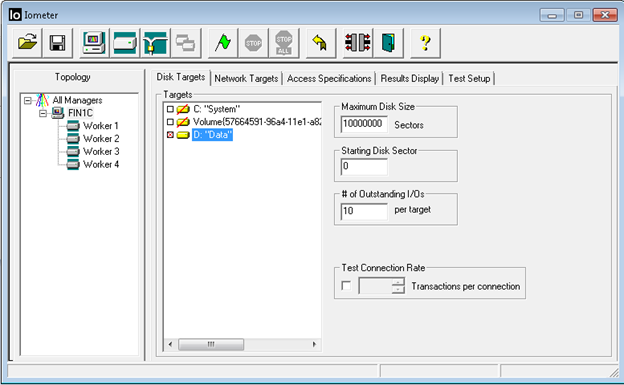

IOMeter workflow settings:

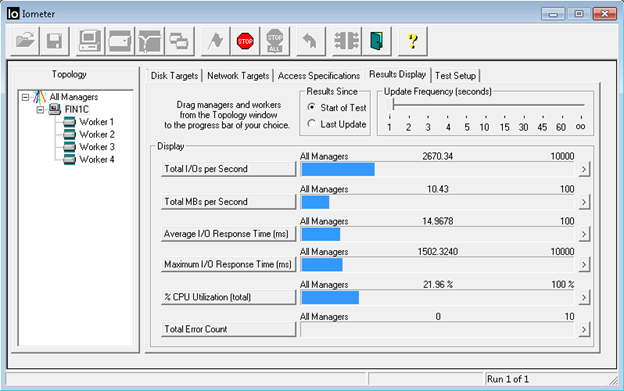

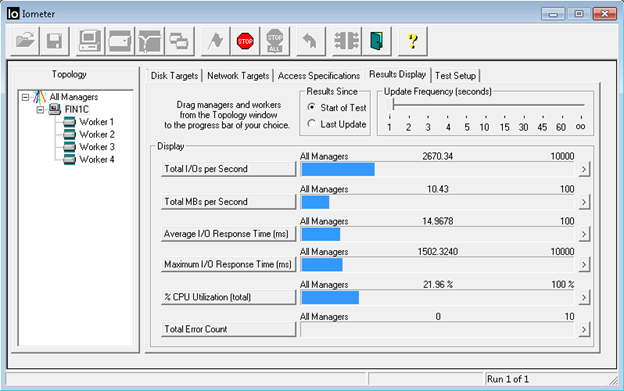

IOMeter performance during testing:

Findings:

The impact of hundreds of virtual machines simultaneously working with storage systems did not lead to a performance drop typical of iSCSI 1 Gbe. IOMeter showed the expected IOPs for the test virtual machine — the maximum possible minus the workload.

So, the advantages of this solution:

Of course there are also disadvantages:

With seemingly obvious advantages, the popularity of such a solution is not very high. At least, habr is not replete with reports. What is the main reason: the youth of technology, a narrow segment of the application, poor scalability ...? Or maybe all the sly long used? :)

When creating our own virtualization platform, we set up tests to find the optimal answer to this question. With the test results, I want to introduce you today.

The correct system integrator will say that Infiniband and a pair of cabinets with 3Par will, having introduced them once, never again think about SAN performance, but not everyone has space in the hardware to install extra cabinets with disks. What then? First of all, you need to identify the bottleneck that prevents virtual machines from enjoying all 2000 IOPs that the shelf with disks promises to give.

')

At our booth, where we once learned to build a cluster, IOMeter from a virtual machine downloaded 2x1Gbe iSCSI 100% and showed excellent results - almost the same promised 2000 IOPs. But when the VM became several dozen, and all of them were quite actively trying to use the disk system, an unpleasant effect was discovered - the network load does not fit half, the controller on the shelf and IOPs are in stock, and on virtual machines everything slows down. It turned out that in such conditions a bottleneck is a delay in the data network (latency). And in the case of a SAN implementation on iSCSI 1Gbe, it is simply huge.

So based on what protocol to build a SAN, if the scale does not require connecting hundreds of storage systems, thousands of servers and it is planned to place everything in two or three racks of one data center? iSCSI 10Gbe, FC 8GFC? There is a better solution - SAS. And the best argument in the choice is the price / quality ratio.

Let's compare the costs and results when building an unpretentious SAN from 2 storage systems with 6 server clients.

SAN Requirements:

- High Availability Connection

- Data transfer rate of at least 8GB / s

The classic solution to this problem looks like this:

Assume that the cost of storage with two iSCSI 10 Gbe, FC or SAS controllers is the same. The remaining components are listed in the table (you can pick on prices - but this is the order):

For the price solution based on SAS looks great! In fact, it is close to the cost of a solution on iSCSI 1Gbe.

This approach was used to build the first stage of the IaaS platform of the Cloud Library.

What did the tests show?

On a virtual machine running in a release SAN among 200 others, IOMeter was launched.

The load on the storage before the test:

The load on the storage system during the test:

IOMeter test settings:

IOMeter workflow settings:

IOMeter performance during testing:

Findings:

The impact of hundreds of virtual machines simultaneously working with storage systems did not lead to a performance drop typical of iSCSI 1 Gbe. IOMeter showed the expected IOPs for the test virtual machine — the maximum possible minus the workload.
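The "maximum possible minus the load" expectation can be written down as simple arithmetic. This is a minimal sketch: the 2000 IOPS ceiling is the figure promised for the shelf earlier in the article, while the background load value and the function name are hypothetical placeholders, not numbers from the test.

```python
def expected_test_iops(shelf_max_iops: float, background_iops: float) -> float:
    """IOPS left for the test VM once the other VMs' load is subtracted."""
    return max(shelf_max_iops - background_iops, 0.0)


SHELF_MAX_IOPS = 2000    # ceiling promised for the disk shelf
BACKGROUND_IOPS = 1500   # hypothetical load from the other VMs

print(expected_test_iops(SHELF_MAX_IOPS, BACKGROUND_IOPS))
```

If the measured value stays close to this remainder as the number of VMs grows, the transport is not the bottleneck.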

So, the advantages of this solution:

- Bandwidth. Each port is a 4-lane SAS2 port, so in theory you can get 24 Gbit/s per port.

- Latency. It is much lower than with 10GbE iSCSI or 8GFC Fibre Channel. When virtualizing a large number of servers, this becomes the decisive factor in the battle for virtual machine IOPS.

- Fairly simple to configure.
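The 24 Gbit/s figure is the raw line rate: 4 lanes at 6 Gbit/s each. SAS2 uses 8b/10b encoding, so the usable payload rate is lower. A minimal sketch of the arithmetic follows. The function and constant names are made up for illustration, and protocol overhead above the encoding layer is ignored.

```python
LANES_PER_PORT = 4          # a wide SAS2 port, as in the list above
LINE_RATE_GBIT = 6.0        # SAS2 line rate per lane
ENCODING_EFFICIENCY = 0.8   # 8b/10b: 8 payload bits per 10 line bits


def port_bandwidth(lanes: int = LANES_PER_PORT,
                   line_rate_gbit: float = LINE_RATE_GBIT,
                   efficiency: float = ENCODING_EFFICIENCY):
    """Return (raw Gbit/s, usable Gbit/s, usable MB/s) for one wide port."""
    raw_gbit = lanes * line_rate_gbit
    usable_gbit = raw_gbit * efficiency
    usable_mbyte = usable_gbit * 1000 / 8   # decimal megabytes per second
    return raw_gbit, usable_gbit, usable_mbyte


raw, usable, mbytes = port_bandwidth()
print(f"raw {raw:.1f} Gbit/s, usable {usable:.1f} Gbit/s, about {mbytes:.0f} MB/s")
```

Even after encoding overhead, roughly 19 Gbit/s per port is well above the 8 Gbit/s requirement.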

Of course, there are also disadvantages:

- No replication over SAS. You need to find another solution for data backup.

- Cable length is limited to 20 meters.

Despite these seemingly obvious advantages, such solutions are not very popular. At least, Habr is not overflowing with reports about them. What is the main reason: the youth of the technology, a narrow niche, poor scalability...? Or have all the clever ones been using it for a long time already? :)

Source: https://habr.com/ru/post/177047/
