Setting up a cluster with several regions for cloud storage of objects with OpenStack Swift

Author: Oleg Gelbukh

Last fall, an interesting review of their approach to creating multi-regional clusters of the OpenStack Object Storage (codename of the project - Swift) appeared in the SwiftStack team blog. This approach fits well with the Swift geographically distributed cluster scheme with a reduced number of replicas (3 + 1 instead of 3 + 3, for example), on which we worked together with Webex around the same time. I would like to briefly describe our approach and dwell on the implementation plan and proposed changes to the Swift code.

I would like to begin with a brief overview of the current Swift algorithms, in order to clarify what needs to be done in order to create a cluster from several geographically separated regions.

')

A standard ring (ring or hash ring) of a Swift cluster is a data structure that allows you to divide storage devices into zones. The ring build script (swift-ring-builder) included in the Essex release ensures that the replicas of objects do not fall into the same zone.

The structure of the ring includes the following components:

- Device list : includes all storage devices (disks) known to the ring. Each element of this list is a dictionary that includes a device identifier, its symbolic name, a zone identifier, the IP address of the data storage node on which the storage device is installed, the network port, weight, and metadata.

- Partition distribution table : a two-dimensional array with the number of rows equal to the number of replicas. Each cell in the array contains a device identifier (from the device list) on which the replica of the partition corresponding to the column index is located ...

- Partition area number: the number of bits from the MD5 checksum from the path to the object (/ account / container / objec), which determines the partitioning of the entire space of possible MD5 hashes into sections.

In the Folsom version, changes were made to the format of the ring file. These changes significantly improve processing efficiency and override the ring balancing algorithm. The strict condition, which required the distribution of replicas in different zones, was replaced by a much more flexible algorithm that organizes zones, nodes and devices into layers.

The ring balancer attempts to position the replicas as far apart as possible; preferably in different zones, but if only one zone is available, then in different nodes; and if only one node is available, then to different devices on the node. This algorithm, which operates on the principle of “maximum distribution,” potentially supports a geographically distributed cluster. This can be achieved by adding a region-level diagram to the top. A region is essentially a group of zones with one location, be it a rack or a data center.

In our proposal, the region is defined in the field of the same name (region) in the devs device dictionary.

The proxy server provides the Swift API public interface to clients and performs basic operations with objects, containers and accounts, including writing using a PUT request and reading using a GET request.

When servicing PUT requests, the proxy server follows the following algorithm:

1. Calculates MD5 checksum of the path to the object in the format / account [/ container [/ object]].

2. Calculates the partition number as the first N bits of the MD5 checksum.

3. Selects devices from the partitioning table on which the replicas of the computed partition are stored.

4. Selects the IP address and port of the data storage node from the list of devices for all devices found in step # 3.

5. Proubet establish a connection to all nodes on the corresponding ports, if it is impossible to connect to at least half of the nodes, rejects the PUT request.

6. Tries to load the object (or create an account or container) on all nodes to which the connection has been established; if at least half of the downloads are canceled, reject the PUT request.

7. If data is loaded on N / 2 + 1 nodes (where N is the number of nodes found in step # 4), sends a confirmation of a successful PUT request to the client.

When servicing GET requests, the proxy server basically performs the following algorithm:

1. Repeats steps 1-4 of the PUT request processing algorithm and determines the list of nodes that store replicas of objects.

2. Shuffles the list of nodes using the shuffle function and connects to the first of the received list.

3. If the connection cannot be established, proceeds to the next node from the list.

4. If the connection is established, it starts transmitting data to the client in response to the request.

Replication in Swift works on sections, but not on separate objects. The replicator workflow runs periodically, at a configurable interval. The default interval is 30 seconds.

The replicator in general follows the following algorithm:

1. Create a task replicator. That is, scan all devices on the site, scan all found devices and find a list of all sections, and then for each section create a dictionary of replication actions.

{

'path': <path_to_partition>,

'nodes': [replica_node1, replica_node2, ...],

'delete': <true | false>,

'partition':}

- <path_to_partition> is the path in the file system to the partition (/ srv / node // objects /)

- [replica_node1, replica_node2, ...] is a list of nodes that store replicas of partitions. The list is imported from the ring for objects.

- 'delete' is set to “true” if the number of replicas of this section exceeds the configured number of replicas in the cluster.

- is the ID number of the section.

2. To process each section in accordance with the task for replication. I.e:

- If a section is marked for deletion, the replicator maps each subdirectory of the job ['path'] directory to all the nodes in the job ['nodes'] list, sends a REPLICATE request to each node on which the replica is located, and deletes the job [[path]] directory.

- If the section is not marked for deletion, the replicator calculates the checksums of the contents of all the subfolders of the job ['path'] directory (that is, the account / container databases and the object files in the section). The replicator issues a REPLICATE request to all replicas of the job ['partition'] section and receives in response from the remote subfolders of the checksum correspondence section. It then compares the checksum matches and uses rsync to send the modified subfolders to the remote nodes. Replication success is checked by re-sending the REPLICATE request.

3. If there is no access to the so-called “main” replica, the replicator uses the get_more_node class of the ring. This method uses a specific deterministic algorithm to determine a set of “spare” nodes, where you can save a temporary copy of this section ... The algorithm determines the zone to which the “main” device belongs, which failed, and selects the “spare” device from another zone to save the time partition replicas If another device is also not available, a node is selected from the third zone, and the cycle continues until all zones and all nodes have been enumerated.

We suggest adding a field to the list of devices. This parameter should use the RingBuilder class when balancing a ring as described below. The region parameter is an additional level in the system that allows you to group zones. Thus, all devices that belong to zones constituting one region should belong to this region.

In addition, regions can be added to the ring as an additional structure — a dictionary with regions as keys and a list of zones as values, for example:

It is important to note that a zone can belong to only one region.

In this case, the regions are used similarly to the previous use, but the ring class must include additional code for processing the vocabulary of regional zone assignments and determine which region the particular device belongs to.

Assigning a region's default zone should assign all zones to a single default region to reproduce the standard Swift behavior.

In the latest release of the Swift project, support for the level of regions in the ring has already been added, which means an important step towards the full implementation of geographically distributed storage facilities based on Swift.

The RingBuilder balancing algorithm should recognize the region parameter in the device list. The algorithm can be configured to distribute replicas in various ways. Below we propose one of the possible implementations of the distribution algorithm that we have chosen to develop a prototype.

Partition replicas should be placed on devices under the following conditions:

- Replicas should be located on devices belonging to different groups at the highest possible level (standard behavior of the ring balancing algorithm).

- For N replicas and M regions (groups of zones), the number of replicas that fall into each region is equal to the whole number of quotients of N / M. The rest of the replicas is added to one region (which is the main one for this section).

- A region cannot contain more replicas than the number of zones in a region.

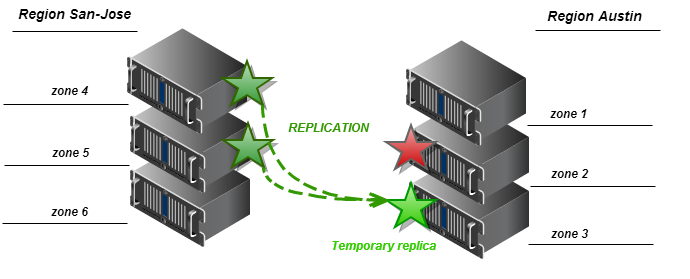

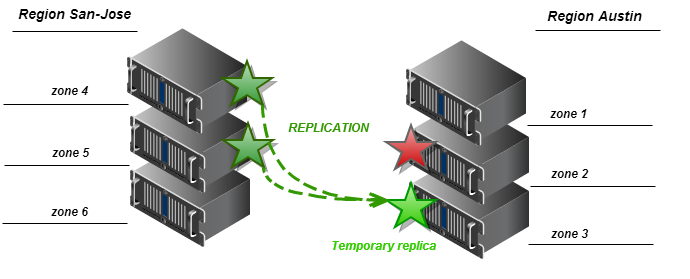

For example, if N = 3 and M = 2, with this algorithm we will have a ring in which one replica enters each region (the whole part of the fraction 3/2 is 1), and the remaining one replica enters one of two regions, selected randomly. The diagram below represents the distribution of replicas by region in the example above.

Making a direct PUT request from a proxy server to a storage node in a remote region is not so simple: in most cases we may not have access to the internal cluster network from the outside. Thus, for the initial implementation, we assume that only local replicas are recorded during the execution of a PUT request, and remote regional replicas are created by the replication process.

By default, the number of replicas is three, and one region. This case reproduces the standard Swift configuration and ring balancing algorithm.

We propose changes to the Ring class get_more_nodes method to recognize regions when selecting “spare” zones for temporary replicas. The algorithm must sort the candidates for “spare” so that the zones from the region that contains the lost replica are selected first. If there is no access to zones in the local region (for example, the network connection between regions is closed), the algorithm returns a node that belongs to a zone from one of the outer regions. The following two schemes describe the algorithm for the two extreme cases.

For proper operation, the Swift proxy server in an environment with several geographically distributed regions needs to have information on which region it belongs to. The proxy server can obtain this information based on network latency analysis when connecting to data storage servers, or directly from the configuration file. The first approach is implemented in the current version of Swift (1.8.0). The proxy server sorts data storage nodes based on response time after connecting to each of them, and selects the fastest one for reading. This approach works well for read requests, but when writing, it is required to work with all the “main” storage servers, and also, possibly, with several “spare” ones. In this case, the configuration method for determining the local region is better suited.

This is quite simply implemented by adding a region parameter to the [DEFAULT] section of the proxy configuration file (proxy-server.conf), for example:

[DEFAULT]

...

region = san-jose

This parameter is used by the proxy server for ring read operations, and also, possibly, when selecting nodes to serve GET requests. Our goal is for the proxy server to connect to the drive nodes from local zones (that is, zones that belong to the same region as the proxy server).

In the SwiftStack article, this functionality is called proxy affinity.

The proxy server should not read data from a host that belongs to an external region if a local replica is available. This reduces the load on network connections between regions, and also works when there is no network connection between regions (as a result of a temporary failure or features of the cluster topology).

We then replace the shuffle operation of the list of nodes in step # 2 of the GET request processing algorithm (see above) with a procedure that arranges the nodes in such an order that the drives belonging to the local proxy server region are first in the list. After such sorting, the lists of local regional and external regional nodes are mixed independently, and then the list of external regional nodes is added to the list of local regional nodes. Alternatively, at this stage, the method described above can be used to select the data storage node by the minimum response time.

Replication between geographically distributed data centers in our prototype works for the regions as a whole in the same way as for a cluster with one region. However, as part of the replication process, a huge number of REPLICATE requests can be sent between clusters over a low-speed WAN connection. This can lead to a serious decline in the performance of the cluster as a whole.

As a simple workaround for this problem, adding a counter to the replicator is used so that partitions are transferred to devices in a remote region for each Nth replication. More sophisticated solutions may include dedicated replicator gateways in their respective regions, and will be developed as part of our research project.

Original article in English

Last fall, an interesting review of their approach to creating multi-regional clusters of the OpenStack Object Storage (codename of the project - Swift) appeared in the SwiftStack team blog. This approach fits well with the Swift geographically distributed cluster scheme with a reduced number of replicas (3 + 1 instead of 3 + 3, for example), on which we worked together with Webex around the same time. I would like to briefly describe our approach and dwell on the implementation plan and proposed changes to the Swift code.

The current state of OpenStack Swift

I would like to begin with a brief overview of the current Swift algorithms, in order to clarify what needs to be done in order to create a cluster from several geographically separated regions.

')

Ring

A standard ring (ring or hash ring) of a Swift cluster is a data structure that allows you to divide storage devices into zones. The ring build script (swift-ring-builder) included in the Essex release ensures that the replicas of objects do not fall into the same zone.

The structure of the ring includes the following components:

- Device list : includes all storage devices (disks) known to the ring. Each element of this list is a dictionary that includes a device identifier, its symbolic name, a zone identifier, the IP address of the data storage node on which the storage device is installed, the network port, weight, and metadata.

- Partition distribution table : a two-dimensional array with the number of rows equal to the number of replicas. Each cell in the array contains a device identifier (from the device list) on which the replica of the partition corresponding to the column index is located ...

- Partition area number: the number of bits from the MD5 checksum from the path to the object (/ account / container / objec), which determines the partitioning of the entire space of possible MD5 hashes into sections.

In the Folsom version, changes were made to the format of the ring file. These changes significantly improve processing efficiency and override the ring balancing algorithm. The strict condition, which required the distribution of replicas in different zones, was replaced by a much more flexible algorithm that organizes zones, nodes and devices into layers.

The ring balancer attempts to position the replicas as far apart as possible; preferably in different zones, but if only one zone is available, then in different nodes; and if only one node is available, then to different devices on the node. This algorithm, which operates on the principle of “maximum distribution,” potentially supports a geographically distributed cluster. This can be achieved by adding a region-level diagram to the top. A region is essentially a group of zones with one location, be it a rack or a data center.

In our proposal, the region is defined in the field of the same name (region) in the devs device dictionary.

Proxy server

The proxy server provides the Swift API public interface to clients and performs basic operations with objects, containers and accounts, including writing using a PUT request and reading using a GET request.

When servicing PUT requests, the proxy server follows the following algorithm:

1. Calculates MD5 checksum of the path to the object in the format / account [/ container [/ object]].

2. Calculates the partition number as the first N bits of the MD5 checksum.

3. Selects devices from the partitioning table on which the replicas of the computed partition are stored.

4. Selects the IP address and port of the data storage node from the list of devices for all devices found in step # 3.

5. Proubet establish a connection to all nodes on the corresponding ports, if it is impossible to connect to at least half of the nodes, rejects the PUT request.

6. Tries to load the object (or create an account or container) on all nodes to which the connection has been established; if at least half of the downloads are canceled, reject the PUT request.

7. If data is loaded on N / 2 + 1 nodes (where N is the number of nodes found in step # 4), sends a confirmation of a successful PUT request to the client.

When servicing GET requests, the proxy server basically performs the following algorithm:

1. Repeats steps 1-4 of the PUT request processing algorithm and determines the list of nodes that store replicas of objects.

2. Shuffles the list of nodes using the shuffle function and connects to the first of the received list.

3. If the connection cannot be established, proceeds to the next node from the list.

4. If the connection is established, it starts transmitting data to the client in response to the request.

Replication

Replication in Swift works on sections, but not on separate objects. The replicator workflow runs periodically, at a configurable interval. The default interval is 30 seconds.

The replicator in general follows the following algorithm:

1. Create a task replicator. That is, scan all devices on the site, scan all found devices and find a list of all sections, and then for each section create a dictionary of replication actions.

{

'path': <path_to_partition>,

'nodes': [replica_node1, replica_node2, ...],

'delete': <true | false>,

'partition':}

- <path_to_partition> is the path in the file system to the partition (/ srv / node // objects /)

- [replica_node1, replica_node2, ...] is a list of nodes that store replicas of partitions. The list is imported from the ring for objects.

- 'delete' is set to “true” if the number of replicas of this section exceeds the configured number of replicas in the cluster.

- is the ID number of the section.

2. To process each section in accordance with the task for replication. I.e:

- If a section is marked for deletion, the replicator maps each subdirectory of the job ['path'] directory to all the nodes in the job ['nodes'] list, sends a REPLICATE request to each node on which the replica is located, and deletes the job [[path]] directory.

- If the section is not marked for deletion, the replicator calculates the checksums of the contents of all the subfolders of the job ['path'] directory (that is, the account / container databases and the object files in the section). The replicator issues a REPLICATE request to all replicas of the job ['partition'] section and receives in response from the remote subfolders of the checksum correspondence section. It then compares the checksum matches and uses rsync to send the modified subfolders to the remote nodes. Replication success is checked by re-sending the REPLICATE request.

3. If there is no access to the so-called “main” replica, the replicator uses the get_more_node class of the ring. This method uses a specific deterministic algorithm to determine a set of “spare” nodes, where you can save a temporary copy of this section ... The algorithm determines the zone to which the “main” device belongs, which failed, and selects the “spare” device from another zone to save the time partition replicas If another device is also not available, a node is selected from the third zone, and the cycle continues until all zones and all nodes have been enumerated.

Proposed Changes to OpenStack Swift

Adding the level of "region" in the ring

We suggest adding a field to the list of devices. This parameter should use the RingBuilder class when balancing a ring as described below. The region parameter is an additional level in the system that allows you to group zones. Thus, all devices that belong to zones constituting one region should belong to this region.

In addition, regions can be added to the ring as an additional structure — a dictionary with regions as keys and a list of zones as values, for example:

| Key (region) | Value (zone list) |

| Austin | 1,2,3 |

| San jose | 4,5,6 |

It is important to note that a zone can belong to only one region.

In this case, the regions are used similarly to the previous use, but the ring class must include additional code for processing the vocabulary of regional zone assignments and determine which region the particular device belongs to.

Assigning a region's default zone should assign all zones to a single default region to reproduce the standard Swift behavior.

In the latest release of the Swift project, support for the level of regions in the ring has already been added, which means an important step towards the full implementation of geographically distributed storage facilities based on Swift.

Tweaking RingBuilder Balancing Algorithm

The RingBuilder balancing algorithm should recognize the region parameter in the device list. The algorithm can be configured to distribute replicas in various ways. Below we propose one of the possible implementations of the distribution algorithm that we have chosen to develop a prototype.

Algorithm of alternate distribution

Partition replicas should be placed on devices under the following conditions:

- Replicas should be located on devices belonging to different groups at the highest possible level (standard behavior of the ring balancing algorithm).

- For N replicas and M regions (groups of zones), the number of replicas that fall into each region is equal to the whole number of quotients of N / M. The rest of the replicas is added to one region (which is the main one for this section).

- A region cannot contain more replicas than the number of zones in a region.

For example, if N = 3 and M = 2, with this algorithm we will have a ring in which one replica enters each region (the whole part of the fraction 3/2 is 1), and the remaining one replica enters one of two regions, selected randomly. The diagram below represents the distribution of replicas by region in the example above.

Making a direct PUT request from a proxy server to a storage node in a remote region is not so simple: in most cases we may not have access to the internal cluster network from the outside. Thus, for the initial implementation, we assume that only local replicas are recorded during the execution of a PUT request, and remote regional replicas are created by the replication process.

By default, the number of replicas is three, and one region. This case reproduces the standard Swift configuration and ring balancing algorithm.

Once again about Get_more_nodes

We propose changes to the Ring class get_more_nodes method to recognize regions when selecting “spare” zones for temporary replicas. The algorithm must sort the candidates for “spare” so that the zones from the region that contains the lost replica are selected first. If there is no access to zones in the local region (for example, the network connection between regions is closed), the algorithm returns a node that belongs to a zone from one of the outer regions. The following two schemes describe the algorithm for the two extreme cases.

Regional proxy server

For proper operation, the Swift proxy server in an environment with several geographically distributed regions needs to have information on which region it belongs to. The proxy server can obtain this information based on network latency analysis when connecting to data storage servers, or directly from the configuration file. The first approach is implemented in the current version of Swift (1.8.0). The proxy server sorts data storage nodes based on response time after connecting to each of them, and selects the fastest one for reading. This approach works well for read requests, but when writing, it is required to work with all the “main” storage servers, and also, possibly, with several “spare” ones. In this case, the configuration method for determining the local region is better suited.

This is quite simply implemented by adding a region parameter to the [DEFAULT] section of the proxy configuration file (proxy-server.conf), for example:

[DEFAULT]

...

region = san-jose

This parameter is used by the proxy server for ring read operations, and also, possibly, when selecting nodes to serve GET requests. Our goal is for the proxy server to connect to the drive nodes from local zones (that is, zones that belong to the same region as the proxy server).

In the SwiftStack article, this functionality is called proxy affinity.

The proxy server should not read data from a host that belongs to an external region if a local replica is available. This reduces the load on network connections between regions, and also works when there is no network connection between regions (as a result of a temporary failure or features of the cluster topology).

We then replace the shuffle operation of the list of nodes in step # 2 of the GET request processing algorithm (see above) with a procedure that arranges the nodes in such an order that the drives belonging to the local proxy server region are first in the list. After such sorting, the lists of local regional and external regional nodes are mixed independently, and then the list of external regional nodes is added to the list of local regional nodes. Alternatively, at this stage, the method described above can be used to select the data storage node by the minimum response time.

Swift Replication

Replication between geographically distributed data centers in our prototype works for the regions as a whole in the same way as for a cluster with one region. However, as part of the replication process, a huge number of REPLICATE requests can be sent between clusters over a low-speed WAN connection. This can lead to a serious decline in the performance of the cluster as a whole.

As a simple workaround for this problem, adding a counter to the replicator is used so that partitions are transferred to devices in a remote region for each Nth replication. More sophisticated solutions may include dedicated replicator gateways in their respective regions, and will be developed as part of our research project.

Original article in English

Source: https://habr.com/ru/post/176455/

All Articles