Ranking in Yandex: how to put machine learning on stream (post # 3)

Today we are completing a series of publications on the FML framework , in which we talk about how and why we have automated the use of machine learning technologies in Yandex. In today's post we will tell:

In the previous post, we dwelled on the fact that with the help of FML we were able to streamline the development of new factors for the ranking formula and an initial assessment of their usefulness. However, to follow that the factor remains valuable and does not waste the computational resources, it is necessary after its implementation.

For this, a special regular automatic check was created - the so-called monitoring of the quality of factors. Computationally, it is very complex, but it allows to solve a number of problems.

')

The first is the identification of applicants for "deletion." Having once found that the factor makes a great contribution and its price is acceptable, and after taking a decision on its implementation, it is important to ensure that it remains useful over time, despite the appearance of new and new factors. After all, the new can easily be more general and strong than the old, and not only create new value, but also duplicate it. For example, when we first introduced the factor “the occurrence of the query words in the URL written in Latin,” and after some time we made a new one that supports the entry in URLs recorded in both Latin and Cyrillic, the first one lost all value. The old version should be deleted for at least two reasons: 1) saving on the time of calculation of the old factor; 2) reducing the dimension of signs in learning.

Sometimes there is another situation. The factor used to bring "many benefits", and now it, although it remains useful, does not pass the quality / cost threshold. This can happen if it has lost its relevance, or has become partially duplicated by newer factors. Therefore, you need to create a healthy evolution - so that weak factors die and give way to strong ones. But there is not enough data from the FML, and the final decision on the removal of the factor is made by experts.

There is another problem that quality monitoring solves. By tracking the fact that the utility of a factor once implemented has not decreased, it provides regression testing. The quality of a factor may fall, for example, due to an accidental modification or a systemic change in the properties of the Internet on which it originally relied. In this case, the system will notify the developer that this factor needs to be “repaired” (correct the error or modify it so that it meets the new realities).

The third monitoring task that he will soon begin to solve is getting rid of redundancy factors. Until a certain point, we did not check whether the new factor duplicates any of the already implemented ones. As a result, it could well turn out that, for example, there are two factors that repeat each other. But if you measure what contribution each of them alone gives in relation to all the others, it turns out that it is equal to zero. And if you exclude both factors, the quality will fall. And the task is precisely to choose which of the duplicating factors are most effectively left, in terms of the same ratio of quality growth to the price of calculations. Computationally, this is many orders of magnitude more difficult than estimating a new factor. To solve this problem, we plan to use an extended cluster of 300 Tflops .

A situation in which each of two factors, I and J,

give zero contribution - (J, K, L) and (I, K, L),

but removing both of them leads to a deterioration in quality - (K, L).

You can exclude any one (I or J).

It is more profitable to exclude J, as more resource-intensive.

This and previous posts talked about specific applications for FML in the context of regular machine learning. But in the end, the framework went beyond these applications and became a full-fledged platform for distributed computing over a search index.

Dedicated readers probably noticed that everywhere where FML is used, we are talking about approximately the same dataset: downloaded documents, saved queries, assessor estimates, and the results of factor calculations. We also noticed it at one time, and also looked at the number of other tasks that are already being solved in the Search and rely on this data anyway; and decided to derive additional benefit from this. Namely, they made a full-fledged pipeline from FML for arbitrary distributed computing on this data set, which are performed on a computing cluster numbering several thousand servers.

We have achieved that FML makes it easier to perform distributed calculations on the search index and additional data specific to a particular task. The search index is updated several times a week, and with each update a substantial part of it is moved between servers for a more optimal use of resources. FML completely relieves the developer from worries about finding the right index fragment and gives him full and consistent access to it. The framework diagnoses the integrity of the index and runs the calculations on those servers in the cluster where the data necessary for the developer lies.

In contrast to the search index, FML data specific to the current task is decomposed by itself into servers. It also takes control of the distributed execution of competing user tasks. As soon as the calculations are started, FML begins to monitor the progress of the calculations and when one of the tasks on a particular server becomes unavailable, it gives a signal to the administrators. After receiving it, they begin to diagnose a specific situation with this task on this server, whether it is a disk failure, a network failure, or a complete server outage. In our future plans - to help administrators as much as possible detailed diagnosis and simplify the search for the causes of certain failures. The latter in terms of several thousand servers is a completely ordinary matter, and they occur many times a day. Therefore, we will greatly save the manual labor of administrators if we automate it at least a little.

At first glance, all this is very similar to the YAMR tasks — the same distribution of computations, the collection of results and the assurance of reliability. But there are two dramatic differences. First, FML deals with the search index, and not with the classic “key-value” structure adopted in YAMR. The search index implies that all samples come from a combination of a large number of keys at once (in the simplest case, several query words). Working with such samples in the key-value paradigm is fundamentally difficult. And secondly, if YAMR itself decides how to decompose data into servers, then FML can work with any data distribution predetermined by the external system according to its own laws.

The solution turned out to be so successful that most of the Search development teams on their own initiative switched to the use of FML, and, according to our estimates, today about 70% (in terms of processor time) of calculations in Yandex.Search development is managed by FML.

As we have said , FML and Matrixet are parts of the machine learning technology of Yandex. And it is used not only in the web search. For example, with its help, formulas are selected for the so-called “vertical” searches (for images, videos, etc.) and for preliminary screening of completely irrelevant documents in a web search. It helps to teach the classification algorithm for products by category in Yandex.Market. In addition, machine learning selects formulas for the search robot (for example, for a strategy that determines in which order to crawl websites). And in all these cases it solves the same task - it builds the function that best corresponds to the expert data submitted to the input. We think in the near future we will find for him many more applications in Yandex. For example, we use in training classifiers, of which we have a lot.

FML paired with the Matrixet machine learning library can be useful not only in search engine development, but also in other areas where data processing is required. With some teams, we have already tried them to build a search for specialized types of data, taking into account specific factors. For example, CERN (European Nuclear Research Center) uses Matrixnet to detect rare events in large amounts of data (units per billion). Traditionally, the TMVA package was used there, based on the Gradient Boosted Decision Trees (GBDT). Since Matriksnet on our problems and our metrics has long become more accurate than a simple GBDT, we expect that CERN physicists will be able to use it to improve the accuracy of their research.

Looking more broadly at what our technology can do, we are confident that it can be useful in many areas where typical machine learning tasks are encountered - especially if they are dealing with a large data array that changes over time. For example, in large online stores, online auctions, social networks.

Now we know only one industrial solution for a close problem, besides our own, - Google Prediction API . There are several startups like BigML . Unfortunately, we could not find information about their effectiveness for various applications. The Amazon Cloud Service can still serve as a pipeline for computational tasks, but a close examination showed that this is a very general solution for completely arbitrary tasks. While ours is created specifically for search engines and is maximally revealed in them. The analogs of FML in solving the problems of “Evaluating the effectiveness of a new factor” and “Monitoring the quality of factors” are completely unknown to us.

An external observer can indirectly judge the effectiveness of our technology, based on the results of international machine learning competitions. For example, Yandex specialists occupied high places in the rankings of Yahoo Learning to Rank and Facebook Recruiting , in which the struggle for the accuracy of the ranking function is in terms of thousandths of the ERR / NDCG. They showed good results at machine learning contests and in other areas.

To be sure that our technologies remain the best in their field, we regularly hold our own machine learning contests - in the framework of the “Internet-Mathematics” series . Topics include machine learning ranking , traffic jams prediction, classification of panoramic photos . Two years ago, our competitions became international, and the final stage of the competition on predicting relevance on user behavior was held at the WSDM 2012 conference in Seattle (USA). Quite recently, a competition devoted to predicting search engine switching has ended.

Despite the fact that the FML framework, which we told you about in this series of posts, was originally designed to work with Matrixnet, it can be adapted to any other known machine learning library (for example, Apache Mahout , Weka , scikit-learn ).

Despite the fact that the FML framework, which we told you about in this series of posts, was originally designed to work with Matrixnet, it can be adapted to any other known machine learning library (for example, Apache Mahout , Weka , scikit-learn ).

Recently, there have been several good online machine learning courses. In English, we can recommend the course of Stanford University , in Russian - the course of Konstantin Vorontsov , which is read in the School of Data Analysis.

From the “paper” editions, we note two editions: The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Trevor Hastie, Robert Tibshirani, Jerome Friedman) (available in electronic form ) and Pattern Recognition and Machine Learning (Christopher M. Bishop ) .

In addition, an extensive selection of courses and textbooks collected at Kaggle will be helpful.

- why you need to monitor the quality of factors and how we do it;

- how FML helps in distributed computing tasks on a search index;

- how and for what our machine learning technologies are already used and can be applied both in Yandex and outside it;

- what literature can be advised for deeper immersion in the affected issues.

Monitoring the quality of already implemented factors

In the previous post, we dwelled on the fact that with the help of FML we were able to streamline the development of new factors for the ranking formula and an initial assessment of their usefulness. However, to follow that the factor remains valuable and does not waste the computational resources, it is necessary after its implementation.

For this, a special regular automatic check was created - the so-called monitoring of the quality of factors. Computationally, it is very complex, but it allows to solve a number of problems.

')

The first is the identification of applicants for "deletion." Having once found that the factor makes a great contribution and its price is acceptable, and after taking a decision on its implementation, it is important to ensure that it remains useful over time, despite the appearance of new and new factors. After all, the new can easily be more general and strong than the old, and not only create new value, but also duplicate it. For example, when we first introduced the factor “the occurrence of the query words in the URL written in Latin,” and after some time we made a new one that supports the entry in URLs recorded in both Latin and Cyrillic, the first one lost all value. The old version should be deleted for at least two reasons: 1) saving on the time of calculation of the old factor; 2) reducing the dimension of signs in learning.

Sometimes there is another situation. The factor used to bring "many benefits", and now it, although it remains useful, does not pass the quality / cost threshold. This can happen if it has lost its relevance, or has become partially duplicated by newer factors. Therefore, you need to create a healthy evolution - so that weak factors die and give way to strong ones. But there is not enough data from the FML, and the final decision on the removal of the factor is made by experts.

There is another problem that quality monitoring solves. By tracking the fact that the utility of a factor once implemented has not decreased, it provides regression testing. The quality of a factor may fall, for example, due to an accidental modification or a systemic change in the properties of the Internet on which it originally relied. In this case, the system will notify the developer that this factor needs to be “repaired” (correct the error or modify it so that it meets the new realities).

The third monitoring task that he will soon begin to solve is getting rid of redundancy factors. Until a certain point, we did not check whether the new factor duplicates any of the already implemented ones. As a result, it could well turn out that, for example, there are two factors that repeat each other. But if you measure what contribution each of them alone gives in relation to all the others, it turns out that it is equal to zero. And if you exclude both factors, the quality will fall. And the task is precisely to choose which of the duplicating factors are most effectively left, in terms of the same ratio of quality growth to the price of calculations. Computationally, this is many orders of magnitude more difficult than estimating a new factor. To solve this problem, we plan to use an extended cluster of 300 Tflops .

A situation in which each of two factors, I and J,

give zero contribution - (J, K, L) and (I, K, L),

but removing both of them leads to a deterioration in quality - (K, L).

You can exclude any one (I or J).

It is more profitable to exclude J, as more resource-intensive.

Distributed computing pipeline

This and previous posts talked about specific applications for FML in the context of regular machine learning. But in the end, the framework went beyond these applications and became a full-fledged platform for distributed computing over a search index.

Dedicated readers probably noticed that everywhere where FML is used, we are talking about approximately the same dataset: downloaded documents, saved queries, assessor estimates, and the results of factor calculations. We also noticed it at one time, and also looked at the number of other tasks that are already being solved in the Search and rely on this data anyway; and decided to derive additional benefit from this. Namely, they made a full-fledged pipeline from FML for arbitrary distributed computing on this data set, which are performed on a computing cluster numbering several thousand servers.

We have achieved that FML makes it easier to perform distributed calculations on the search index and additional data specific to a particular task. The search index is updated several times a week, and with each update a substantial part of it is moved between servers for a more optimal use of resources. FML completely relieves the developer from worries about finding the right index fragment and gives him full and consistent access to it. The framework diagnoses the integrity of the index and runs the calculations on those servers in the cluster where the data necessary for the developer lies.

In contrast to the search index, FML data specific to the current task is decomposed by itself into servers. It also takes control of the distributed execution of competing user tasks. As soon as the calculations are started, FML begins to monitor the progress of the calculations and when one of the tasks on a particular server becomes unavailable, it gives a signal to the administrators. After receiving it, they begin to diagnose a specific situation with this task on this server, whether it is a disk failure, a network failure, or a complete server outage. In our future plans - to help administrators as much as possible detailed diagnosis and simplify the search for the causes of certain failures. The latter in terms of several thousand servers is a completely ordinary matter, and they occur many times a day. Therefore, we will greatly save the manual labor of administrators if we automate it at least a little.

At first glance, all this is very similar to the YAMR tasks — the same distribution of computations, the collection of results and the assurance of reliability. But there are two dramatic differences. First, FML deals with the search index, and not with the classic “key-value” structure adopted in YAMR. The search index implies that all samples come from a combination of a large number of keys at once (in the simplest case, several query words). Working with such samples in the key-value paradigm is fundamentally difficult. And secondly, if YAMR itself decides how to decompose data into servers, then FML can work with any data distribution predetermined by the external system according to its own laws.

The solution turned out to be so successful that most of the Search development teams on their own initiative switched to the use of FML, and, according to our estimates, today about 70% (in terms of processor time) of calculations in Yandex.Search development is managed by FML.

Applications and comparison with analogues

As we have said , FML and Matrixet are parts of the machine learning technology of Yandex. And it is used not only in the web search. For example, with its help, formulas are selected for the so-called “vertical” searches (for images, videos, etc.) and for preliminary screening of completely irrelevant documents in a web search. It helps to teach the classification algorithm for products by category in Yandex.Market. In addition, machine learning selects formulas for the search robot (for example, for a strategy that determines in which order to crawl websites). And in all these cases it solves the same task - it builds the function that best corresponds to the expert data submitted to the input. We think in the near future we will find for him many more applications in Yandex. For example, we use in training classifiers, of which we have a lot.

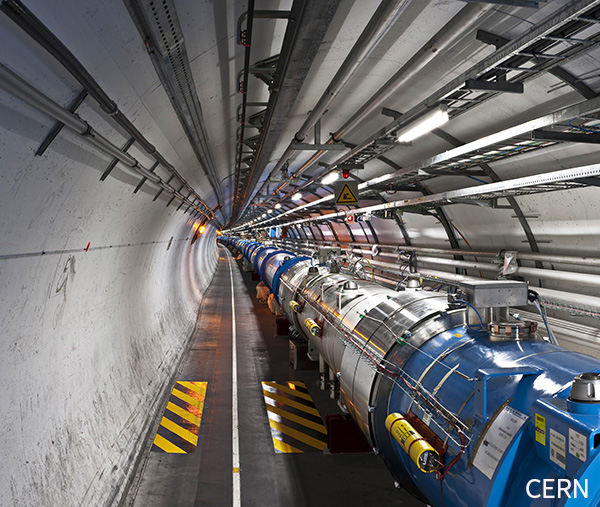

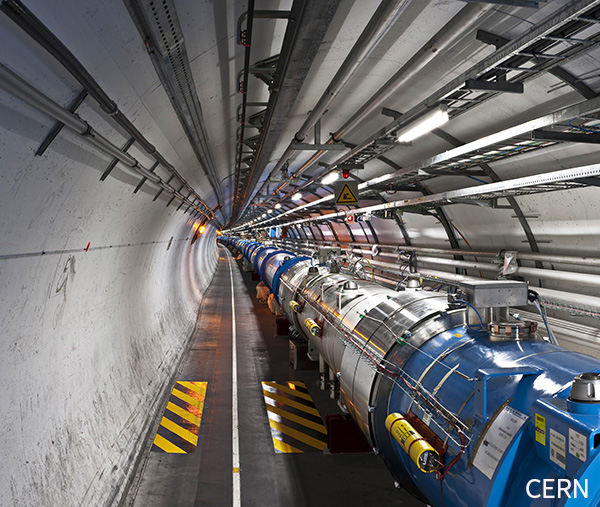

FML paired with the Matrixet machine learning library can be useful not only in search engine development, but also in other areas where data processing is required. With some teams, we have already tried them to build a search for specialized types of data, taking into account specific factors. For example, CERN (European Nuclear Research Center) uses Matrixnet to detect rare events in large amounts of data (units per billion). Traditionally, the TMVA package was used there, based on the Gradient Boosted Decision Trees (GBDT). Since Matriksnet on our problems and our metrics has long become more accurate than a simple GBDT, we expect that CERN physicists will be able to use it to improve the accuracy of their research.

Why Matriksnet can open the way for the Nobel Prize

One of the types of events for which FML / Matrixnet can be applied is the incidence of a strange B-meson into a muon-anti-muon pair. Physically, the real readings of one of the detectors of the Large Andron Collider after a collision of proton beams are compared with the reference values obtained in the event simulator.

The standard model considers such decays to be very rare events (approximately 3 events per billion collisions). And if, as a result of the analysis of experimental data, Matriksnet reliably shows that there are more such events and their number coincides with the predictions of one of the new physicists , this will mean the validity of these theories and could be the first long-awaited reason for awarding the Nobel Prize to their authors.

The standard model considers such decays to be very rare events (approximately 3 events per billion collisions). And if, as a result of the analysis of experimental data, Matriksnet reliably shows that there are more such events and their number coincides with the predictions of one of the new physicists , this will mean the validity of these theories and could be the first long-awaited reason for awarding the Nobel Prize to their authors.

Looking more broadly at what our technology can do, we are confident that it can be useful in many areas where typical machine learning tasks are encountered - especially if they are dealing with a large data array that changes over time. For example, in large online stores, online auctions, social networks.

Now we know only one industrial solution for a close problem, besides our own, - Google Prediction API . There are several startups like BigML . Unfortunately, we could not find information about their effectiveness for various applications. The Amazon Cloud Service can still serve as a pipeline for computational tasks, but a close examination showed that this is a very general solution for completely arbitrary tasks. While ours is created specifically for search engines and is maximally revealed in them. The analogs of FML in solving the problems of “Evaluating the effectiveness of a new factor” and “Monitoring the quality of factors” are completely unknown to us.

An external observer can indirectly judge the effectiveness of our technology, based on the results of international machine learning competitions. For example, Yandex specialists occupied high places in the rankings of Yahoo Learning to Rank and Facebook Recruiting , in which the struggle for the accuracy of the ranking function is in terms of thousandths of the ERR / NDCG. They showed good results at machine learning contests and in other areas.

To be sure that our technologies remain the best in their field, we regularly hold our own machine learning contests - in the framework of the “Internet-Mathematics” series . Topics include machine learning ranking , traffic jams prediction, classification of panoramic photos . Two years ago, our competitions became international, and the final stage of the competition on predicting relevance on user behavior was held at the WSDM 2012 conference in Seattle (USA). Quite recently, a competition devoted to predicting search engine switching has ended.

Despite the fact that the FML framework, which we told you about in this series of posts, was originally designed to work with Matrixnet, it can be adapted to any other known machine learning library (for example, Apache Mahout , Weka , scikit-learn ).

Despite the fact that the FML framework, which we told you about in this series of posts, was originally designed to work with Matrixnet, it can be adapted to any other known machine learning library (for example, Apache Mahout , Weka , scikit-learn ).Recommended literature

Recently, there have been several good online machine learning courses. In English, we can recommend the course of Stanford University , in Russian - the course of Konstantin Vorontsov , which is read in the School of Data Analysis.

From the “paper” editions, we note two editions: The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Trevor Hastie, Robert Tibshirani, Jerome Friedman) (available in electronic form ) and Pattern Recognition and Machine Learning (Christopher M. Bishop ) .

In addition, an extensive selection of courses and textbooks collected at Kaggle will be helpful.

Source: https://habr.com/ru/post/175917/

All Articles