High Availability Cluster on Red Hat Cluster Suite

While looking for a way to build an HA cluster on Linux, I came across a rather interesting product that, as far as I can tell, has been unfairly overlooked by the community. Judging by Russian-language articles, heartbeat and pacemaker are the more popular choice when you need fault tolerance at the service level. Neither of them took root in our company, and I don't know exactly why. The complexity of configuration and use, low stability, and the lack of detailed, up-to-date documentation and support may have played a role.

After another CentOS update, we discovered that the pacemaker developer had stopped maintaining the repository for this OS, and the build in the official repositories assumed a completely different configuration (cman instead of corosync). We had no desire to reconfigure pacemaker, so we began looking for another solution. On an English-language forum I read about Red Hat Cluster Suite, and we decided to try it.

General information

RHCS consists of several main components:

- cman - responsible for clustering, interaction between nodes, quorum. In essence, it collects the cluster.

- rgmanager is a cluster resource manager, is engaged in adding, monitoring, managing cluster resource groups.

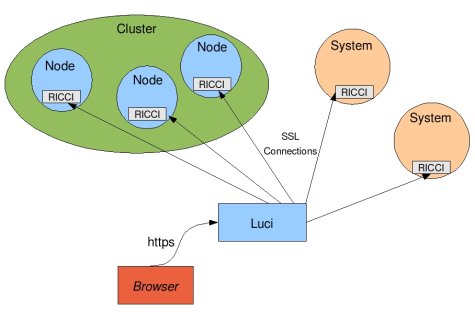

- ricci - daemon for remote cluster management

- luci is a beautiful web interface that connects to ricci on all nodes and provides centralized control through a web interface.

As in heartbeat and pacemaker, cluster resources are managed by standardized scripts (resource agents, RA). The key difference from pacemaker is that Red Hat's design does not provide for adding your own custom RAs to the system. This is more than made up for by a universal resource agent, called script, that lets you add ordinary init scripts as cluster resources.
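To make the script agent concrete, here is a minimal sketch of how an init script might be wrapped in cluster.conf. The output file name, the group name `web` and `/etc/init.d/httpd` are illustrative assumptions, and the file is written to the current directory rather than to /etc/cluster, since in this setup luci normally generates the section for you.

```sh
#!/bin/sh
# Write an example cluster.conf in which an ordinary init script is wrapped
# by the "script" resource agent. The output path, the group name "web" and
# /etc/init.d/httpd are illustrative assumptions.
out=./cluster.conf.example

cat > "$out" <<'EOF'
<?xml version="1.0"?>
<cluster config_version="2" name="cl1">
  <clusternodes>
    <clusternode name="node1" nodeid="1"/>
  </clusternodes>
  <rm>
    <resources>
      <!-- the universal agent: start/stop/status are passed to the init script -->
      <script name="httpd" file="/etc/init.d/httpd"/>
    </resources>
    <!-- resources are switched on and off only as part of a service group -->
    <service name="web" autostart="1" recovery="relocate">
      <script ref="httpd"/>
    </service>
  </rm>
</cluster>
EOF

# Optional well-formedness check (not a schema check) if libxml2 is installed.
if command -v xmllint >/dev/null 2>&1; then
    xmllint --noout "$out" && echo "$out is well-formed"
fi
```

The resource is declared once under `<resources>` and referenced from the service group, which keeps it reusable if several groups need the same init script.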

Resources are managed only at the level of service groups; an individual resource cannot be started or stopped on its own. To distribute resources across nodes and give certain nodes priority at startup, failover domains are used. A domain is a set of rules that says on which nodes a resource group may run, in what priority order, and whether it fails back. Each resource group can be tied to one domain.
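A failover domain as described above might look like the following `<rm>` section. This is a sketch under assumptions: the domain name `prefer-node1`, the nodes `node1`/`node2` and the `web` group are hypothetical, and the file is only written to the current directory for inspection.

```sh
#!/bin/sh
# Example <rm> section: a failover domain that prefers node1 and falls back
# to node2, plus a service group bound to it. All names are illustrative.
out=./rm-failover-example.xml

cat > "$out" <<'EOF'
<rm>
  <failoverdomains>
    <!-- ordered: honour priorities (1 = most preferred)
         restricted: run the group only on the nodes listed here
         nofailback="0": move back to node1 once it returns -->
    <failoverdomain name="prefer-node1" ordered="1" restricted="1" nofailback="0">
      <failoverdomainnode name="node1" priority="1"/>
      <failoverdomainnode name="node2" priority="2"/>
    </failoverdomain>
  </failoverdomains>
  <resources>
    <script name="httpd" file="/etc/init.d/httpd"/>
  </resources>
  <!-- a service group is tied to one domain via the domain attribute -->
  <service name="web" domain="prefer-node1" autostart="1" recovery="relocate">
    <script ref="httpd"/>
  </service>
</rm>
EOF

echo "wrote $out"
```

Failback only has an effect in an ordered domain, since without priorities there is no "preferred" node to return to.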

Setup Guide:

These instructions were tested on CentOS 6.3 and 6.4.

- The entire set of packages needed for a fully working node is conveniently grouped into the High Availability group in the repository. Install it with yum, and install luci separately. At the time of writing, luci has to be installed from the base repository: if it is installed with EPEL enabled, the wrong version of python-webob is pulled in and luci does not start correctly.

```sh
yum groupinstall "High Availability"
yum install --disablerepo='epel*' luci
```

- To launch the first node, you need to create the cluster.conf config (on CentOS its default location is /etc/cluster/cluster.conf). For the initial launch, this configuration is enough:

```xml
<?xml version="1.0"?>
<cluster config_version="1" name="cl1">
  <clusternodes>
    <clusternode name="node1" nodeid="1"/>
  </clusternodes>
</cluster>
```

Here node1 is the FQDN of the node, i.e. the name by which the other nodes will reach it.
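Since the other nodes reach each node by the name given in `clusternode name`, it can help to check that every such name resolves before starting cman. A minimal sketch, assuming each `<clusternode>` element sits on its own line; `node_names`, `check_node_names` and the `CLUSTER_CONF` override are hypothetical helpers of this sketch, not RHCS tools.

```sh
#!/bin/sh
# Hypothetical helper: print the name of every <clusternode> in a
# cluster.conf and check that it resolves via the system resolver.
# Assumes one <clusternode> element per line.
conf=${CLUSTER_CONF:-/etc/cluster/cluster.conf}

node_names() {
    # print the name="..." value of each <clusternode> line
    sed -n 's/.*<clusternode [^>]*name="\([^"]*\)".*/\1/p' "$1"
}

check_node_names() {
    for n in $(node_names "$1"); do
        if getent hosts "$n" >/dev/null; then
            echo "ok: $n"
        else
            echo "does not resolve: $n"
        fi
    done
}

if [ -r "$conf" ]; then
    check_node_names "$conf"
else
    echo "cannot read $conf" >&2
fi
```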

This is the only config I edit by hand from the console when setting up this system.

- Set a password for the ricci user. This user is created when the ricci package is installed and is used to connect the nodes in the luci web interface.

```sh
passwd ricci
```

- Start the services:

```sh
service cman start
service rgmanager start
service modclusterd start
service ricci start
service luci start
```
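The order of the start commands above matters: rgmanager relies on the membership and quorum that cman provides, so cman comes first. A minimal sketch that wraps them in a loop and stops at the first failure, assuming the stock CentOS 6 init scripts; `run`, `start_cluster` and `DRY_RUN` are names of this sketch only. luci is left out because it is better run on a separate host.

```sh
#!/bin/sh
# Start the cluster daemons in dependency order and stop at the first
# failure. DRY_RUN is a variable of this sketch, not of the init scripts.

run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"    # only print the command
    else
        "$@"
    fi
}

start_cluster() {
    # cman first: rgmanager needs the membership and quorum it provides
    for svc in cman rgmanager modclusterd ricci; do
        if ! run service "$svc" start; then
            echo "failed to start $svc" >&2
            return 1
        fi
    done
}
```

Source the file and call `start_cluster`, or `DRY_RUN=1 start_cluster` to only print the commands it would run.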

Note that it is better to install luci on a separate server that is not a member of the cluster, so that luci stays available when a node goes down.

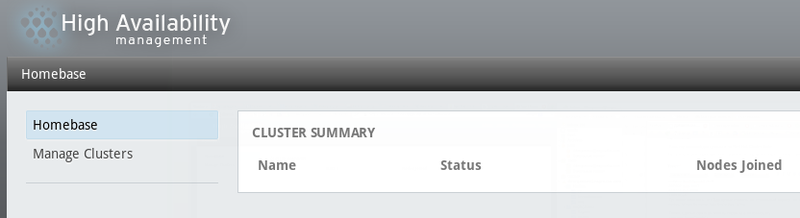

It is also convenient to enable the services at boot right away:

```sh
chkconfig ricci on
chkconfig cman on
chkconfig rgmanager on
chkconfig modclusterd on
chkconfig luci on
```

- Now you can log in to the luci web interface, which, if the service started properly, listens over HTTPS on port 8084. You can log in as root.

We see a rather nice web interface, in which we add our cluster from its single node: click Manage clusters -> Add, enter the node name and the ricci user's password, and click Add cluster. All that remains is to add the other nodes.

- To add a server as a node, it must have the "High Availability" package group installed and ricci running. Nodes are added on the cluster's Nodes tab by entering the server name and the ricci user's password. After a node is added, ricci synchronizes cluster.conf to it and then starts all the necessary services there.
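For reference, here is a sketch of what cluster.conf might look like once a second node has been added. The node names are illustrative, and luci manages the real file; the `<cman two_node="1" expected_votes="1"/>` line is what cman expects in a two-node cluster, so it is worth checking that the generated file contains it. That mode is only safe together with working fencing.

```sh
#!/bin/sh
# Example of a two-node cluster.conf, written to the current directory for
# inspection. File name and node names are illustrative assumptions.
out=./cluster.conf.two-node-example

cat > "$out" <<'EOF'
<?xml version="1.0"?>
<!-- config_version must be increased on every change to the file -->
<cluster config_version="2" name="cl1">
  <!-- with exactly two nodes, these settings let one surviving node keep
       quorum; this is safe only together with working fencing -->
  <cman two_node="1" expected_votes="1"/>
  <clusternodes>
    <clusternode name="node1" nodeid="1"/>
    <clusternode name="node2" nodeid="2"/>
  </clusternodes>
</cluster>
EOF

# Count the nodes declared in the example.
grep -c '<clusternode ' "$out"
```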

If there is interest, in the next article I can write about using shared storage and fencing on this system.

Source: https://habr.com/ru/post/175653/
