Common mistakes when developing lockfree-algorithms and their solutions

On Habré there have already been several articles about lock-free algorithms. This post is a translation of an article by my colleague that we plan to publish on our corporate blog. By type of activity, we write a huge number of lock-free algorithms and data structures, and this article wants to show how interesting and difficult it is at the same time.

This article is in many ways similar to this article , but in that article not all the problems that can be encountered while developing lock-free data structures are considered, and very little attention is paid to solving these problems. In this article, I would like to dwell in detail on some solutions that we use in the actual implementation of lock-free data structures in our product, and to pay more attention to evaluating performance.

By definition, the lock-free algorithm ensures that the time between two consecutive completed operations is limited on top by some value that does not depend on how the operating system distributes these flows over time. This guarantee sounds very nice, because threads can access the same memory section at the same time, and even if the operating system decides to temporarily put down one or more threads in the middle of performing some operation with this memory section, the remaining threads will continue to work. No matter how two or more threads intersect with each other in time, at least one of them will always end in a finite time (unlike wait-free, lock-free does not guarantee that all of them will end in a finite time).

')

Lock-free algorithms are usually contrasted with the traditional approach to writing multi-threaded applications using locks around code that accesses common memory locations. When a thread wants to access memory, it blocks access from other threads. If some other thread has blocked access earlier, the current thread waits until the lock is released. If the operating system decides to temporarily lull the thread that owns the lock, the entire system stops without the ability to change the total memory location.

Instead of using locks, lock-free algorithms use a command known as compare and swap (CAS). Its logic can be described with the following code snippet, with the assumption that CAS is executed atomically:

The main problems of lock-free algorithms are the following three:

1. Often the lock-free implementation is less practical than the implementation with locks.

2. Writing a lock-free code is not easy.

3. Writing the correct lock-free code is incredibly difficult.

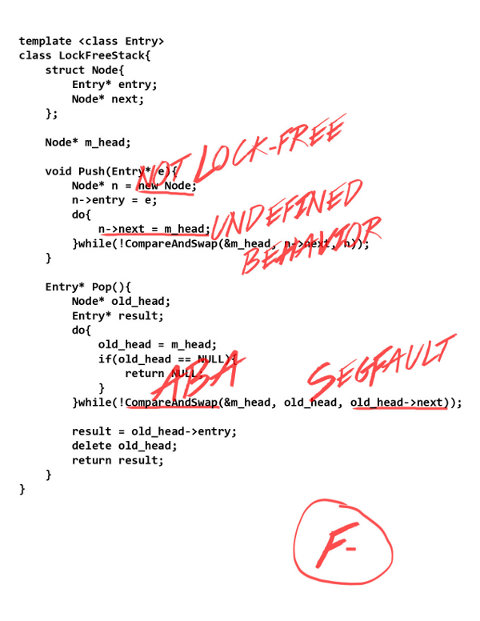

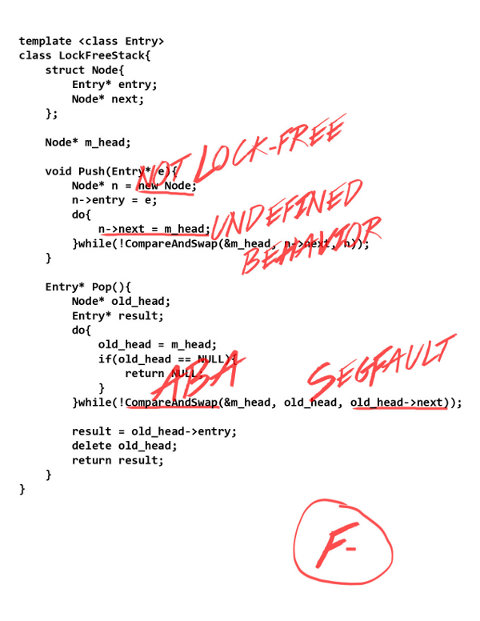

To prove point three, consider a simple example - the implementation of a stack on linked lists. If you ask a person not familiar with lock-free to write a stack, then its first implementation will be something like the following:

For the add to stack operation, we will create a new linked list element, the pointer to the next element of which points to the current top of the stack. Then try using CAS to swap the top of the stack for a new item. If the CAS succeeds, the item is inserted. If CAS returns with an error (which means that the top of the stack has changed between how we read it and how it completed CAS), we will repeat everything from the beginning.

For a delete operation from the stack, remember the current top of the stack, and, using CAS, try to change it to the value of its pointer to the next element. If the CAS was successful, the item was removed from the stack, otherwise the top of the stack was changed by another thread, and we try to remove it again.

In C ++, it will look something like this:

Unfortunately, this seemingly seemingly reasonable implementation of the lock-free stack contains a huge number of errors.

Even the simplest test of such a stack will quickly fall from a Segmentation Fault. The reason is that in line 22 we read a pointer to the current top of the stack. In stock 26, we refer to its next field. During the time between how we read the pointer and how we turned to its next field, a simultaneously running Pop could delete this item (line 29), thereby causing the memory read in drain 26 to be released.

For the correct operation of the algorithm requires some kind of secure memory management algorithm.

A successful CAS does not guarantee that during its execution the memory has not been changed. He guarantees that its value at the moment when he re-recorded it was equal to the value that was transmitted to it as expected. In other words, if you call CAS (ptr, 5, 6) at a time when the value in ptr is five, then another thread changes the value in ptr by seven, and then back to five, the CAS will succeed, reloading five six. This can lead to a problem known as an ABA problem. Consider the following example:

Suppose there are now two elements in the stack, A -> C.

Stream 1 calls Pop, reads 22 m_head (A) on line 22, reads old_head-> next (C) on line 26, and then the operating system puts the stream to sleep.

Stream 2 calls Pop, and successfully removes A.

Thread 2 calls Push, and successfully inserts element B.

Thread 2 calls Push again, and inserts an element located at the same address as A (either A was released, and then another element was brought to the same address, or the user decided to insert an element that he just took from the stack, back)

Stream 1 wakes up and causes CAS. CAS runs successfully, despite the fact that during this time m_head has changed three times! As a consequence, element B is lost.

Standard C ++ does not guarantee that new and delete are lock-free. What is the point of developing a lock-free algorithm if it calls library functions that are not lock-free? In order for the algorithm to be lock-free, working with memory must also be lock-free.

If the current value in some memory cell is 100, and one of the threads currently writes 200 to it, and the other stream reads its value (suppose that there are no other threads besides these two streams in the system). What value will read the second thread? It seems reasonable that he will read either 100 or 200. However, this is not so. In C ++, the described script leads to indefinite behavior, and the second thread can theoretically read 42. Before C ++ 11, people used volatile for variables that could be accessed simultaneously, but actually volatile should never be used for this purpose .

In our implementation, Push and Pop both read and write m_head, thus, each reading of m_head leads to indefinite behavior, and can return a value that has never been written to m_head.

It is well known that both the compiler and the processor can change the order in which commands are executed. Consider this example. Let x and y be both zero, and then the two threads execute the following code:

Stream 1: print x; x = 1; print y;

Stream 2: print y; y = 1; print x;

Suppose that both streams yielded two zeros. It follows from this that the first thread y brought up before the second thread assigned it a unit, what happened before the second thread printed x, what happened before the first thread assigned it a unit, what happened before the first thread brought y. That is, the first thread output y before it y output that does not make sense. However, such a scenario is possible, because both the compiler and the processor could change the order of reading and writing x and y in the streams.

In our implementation of the lock-free stack, we can see the value in the pointer to the next element, which was changed a long time ago, and, as a result, we can access the memory that was freed.

Most of the problems described have more than one solution. Here are the solutions that we use in our work for both the stack and other data structures.

Before removing a piece of memory, you need to make sure that no one reads this memory. The first thought that comes to mind is to use a reference counter, but this solution does not work: between how we got the address and how we increased the reference count, the memory could be freed. We use a solution called Hazard Pointers. In the case of a stack, each thread has a special pointer visible to everyone (let's call it “local pointer”), where the address of the element with which this thread is currently running is stored. Before deleting any element, the deleting thread is convinced that this element is not contained in any of the local pointers. If it is contained in local pointers, the item cannot be deleted. You can either wait and try again, or put the item in the queue for deletion and trust other threads to delete it later.

Every thread that wants to perform any operation at the top of the stack first saves the top of the stack to its local pointer, then makes sure that the item saved to the local pointer is still the top of the stack (it could be removed and the memory could be freed while we kept it to local pointer). After that, the thread can be sure that the memory occupied by the element will not be released.

A big disadvantage is that to remove an element, you need to scan an array, the size of which is equal to the number of threads in the system. One of the optimization options can be the following: instead of deleting an element, always put it in a queue, and only when the queue dials a sufficiently large number of elements for deletion (comparable to the number of threads), delete them all at once. In this case, you can copy the values of all local pointers at that time into some quick-look data structure (for example, a hash table), and use this data structure to check if the item can be deleted.

Data structures are more complex than the stack (linked lists, skiplists) may require more than one local pointer to a stream.

For an ABA problem to never occur, it is sufficient to ensure that m_head never takes the same value twice. To do this, you can store with a 64-bit pointer a number (let's call it a version), which is increased by one with each operation. In order to change the pointer and version at the same time, we need Double CAS, which is available on all modern processors.

To solve the problem with the institution of memory, you can simply not start the memory. In our code, we store the pointer to the next element directly in the structure of the element. But this is not always an acceptable solution, because it consumes 8 bytes for each element, even if the element is not on the stack.

We use boost :: atomic for variables that can be accessed from multiple threads. Although the compiler we are using (g ++ 4.6) already has an implementation of std :: atomic, it is much less efficient than boost :: atomic, because it uses memory_barrier after each operation, even if memory_barrier is not needed after it.

In C ++ 11, a new memory model was introduced and the memory access rules for atomic variables. For each read or write operation, you can specify which warranties are required. For calls to the top of the lock-free stack, we are forced to use CompareAndSwap with sequential consistency guarantee (this is the strictest guarantee, and, as a result, the slowest guarantee). Sequential consistency means that for all read and write operations performed by all threads for a certain period of time, there is an order such that each thread sees these operations as if they occurred in that order.

If you understand the C ++ 11 memory model, and try to analyze what warranties should be used to work with local pointers (hazard pointers), you can assume that aqcuire / release is sufficient.

We used acquire / release for some time, until one day the server fell on the successfully delivered assert. Detailed error analysis showed that when using acquire / release the following scenario is possible:

Thread 1 prepares to execute Pop and reads the top of the stack.

Thread 1 writes the top of the stack to its local pointer (using a release guarantee)

Thread 1 reads the top of the stack again, it has not changed

Thread 2 removes the top of the stack, and decides to remove it (or adds to the queue for deletion, and another thread decides to remove it — let's call the thread that deletes the element, Thread 3 )

Stream 3 reads an array of local pointers with a acquire guarantee. Here a breakdown occurs - it is not guaranteed that we see the change made by Thread 1 .

Stream 3 removes memory.

Thread 1 dereferences the saved top of the stack, which has been deleted, and drops from SegFault.

If you use the Sequential Consistency for both accessing local pointers and working with the top of the stack, such a scenario is not possible. Since the order of operations for all threads is the same, either Thread 2 removed the element from the top of the stack before Thread 1 checked it after writing to the local pointer (then Thread 1 will realize that the top of the stack has changed and start again), or Thread 1 successfully compared its local pointer with the top of the stack before Thread 2 removed the element from the top of the stack, which means that Thread 3 sees the top of the stack in the local pointer.

For this article we wrote two stack implementations: one lock-free, the other using std :: mutex. The test program ran several threads, and called Push and Pop with equal probability in an infinite loop. For measurements used Dell Precision T5500. The results are shown in the graph below. The X axis is the number of threads, the Y axis is the number of operations per second.

It doesn't look very impressive.

One of the problems is that, by its nature, the stack has a very narrow place — its top. All the streams fight to change it first, and the performance will be as high as there is a theoretical possibility to change the top. Push and Pop operations are so simple that even when using a single thread, they rest on the limit of the number of changes to the top of the stack per unit of time. Adding new threads only slows down overall performance, because now you need to synchronize changes to the top of the stack between threads.

Another problem is that when several threads try to change the top of the stack at the same time, only one of them will do it successfully, and all other threads will go to a new iteration. This is problem. As you know, every problem has a quick, beautiful but wrong solution. Such a solution for this problem would be to add usleep (250) after an unsuccessful CAS. This is a simple but not optimal way to make the threads intersect less when the top of the stack changes. The result may seem surprising - adding usleep (250) improves performance 10 times! In practice, we use slightly more complex approaches to reduce the number of unsuccessful CAS, but as you can see, even such a simple approach as usleep gives excellent results.

It is also interesting to look at how much resources various implementations consume:

This is what HTOP looks like to run with a lock-free stack without usleep in 16 threads:

As you can see, the processor is 100% busy. This is due to the fact that all threads in the loop are trying to change the top of the stack, again and again performing a new iteration, completely consuming the processor time available to them.

This is what HTOP looks like to run with a lock-free stack with usleep in 16 threads:

The processor is almost idle. The threads that failed to immediately change the top, sleep without occupying the processor. This is an amazing observation - adding usleep not only increased the useful performance 10 times, but also significantly reduced the consumption of processor time.

This is what HTOP looks like to run with a stack with locks in 16 threads:

Here you can clearly see that the processor is fully occupied by system calls. In other words, almost all the CPU time is spent on working with locks.

Lock-free ensures that the system will always perform useful work, even if some threads have been suspended by the operating system. But lock-free does not guarantee that this will be achieved effectively. In addition, lock-free algorithms often contain errors that are not always easy to notice or reproduce. The simple implementation of the stack at the beginning of this article contained five different errors, and in performance was inferior to the implementation with locks. If you want to use lock-free algorithms, make sure they are worth it, both in terms of performance and in terms of implementation complexity. Try not to invent your own lock-free algorithms, but find ready-made implementations or scientific work.

The benchmark code is also available on github (if you want to run the test locally):

https://github.com/memsql/lockfree-bench

At the suggestion, lostmsu added a spinlock stack to the benchmark instead of std :: mutex. For the purity of the experiment, I used spinlock, which, like the lockfree implementation, sleeps for 250 milliseconds if it fails to lock the lock on the first attempt. Not unexpectedly, such an implementation turned out to be more productive for both the lock-free implementation and the implementation with std :: mutex. The processor consumption is visually the same as that of the lock-free implementation with usleep (250).

The githaba repository has also been updated with a new implementation.

This article is in many ways similar to this article , but in that article not all the problems that can be encountered while developing lock-free data structures are considered, and very little attention is paid to solving these problems. In this article, I would like to dwell in detail on some solutions that we use in the actual implementation of lock-free data structures in our product, and to pay more attention to evaluating performance.

By definition, the lock-free algorithm ensures that the time between two consecutive completed operations is limited on top by some value that does not depend on how the operating system distributes these flows over time. This guarantee sounds very nice, because threads can access the same memory section at the same time, and even if the operating system decides to temporarily put down one or more threads in the middle of performing some operation with this memory section, the remaining threads will continue to work. No matter how two or more threads intersect with each other in time, at least one of them will always end in a finite time (unlike wait-free, lock-free does not guarantee that all of them will end in a finite time).

')

Lock-free algorithms are usually contrasted with the traditional approach to writing multi-threaded applications using locks around code that accesses common memory locations. When a thread wants to access memory, it blocks access from other threads. If some other thread has blocked access earlier, the current thread waits until the lock is released. If the operating system decides to temporarily lull the thread that owns the lock, the entire system stops without the ability to change the total memory location.

Instead of using locks, lock-free algorithms use a command known as compare and swap (CAS). Its logic can be described with the following code snippet, with the assumption that CAS is executed atomically:

1 bool CompareAndSwap(Value* addr, Value oldVal, Value newVal){ 2 if(*addr == oldVal){ 3 *addr = newVal; 4 return true; 5 }else{ 6 return false; 7 } 8 } The main problems of lock-free algorithms are the following three:

1. Often the lock-free implementation is less practical than the implementation with locks.

2. Writing a lock-free code is not easy.

3. Writing the correct lock-free code is incredibly difficult.

To prove point three, consider a simple example - the implementation of a stack on linked lists. If you ask a person not familiar with lock-free to write a stack, then its first implementation will be something like the following:

For the add to stack operation, we will create a new linked list element, the pointer to the next element of which points to the current top of the stack. Then try using CAS to swap the top of the stack for a new item. If the CAS succeeds, the item is inserted. If CAS returns with an error (which means that the top of the stack has changed between how we read it and how it completed CAS), we will repeat everything from the beginning.

For a delete operation from the stack, remember the current top of the stack, and, using CAS, try to change it to the value of its pointer to the next element. If the CAS was successful, the item was removed from the stack, otherwise the top of the stack was changed by another thread, and we try to remove it again.

In C ++, it will look something like this:

1 template <class Entry> 2 class LockFreeStack{ 3 struct Node{ 4 Entry* entry; 5 Node* next; 6 }; 7 8 Node* m_head; 9 10 void Push(Entry* e){ 11 Node* n = new Node; 12 n->entry = e; 13 do{ 14 n->next = m_head; 15 }while(!CompareAndSwap(&m_head, n->next, n)); 16 } 17 18 Entry* Pop(){ 19 Node* old_head; 20 Entry* result; 21 do{ 22 old_head = m_head; 23 if(old_head == NULL){ 24 return NULL; 25 } 26 }while(!CompareAndSwap(&m_head, old_head, old_head->next)); 27 28 result = old_head->entry; 29 delete old_head; 30 return result; 31 } 32 } Unfortunately, this seemingly seemingly reasonable implementation of the lock-free stack contains a huge number of errors.

Segfault

Even the simplest test of such a stack will quickly fall from a Segmentation Fault. The reason is that in line 22 we read a pointer to the current top of the stack. In stock 26, we refer to its next field. During the time between how we read the pointer and how we turned to its next field, a simultaneously running Pop could delete this item (line 29), thereby causing the memory read in drain 26 to be released.

For the correct operation of the algorithm requires some kind of secure memory management algorithm.

Item loss

A successful CAS does not guarantee that during its execution the memory has not been changed. He guarantees that its value at the moment when he re-recorded it was equal to the value that was transmitted to it as expected. In other words, if you call CAS (ptr, 5, 6) at a time when the value in ptr is five, then another thread changes the value in ptr by seven, and then back to five, the CAS will succeed, reloading five six. This can lead to a problem known as an ABA problem. Consider the following example:

Suppose there are now two elements in the stack, A -> C.

Stream 1 calls Pop, reads 22 m_head (A) on line 22, reads old_head-> next (C) on line 26, and then the operating system puts the stream to sleep.

Stream 2 calls Pop, and successfully removes A.

Thread 2 calls Push, and successfully inserts element B.

Thread 2 calls Push again, and inserts an element located at the same address as A (either A was released, and then another element was brought to the same address, or the user decided to insert an element that he just took from the stack, back)

Stream 1 wakes up and causes CAS. CAS runs successfully, despite the fact that during this time m_head has changed three times! As a consequence, element B is lost.

Not lock-free

Standard C ++ does not guarantee that new and delete are lock-free. What is the point of developing a lock-free algorithm if it calls library functions that are not lock-free? In order for the algorithm to be lock-free, working with memory must also be lock-free.

Simultaneous memory access

If the current value in some memory cell is 100, and one of the threads currently writes 200 to it, and the other stream reads its value (suppose that there are no other threads besides these two streams in the system). What value will read the second thread? It seems reasonable that he will read either 100 or 200. However, this is not so. In C ++, the described script leads to indefinite behavior, and the second thread can theoretically read 42. Before C ++ 11, people used volatile for variables that could be accessed simultaneously, but actually volatile should never be used for this purpose .

In our implementation, Push and Pop both read and write m_head, thus, each reading of m_head leads to indefinite behavior, and can return a value that has never been written to m_head.

Memory access order

It is well known that both the compiler and the processor can change the order in which commands are executed. Consider this example. Let x and y be both zero, and then the two threads execute the following code:

Stream 1: print x; x = 1; print y;

Stream 2: print y; y = 1; print x;

Suppose that both streams yielded two zeros. It follows from this that the first thread y brought up before the second thread assigned it a unit, what happened before the second thread printed x, what happened before the first thread assigned it a unit, what happened before the first thread brought y. That is, the first thread output y before it y output that does not make sense. However, such a scenario is possible, because both the compiler and the processor could change the order of reading and writing x and y in the streams.

In our implementation of the lock-free stack, we can see the value in the pointer to the next element, which was changed a long time ago, and, as a result, we can access the memory that was freed.

So how do you write a lock-free stack?

Most of the problems described have more than one solution. Here are the solutions that we use in our work for both the stack and other data structures.

Segfault

Before removing a piece of memory, you need to make sure that no one reads this memory. The first thought that comes to mind is to use a reference counter, but this solution does not work: between how we got the address and how we increased the reference count, the memory could be freed. We use a solution called Hazard Pointers. In the case of a stack, each thread has a special pointer visible to everyone (let's call it “local pointer”), where the address of the element with which this thread is currently running is stored. Before deleting any element, the deleting thread is convinced that this element is not contained in any of the local pointers. If it is contained in local pointers, the item cannot be deleted. You can either wait and try again, or put the item in the queue for deletion and trust other threads to delete it later.

Every thread that wants to perform any operation at the top of the stack first saves the top of the stack to its local pointer, then makes sure that the item saved to the local pointer is still the top of the stack (it could be removed and the memory could be freed while we kept it to local pointer). After that, the thread can be sure that the memory occupied by the element will not be released.

A big disadvantage is that to remove an element, you need to scan an array, the size of which is equal to the number of threads in the system. One of the optimization options can be the following: instead of deleting an element, always put it in a queue, and only when the queue dials a sufficiently large number of elements for deletion (comparable to the number of threads), delete them all at once. In this case, you can copy the values of all local pointers at that time into some quick-look data structure (for example, a hash table), and use this data structure to check if the item can be deleted.

Data structures are more complex than the stack (linked lists, skiplists) may require more than one local pointer to a stream.

Item loss

For an ABA problem to never occur, it is sufficient to ensure that m_head never takes the same value twice. To do this, you can store with a 64-bit pointer a number (let's call it a version), which is increased by one with each operation. In order to change the pointer and version at the same time, we need Double CAS, which is available on all modern processors.

Not lock-free

To solve the problem with the institution of memory, you can simply not start the memory. In our code, we store the pointer to the next element directly in the structure of the element. But this is not always an acceptable solution, because it consumes 8 bytes for each element, even if the element is not on the stack.

Simultaneous memory access

We use boost :: atomic for variables that can be accessed from multiple threads. Although the compiler we are using (g ++ 4.6) already has an implementation of std :: atomic, it is much less efficient than boost :: atomic, because it uses memory_barrier after each operation, even if memory_barrier is not needed after it.

Memory access order

In C ++ 11, a new memory model was introduced and the memory access rules for atomic variables. For each read or write operation, you can specify which warranties are required. For calls to the top of the lock-free stack, we are forced to use CompareAndSwap with sequential consistency guarantee (this is the strictest guarantee, and, as a result, the slowest guarantee). Sequential consistency means that for all read and write operations performed by all threads for a certain period of time, there is an order such that each thread sees these operations as if they occurred in that order.

If you understand the C ++ 11 memory model, and try to analyze what warranties should be used to work with local pointers (hazard pointers), you can assume that aqcuire / release is sufficient.

What is acquire / release

A acquire / release guarantee can be described in the following scenario: if Stream 1 changed variable A with a release guarantee, then variable B with a release guarantee, and Stream 2 read B with a guarantee of acquire, and saw the value recorded by Stream 1, then it is guaranteed that then Stream 2 reads A with a guarantee of purchase, then it will see the change made by Stream 1.

We used acquire / release for some time, until one day the server fell on the successfully delivered assert. Detailed error analysis showed that when using acquire / release the following scenario is possible:

Thread 1 prepares to execute Pop and reads the top of the stack.

Thread 1 writes the top of the stack to its local pointer (using a release guarantee)

Thread 1 reads the top of the stack again, it has not changed

Thread 2 removes the top of the stack, and decides to remove it (or adds to the queue for deletion, and another thread decides to remove it — let's call the thread that deletes the element, Thread 3 )

Stream 3 reads an array of local pointers with a acquire guarantee. Here a breakdown occurs - it is not guaranteed that we see the change made by Thread 1 .

Stream 3 removes memory.

Thread 1 dereferences the saved top of the stack, which has been deleted, and drops from SegFault.

If you use the Sequential Consistency for both accessing local pointers and working with the top of the stack, such a scenario is not possible. Since the order of operations for all threads is the same, either Thread 2 removed the element from the top of the stack before Thread 1 checked it after writing to the local pointer (then Thread 1 will realize that the top of the stack has changed and start again), or Thread 1 successfully compared its local pointer with the top of the stack before Thread 2 removed the element from the top of the stack, which means that Thread 3 sees the top of the stack in the local pointer.

Performance

For this article we wrote two stack implementations: one lock-free, the other using std :: mutex. The test program ran several threads, and called Push and Pop with equal probability in an infinite loop. For measurements used Dell Precision T5500. The results are shown in the graph below. The X axis is the number of threads, the Y axis is the number of operations per second.

It doesn't look very impressive.

One of the problems is that, by its nature, the stack has a very narrow place — its top. All the streams fight to change it first, and the performance will be as high as there is a theoretical possibility to change the top. Push and Pop operations are so simple that even when using a single thread, they rest on the limit of the number of changes to the top of the stack per unit of time. Adding new threads only slows down overall performance, because now you need to synchronize changes to the top of the stack between threads.

Another problem is that when several threads try to change the top of the stack at the same time, only one of them will do it successfully, and all other threads will go to a new iteration. This is problem. As you know, every problem has a quick, beautiful but wrong solution. Such a solution for this problem would be to add usleep (250) after an unsuccessful CAS. This is a simple but not optimal way to make the threads intersect less when the top of the stack changes. The result may seem surprising - adding usleep (250) improves performance 10 times! In practice, we use slightly more complex approaches to reduce the number of unsuccessful CAS, but as you can see, even such a simple approach as usleep gives excellent results.

It is also interesting to look at how much resources various implementations consume:

This is what HTOP looks like to run with a lock-free stack without usleep in 16 threads:

As you can see, the processor is 100% busy. This is due to the fact that all threads in the loop are trying to change the top of the stack, again and again performing a new iteration, completely consuming the processor time available to them.

This is what HTOP looks like to run with a lock-free stack with usleep in 16 threads:

The processor is almost idle. The threads that failed to immediately change the top, sleep without occupying the processor. This is an amazing observation - adding usleep not only increased the useful performance 10 times, but also significantly reduced the consumption of processor time.

This is what HTOP looks like to run with a stack with locks in 16 threads:

Here you can clearly see that the processor is fully occupied by system calls. In other words, almost all the CPU time is spent on working with locks.

Conclusion

Lock-free ensures that the system will always perform useful work, even if some threads have been suspended by the operating system. But lock-free does not guarantee that this will be achieved effectively. In addition, lock-free algorithms often contain errors that are not always easy to notice or reproduce. The simple implementation of the stack at the beginning of this article contained five different errors, and in performance was inferior to the implementation with locks. If you want to use lock-free algorithms, make sure they are worth it, both in terms of performance and in terms of implementation complexity. Try not to invent your own lock-free algorithms, but find ready-made implementations or scientific work.

Code

Source

With locks:

Without locks (there is no logic in the code responsible for clearing the memory)

1 #include <mutex> 2 #include <stack> 3 4 template<class T> 5 class LockedStack{ 6 public: 7 void Push(T* entry){ 8 std::lock_guard<std::mutex> lock(m_mutex); 9 m_stack.push(entry); 10 } 11 12 // For compatability with the LockFreeStack interface, 13 // add an unused int parameter. 14 // 15 T* Pop(int){ 16 std::lock_guard<std::mutex> lock(m_mutex); 17 if(m_stack.empty()){ 18 return nullptr; 19 } 20 T* ret = m_stack.top(); 21 m_stack.pop(); 22 return ret; 23 } 24 25 private: 26 std::stack<T*> m_stack; 27 std::mutex m_mutex; 28 }; Without locks (there is no logic in the code responsible for clearing the memory)

1 class LockFreeStack{ 2 public: 3 // Node 4 // 5 struct Node{ 6 boost::atomic<Node*> next; 7 }; 8 9 10 // , - . 11 // gcc x86_64. 12 // 13 class TaggedPointer{ 14 public: 15 TaggedPointer(): m_node(nullptr), m_counter(0) {} 16 17 Node* GetNode(){ 18 return m_node.load(boost::memory_order_acquire); 19 } 20 21 uint64_t GetCounter(){ 22 return m_counter.load(boost::memory_order_acquire); 23 } 24 25 bool CompareAndSwap(Node* oldNode, uint64_t oldCounter, Node* newNode, uint64_t newCounter){ 26 bool cas_result; 27 __asm__ __volatile__ 28 ( 29 "lock;" 30 "cmpxchg16b %0;" 31 "setz %3;" 32 33 : "+m" (*this), "+a" (oldNode), "+d" (oldCounter), "=q" (cas_result) 34 : "b" (newNode), "c" (newCounter) 35 : "cc", "memory" 36 ); 37 return cas_result; 38 } 39 private: 40 boost::atomic<Node*> m_node; 41 boost::atomic<uint64_t> m_counter; 42 } 43 // 16 double cas 44 // 45 __attribute__((aligned(16))); 46 47 48 bool TryPushStack(Node* entry){ 49 Node* oldHead; 50 uint64_t oldCounter; 51 52 oldHead = m_head.GetNode(); 53 oldCounter = m_head.GetCounter(); 54 entry->next.store(oldHead, boost::memory_order_relaxed); 55 return m_head.CompareAndSwap(oldHead, oldCounter, entry, oldCounter + 1); 56 } 57 58 bool TryPopStack(Node*& oldHead, int threadId){ 59 oldHead = m_head.GetNode(); 60 uint64_t oldCounter = m_head.GetCounter(); 61 if(oldHead == nullptr){ 62 return true; 63 } 64 m_hazard[threadId*8].store(oldHead, boost::memory_order_seq_cst); 65 if(m_head.GetNode() != oldHead){ 66 return false; 67 } 68 return m_head.CompareAndSwap(oldHead, oldCounter, oldHead->next.load(boost::memory_order_acquire), oldCounter + 1); 69 } 70 71 void Push(Node* entry){ 72 while(true){ 73 if(TryPushStack(entry)){ 74 return; 75 } 76 usleep(250); 77 } 78 } 79 80 Node* Pop(int threadId){ 81 Node* res; 82 while(true){ 83 if(TryPopStack(res, threadId)){ 84 return res; 85 } 86 usleep(250); 87 } 88 } 89 90 private: 91 TaggedPointer m_head; 92 // 93 // -, . 94 // 95 boost::atomic<Node*> m_hazard[MAX_THREADS*8]; 96 }; The benchmark code is also available on github (if you want to run the test locally):

https://github.com/memsql/lockfree-bench

Update:

At the suggestion, lostmsu added a spinlock stack to the benchmark instead of std :: mutex. For the purity of the experiment, I used spinlock, which, like the lockfree implementation, sleeps for 250 milliseconds if it fails to lock the lock on the first attempt. Not unexpectedly, such an implementation turned out to be more productive for both the lock-free implementation and the implementation with std :: mutex. The processor consumption is visually the same as that of the lock-free implementation with usleep (250).

The githaba repository has also been updated with a new implementation.

Source: https://habr.com/ru/post/174369/

All Articles