The concept of network virtualization based on Windows Server 2012 and System Center 2012 SP1

Good day, dear colleagues!

Not long ago we held an event at the Microsoft office in Krylatsky dedicated to System Center 2012 SP1, where many of the new features introduced in the service pack were presented. My colleagues and I later analyzed the event, looked at how people were getting on with SP1, and came to the conclusion that network virtualization, as a concept, is poorly understood by the ordinary system administrator. I do not mean the technology in general, but how it is implemented in Microsoft products and which components are responsible for network virtualization in Windows Server 2012 and System Center 2012 SP1.

Several posts on network virtualization have recently appeared on Habr, but we decided to give the topic special attention, since it is genuinely complex and critically important.

So, let's take everything in order.

Network virtualization is a mechanism that lets you move from the physical level of working with the network to the logical level, that is, to the level of software mechanisms. Virtualization is usually also associated with consolidation, but here consolidation has a slightly different character: it means the ability to create multiple virtual networks on top of a common transmission medium, in other words, on top of ordinary network adapters. This lets you spread the infrastructure across a very large amount of equipment. The solution suits providers, or large companies with complex heterogeneous infrastructure, more than medium-sized companies.

Virtual networks are good, of course, but what about security? Scalability is not an idle question for such a technology either. This is why network virtualization includes the concept of isolation: simply put, a mechanism that lets many isolated networks run on top of a common physical channel in such a way that none of them knows about the others, and each behaves as if it had its own dedicated physical channel. This is a very important point, because it makes popular trends such as BYOIP (Bring Your Own IP) and BYON (Bring Your Own Network) achievable in practice. These approaches are of interest primarily to providers. BYOIP is the ability to carry over and preserve all network addressing when migrating infrastructure to a cloud based on System Center 2012 SP1 and Windows Server 2012. BYON additionally lets you carry over the entire network configuration, because the network is virtualized (the network itself and its components: gateways, addresses, virtual adapters, logical switches, the classification of network adapter ports by certain parameters, and so on). These are fairly complex scenarios, though, so for now let's deal with the basics; we will get to the more complex material in upcoming posts on Habr.
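To make the idea of isolation more tangible, here is a minimal conceptual sketch in Python. It is not the Windows Server or VMM API: the class names, the numeric network IDs and the VM names are all assumptions made up for illustration. It only shows that two tenants can keep the same IP address (BYOIP) on a shared fabric because every lookup is scoped to the sender's own virtual network.

```python
# Conceptual model only: this is not the Windows Server or VMM API.
from dataclasses import dataclass, field


@dataclass
class VirtualNetwork:
    """One isolated tenant network with its own (possibly overlapping) addresses."""
    network_id: int                              # hypothetical isolation ID
    hosts: dict = field(default_factory=dict)    # tenant IP -> VM name

    def attach(self, ip: str, vm_name: str) -> None:
        self.hosts[ip] = vm_name


class SharedFabric:
    """The common physical channel that carries every virtual network."""

    def __init__(self) -> None:
        self.networks = {}

    def add_network(self, network: VirtualNetwork) -> None:
        self.networks[network.network_id] = network

    def deliver(self, network_id: int, dst_ip: str):
        """Resolve a destination strictly inside the sender's own network."""
        return self.networks[network_id].hosts.get(dst_ip)


fabric = SharedFabric()
tenant_a = VirtualNetwork(network_id=5001)
tenant_b = VirtualNetwork(network_id=5002)
tenant_a.attach("10.0.0.5", "a-web01")
tenant_b.attach("10.0.0.5", "b-sql01")   # same address, different tenant
tenant_b.attach("10.0.0.6", "b-web01")
fabric.add_network(tenant_a)
fabric.add_network(tenant_b)

print(fabric.deliver(5001, "10.0.0.5"))  # a-web01
print(fabric.deliver(5002, "10.0.0.5"))  # b-sql01
print(fabric.deliver(5001, "10.0.0.6"))  # None: tenant A cannot see tenant B
```

The real mechanism does considerably more than a dictionary lookup, but the scoping rule is the part that matters for understanding why overlapping address plans do not collide.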

Before describing the individual infrastructure elements, and so as not to confuse the reader further, I suggest first getting acquainted with the conceptual layout of the network components from the point of view of network virtualization and the capabilities of Windows Server 2012 and System Center 2012 SP1.

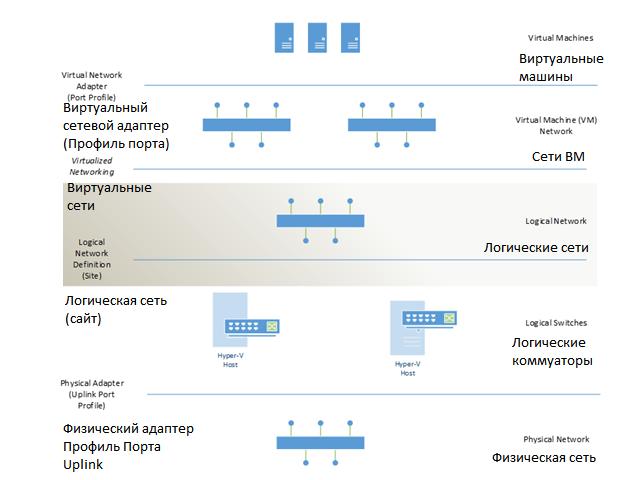

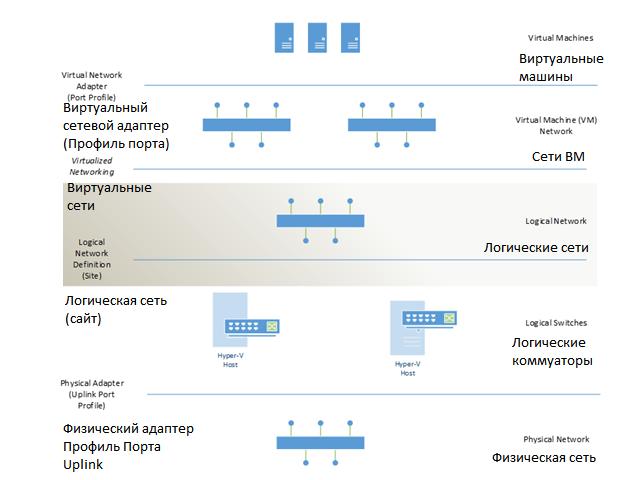

Figure 1. Schematic diagram of network virtualization

I deliberately kept the English names, since many of them will come in handy when working with Windows Server and System Center; a translation is provided, so everything should be clear. Looking at the diagram, let's briefly describe how such a network operates, from the physical layer up to the client level (here, the VM level). At the lowest level, as expected, is the physical layer: the transmission medium itself, the hardware infrastructure, the physical channels, the network. Call it what you like.

Next come the Hyper-V virtualization hosts, which include two important elements: logical switches and uplink port profiles on the physical network adapter. A Hyper-V host is thus, on the one hand, the interface to the physical medium and, on the other, the place where the network is virtualized and interfaces are provided to VMs. Since a logical switch is a set of Hyper-V virtual switches with identical settings, we can say that the logical switches together represent a logical network. The configuration of logical switches that makes up a logical network can, in turn, be treated as a site (a unified, continuous network space).

One level higher, the virtualization of the network (or rather, of networks) begins in earnest. The next level is the VM network. VM networks are the layer that can isolate their own infrastructure: this layer lets us create isolated virtual networks that have no access to each other. In effect, each network behaves as if it were the only physical network in its environment.

On top of the networks sit the virtual machines, which communicate with the network through a virtual network adapter. From the management and deployment side (System Center 2012 SP1 VMM), there are port profiles, which are applied to the virtual machines' network adapters and give them the necessary network settings.
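The layering described above can be sketched as a handful of Python data classes. This is only a reading aid for Figure 1, not the VMM object model: every class, field and sample name below is an assumption chosen for illustration.

```python
# Conceptual model of the layers in Figure 1; not the VMM object model.
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalNetwork:
    name: str


@dataclass(frozen=True)
class LogicalSwitch:
    name: str
    logical_network: LogicalNetwork
    uplink_profile: str          # applied to the host's physical adapter


@dataclass(frozen=True)
class VMNetwork:
    name: str
    logical_network: LogicalNetwork
    isolated: bool = True


@dataclass(frozen=True)
class VirtualAdapter:
    vm_name: str
    vm_network: VMNetwork
    port_profile: str


def path_to_physical(adapter: VirtualAdapter, switch: LogicalSwitch) -> list:
    """List the layers a VM's traffic crosses, from the VM down to the wire."""
    if adapter.vm_network.logical_network != switch.logical_network:
        raise ValueError("VM network and logical switch use different logical networks")
    return [
        f"VM {adapter.vm_name} (port profile: {adapter.port_profile})",
        f"VM network {adapter.vm_network.name}",
        f"logical network {switch.logical_network.name}",
        f"logical switch {switch.name} (uplink: {switch.uplink_profile})",
        "physical adapter",
    ]


datacenter = LogicalNetwork("Datacenter")
switch = LogicalSwitch("Switch-Prod", datacenter, "Uplink-10G")
tenant_net = VMNetwork("Tenant-A", datacenter)
nic = VirtualAdapter("web01", tenant_net, "Web-Traffic")

for layer in path_to_physical(nic, switch):
    print(layer)
```

Reading the printed list from top to bottom follows the diagram from the client level down to the physical layer.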

That, in brief, is how it works. Now let's take a closer look at which new features in VMM 2012 SP1 help us in our work.

Now let's look at the components of VMM 2012 SP1 in light of the above.

So, in order of appearance in VMM:

1) Logical networks. In the context of VMM, a logical network is a prerequisite for creating VM networks: it is the mechanism that virtualizes the hosts' network and presents it as a single continuous network space, on top of which isolated virtual networks can be "carved out". When we set up network virtualization in VMM 2012 SP1, this is always the first object we create.

Figure 2. Logical networks in VMM 2012 SP1

The network virtualization mechanism itself is built into Windows Server 2012. Windows Server 2012 is therefore the only Microsoft OS that provides network virtualization without the notorious VLAN method, while still letting end users use VLANs within their own virtual network. The feature is supported at the level of the network adapter driver, so do not forget to enable it on the physical adapter on top of which you will create the virtual switch.

Figure 3. Enabling the network virtualization filter driver in Windows Server 2012

Next, we simply create the logical network itself; once the driver has been enabled, we can also use network virtualization to isolate the networks built on it.

Figure 4. Creating a logical network with the possibility of the subsequent creation of isolated VM networks

2) VM networks. In the simplest network virtualization setup, VM networks are all you need to carve out a (figuratively) unlimited number of isolated networks. A VM network is essentially a logical object built on top of a logical network and offered as the network infrastructure for data exchange between VMs. We can now create isolated virtual networks (VM networks) for dedicated, isolated infrastructures.

Figure 5. Creating VM networks in SC VMM 2012 SP1

As you can see, isolation can be applied to IPv4 and IPv6 addresses separately or together. A network can also be created without isolation; in that case the scope of the VM network coincides with that of the logical network. Such VM networks are used to provide access to VMM infrastructure objects and the private cloud.

3) MAC address pools. This mechanism is interesting from the point of view of infrastructure management and deployment. Simply put, each hardware manufacturer has its own range of MAC addresses that it assigns to its devices. Because hardware from the same vendor generally uses similar drivers and components, this lets you automate the from-scratch deployment of Hyper-V clusters, including the components required for each category of server. Suppose we have three hardware vendors: Dell, IBM and HP.

Figure 6. MAC address pools

We can prepare a deployment image for each group of servers, which eliminates the risk of host misconfiguration; the MAC addresses ensure that each image, with its drivers and components, matches the equipment it is deployed to.
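The matching logic described here can be sketched roughly as follows. This is a conceptual Python illustration, not VMM code: the address ranges are invented (locally administered addresses, not real vendor prefixes), and the image file names and the `image_for` helper are hypothetical.

```python
# Conceptual sketch: choose a deployment image by the pool a MAC address falls into.
# The ranges below are made up and are NOT real vendor prefixes.
from dataclasses import dataclass
from typing import Optional


def mac_to_int(mac: str) -> int:
    """Convert 'AA:BB:CC:DD:EE:FF' or 'AA-BB-CC-DD-EE-FF' to an integer."""
    return int(mac.replace(":", "").replace("-", ""), 16)


@dataclass(frozen=True)
class MacPool:
    vendor: str
    start: str
    end: str
    image: str      # hypothetical deployment image for this server group

    def contains(self, mac: str) -> bool:
        return mac_to_int(self.start) <= mac_to_int(mac) <= mac_to_int(self.end)


POOLS = [
    MacPool("Dell", "02:00:00:00:00:00", "02:00:00:FF:FF:FF", "hyperv-dell.vhdx"),
    MacPool("IBM", "02:00:01:00:00:00", "02:00:01:FF:FF:FF", "hyperv-ibm.vhdx"),
    MacPool("HP", "02:00:02:00:00:00", "02:00:02:FF:FF:FF", "hyperv-hp.vhdx"),
]


def image_for(mac: str, pools=POOLS) -> Optional[str]:
    """Return the image of the first pool containing the address, if any."""
    for pool in pools:
        if pool.contains(mac):
            return pool.image
    return None


print(image_for("02:00:01:12:34:56"))  # hyperv-ibm.vhdx
print(image_for("02-00-02-AB-CD-EF"))  # hyperv-hp.vhdx
print(image_for("02:00:09:00:00:01"))  # None: no pool matches
```

Returning `None` for an unknown address, rather than falling back to a default image, mirrors the goal stated above: a host should never receive an image that was not prepared for its hardware.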

4) Load balancers. These can be either hardware or virtual infrastructure elements that provide both fault tolerance and increased throughput for a service running on virtual machines. In other words, an ordinary load balancer. Out of the box, VMM supports Microsoft NLB (who would have doubted it?), while third-party balancers are supported through a provider: for example, you can install Citrix NetScaler and use it. Just remember that before using a balancer you must install its provider on the VMM server and restart the VMM service. You will also need the access credentials for your balancer.

Figure 7. Registering a Citrix NetScaler Balancer

5) VIP (Virtual IP) profiles. A load balancer is useful, but on its own it does not achieve much here. Combined with VIP profiles and used when deploying and scaling services, however, it becomes an excellent tool. A VIP profile defines the virtual IP address that your service receives when it is deployed from a VMM template. This address hides all the components of the service infrastructure (web server, DBMS and others), which makes it possible to organize a farm or cluster within the service to address availability and scalability. When we deploy a service from a template and use balancers with VIP profiles, we can create the necessary service components (as VMs) on the fly and immediately add them to the common pool. The VIP template essentially virtualizes the externally facing IP address, the channel through which users reach the service.

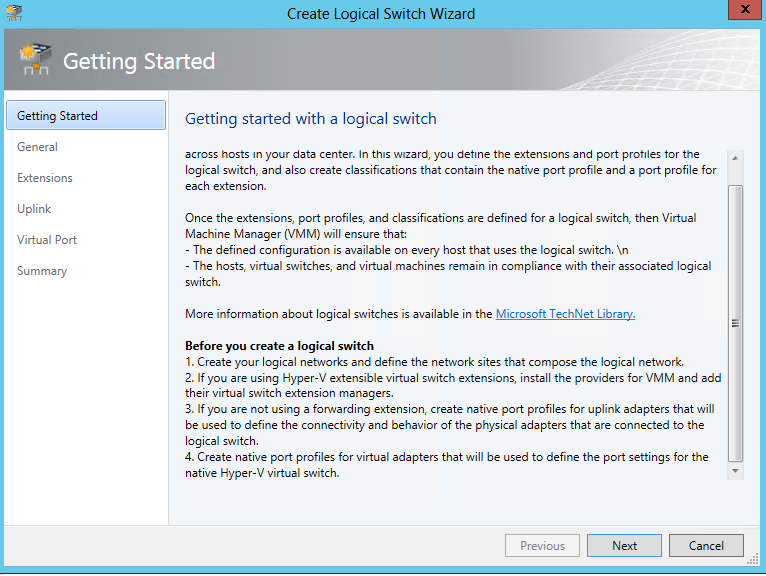
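The way a virtual IP hides a growing pool of service VMs can be sketched in a few lines of Python. This is a toy round-robin model under our own assumptions: the `VipService` class, the addresses and the balancing policy are illustrative and say nothing about how Microsoft NLB or Citrix NetScaler actually distribute traffic.

```python
# Toy model: one virtual IP in front of a pool of service VMs (round-robin).
class VipService:
    def __init__(self, vip: str) -> None:
        self.vip = vip          # the only address clients ever see
        self.members = []       # addresses of the VMs behind the VIP
        self._next = 0

    def add_member(self, address: str) -> None:
        """Scale out: a newly deployed VM joins the pool immediately."""
        self.members.append(address)

    def route(self) -> str:
        """Pick the VM that will serve the next request sent to the VIP."""
        if not self.members:
            raise RuntimeError("no service instances behind the VIP")
        member = self.members[self._next % len(self.members)]
        self._next += 1
        return member


service = VipService("192.0.2.10")
service.add_member("10.0.0.11")
service.add_member("10.0.0.12")

first = service.route()
second = service.route()
service.add_member("10.0.0.13")   # added on the fly; clients still use the VIP
third = service.route()

print(service.vip, "->", first, second, third)
```

Clients keep talking to `192.0.2.10` throughout; only the pool behind it changes, which is the property that makes scaling a service transparent to its users.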

6) Logical switches. Before discussing them in more detail, let's look at the picture below:

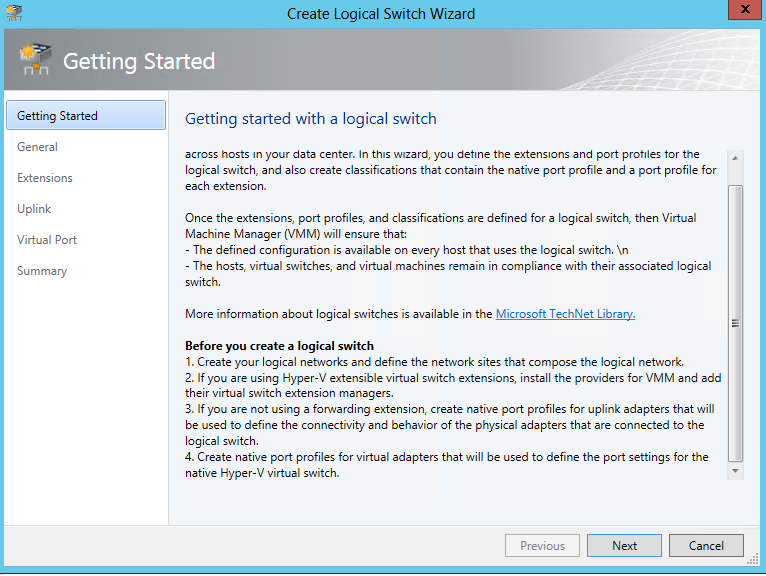

Figure 8. Requirements for creating a logical switch in VMM

It follows from the picture that a logical switch cannot be created out of nothing; a number of conditions must be met:

a) First, we need to create a logical network and VM networks, which we have already covered.

b) Next, we need to decide whether we will use the Hyper-V virtual switch extensions in Windows Server 2012; if so, we must register a provider in VMM to work with them. These extensions give the switch advanced capabilities, such as traffic filtering or additional features supplied by third-party add-ons.

c) If we do not plan to use the previous scenario, either with WS2012 or with third-party intelligent forwarding solutions, then our only option is to create native port profiles for uplinks and for virtual machine adapters, so that they can later be matched against each other. This mechanism effectively places requirements on the network adapter driver settings and checks them against the driver's actual capabilities. We can logically separate traffic by type (service, communication, SAN and other traffic) and use these settings to match the configuration and behavior of the Hyper-V host adapters connected to the network (the physical adapters) with the port profiles of the virtual adapters inside the VMs. In other words, on the host side the mechanism logically separates traffic from the switch to the host and assigns each physical adapter a specific configuration for handling a given type of traffic; on the VM side the same mechanism defines the properties of the virtual adapters so that they can be compared with those of the host adapters. The result is automatic port assignment.

Figure 9. Creating an uplink type profile

As you can see, when creating a native port profile you can choose the profile type and, with it, its scope. Uplink profiles are for the network adapters that back the virtual switch, while virtual adapter profiles work at the level of the virtual machines' adapters. The profile scope is assigned at the logical network level.

And here comes the most interesting part, the one that ties everything together. There is one more component, the port classification, which is essentially a mechanism for grouping native port profiles. For example, you create several port profiles with the same low speed but for different types of traffic, and then combine them into a “Slow” classification. When you create a logical switch, you specify its uplink to the outside and define the port classifications, which bring in all the necessary profiles. Since a profile's scope is the logical network, that scope also determines the scope of the logical switch. Port classifications are also used when creating a cloud in VMM.
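As a rough illustration of how profiles, classifications and a logical switch fit together, consider the Python sketch below. It follows the description in this post rather than the actual VMM object model; all class names, profile names and bandwidth figures are assumptions made up for the example.

```python
# Conceptual sketch of port profiles grouped into a classification on a logical switch.
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PortProfile:
    name: str
    traffic_type: str
    bandwidth_mbps: int


@dataclass
class PortClassification:
    name: str
    profiles: list = field(default_factory=list)


@dataclass
class LogicalSwitch:
    name: str
    uplink_profile: str
    classifications: dict = field(default_factory=dict)

    def add_classification(self, classification: PortClassification) -> None:
        self.classifications[classification.name] = classification

    def assign_port(self, classification_name: str, traffic_type: str) -> PortProfile:
        """Pick the profile in a classification that matches the traffic type."""
        classification = self.classifications[classification_name]
        for profile in classification.profiles:
            if profile.traffic_type == traffic_type:
                return profile
        raise LookupError(f"no {traffic_type!r} profile in {classification_name!r}")


slow = PortClassification("Slow", [
    PortProfile("Slow-Service", "service", 100),
    PortProfile("Slow-SAN", "san", 100),
])

switch = LogicalSwitch("Switch-Prod", uplink_profile="Uplink-10G")
switch.add_classification(slow)

print(switch.assign_port("Slow", "san").name)  # Slow-SAN
```

A virtual adapter only needs to name a classification and a traffic type; the switch resolves the concrete settings, which is the automatic port assignment mentioned in item c) above.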

7) Gateways. The last component in VMM is the gateway. As the name implies, gateways interconnect scattered, isolated networks into a single continuous network. In other words, they are mechanisms for remote access and for interaction at the level of networks and their boundaries. The gateway component in VMM also relies on third-party tools and providers. Gateways can be hardware or software, and they can use VPN mechanisms. Gateways also enable hybrid cloud scenarios, such as building a hybrid network with Windows Azure VMs. Put more simply, you can use gateways to connect isolated virtual networks to each other, or virtual networks to customers' external networks, using tools the customers are already familiar with (provided the hardware is identical or offers compatible functionality and interaction mechanisms).

Well, dear colleagues!

That concludes the story about the concept of network virtualization, but only for today.

In future posts I plan to cover more complex scenarios and ways of using all these components together with third-party ones.

So stay tuned!

Respectfully,

fireman

George A. Gadzhiev

Information Infrastructure Expert

Microsoft Corporation


Source: https://habr.com/ru/post/174249/
