Online broadcast via Nginx-RTMP: several ready-made recipes

Recently I stumbled upon the post "nginx-based online broadcasting server" about a remarkable nginx module by Roman Arutyunyan (@rarutyunyan): nginx-rtmp-module. The module is very easy to configure and lets you build an nginx-based server for publishing video recordings and live broadcasting.

You can read about the module itself on its GitHub page, but I want to give a few simple usage examples. I hope this post helps people who are new to video (like me).

RTMP in brief

RTMP (Real Time Messaging Protocol) is a proprietary streaming protocol from Adobe. TCP is the default transport (port 1935). RTMP can also be tunneled over HTTP (RTMPT). The main RTMP client is Adobe Flash Player.

Supported formats: H.264 video; AAC, Nellymoser or MP3 audio; MP4 or FLV containers.


Video posting

In other words, video on demand (VOD). Just add the following to the rtmp { server { ... } } section of nginx.conf:

    application vod {
        play /var/videos;
    }

(Note: of course, the application does not have to be called vod.)
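For orientation, here is a minimal sketch of how the whole rtmp block might look with this application in place. The listen port is the RTMP default mentioned above; the rest of a real nginx.conf (events, http and so on) is omitted, and the layout is an assumption rather than a copy of the module's reference config.

```nginx
# Top-level block in nginx.conf, next to (not inside) the http block.
rtmp {
    server {
        listen 1935;              # default RTMP port

        application vod {
            play /var/videos;     # serve recorded files from this directory
        }
    }
}
```

After editing, `nginx -t` checks the syntax and `nginx -s reload` applies the change.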

Now you can put a video file in a supported format into the /var/videos folder and point the player at it, for example rtmp://server/vod/file.flv. As far as I understand, MP4 supports seeking natively, while FLV has to be indexed separately.

All the examples below cover live broadcasting with ffmpeg under Windows. However, the information will also be useful for Linux users.

Online streaming

We can publish a video and audio stream to the server over the same RTMP protocol, and our clients will be able to watch the broadcast. To do this, add a section on the server:

    application live {
        allow publish 1.2.3.4;
        allow publish 192.168.0.0/24;
        deny publish all;
        allow play all;
        live on;
    }

I recommend immediately restricting publishing to trusted IP addresses only, as shown in the example.

On the machine we will broadcast from, we first need to get a list of DirectShow devices. Go to Start, Run, cmd, change to the ffmpeg/bin folder and run:

    ffmpeg -list_devices true -f dshow -i dummy

If the name of your source contains Russian letters, they may show up as garbled characters (mojibake). True admins use iconv, while simple folks like me decode the mess on Lebedev's site. FFmpeg needs to be given the readable name.

Now, knowing the name of the video and audio source, you can capture it using ffmpeg and send it to the server.

Webcam

At a minimum, you must specify the source of the video, codec and server:

    ffmpeg -f dshow -i video="Webcam C170" -c:v libx264 -an -f flv "rtmp://1.2.3.4/live/test.flv live=1"

Replace "Webcam C170" with the name of your camera from the list.

The -an switch says that we are not transmitting the audio stream. If an audio stream is needed, then the launch line will look something like this:

    ffmpeg -f dshow -i video="Webcam C170" -f dshow -i audio=" ..." -c:v libx264 -c:a libfaac -ar 44100 -ac 2 -f flv "rtmp://1.2.3.4/live/test.flv live=1"

Here we use the libfaac codec, a sampling rate of 44100 Hz and 2 channels (stereo). You can use MP3 (codec libmp3lame) instead of AAC.
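For Linux users, a rough equivalent might look like the sketch below. It makes several assumptions: the camera is exposed as /dev/video0 through Video4Linux2 (`v4l2-ctl --list-devices` from v4l-utils can list cameras), audio comes from the default ALSA device, and the FFmpeg build is a recent one that uses its built-in aac encoder (libfaac has since been dropped from FFmpeg) and its native RTMP support, so the librtmp-style live=1 suffix is left out. The device path and stream name are placeholders.

```shell
# Sketch only: device path, ALSA source and stream name are assumptions.
DEVICE=/dev/video0                   # first V4L2 camera
URL=rtmp://1.2.3.4/live/test         # same server as in the examples above

# Video from V4L2, audio from the default ALSA device, H.264 + AAC in an FLV container.
CMD="ffmpeg -f v4l2 -i $DEVICE -f alsa -i default -c:v libx264 -c:a aac -ar 44100 -ac 2 -f flv $URL"

echo "$CMD"        # print the command for inspection
# sh -c "$CMD"     # uncomment to actually start the broadcast
```

Printing the command first makes it easy to check the device and URL before anything is sent to the server.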

Analog camera

If your camera has an analog output, it can be connected to a computer through a capture device. I use a cheap PAL camera and a USB capture card from DealExtreme.

    ffmpeg -r pal -s pal -f dshow -i video="USB2.0 ATV" -c:v libx264 -an -f flv "rtmp://1.2.3.4/live/test.flv live=1"

Screen capture

There are two options: install FFSplit or use screen-capture-recorder with FFmpeg.

FFSplit is easier to use. It has a convenient GUI, but it does not work under XP / 2003.

If you decide to choose the second method, then the FFmpeg launch line will look something like this:

    ffmpeg -f dshow -i video="screen-capture-recorder" -c:v libx264 -an -r 2 -f flv "rtmp://1.2.3.4/live/test.flv live=1"

The audio stream can be captured with virtual-audio-capturer.

An example of screen capture in the application

Retransmission

Naturally, you can also use FFmpeg to relay a video or audio file (or stream) to the server. In the example below, we relay MJPEG video from a remote camera:

    ffmpeg -f mjpeg -i "http://iiyudana.miemasu.net/nphMotionJpeg?Resolution=320x240&Quality=Standard" -c:v libx264 -f flv "rtmp://1.2.3.4/live/test.flv live=1"

For such purposes, however, it is more reasonable to use the relay options (such as push) on the RTMP server itself, so that the server fetches the stream directly and the intermediate link disappears.
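If you would rather let the server fetch the stream itself, nginx-rtmp-module can start an external command through its exec family of directives. The following is only a sketch: it assumes ffmpeg is installed at /usr/bin/ffmpeg on the server, that exec_static is available in your build of the module, and it uses a placeholder camera address (http://camera.example/stream.mjpg) rather than a real one.

```nginx
application live {
    live on;

    # Started once when nginx starts; publishes the camera into this
    # application as stream "test". Path, camera URL and stream name
    # are placeholders.
    exec_static /usr/bin/ffmpeg -f mjpeg -i http://camera.example/stream.mjpg
                -c:v libx264 -an -f flv rtmp://localhost/live/test;
}
```

If publishing is restricted by IP address as in the live example, 127.0.0.1 has to be allowed too. For a source that is already RTMP, the module's pull directive can relay it without FFmpeg.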

Some kind of webcam in Japan

Tuning, problem solving

-preset name selects one of the H.264 encoder's presets, which trade compression efficiency for speed: ultrafast, superfast, veryfast, faster, fast, medium, slow, slower, veryslow. So if you want to improve performance, use:

    -preset ultrafast

-crf number directly affects the bitrate and quality. It accepts values from 0 to 51; the higher the value, the lower the picture quality. The default is 23, and 18 is considered visually lossless (0 is true lossless). The bitrate roughly doubles when CRF decreases by 6.
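The doubling rule can be turned into a quick estimate. The helper below is hypothetical (crf_scale is not part of FFmpeg); it prints the approximate bitrate multiplier when moving from one CRF value to another, assuming the rule of thumb holds exactly.

```shell
# crf_scale FROM TO: approximate bitrate multiplier,
# assuming the bitrate doubles for every 6-point drop in CRF.
crf_scale() {
    awk -v from="$1" -v to="$2" 'BEGIN { printf "%.2f\n", 2 ^ ((from - to) / 6) }'
}

crf_scale 23 17   # prints 2.00: CRF 17 needs about twice the bitrate of CRF 23
crf_scale 23 29   # prints 0.50: CRF 29 needs about half
```

Real encodes will deviate from this, since the actual bitrate depends heavily on the content.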

-r number sets the input and output FPS. When the input is a file rather than a capture device, you can use -re instead of -r to read it at its native frame rate.

-rtbufsize number sets the real-time buffer size. If you keep getting messages about buffer overflow and dropped frames, you can set a larger buffer (for example, 100000k), but this may increase the transmission delay.
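To get a feel for how much delay a full buffer can represent, you can estimate how many seconds of raw video it holds. This is a rough sketch under stated assumptions: the camera delivers uncompressed frames at 2 bytes per pixel (common for YUY2 webcams, but check yours), and the k suffix means 1000 bytes. buffer_seconds is a hypothetical helper, not an FFmpeg tool.

```shell
# buffer_seconds BYTES WIDTH HEIGHT FPS:
# seconds of raw video (assumed 2 bytes per pixel) that fit into BYTES.
buffer_seconds() {
    awk -v b="$1" -v w="$2" -v h="$3" -v fps="$4" \
        'BEGIN { printf "%.1f\n", b / (w * h * 2 * fps) }'
}

buffer_seconds 100000000 640 480 25   # prints 6.5 (100000k buffer, VGA at 25 FPS)
```

So a completely filled 100000k buffer could hold several seconds of VGA video, which is the worst-case extra delay under these assumptions.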

-pix_fmt sets the pixel format. If you get a black square instead of a picture while the sound works, try yuv420p or yuv422p.

-s widthxheight sets the input and output picture size.

-g number is, as I understand it, the maximum number of frames between keyframes. If your FPS is very low, you can lower this value to reduce the delay before the broadcast starts playing.

-keyint_min number is the minimum number of frames between keyframes.
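The effect of -g on startup delay is simple arithmetic: a viewer who joins mid-stream may have to wait for the next keyframe, which in the worst case is -g frames away. The sketch below uses a hypothetical helper, keyframe_wait, and assumes x264's usual default of 250 frames when -g is not given.

```shell
# keyframe_wait GOP FPS: worst-case seconds until the next keyframe.
keyframe_wait() {
    awk -v g="$1" -v fps="$2" 'BEGIN { printf "%.1f\n", g / fps }'
}

keyframe_wait 250 3   # prints 83.3: default keyframe interval at 3 FPS
keyframe_wait 10 3    # prints 3.3: with -g 10 at 3 FPS
```

At a normal 25 FPS the default interval means at most 10 seconds, which is why the problem mostly shows up at very low frame rates.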

-vf "crop=w:h:x:y" crops the video.

-tune zerolatency is the "magic" option for reducing broadcast delay. What exactly it does, I never found out (-:

-analyzeduration 0 disables duration analysis, which helps reduce broadcast delay.

In addition to the audio parameters discussed above, you may need -acodec copy if your audio stream is already MP3 or AAC and does not need transcoding.

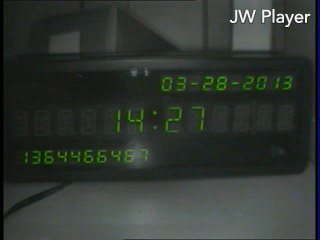

Example: low-latency broadcast from a webcam without sound, with the current time drawn at the top of the picture:

    ffmpeg -r 25 -rtbufsize 1000000k -analyzeduration 0 -s vga -copyts -f dshow -i video="Webcam C170" -vf "drawtext=fontfile=verdana.ttf:fontcolor=yellow@0.8:fontsize=48:box=1:boxcolor=blue@0.8:text=%{localtime}" -s 320x240 -c:v libx264 -g 10 -keyint_min 1 -preset ultrafast -tune zerolatency -crf 25 -an -r 3 -f flv "rtmp://1.2.3.4:1935/live/b.flv live=1"

Player on site

It's simple. Put one of the popular players, such as Flowplayer or JW Player, on your site.

You can see an example of connecting JW Player on the demo page.

What's next?

With the rtmp module you can build not only video broadcasting, but also video chat, Internet radio, or a simple platform for webinars. Go for it!

I have covered only the basic functionality of nginx-rtmp-module and ffmpeg. They can do much more, so take a look at the documentation:

Blog nginx-rtmp-module

Wiki nginx-rtmp-module

FFmpeg Documentation

Streaming guide

x264 encoding guide

Filtering guide

Source: https://habr.com/ru/post/174089/
