Git for Photos. Large Git Repositories

The idea is to use git to store all your photos.

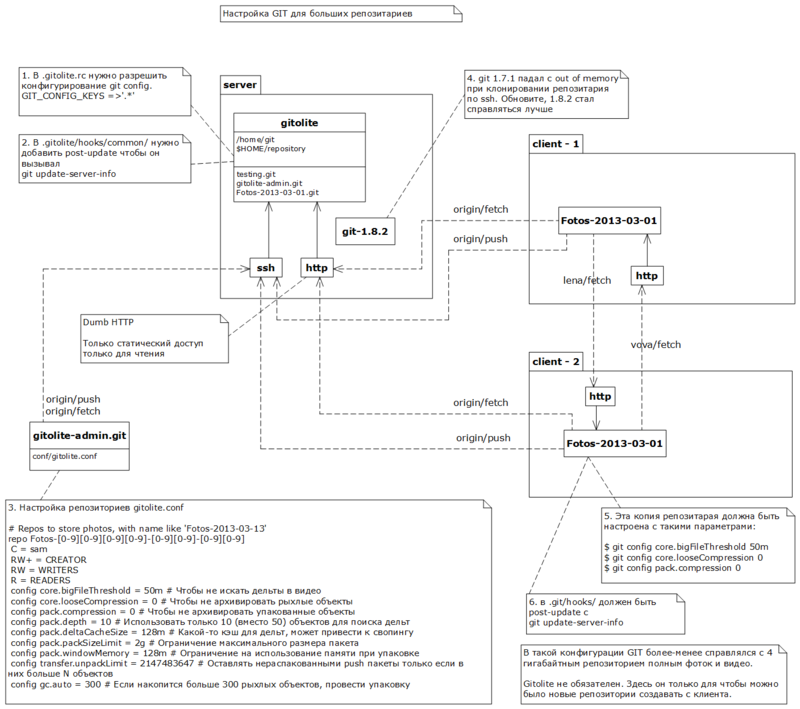

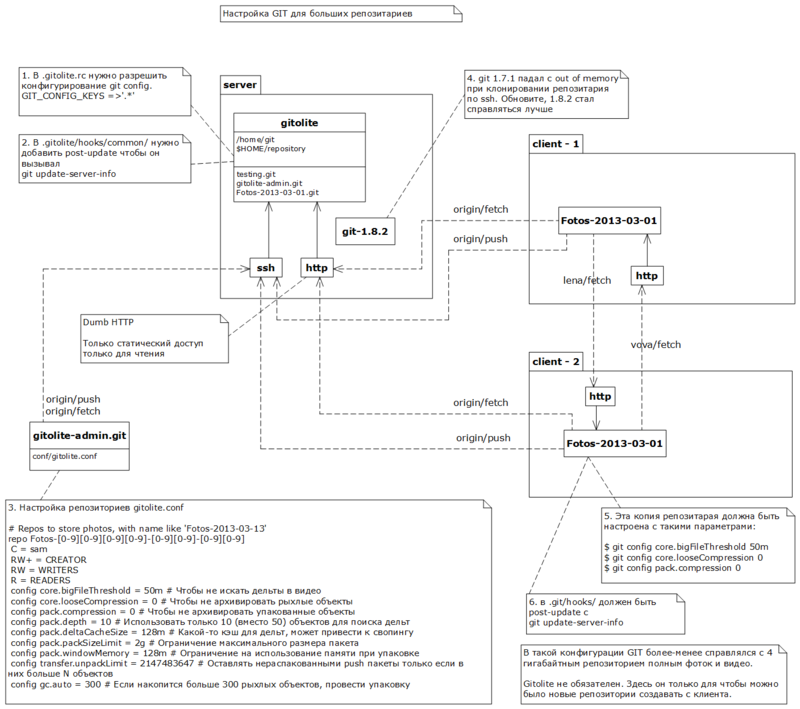

What did I want to achieve?

- Dump pictures into one heap (DCIM) and sort them into folders when there is time.

- Upload pictures from one computer and work with them from another.

- Have moves and renames of photos and folders synchronized automatically on all computers.

- Be able to edit photos while still being able to restore the original.

- Keep the history of edits.

As it turned out, Git copes with this task only with great difficulty.

The resulting configuration, in brief

If you only need the settings, see the last section.

I found that compression had to be disabled on the client so that the add and commit commands ran faster. On the server, in addition to disabling compression, you must also limit memory use and adjust the policy for packing and unpacking objects.

Cloning over ssh is quite slow and requires a lot of resources on the server. For such repositories it is therefore better to use the "dumb" http protocol. In addition, git should be updated to a recent version, because 1.7.1 has problems when memory is low.

There is also the option of putting *.* -delta in .gitattributes, which disables delta packing. That is, even if only the EXIF data changes, the whole file is stored in the repository again. I have not considered this approach here.
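For reference, a minimal sketch of what the .gitattributes approach might look like, using narrower patterns than *.* (the *.jpg and *.avi patterns, the file names and the throwaway repository are assumptions for illustration, not something tested in this article). Note that -delta only turns off delta compression for matching paths; it does not affect zlib compression.

```shell
# Throwaway repository so the sketch does not touch real data.
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo" || exit 1

# Disable delta compression for photos and videos only (assumed patterns).
printf '%s\n' '*.jpg -delta' '*.avi -delta' > .gitattributes

# Ask git which value of the "delta" attribute applies to a given path.
# The files do not need to exist for this check.
git check-attr delta -- clip.avi notes.txt
```

A path matched by one of the patterns is reported as unset, and any other path as unspecified.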

Details

For the server I chose gitolite, a wrapper around git for managing repositories. I chose it mostly for its wild repos (repositories created on demand), but its ability to write settings into a repository also proved useful.

Install gitolite

On the server

    useradd git
    su - git
    git clone git://github.com/sitaramc/gitolite
    gitolite/install -to $HOME/bin
    gitolite setup -pk sam.pub

All of this is described in the book, in chapter 4.8.

gitolite is configured from the client: clone gitolite-admin, make changes, commit, push. To set up a wild repository, add a block like this to gitolite-admin/conf/gitolite.conf:

    # Repos to store photos, with name like 'Fotos-2013-03-13'
    repo Fotos-[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]
        C   = sam
        RW+ = CREATOR
        RW  = WRITERS
        R   = READERS

First experiment (adding 3.7 gigabytes of photos to git)

After cloning an empty repository, a .gitignore file containing one line, Thumbs.db, was added in the first commit. A folder with 367 files totalling 3.7 gigabytes was added in the second commit.

- 293 photos in jpg: 439 megabytes

- 37 videos in avi: 3.26 gigabytes

- 37 THM files: 0.3 megabytes

The commands and their timings:

    git clone git@my-server:Fotos-2013-03-01
    cd Fotos-2013-03-01
    echo Thumbs.db > .gitignore
    git add .gitignore
    git commit -m 'Initial commit'
    git add <folder>    # 4 min 46 s
    git commit          # 47 s
    git push --all      # 23 min 50 s

The push spent 9 minutes on local computation and then 14 minutes on the transfer.
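Timings like these can be collected with a small wrapper. The sketch below is one possible way to do it, not necessarily how the figures in this article were measured; the timed function name is made up for this example, and it only has one-second resolution.

```shell
# timed: run a command, then report on stderr how many seconds it took.
# The exit status of the command is passed through unchanged.
timed() {
    timed_start=$(date +%s)
    "$@"
    timed_status=$?
    timed_end=$(date +%s)
    echo "$* took $((timed_end - timed_start)) s" >&2
    return "$timed_status"
}

# Example with a stand-in command; in the experiment it would be
# something like: timed git push --all
timed sleep 1
```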

Second experiment

The idea is to tune git so that it does not try to compress videos and photos. I did not find a way to turn compression off per file type, so I had to disable it completely.

We clone an empty repository and configure it.

On the client

    git clone git@my-server:Fotos-2013-03-02
    cd Fotos-2013-03-02
    git config core.bigFileThreshold 50m
    git config core.looseCompression 0
    git config pack.compression 0

These commands save the settings locally (for the current repository, i.e. in the .git/config file).

- core.bigFileThreshold: disables the search for partial changes (deltas) in files larger than 50 megabytes (512 megabytes by default).

- core.looseCompression: disables compression of objects in the "loose" state.

- pack.compression: disables compression of packed objects.

On the client

    git add <folder>    # 2 min 16 s
    git commit          # 47 s
    git push --all      # 7 min 20 s: 2 min 43 s local computation, 4 min 37 s transfer

After the push, the repository on the server did not contain any of the additional settings (as expected). The local repository remained in a loose state, while on the server it was packed, but into a single pack of 3.7 gigabytes. The server did not have pack.packSizeLimit = 2g set (though it would not have helped anyway).
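Whether a repository is loose or packed, and how large its packs are, can be checked with git count-objects. The sketch below runs it in a throwaway repository created only for illustration; in the experiment it would be run inside the client clone or inside the repository directory on the server.

```shell
# Throwaway repository holding a single loose object, for illustration only.
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo" || exit 1
echo 'stand-in for a photo' | git hash-object -w --stdin > /dev/null

# In the output, "count" is the number of loose objects, "packs" is the
# number of pack files and "size-pack" is their total size in KiB.
git count-objects -v
```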

Cloning a 3.7 GB repository in a single pack over ssh

    git clone git@my-server:Fotos-2013-03-02    # 46 min

After cloning, the repository was packed, and the pack was 3.7 gigabytes in size, despite the local setting of 2 gigabytes.

On the client

    git config --system -l | grep packsize
    pack.packsizelimit=2g

Server reconfiguration

My assumption was that the server cannot cope (it chokes on swap) because there is no limit on the pack size, bigFileThreshold is too large, and there is no limit on the memory used for the delta search.

I tried to repack the repository on the server. On the server I set

    git config --system pack.packsizelimit 2g

and then ran, again on the server,

    git repack -A

After that, packs of up to 2 gigabytes were created, but the huge pack was not removed. An attempt to collect garbage failed for lack of memory, or choked on swap. In the end I removed the system-wide setting and decided to write the settings into each repository.
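One possible reason the huge pack survived: git repack only deletes packs that have become redundant when it is given -d, and the command above was run without it. The sketch below shows the difference in a tiny throwaway repository (the helper names, file names and committer identity are made up for the example). It has not been tried on the 3.7-gigabyte repository, where the repack itself may still run out of memory.

```shell
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo" || exit 1

# commit_file NAME: create a small file and commit it with a made-up identity.
commit_file() {
    echo "$1" > "$1"
    git add "$1"
    git -c user.name=demo -c user.email=demo@example.invalid \
        commit -q -m "add $1"
}

# count_packs: number of pack files currently in the repository.
count_packs() {
    ls .git/objects/pack/*.pack 2>/dev/null | wc -l | tr -d ' '
}

commit_file a.txt
git repack -a -q        # packs everything reachable into one pack
commit_file b.txt
git repack -a -q        # writes a new, larger pack; nothing is deleted
echo "packs after repack without -d: $(count_packs)"

git repack -a -d -q     # -d also removes packs made redundant by the new one
echo "packs after repack with -d: $(count_packs)"
```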

In new repositories for photos we will set

    core.bigFileThreshold = 50m          # do not look for deltas in video
    core.looseCompression = 0            # do not compress loose objects
    pack.compression = 0                 # do not compress packed objects
    pack.depth = 10                      # use only 10 (instead of 50) objects to search for deltas
    pack.deltaCacheSize = 128m           # some kind of delta cache; may cause swapping
    pack.packSizeLimit = 2g              # limit the maximum pack size
    pack.windowMemory = 128m             # limit memory use when packing
    transfer.unpackLimit = 2147483647    # keep a pushed pack packed only if it has more than N objects
    gc.auto = 300                        # if more than 300 loose objects accumulate, pack them

All of this will be written into each photo repository. To do that, we configure gitolite.

Before adding configs on the server, enable configuration parameters in the .gitolite.rc file. Here is the line:

    GIT_CONFIG_KEYS => '.*',

And here is what the gitolite config will look like.

    # Repos to store photos, with name like 'Fotos-2013-03-13'
    repo Fotos-[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]
        C   = sam
        RW+ = CREATOR
        RW  = WRITERS
        R   = READERS
        config core.bigFileThreshold = 50m          # do not look for deltas in video
        config core.looseCompression = 0            # do not compress loose objects
        config pack.compression = 0                 # do not compress packed objects
        config pack.depth = 10                      # use only 10 (instead of 50) objects to search for deltas
        config pack.deltaCacheSize = 128m           # some kind of delta cache; may cause swapping
        config pack.packSizeLimit = 2g              # limit the maximum pack size
        config pack.windowMemory = 128m             # limit memory use when packing
        config transfer.unpackLimit = 2147483647    # keep a pushed pack packed only if it has more than N objects
        config gc.auto = 300                        # if more than 300 loose objects accumulate, pack them

If you do not specify a large transfer.unpackLimit, then git, on receiving our 3.7-gigabyte push, leaves it in one pack, despite the pack.packSizeLimit = 2g limit.

gc.auto = 300 keeps the repository on the server from accumulating too many loose objects, so that "git gc --auto" does not hang for a long time when it runs. By default, gc.auto = 6700.

Third experiment

We clone a new empty repository and check that the necessary settings have been written on the server.

On the client

    git clone git@my-server:Fotos-2013-03-03
    cd Fotos-2013-03-03

On the server

    cd repositories/Fotos-2013-03-03
    cat config

The client copy of the repository has the default configuration. Therefore, before adding the 3.7 gigabytes of photos, it has to be configured again.

On the client

    git config core.bigFileThreshold 50m
    git config core.looseCompression 0
    git config pack.compression 0
    git add <folder>    # 2.5 min
    git commit          # 45 s
    git push --all      # 22.5 min: 7.5 min local computation, 15 min transfer

Both the client and the server now have loose repositories.
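Because these three settings live only in the local .git/config, they have to be reapplied in every fresh clone. A small helper could do that; the sketch below uses a made-up function name, configure_photo_repo, and demonstrates it on a throwaway repository rather than a real clone.

```shell
# configure_photo_repo DIR: apply the client-side settings from this article
# to the repository in DIR. Runs in a subshell so the caller's working
# directory is left alone.
configure_photo_repo() {
    (
        cd "$1" || exit 1
        git config core.bigFileThreshold 50m
        git config core.looseCompression 0
        git config pack.compression 0
    )
}

# Demonstration on an empty throwaway repository.
repo=$(mktemp -d)
git init -q "$repo"
configure_photo_repo "$repo"
cat "$repo/.git/config"
```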

Attempts to clone the new repository over ssh failed. On the server side, git 1.7.1 crashed with Out Of Memory.

Updating git on the server

Any working version of git is needed first (yum install git).

On the server

    # 1. Get the sources
    git clone git://git.kernel.org/pub/scm/git/git.git
    cd git
    # 2. Install the build dependencies (as root)
    yum install gcc
    yum install openssl-devel
    yum install curl
    yum install libcurl-devel
    yum install expat-devel
    yum install asciidoc
    yum install xmlto
    # 3. Build
    make prefix=/usr/local all doc
    # The variant with info failed with "docbook2x-texi: command not found":
    # make prefix=/usr/local all doc info
    # 4. Remove 1.7.1, install 1.8.2
    yum remove git    # removes 1.7.1
    make prefix=/usr/local install install-doc install-html
    /usr/local/bin/git --version
    git version 1.8.2

Continuation of the third experiment

After the update, git stopped crashing with out of memory. At the beginning of the clone, however, it took all the available memory (1 gigabyte); once the transfer started, its use dropped to 250 megabytes.

The clone itself took 48 minutes, and on the client the repository contained a single pack of 3.7 gigabytes.
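To put the 48 minutes in perspective, the effective throughput can be worked out with a little arithmetic. The helper below is only an illustration (the function name is made up, and 1 gigabyte is taken as 1024 megabytes).

```shell
# mb_per_s GIGABYTES MINUTES: average throughput in megabytes per second.
mb_per_s() {
    awk -v gb="$1" -v min="$2" \
        'BEGIN { printf "%.1f\n", gb * 1024 / (min * 60) }'
}

# 3.7 gigabytes in 48 minutes over ssh.
mb_per_s 3.7 48    # prints 1.3
```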

Static HTTP for fetch

The idea is that the server should do no work at all when a repository is downloaded.

Fortunately, git can fetch over http. All that is needed is to run git update-server-info after each update of the repository. This is usually done in hooks/post-update, and the sample hook shipped with git contains exactly this command. We will take that sample and set up gitolite to install the hook in all repositories.

On the server

    cp repositories/testing.git/hooks/post-update.sample .gitolite/hooks/common/post-update
    gitolite setup --hooks-only

Now, after its next update, a repository will be available over http.
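For reference, the sample hook boils down to a single command. The sketch below recreates it by hand in a throwaway repository and runs it once to show what it produces (the repository and the committer identity are made up for the example; on the server gitolite installs the hook instead).

```shell
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo" || exit 1
git -c user.name=demo -c user.email=demo@example.invalid \
    commit -q --allow-empty -m 'Initial commit'

# Same command as in git's post-update.sample hook.
mkdir -p .git/hooks
cat > .git/hooks/post-update <<'EOF'
#!/bin/sh
exec git update-server-info
EOF
chmod +x .git/hooks/post-update

# Run the hook by hand; normally git runs it after each push.
sh .git/hooks/post-update

# update-server-info writes info/refs (and objects/info/packs), the
# auxiliary files that let a dumb http client discover refs and packs.
cat .git/info/refs
```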

To avoid digging into the details of http server configuration, I will use a "toy" web server. Create a folder /home/git/http-root, add a symlink git -> ../repositories to it, and launch the "toy" server from there.

On the server

    python -m SimpleHTTPServer > ../server-log.txt 2>&1 &

On the client

    git clone http://my-server:8000/git/Fotos-2013-03-05.git

Run time: 4 minutes! 3.7 GB in 4 minutes! That suits us.
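The toy server setup described above might be reproduced as follows. This is a sketch under assumptions: the helper names are made up, and python3 -m http.server is used as the Python 3 counterpart of the Python 2 SimpleHTTPServer module. The demonstration only builds the directory layout in a throwaway directory and does not start a server.

```shell
# make_http_root HOME_DIR: create HOME_DIR/http-root with a symlink "git"
# that points at HOME_DIR/repositories.
make_http_root() {
    mkdir -p "$1/http-root" "$1/repositories"
    [ -e "$1/http-root/git" ] || ln -s ../repositories "$1/http-root/git"
}

# serve_http_root HOME_DIR: serve http-root in the background on port 8000,
# logging to HOME_DIR/server-log.txt.
serve_http_root() {
    (cd "$1/http-root" && exec python3 -m http.server 8000) \
        > "$1/server-log.txt" 2>&1 &
}

# Demonstration of the layout in a throwaway directory.
home=$(mktemp -d)
make_http_root "$home"
ls -l "$home/http-root"

# On the real server this would be:
#   make_http_root /home/git && serve_http_root /home/git
```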

Conclusion

To serve large repositories with git, clone and fetch over http, apply a handful of settings, and preferably update git.

Server repository

    git config core.bigFileThreshold 50m          # do not look for deltas in video
    git config core.looseCompression 0            # do not compress loose objects
    git config pack.compression 0                 # do not compress packed objects
    git config pack.depth 10                      # use only 10 (instead of 50) objects to search for deltas
    git config pack.deltaCacheSize 128m           # some kind of delta cache; may cause swapping
    git config pack.packSizeLimit 2g              # limit the maximum pack size
    git config pack.windowMemory 128m             # limit memory use when packing
    git config transfer.unpackLimit 2147483647    # keep a pushed pack packed only if it has more than N objects
    git config gc.auto 300                        # if more than 300 loose objects accumulate, pack them

- Provide read access over the HTTP protocol (dumb http, not gitolite's smart http).

Client repository

- Clone and update over http.

- To push commits, configure a separate push URL.

The commands for the client repository:

    git remote set-url origin --push git@my-server:Fotos-2013-03-05
    git config core.bigFileThreshold 50m    # do not look for deltas in video
    git config core.looseCompression 0      # do not compress loose objects
    git config pack.compression 0           # do not compress packed objects
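The split between fetching over http and pushing over ssh can be checked in the repository configuration. A sketch on a throwaway repository, reusing the URLs from this article (the repository itself is created only for the example, and no network access is needed):

```shell
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo" || exit 1

# Fetch URL: dumb http. Push URL: ssh through gitolite.
git remote add origin http://my-server:8000/git/Fotos-2013-03-05.git
git remote set-url --push origin git@my-server:Fotos-2013-03-05

# The (fetch) and (push) lines should now show different URLs.
git remote -v
```

The push URL is stored as remote.origin.pushurl in .git/config, next to the ordinary remote.origin.url.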

Thank you for letting me understand this question.

Source: https://habr.com/ru/post/173453/
