Alternative use of GPU power?

I recently published an article about distributed rendering on the GPU and received some questions and suggestions. So I think it is worth covering the topic in more detail (and with pictures, since hardly anyone reads an article without them), which should also bring more readers to it.

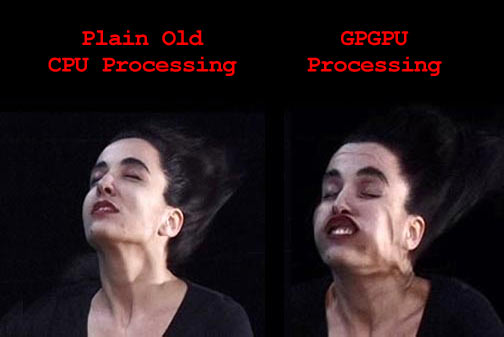

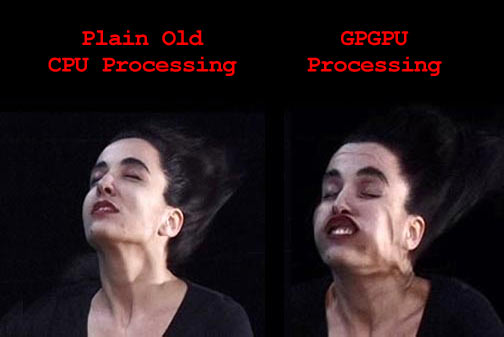

I think this topic will interest owners of powerful computing systems: miners, gamers, and admins of other powerful machines.

Many owners of powerful hardware have wondered: can you earn a little extra from that hardware while it sits idle?

My beauty sits unpowered!

One of the most accessible ways is Bitcoin. With the arrival of the distributed payment system Bitcoin came an interesting occupation: mining (computations that help secure the Bitcoin network, for which the network rewards the participant with bitcoins that can be exchanged for conventional currency on Bitcoin exchanges). It is fairly costly and not always profitable. Moreover, mining turned out to be more profitable on FPGAs than on Radeons, so owners of the latter are less fortunate and end up having to sell their hardware.

A mining farm; I counted 66 video cards. Beautiful! Taken from here.

I recently tried mining on a GTX580 myself (and yes, Nvidia is bad for mining), but realized that $17 (seventeen dollars) a month, while decent money, is not exactly the salary I dreamed of.

But don't rush into despair! We can try to save the day!

So, powerful video cards, when used properly:

1. Can do someone some good.

2. Can bring in more profit than Bitcoin.

3. Can still earn from Bitcoin while otherwise idle.

How can video cards come in handy?

1. General-purpose computing on video cards (General-Purpose computing on Graphics Processing Units, GPGPU).

2. Hardware rasterization (OpenGL, DirectX).

Let's start with GPGPU

How to use video cards?

CUDA is a great framework for Nvidia cards, and only for them. A hardware-specific platform.

FireStream - a framework for GPGPU computing on AMD video cards. Again, a hardware-dependent platform. Honestly, I haven't come across a single renderer built on FireStream.

OpenCL is a hardware- and software-independent platform for computing on anything: CPUs, GPUs, even toasters and microwaves. It all sounds great, but my own experience and numerous tests convinced me that the platform is still far from perfect: glitches, bugs, poor optimization. Or maybe people just write for it with clumsy hands? I don't know; maybe someone will clarify in the comments.

HLSL - the shader language of DirectX. What is a shader? It is a program that implements a surface shading algorithm, here for DirectX. A friend of mine even wrote a renderer in HLSL. All well and good, but the platform is software-dependent: DirectX from Microsoft only.

DirectCompute - a GPGPU API (attached to DirectX, what else) from Microsoft. It is often used to offload physics to the video card.

GLSL - the shader language of OpenGL. And OpenGL, as we know, is supported by the vast majority of hardware and runs on Windows, Linux, and OS X. So this option looks like the most advantageous. Honestly, I haven't seen serious software built on it, but I think it is worth considering: better to try it than to look for excuses for why nobody uses it.

For computing beyond ordinary graphics, GLSL is popular in WebGL applications. You can see for yourself how an unbiased renderer works on WebGL, and stir the pixels on the screen with your mouse.

There is a video comparing the performance of code written with different frameworks on an Nvidia GeForce GTS 250 and a Core i5.

This video is not an absolute indicator. First, the information may be outdated, and what if OpenCL is by now the fastest in the world? Second, the GTS 250 is hardly a top-end card for judging how OpenCL would perform on those same mining farms.

In my opinion, the most suitable platforms:

1. GLSL, for its versatility, stability, and speed. Downside: inconvenient to program.

2. OpenCL: universal and omnivorous. It puts all supported CPUs and GPUs to work. Downside: it has rough edges.

3. A combination of 1 and 2.

Many tasks need GPU computing: engineering, scientific, financial, graphics. I'll concentrate on rendering (more on that a little later), since graphics is what I do and I have something to say about it.

Hardware Rasterization

The first thing that comes to mind is cloud gaming (see OnLive). Cloud gaming is when a resource-hungry game is rendered remotely on a compute server, and the finished picture with sound is streamed to the user. It is useful when the user's computer barely runs the game at the lowest settings or is incompatible with it altogether.

Video about how you can play hardware-demanding games on your Android tablet:

So, now back to the cloud distributed rendering system that I want to propose.

CLOUD RENDERING

Or distributed? More like cloud, only the cloud itself is distributed. Cloud distributed rendering.

I'd like to focus on rendering, and not on rasterization but on more serious and deep algorithms such as unbiased rendering, which I have already talked everyone's ears off about.

What an unbiased GPU renderer looks like, using Octane Render (Nvidia CUDA) as an example

The algorithm is good because it can render global illumination (light, reflections of light, reflections of reflections, reflections of reflections of reflections, and so on) in real time, albeit with a lot of noise.

And the more powerful the hardware, the faster the image clears of noise.
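Why does more horsepower mean less noise? Unbiased renderers are Monte Carlo estimators: each pixel is an average of random samples, and faster hardware simply takes more samples per second. Here is a minimal sketch of that effect, not taken from any real renderer. The integrand `x * x` is a made-up stand-in for "brightness carried by one random light path", and the names `estimate_pixel` and `rms_error` are my own.

```python
import math
import random


def estimate_pixel(num_samples, rng):
    """Average num_samples random contributions for one pixel.

    Stand-in integrand: f(x) = x * x on [0, 1], whose exact mean is 1/3.
    A real path tracer would trace a random light path here instead.
    """
    total = 0.0
    for _ in range(num_samples):
        x = rng.random()
        total += x * x
    return total / num_samples


def rms_error(num_samples, trials=200, seed=1):
    """Root-mean-square error of the pixel estimate over independent trials."""
    rng = random.Random(seed)
    exact = 1.0 / 3.0
    squared = [
        (estimate_pixel(num_samples, rng) - exact) ** 2 for _ in range(trials)
    ]
    return math.sqrt(sum(squared) / trials)


if __name__ == "__main__":
    # Noise level for a growing sample budget.
    for n in (16, 64, 256, 1024):
        print(n, rms_error(n))
```

The error should shrink roughly as one over the square root of the sample count, so each fourfold increase in samples about halves the noise. That is also why throwing many idle video cards at one image pays off.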

But Radeon owners need not despair! There are renderers that use OpenCL:

These are Cycles Render (open source), IndigoRT, and SmallLuxGPU (also open source).

SmallLuxGPU

In general, I think time will tell which is better: writing your own software or figuring out someone else's code.

In what form should it be delivered?

1. As a plug-in for a 3D editor.

2. Through the browser with JavaScript. By the way, this would allow something like "send a link, show someone a 3D object."

And how to use the system, whether for money, as a gift to friends, or for the sake of an interesting project, is for the users to decide.
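How might such a system glue together work from different machines? One plausible approach, assuming a progressive Monte Carlo renderer where every node renders the whole frame with its own random seed, is to average the partial images weighted by how many samples each node managed to take. The sketch below is only an illustration of that idea; `merge_passes` and the node data are hypothetical, not part of any existing renderer.

```python
def merge_passes(passes):
    """Combine partial renders from several nodes into one image.

    Each pass is a (sample_count, pixels) pair, where pixels is a flat list
    of per-pixel mean brightness values computed from sample_count samples.
    The merged value is the sample-weighted average, which equals the mean
    over all samples taken by all nodes.
    """
    if not passes:
        raise ValueError("need at least one pass")
    width = len(passes[0][1])
    total_samples = 0
    sums = [0.0] * width
    for sample_count, pixels in passes:
        if len(pixels) != width:
            raise ValueError("all passes must have the same number of pixels")
        total_samples += sample_count
        for i, value in enumerate(pixels):
            sums[i] += value * sample_count
    if total_samples == 0:
        raise ValueError("passes contain no samples")
    return total_samples, [s / total_samples for s in sums]


if __name__ == "__main__":
    # Hypothetical two-pixel "images" from a fast and a slow node.
    fast_node = (300, [0.50, 0.20])
    slow_node = (100, [0.70, 0.40])
    print(merge_passes([fast_node, slow_node]))
```

Weighting by sample count means a slow card still contributes, just proportionally less, so nobody's hardware is wasted.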

Maybe we should distribute tasks to the CPU too?

As we know, graphics adapters have rather high (compared to a CPU) performance in massively parallel calculations. But the CPU should not be written off. There are tasks that are very hard to write for a GPU, or for which a GPU is simply impractical.

Although, in general, this picture sums up my opinion:

Well, a post without a stale old meme is not a post.

Ideological component of the project

Computing power is only one of the resources that need redistribution. Some need powerful hardware; others have it sitting unused. The same goes for other resources: money, water, food, energy, heat. Some have a surplus that brings no joy; others have a shortage that brings discomfort.

Humanity needs mutual assistance in all aspects of life, and rendering and distributed computing are only a small part of the resources with which we can help each other. After all, isn't it more sensible to use existing idle capacity than to buy new hardware?

What I mean is: distributed computing has a future! And I am not at all saying that the project should run on bare enthusiasm while the developers live on thin air.

SUMMARY

If this topic happens to interest anyone, I suggest we discuss it, comrades!

Source: https://habr.com/ru/post/172969/
