How we moved from 30 servers to 2: Go

When we released the first version of IronWorker about 3 years ago, it was written in Ruby, and the API was built on Rails. After a while the load began to grow rapidly, and we quickly hit the limits of our Ruby applications. In short, we switched to Go. If you want the details, keep reading.

First version

First, a bit of history: we wrote the first version of IronWorker, originally called SimpleWorker (not a bad name, is it?), in Ruby. We were a consulting company building applications for other companies, and at the time two things were popular: Amazon Web Services and Ruby on Rails. So we built applications with Ruby on Rails and AWS, and attracted new customers. We created IronWorker to scratch our own itch. Several of our customers had devices that sent data around the clock, and we had to receive that data and convert it into something useful. We solved this by running heavy scheduled processes that crunched the data every hour, every day, and so on. We decided to build something we could use for all of our clients, so that we would not have to set up and maintain separate data-processing infrastructure for each of them. That is how we ended up with a "worker as a service", which we first used for our own tasks. Then we decided that someone else might need a similar service, and we made it public. That is how IronWorker was born.

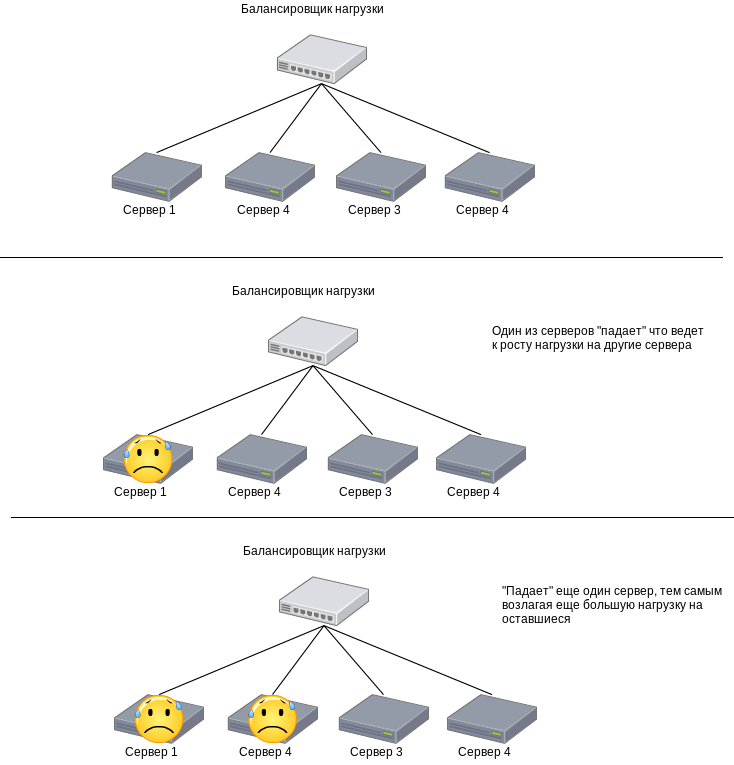

Sustained CPU usage on our servers was about 50-60%. When the load increased, we added more servers to keep CPU usage at about 50%. That was fine as long as we were willing to pay for that many servers. The bigger problem was how we dealt with load spikes. A traffic spike created a domino effect that could take down a whole cluster. During a spike only 50% above normal, our Rails servers would hit 100% CPU and stop responding. The load balancer would then conclude that such a server was down and redistribute its load among the remaining servers. Since those servers now had to handle the failed server's requests on top of the peak load, it usually did not take long before the next server failed and was removed from the balancer pool in turn, and so on. Pretty soon, all servers were down. This phenomenon is also known as a colossal clusterf**k (+Blake Mizerany).
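The domino effect can be illustrated with a little arithmetic. The sketch below is only a toy model, not our production setup: the function names, the 55% baseline, and the 80% saturation threshold (the point at which a server is assumed to stop responding) are all hypothetical values chosen for illustration.

```go
package main

import (
	"fmt"
	"math"
)

// survivors models the cascade: the balancer spreads totalLoad evenly, and
// whenever the per-server share exceeds saturation, one server drops out.
// All numbers used with it here are illustrative assumptions.
func survivors(servers int, totalLoad, saturation float64) int {
	for servers > 0 && totalLoad/float64(servers) > saturation {
		servers-- // the balancer removes the unresponsive server from the pool
	}
	return servers
}

// minServers returns the smallest pool whose per-server share stays at or
// below saturation for the given total load.
func minServers(totalLoad, saturation float64) int {
	return int(math.Ceil(totalLoad / saturation))
}

func main() {
	const (
		pool       = 30
		baseline   = 0.55 // assumed steady per-server utilization
		spike      = 1.5  // load 50% above normal
		saturation = 0.8  // hypothetical point where a server stops responding
	)
	load := pool * baseline * spike // total load in "server capacities"
	fmt.Println("survivors:", survivors(pool, load, saturation))
	fmt.Println("servers needed:", minServers(load, saturation))
}
```

Under these assumptions the pool of 30 collapses to zero, because every removal raises the share of the servers that remain, while a pool of 31 would have survived the same spike. That is why the only defense in this model is to keep spare capacity ready in advance.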

With the applications we had at the time, the only way to avoid this was to run a huge amount of extra capacity, keeping the load on each server low enough to absorb peaks. But that meant spending a lot of money. Something had to change.

We rewrote it

We decided to rewrite the API. It was an easy decision. To be honest, our Ruby on Rails API did not scale. From many years of building such systems in Java, which could handle a large load with far fewer resources than Ruby on Rails, I knew we could do much better. So the decision came down to which language to use.

Language selection

I was open to new ideas, since the last thing I wanted to do was go back to Java. Java is (was?) a great language with many advantages, such as performance, but after writing Ruby for several years I was hooked on how productive I could be. Ruby is a convenient, clear, and simple language.

We looked at other scripting languages with better performance than Ruby (which was not hard), such as Python and JavaScript / Node.js. We also looked at the JVM-based Scala and Clojure, and at other languages such as Erlang (which AWS uses) and Go (golang). Go won. Having concurrency as a fundamental part of the language was excellent; the standard library contained almost everything we needed to build an API; it is concise; it compiles quickly; programming in Go is simply pleasant, just like Ruby; and in the end, numbers don't lie. After building a prototype and testing its performance, we saw that we could handle large loads without problems using Go. And after some discussion with the team ("It's all right, it's backed by Google"), we decided to go with Go.
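To give a feel for what concurrency being part of the language means in practice, here is a minimal sketch of fanning work out to a fixed pool of goroutines using only the standard library. It is not code from IronWorker: `process`, `processAll`, and the integer payloads are hypothetical stand-ins for real jobs.

```go
package main

import (
	"fmt"
	"sync"
)

// process stands in for real work on one payload (hypothetical).
func process(payload int) int { return payload * 2 }

// processAll fans payloads out to a fixed number of worker goroutines and
// returns the results in input order.
func processAll(payloads []int, workers int) []int {
	if workers < 1 {
		workers = 1 // at least one worker, otherwise sending would block forever
	}
	results := make([]int, len(payloads))
	jobs := make(chan int) // carries indexes into payloads

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				// Each index is delivered to exactly one goroutine,
				// so no two goroutines write the same slot.
				results[i] = process(payloads[i])
			}
		}()
	}
	for i := range payloads {
		jobs <- i
	}
	close(jobs) // lets the workers' range loops finish
	wg.Wait()
	return results
}

func main() {
	fmt.Println(processAll([]int{1, 2, 3, 4}, 2)) // prints [2 4 6 8]
}
```

Goroutines, channels, and `sync.WaitGroup` all come with the language and its standard library, so a pattern like this needs no external threading framework.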

When we first decided to try Go, it was a risky choice. The community was small, there were few open source projects, and there were no success stories of Go in production. We were also not sure we could hire talented programmers if we chose Go. But we soon realized that we could hire the best programmers precisely because we chose Go. We were one of the first companies to publicly announce that we used Go in production, and the first company to post a job opening on the golang mailing list. After that, the best developers wanted to work for us because they could use Go in their work.

After Go

After we launched the Go version, we cut the number of servers down to two (and the second one is there mostly for redundancy). The load on the servers was minimal, as if nothing was using any resources at all. CPU usage was below 5%, and the application used a few hundred KB of memory at startup, compared to the Rails applications, which ate ~50 MB at startup. Compare that with the JVM! A lot of time has passed since then, and we have never had another colossal clusterf**k.

We have grown a lot since then. We now have far more traffic, we have launched additional services (IronMQ and IronCache), and we use hundreds of servers to meet our customers' needs. The whole backend is written in Go. In retrospect, choosing Go was a great decision: it allowed us to build great products, to grow and scale, and to attract talented people. I think that choice will keep helping us grow for the foreseeable future.

P.S. Translated with Travis's permission. If you have any questions, he and other members of the Iron.io team will be happy to answer them.

Source: https://habr.com/ru/post/172795/
