PostgreSQL performance scaling with table partitioning

Classic script

You are working on a project where transactional data is stored in a database. Then you deploy the application in a production environment, and the performance is great! Requests pass quickly, and the delay in entering them is almost imperceptible. After a few days / weeks / months, the database becomes more and more, and the speed of requests slows down.

There are several approaches with which you can speed up your application and database.

The database administrator (DBA) will look and make sure that the database is optimally configured. He will offer to add certain indexes, remove logging to a separate partition, correct the parameters of the database engine and make sure that the database is healthy. You can also add highlighted Input / Output Operations Per second on an EBS disk to increase the speed of the disk partitions. This will give you time and give you the opportunity to solve the main problem.

')

Sooner or later you will realize that the data in your database is a bottleneck (botleneck).

In many application databases, the importance of information decreases with time. If you can think of a way to get rid of this information, your requests will pass faster, the time to create backups will decrease, and you will save a lot of space. You can delete this information, but then it will disappear permanently. You can send many DELETE requests, triggering tons of logs, and use a bunch of database engine resources. So how do we get rid of old information effectively, but not losing it forever?

In the examples, we will use PostgreSQL 9.2 on Engine Yard. You also need git to install plsh.

Partitioning tables

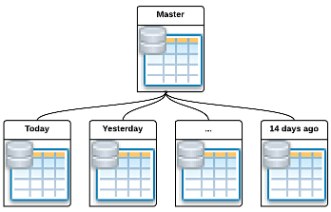

Partitioning tables is a good solution to this problem. Take one giant table and break it into a bunch of small ones - these small tables are called partitions and child tables. Actions such as creating backups, SELECT and DELETE operations can be performed with individual or all partitions. Partitions can also be deleted or exported by a single request, which will keep logging to a minimum.

Terminology

Let's start with the terminology that will be used in this article.

Master Table

Also called the Master Partition Table, this is the template by which child tables are created. This is a regular table, but it does not store any information and needs a trigger (more on that later). The type of relationship between the master and child tables is one-to-many (one-to-many), that is, there is one main table and many children.

Child Table

These tables inherit their structure (or in other words, their data description language — Data Definition Language or DDL ) from the main table and belong to the same main table. It is in the child tables that all data is stored. These tables are often called partition tables .

Partition function

Partition function is a stored procedure that determines which of the child tables will accept the new record. The main table contains a trigger that calls the partition function. There are two methodologies for routing records to child tables:

According to data values - an example of this is the date of the order of purchase. When purchase orders arrive in the main table, this function is called by a trigger. If you create partitions by day, each subsidiary part will represent all orders that came on a particular day. This method is described in this article.

For fixed values — an example of this is geography, such as states. In this case, you can have 50 child tables, one for each US state. When INSERT requests come into the main table, the function sorts each new row into one of the child tables. This methodology is not described in this article, as it will not help us get rid of old data.

It's time to customize these partitions.

This solution demonstrates the following:

- Automatic creation of partition databases based on date

- Planning to export old partitions to compressed files

- Disposal of old partitions without sacrificing performance.

- Reload the old partitions so that they are again available for the main partition.

Most of the parting documentation I read is simply used to keep the database clean and tidy. If you need old data, you would have to keep a backup of the old database. I will show you how you can keep your database healthy using partitioning, but with access to old information if necessary without the need to create backup copies.

Assumptions (Conventions)

The commands executed from under the shell, by the root user have the following prefix:

root# postgres$ Commands executed inside the PostgreSQL database will look like this: my_database> What you need

In the examples, we will use PostgreSQL 9.2 on Engine Yard . You also need git to install plsh.

Summary

Here is a brief description of what we are going to do:

- create master table

- create trigger function

- create table trigger

- create a partition maintenance function

- schedule partitions

- reload old partitions when needed

Creating the main table

For this example, we will create a table to store basic performance information (cpu, memory, disk) server groups (server_id) every minute.

CREATE TABLE myschema.server_master ( id BIGSERIAL NOT NULL, server_id BIGINT, cpu REAL, memory BIGINT, disk TEXT, "time" BIGINT, PRIMARY KEY (id) ); Note that the name time is enclosed in quotes. This is necessary because time is a keyword in PostgreSQL. You can learn more about the Date / Time keywords and their functions in the PostgreSQL documentation .

Create a trigger function

The trigger function does the following:

- Creates a child partition of tables with dynamically generated “CREATE TABLE” parameters if it does not already exist

- Parameters (child tables) are determined by the values of the “time” column, creating a partition for each calendar day

- The time is stored in the epoch format, which is a representation of the number of seconds elapsed since midnight (00:00:00 UTC) on January 1, 1970, as an integer

- Each day contains 86400 seconds, the midnight of each day is the epoch date, which is divided by 86400 without a balance.

- The name of each child table will be in the format of myschema.server_YYYY-MM-DD.

CREATE OR REPLACE FUNCTION myschema.server_partition_function() RETURNS TRIGGER AS $BODY$ DECLARE _new_time int; _tablename text; _startdate text; _enddate text; _result record; BEGIN --Takes the current inbound "time" value and determines when midnight is for the given date _new_time := ((NEW."time"/86400)::int)*86400; _startdate := to_char(to_timestamp(_new_time), 'YYYY-MM-DD'); _tablename := 'server_'||_startdate; -- Check if the partition needed for the current record exists PERFORM 1 FROM pg_catalog.pg_class c JOIN pg_catalog.pg_namespace n ON n.oid = c.relnamespace WHERE c.relkind = 'r' AND c.relname = _tablename AND n.nspname = 'myschema'; -- If the partition needed does not yet exist, then we create it: -- Note that || is string concatenation (joining two strings to make one) IF NOT FOUND THEN _enddate:=_startdate::timestamp + INTERVAL '1 day'; EXECUTE 'CREATE TABLE myschema.' || quote_ident(_tablename) || ' ( CHECK ( "time" >= EXTRACT(EPOCH FROM DATE ' || quote_literal(_startdate) || ') AND "time" < EXTRACT(EPOCH FROM DATE ' || quote_literal(_enddate) || ') ) ) INHERITS (myschema.server_master)'; -- Table permissions are not inherited from the parent. -- If permissions change on the master be sure to change them on the child also. EXECUTE 'ALTER TABLE myschema.' || quote_ident(_tablename) || ' OWNER TO postgres'; EXECUTE 'GRANT ALL ON TABLE myschema.' || quote_ident(_tablename) || ' TO my_role'; -- Indexes are defined per child, so we assign a default index that uses the partition columns EXECUTE 'CREATE INDEX ' || quote_ident(_tablename||'_indx1') || ' ON myschema.' || quote_ident(_tablename) || ' (time, id)'; END IF; -- Insert the current record into the correct partition, which we are sure will now exist. EXECUTE 'INSERT INTO myschema.' || quote_ident(_tablename) || ' VALUES ($1.*)' USING NEW; RETURN NULL; END; $BODY$ LANGUAGE plpgsql; Creating a trigger for a table

When the partition function is created, you need to add an input trigger to the main table. It will call the partition function when new entries arrive.

CREATE TRIGGER server_master_trigger BEFORE INSERT ON myschema.server_master FOR EACH ROW EXECUTE PROCEDURE myschema.server_partition_function(); Now you can send rows to the main table and watch how they will fit into the corresponding child tables.

Creating the partition maintenance function

Now we put the main table on a diet. The function below was created to collectively handle the maintenance of the partition. That is why you will not see any specific syntax for the server.

How it works:

- All child tables for a particular master table are scanned for partitions, where the name of the partition corresponds to a date greater than 15 days.

- Each “too old” partition is exported to the local file system by calling the database function myschema.export_partition (text, text). More on this in the next section.

- Only if the export to the local file system was successful, the child table will be dropped.

- This function assumes the presence of the / db / partition_dump folder on the local database server. More on this in the next section. If you're wondering where these partitions will be exported, then you should go there!

CREATE OR REPLACE FUNCTION myschema.partition_maintenance(in_tablename_prefix text, in_master_tablename text, in_asof date) RETURNS text AS $BODY$ DECLARE _result record; _current_time_without_special_characters text; _out_filename text; _return_message text; return_message text; BEGIN -- Get the current date in YYYYMMDD_HHMMSS.ssssss format _current_time_without_special_characters := REPLACE(REPLACE(REPLACE(NOW()::TIMESTAMP WITHOUT TIME ZONE::TEXT, '-', ''), ':', ''), ' ', '_'); -- Initialize the return_message to empty to indicate no errors hit _return_message := ''; --Validate input to function IF in_tablename_prefix IS NULL THEN RETURN 'Child table name prefix must be provided'::text; ELSIF in_master_tablename IS NULL THEN RETURN 'Master table name must be provided'::text; ELSIF in_asof IS NULL THEN RETURN 'You must provide the as-of date, NOW() is the typical value'; END IF; FOR _result IN SELECT * FROM pg_tables WHERE schemaname='myschema' LOOP IF POSITION(in_tablename_prefix in _result.tablename) > 0 AND char_length(substring(_result.tablename from '[0-9-]*$')) <> 0 AND (in_asof - interval '15 days') > to_timestamp(substring(_result.tablename from '[0-9-]*$'),'YYYY-MM-DD') THEN _out_filename := '/db/partition_dump/' || _result.tablename || '_' || _current_time_without_special_characters || '.sql.gz'; BEGIN -- Call function export_partition(child_table text) to export the file PERFORM myschema.export_partition(_result.tablename::text, _out_filename::text); -- If the export was successful drop the child partition EXECUTE 'DROP TABLE myschema.' || quote_ident(_result.tablename); _return_message := return_message || 'Dumped table: ' || _result.tablename::text || ', '; RAISE NOTICE 'Dumped table %', _result.tablename::text; EXCEPTION WHEN OTHERS THEN _return_message := return_message || 'ERROR dumping table: ' || _result.tablename::text || ', '; RAISE NOTICE 'ERROR DUMPING %', _result.tablename::text; END; END IF; END LOOP; RETURN _return_message || 'Done'::text; END; $BODY$ LANGUAGE plpgsql VOLATILE COST 100; ALTER FUNCTION myschema.partition_maintenance(text, text, date) OWNER TO postgres; GRANT EXECUTE ON FUNCTION myschema.partition_maintenance(text, text, date) TO postgres; GRANT EXECUTE ON FUNCTION myschema.partition_maintenance(text, text, date) TO my_role; The function you see below is also generic and allows you to pass the name of the table you want to export to the OS and the name

compressed file that will contain this table.

-- Helper Function for partition maintenance CREATE OR REPLACE FUNCTION myschema.export_partition(text, text) RETURNS text AS $BASH$ #!/bin/bash tablename=${1} filename=${2} # NOTE: pg_dump must be available in the path. pg_dump -U postgres -t myschema."${tablename}" my_database| gzip -c > ${filename} ; $BASH$ LANGUAGE plsh; ALTER FUNCTION myschema.export_partition(text, text) OWNER TO postgres; GRANT EXECUTE ON FUNCTION myschema.export_partition(text, text) TO postgres; GRANT EXECUTE ON FUNCTION myschema.export_partition(text, text) TO my_role; Please note that the code above uses the plsh language extension as explained below. It should also be noted that in our system bash is located in / bin / bash.

Interesting, isn't it?

Everything is almost done. So far we have made all the necessary changes inside the database to place the table partitions:

- Created a new master table

- Created trigger and trigger function for main table

- Created a partition maintenance function to export old partitions and lower them.

What we need to do to automate the service:

- Install the plsh extension

- Configure the OS to store the partition dumps

- Create a cron job to automate the call to the partition service function

PostgreSQL and OS configuration

Enable PLSH in PostgreSQL

The PLSH extension is needed in PostgreSQL to run shell commands. It is used in myschema.export_partition (text, text) to dynamically create shell lines to run pg_dump. From as root, run the following commands

root# cd /usr/local/src # .so postgresql root# curl -L href="https://github.com/petere/plsh/archive/9a429a4bb9ed98e80d12a931f90458a712d0adbd.tar.gz">https://github.com/petere/plsh/archive/9a429a4bb9ed98e80d12a931f90458a712d0adbd.tar.gz -o plsh.tar.gz root# tar zxf plsh.tar.gz root# cd plsh-*/ root# make all install # ! postgres header root# su - postgres # postgresql postgres$ psql my_database # my_database> CREATE EXTENSION plsh; # : Create a folder

root# mkdir -p /db/partition_dump Make sure that the postgres user owns the folder, and that the user's deployment group has access to read these files. The user for the default deployment in Engine Yard Cloud is the user 'deploy'.

root# chown postgres:deploy /db/partition_dump More information about PL / SH can be found in the plsh project documentation.

Partition scheduling

The command below will schedule partition_maintenance every day at midnight.

root# su - postgres ## , cron job postgres$ mkdir -p $HOME/bin/pg_jobs ## postgres$ cat > $HOME/bin/pg_jobs/myschema_partition_maintenance #!/bin/bash # : psql . psql -U postgres glimpse <<SQL SELECT myschema.partition_maintenance('server'::text, 'server_master'::text, now()::date ); SQL ## <ctrl+d> “cat” postgres$ exit ## postgres : root# chmod +x /home/postgres/bin/pg_jobs/myschema_partition_maintenance # Make script executable root# crontab -u postgres -e ## : 0 0 * * * /home/postgres/bin/pg_jobs/myschema_partition_maintenance Check the cron jobs for the postgres user to verify that the crontab line is correct:

root# crontab -u postgres -l 0 0 * * * / home / postgres / bin / pg_jobs / myschema_partition_maintenance

Make sure that you have a backup copy of the / db / partition_dump folder if you are not using an instance on Engine Yard Cloud. If you need this information again, you will need these files to restore old partitions. This can be done using rsyncing (copying) these files to another server for greater certainty. We believe that S3 is great for such archiving.

So, we have planned that the maintenance of your main table will be performed at a certain time, and you can relax, knowing that you have done something special: an agile database that will follow the diet itself!

We load old partitions

If you are tormented by separation from old data, or there may be a request for compliance control found on your desktop, you can still load old partitions from system files.

To do this, we will go to the / db / partition_dump folder on your local db server and identify the required file. The postgres user then imports this file into the database.

postgres$ cd /db/partition_dump postgres$ ls # postgres$ psql my_database < name_of_partition_dump_file After the file is uploaded, you can again send requests from the main table to it. Do not forget that the next time the scheduler starts processing a partition, this old partition will be exported again.

Let's see it in work

Creating child tables

Let's load two lines of information to look at the new subsidiary partition in action. Open the psql session and run the following command:

postgres$ psql my_database my_database> INSERT INTO myschema.server_master (server_id, cpu, memory, disk, time) VALUES (123, 20.14, 4086000, '{sda1:510000}', 1359457620); --Will create "myschema"."servers_2013-01-29" my_database> INSERT INTO myschema.server_master (server_id, cpu, memory, disk, time) VALUES (123, 50.25, 4086000, '{sda1:500000}', 1359547500); --Will create "myschema"."servers_2013-01-30" So what happened? Assuming you run this for the first time, two child tables were created. See the comments in the sql message for creating child tables. The first insert can be seen by choosing from the main or from the child table:

SELECT * FROM myschema.server_master; -- SELECT * FROM myschema."server_2013-01-29"; -- Notice that we use double quotes around the table name of the child partition. We do this not because it is an inherited table, but because of the hyphen used between year-month-day.

We start service of tables

Two lines that we have made more than 15 days. After manually starting the partition service (as it would be started via cron), two partitions will be exported to the OS, and these partitions will be dropped.

postgres $ / home / postgres / bin / pg_jobs / myschema_partition_maintenance

Upon completion, we will be able to see two exported files:

postgres$ cd /db/partition_dump postgres$ ls -alh … -rw------- 1 postgres postgres 1.0K Feb 16 00:00 servers_2013-01-29_20130216_000000.000000.sql.gz -rw------- 1 postgres postgres 1.0K Feb 16 00:00 servers_2013-01-30_20130216_000000.000000.sql.gz If you try to select the main table, it will return 0 rows to you, so that the two child tables no longer exist.

Loading old partitions

if you want to load old child tables, first gunzip and then load with psql:

postgres$ cd /db/partition_dump postgres$ gunzip servers_2013-01-29_20130216_000000.000000.sql.gz postgres$ psql my_database < servers_2013-01-29_20130216_000000.000000.sql If you send a select to the main table, the result will be 1 row - the child table is restored.

Notes

Our database files are on a partition on / db which is separated from our root ('/') partition.

To learn more about PostgreSQL extensions, read the following documentation .

The database engine will not return the correct number of rows of the affected tables (always 0 affected tables) after sending INSERT or UPDATE to the main table. If you use Ruby, do not forget to tweak the code, given that pg jam will not correctly display the correct values when reporting cmd_tuples. If you use ORM, then hopefully they fix it accordingly.

Do not forget to backup exported partitions to / db / partition_dump, these files lie outside the standard path to create database backups.

The database user who performs INSERT into the main table must also have DDL permissions to create child tables.

When implementing INSERT into the main table, a slight change in performance will be noticeable, since the trigger function will be launched.

Be sure to use the latest version of PostgreSQL. This ensures that you are working with the most stable and secure version.

This solution works for my situation, your requirements may be different, so feel free to change, add to, cripple, laugh hysterically or copy it for your own purposes.

Ps my translation I hope the article will be useful.

Source: https://habr.com/ru/post/171745/

All Articles