How Google changed Android with help from your brain

In the latest version of its Android mobile operating system, web giant Google has made big changes to how the OS interprets your voice commands. The company built a voice recognition system based on what is called a neural network: a computerized learning system that behaves much like the human brain.

For many users, says Vincent Vanhoucke, a Google research scientist who helped lead the effort, the results were impressive. “It was something of a surprise how much we could improve recognition just by changing the model,” he says.


Vanhoucke notes that the recognition error rate in the new version of Android, known as Jelly Bean, is about 25% lower than in previous versions, and that this makes people more comfortable with voice commands. Today, he says, users tend to use more natural language when speaking to their phones. In other words, they act less as if they were talking to a robot. "It really changes people's behavior."

And this is just one example of how algorithms based on neural networks are changing the way our technologies work, and how we use them. The field drew little interest for many years after the neural network enthusiasm of the 1980s faded, but now it is making a comeback: Microsoft, IBM, and Google are all working on applying neural networks to real products.

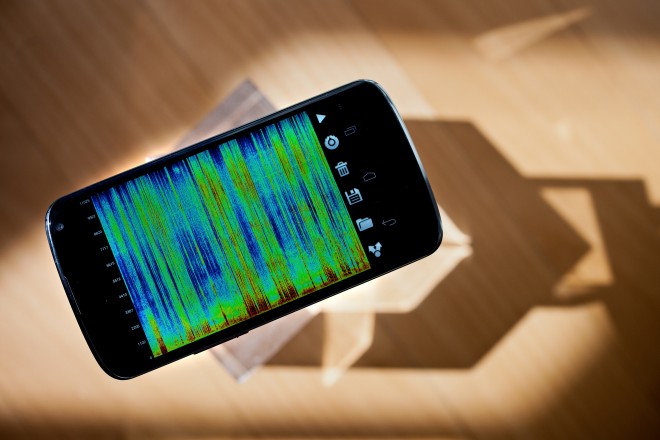

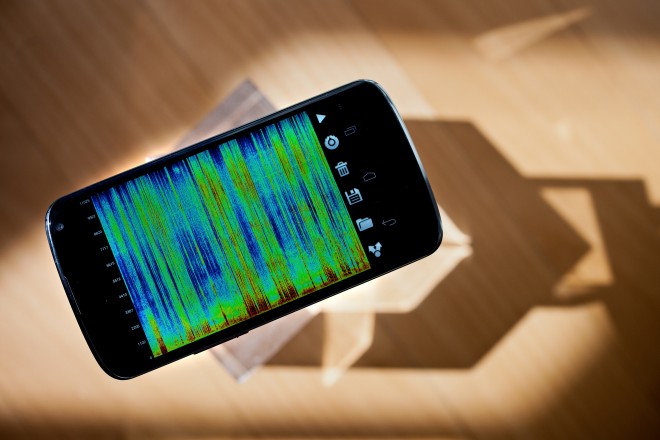

When you talk to Android’s voice recognition system, the spectrogram of your speech is chopped up and sent to eight different computers in Google's huge army of servers. It is then processed using the neural network models developed by Vanhoucke and his team. Google is known for its ability to break up large computing jobs and process them quickly. To work out how to do that here, Google turned to Jeff Dean and his team of engineers, a group known for reinventing how the modern data center works.
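To make the "chopped up and sent to eight computers" step concrete, here is a minimal sketch in Python. The article does not say how Google actually divides the spectrogram, so the contiguous, near-equal split below is an assumption, and `split_into_chunks` and the toy data are hypothetical names made up for illustration.

```python
def split_into_chunks(frames, n_workers=8):
    """Split a list of spectrogram frames into n_workers contiguous chunks.

    Chunk sizes differ by at most one frame. This splitting rule is an
    assumption for illustration, not Google's documented scheme.
    """
    base, extra = divmod(len(frames), n_workers)
    chunks = []
    start = 0
    for i in range(n_workers):
        # The first `extra` chunks absorb one leftover frame each.
        size = base + (1 if i < extra else 0)
        chunks.append(frames[start:start + size])
        start += size
    return chunks


# Toy "spectrogram": 20 time frames, each holding 4 frequency-bin energies.
frames = [[float(t + f) for f in range(4)] for t in range(20)]

chunks = split_into_chunks(frames)
for worker_id, chunk in enumerate(chunks):
    print(f"worker {worker_id}: {len(chunk)} frames")
```

Each chunk could then be handed to a separate machine. How the partial results are combined afterwards is not described in the article and is left out here.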

Neural networks give researchers such as Vanhoucke a way to analyze a great many patterns (in the case of Jelly Bean, spectrograms of spoken words) and then predict what a new pattern might represent. The metaphor comes from biology, where neurons in the body form networks that let them process signals in specialized ways. For the neural network that Jelly Bean uses, Google built several models of how language works, for example one for English-language search queries, based on an analysis of vast amounts of real-world data.

“People believed for a long, long time, partly based on what you see in the brain, that to get a good perception system you need to use several layers of features,” said Geoffrey Hinton, a computer science professor at the University of Toronto. “But the question is how you can train them effectively.”

Android captures a spectrogram of the voice command, in effect a picture of it, and Google processes it with its neural network model to determine what is being said.

First, Google's software tries to break the speech into its separate parts: the different vowel and consonant sounds that make up words. This is one layer of the neural network. It then uses this information to build more sophisticated guesses, combining sounds into words and words into sentences. Each layer of the model brings the system closer to working out what is actually being said.
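The layered idea can be sketched as a tiny feed-forward network that turns one spectrogram frame into probabilities over speech sounds. This is only an illustration under stated assumptions: the layer sizes, the hand-picked weights, and the three sound labels are all hypothetical, and a real system would learn its weights from data and use far larger layers.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer; each row of `weights` feeds one output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Keep positive activations, zero out the rest."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical, hand-picked weights (a trained model would learn these).
W1 = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]     # 3 inputs -> 2 hidden units
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0], [-0.5, 0.5], [0.2, 0.3]]   # 2 hidden units -> 3 sounds
b2 = [0.0, 0.0, 0.0]
SOUNDS = ["a", "s", "t"]                      # illustrative labels only


def classify_frame(frame):
    """Lower layer extracts features; upper layer scores each sound."""
    hidden = relu(dense(frame, W1, b1))
    probs = softmax(dense(hidden, W2, b2))
    return dict(zip(SOUNDS, probs))


scores = classify_frame([0.9, 0.1, 0.3])
best = max(scores, key=scores.get)
print(best, scores)
```

Stacking more layers on top, for instance to turn sound probabilities into word guesses, follows the same pattern: each layer takes the previous layer's output as its input.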

Neural network algorithms can also be used for image analysis. “What you want to do is find small pieces of structure in the pixels, such as corners in the picture,” says Hinton. “You can have a layer of feature detectors that pick out things like small corners. The next layer detects small combinations of corners to search for objects. Having done this, you go on to the next layer, and so on.”
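A toy version of this hierarchy is sketched below in Python. It assumes the lowest level detects edges and the next level combines two edge responses into a corner detector. The fixed 2x2 kernels and the simple "both edges present" rule are simplifications chosen for this example; in a trained network these detectors would be learned rather than written by hand.

```python
def correlate(image, kernel):
    """Slide a small kernel over the image (valid 2D cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


# Level 1: hand-written edge detectors (assumed, not learned).
VERTICAL_EDGE = [[-1, 1], [-1, 1]]     # positive when the right side is brighter
HORIZONTAL_EDGE = [[-1, -1], [1, 1]]   # positive when the bottom is brighter

# 4x4 image with a bright square in the lower-right corner.
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

v = correlate(image, VERTICAL_EDGE)
h = correlate(image, HORIZONTAL_EDGE)

# Level 2: flag a corner wherever both edge detectors respond.
corners = [[1 if v[r][c] > 0 and h[r][c] > 0 else 0
            for c in range(len(v[0]))]
           for r in range(len(v))]

print(corners)  # [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
```

The single 1 marks the patch that contains the top-left corner of the bright square. A further level could combine several corner responses to look for whole shapes.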

Neural networks promised to do this back in the 1980s, but at the time such a multi-layer approach to analysis was hard to put into practice.

In 2006 two big things changed. First, Hinton and his team worked out a better way to train deep neural networks, that is, networks made up of many layers of connections. Second, low-cost graphics processors came onto the market, giving researchers a much cheaper and faster way to do large-scale computation. “It's a huge difference when you can do things 30 times faster,” says Hinton.

Today, neural network algorithms are starting to take over voice and image recognition, but Hinton sees a future for them wherever prediction is needed. In November, researchers at the University of Toronto used neural networks to predict how drug molecules would behave in the real world.

Jeff Dean says that Google now uses neural network algorithms in a variety of products, some experimental and some not, but none is as far along as voice recognition in Jelly Bean. Google Street View, for example, can use neural networks to identify the kinds of objects in photographs, such as telling a house from a license plate.

And if you think none of this matters much to ordinary people, consider this. Last year, Google researchers, including Dean, built a neural network that learned on its own to identify cats in YouTube videos.

Microsoft and IBM are also studying neural networks. In October, Rick Rashid, Microsoft's chief research officer, gave a live demonstration in China of a voice recognition system based on a neural network. In the demonstration, Rashid spoke in English and paused after each phrase. For the audience, Microsoft's software simultaneously translated his speech into spoken Chinese. The program even adjusted its intonation to sound like Rashid.

“We still have a lot of work to do,” he said. “But the technology is very promising, and we hope that over the next few years we will be able to break down the language barrier between people. Personally, I think it will make our world a better place.”

Source: https://habr.com/ru/post/170143/
