Unit testing for dummies

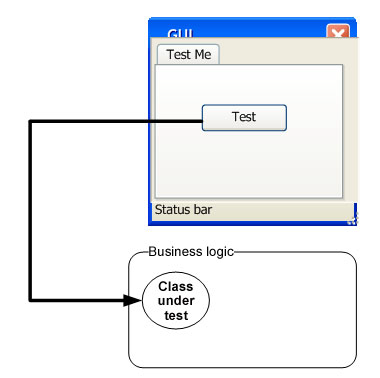

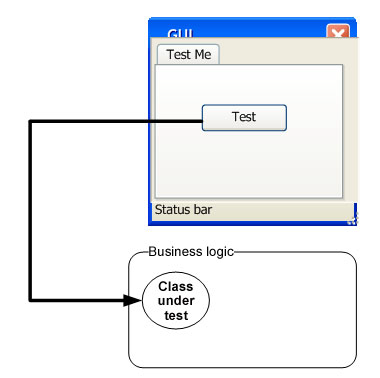

Even if you have never thought of it as testing, you do test. You compile your application, click a button and check whether the result matches your expectations. Quite often you can find test forms with a “Test it” button in an application, or classes called TestController or MyServiceTestClient.

What you are doing is called integration testing. Modern applications are quite complex and contain many dependencies. Integration testing verifies that several system components work together correctly.


Integration testing does its job, but it is hard to automate. As a rule, such tests require all or almost all of the system to be deployed and configured on the machine where they run. Suppose you are developing a web application with a UI and web services. At a minimum, you need a browser, a web server, properly configured web services and a database. In practice, it is even more complicated. Should all of this be deployed on the build server and on every developer's machine?

We need to go deeper

Let's first go down one level and make sure that our components work correctly in isolation.

Let's turn to Wikipedia:

Unit testing is a software development process that lets you verify the correctness of individual modules of a program's source code.

The idea is to write tests for every non-trivial function or method. This lets you quickly check whether a code change has caused a regression, that is, introduced errors into parts of the program that were already tested, and it also makes such errors easier to detect and fix.

Thus, unit testing is the first line of defense against bugs. Behind it come integration testing, acceptance testing and, finally, manual testing, including exploratory (“free search”) testing.

Do you need all this? From my point of view, the answer is: “not always.”

No need to write tests if

- You are making a simple business-card website: 5 static HTML pages and one contact form. The customer will most likely stop there and will not need anything more. There is no special logic here; it is faster to just check everything by hand.

- You are working on a promotional site, simple Flash games or banners: complex layout and animation or a large amount of static content. There is no logic, only presentation.

- You are building a project for an exhibition. The deadline is two weeks to a month, your system is a combination of hardware and software, and at the start of the project it is not fully known what exactly should come out at the end. The software will run for 1-2 days at the exhibition.

- You always write bug-free code and have perfect memory and foresight. Your code is so cool that it changes itself to follow the client's requirements. Sometimes the code explains to the client that his requirements do not need to be implemented.

In the first three cases, for objective reasons (tight deadlines, budgets, vague goals, or very simple requirements), you gain nothing from writing tests.

The last case deserves separate consideration. I know only one such person, and if you do not recognize yourself in the photo below, I have bad news for you.

Any long-term project without proper test coverage is doomed sooner or later to be rewritten from scratch.

In my practice, I have often come across projects that are more than a year old. They fall into three categories:

- Not covered by tests. Such systems usually come with spaghetti code and lead developers who have already resigned. No one in the company knows exactly how it all works, and the employees have only a vague idea of what it is ultimately supposed to do.

- With tests that no one runs or maintains. There are tests in the system, but what they test and what results are expected from them is unknown. The situation is already better: there is some kind of architecture and some understanding of what loose coupling is. You can find some documentation. Most likely, the company still employs the system's main developer, who keeps the features and intricacies of the code in his head.

- With serious coverage. All tests pass. If the tests in a project are actually run, there are a lot of them, many more than in systems from the previous group. And each of them is atomic: one test checks only one thing. A test is a specification of a class method, a contract: what input parameters the method expects and what other components of the system expect from it as output. There are far fewer such systems. They contain an up-to-date specification. There is little prose: usually a couple of pages describing the main features, server layouts and a getting started guide. In this case, the project does not depend on people. Developers can come and go. The system is reliably tested and describes itself through its tests.

Projects of the first type are a tough nut to crack; they are the hardest to work with. Typically, refactoring them costs as much as or more than rewriting them from scratch.

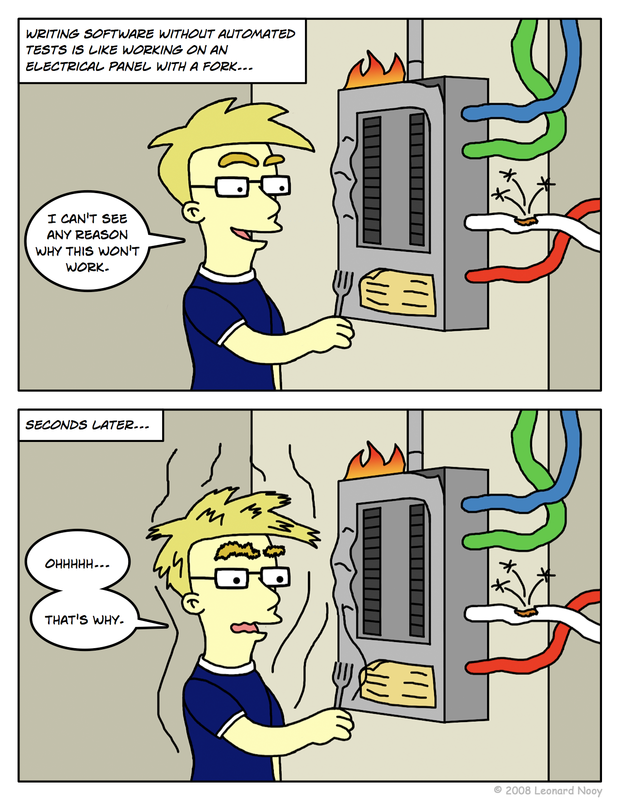

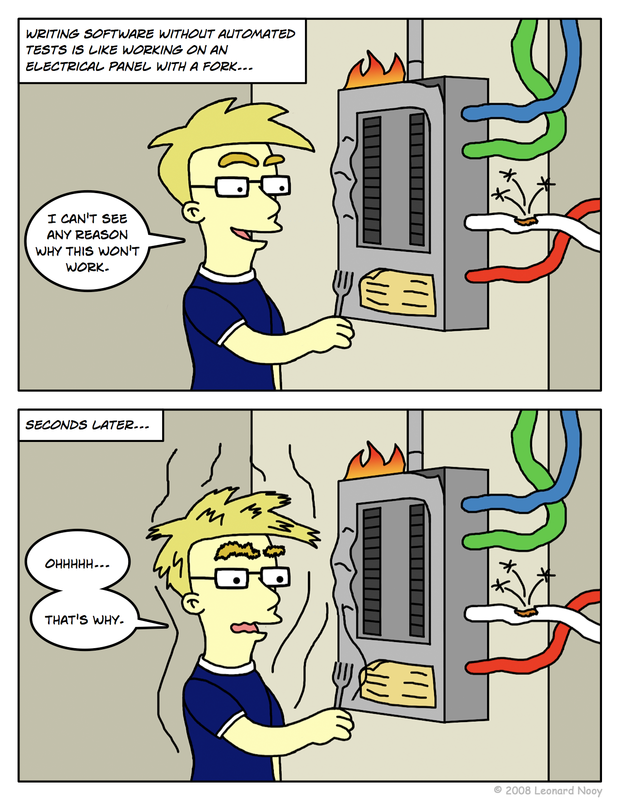

Why are there projects of the second type?

Colleagues from ScrumTrek claim that the dark side of the code and Lord Darth Autotestius are to blame. I am convinced this is very close to the truth. Thoughtlessly written tests not only fail to help, they harm the project. If you used to have one poor-quality product, then after writing tests without understanding the subject you get two, and twice the maintenance effort.

To keep the dark side of the code from taking over, stick to the following basic rules.

Your tests should:

- Be trustworthy

- Not depend on the environment in which they run

- Be easy to maintain

- Be easy to read and understand (even a new developer should understand what is being tested)

- Follow a uniform naming convention

- Run regularly and automatically

Meeting these requirements takes patience and willpower. But let's take them one at a time.

Choose a logical location for the tests in your VCS

There is no other way: your tests must be part of version control. Depending on the type of your solution, they can be organized differently. General recommendation: if the application is monolithic, put all the tests in a Tests folder; if you have many different components, keep the tests in each component's folder.

Choose a naming scheme for test projects

One of the best practices is to give each project its own test project. Do you have <PROJECT_NAME>.Core, <PROJECT_NAME>.Bl and <PROJECT_NAME>.Web? Add <PROJECT_NAME>.Core.Tests, <PROJECT_NAME>.Bl.Tests and <PROJECT_NAME>.Web.Tests.

This naming convention has a useful side effect: you can use the pattern *.Tests.dll to run the tests on the build server.

Use the same naming scheme for test classes

Do you have a ProblemResolver class? Add ProblemResolverTests to the test project. Each test class must test only one entity. Otherwise, you will very quickly slide into the sad second type of project (with tests that no one runs).

Choose “self-describing” names for test methods

TestLogin is not the best name for a method. What exactly is being tested? What are the input parameters? Can errors or exceptions occur?

In my opinion, the best way to name test methods is [MethodUnderTest]_[Scenario]_[ExpectedBehavior].

Suppose we have a Calculator class with a Sum method that (hello, Captain Obvious!) adds two numbers.

In this case, our test class will look like this:

```csharp
using NUnit.Framework;

[TestFixture]
public class CalculatorTests
{
    [Test]
    public void Sum_2Plus5_7Returned()
    {
        // …
    }
}
```

This name needs no explanation. It is a specification for your code.

Choose a test framework that suits you

Regardless of the platform, do not reinvent the wheel. I have seen many projects in which automated tests (mostly acceptance tests rather than unit tests) were launched from a console application. Do not do this; everything has already been done for you.

Spend a little more time reviewing the available frameworks. For example, many .NET developers use MSTest only because it ships with Visual Studio. I prefer NUnit: it does not create extra folders with test results and it supports parameterized tests. I can run my NUnit tests from ReSharper just as easily. Others will love the elegance of xUnit: a constructor instead of setup attributes, and an IDisposable implementation instead of TearDown.
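To illustrate NUnit's parameterized tests, here is a minimal sketch, assuming the NUnit package is referenced and a Calculator like the one above (the Divide method is a hypothetical addition for this example). Each [TestCase] runs the same test method with a different set of arguments, and the second test applies the [MethodUnderTest]_[Scenario]_[ExpectedBehavior] convention to an error scenario.

```csharp
using System;
using NUnit.Framework;

// Hypothetical class under test (Divide is added only for this illustration).
public class Calculator
{
    public int Sum(int a, int b) => a + b;

    // Integer division throws DivideByZeroException when b == 0.
    public int Divide(int a, int b) => a / b;
}

[TestFixture]
public class CalculatorTests
{
    // NUnit runs this method once per [TestCase] and reports each case separately.
    [TestCase(2, 5, 7)]
    [TestCase(-1, 1, 0)]
    [TestCase(0, 0, 0)]
    public void Sum_TwoNumbers_SumReturned(int a, int b, int expected)
    {
        // arrange
        var calc = new Calculator();

        // act
        var result = calc.Sum(a, b);

        // assert
        Assert.That(result, Is.EqualTo(expected));
    }

    [Test]
    public void Divide_DenominatorIsZero_DivideByZeroExceptionThrown()
    {
        var calc = new Calculator();

        Assert.Throws<DivideByZeroException>(() => calc.Divide(1, 0));
    }
}
```

Adding a new case is one attribute line rather than a copy-pasted test method.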

What to test and what not?

Some people argue that code must be 100% covered; others consider that a waste of resources.

I like this approach: draw X and Y axes on a sheet of paper, where X is algorithmic complexity and Y is the number of dependencies. Your code then falls into four groups.

Let's first consider the extreme cases: simple code without dependencies and complex code with many dependencies.

- Simple code without dependencies. Everything here is most likely clear anyway. It does not have to be tested.

- Complex code with many dependencies. Hmm, if you have code like this, it smells of a God Object and tight coupling. Most likely, it should be refactored. We will not cover this code with unit tests, because we are going to rewrite it, which means we will change method signatures and create new classes. So why write tests that will have to be thrown away? I should add that this kind of refactoring still needs testing, but higher-level acceptance tests are the better tool for it. We will consider this case separately.

What we have left:

- Complex code without dependencies. These are algorithms or business logic. They are important parts of the system, so test them.

- Not very complex code with dependencies. This code ties different components together. Tests are important here to pin down exactly how the interaction should happen. The Mars Climate Orbiter was lost on September 23, 1999 because of a software and human error: one team's software produced results in pound-force seconds (English units), while the other team's software expected newton-seconds (SI units), and this was discovered only after the spacecraft was lost. The outcome might have been different if the teams had tested the “seams” of the application.
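A seam test checks the contract at the boundary between two components, including its units. The sketch below assumes NUnit; ThrusterTelemetry and ImpulseAdapter are hypothetical stand-ins for two teams' components, and the only real-world fact used is the definition 1 lbf = 4.4482216152605 N (the actual Mars Climate Orbiter mismatch was between pound-force seconds and newton-seconds).

```csharp
using NUnit.Framework;

// Hypothetical component of "team A": reports thruster impulse in pound-force seconds.
public class ThrusterTelemetry
{
    public ThrusterTelemetry(double impulsePoundForceSeconds) =>
        ImpulsePoundForceSeconds = impulsePoundForceSeconds;

    public double ImpulsePoundForceSeconds { get; }
}

// Hypothetical adapter at the seam: "team B" works in SI units (newton-seconds).
public static class ImpulseAdapter
{
    // 1 lbf is defined as exactly 4.4482216152605 N, so 1 lbf*s = 4.4482216152605 N*s.
    public const double NewtonsPerPoundForce = 4.4482216152605;

    public static double ToNewtonSeconds(ThrusterTelemetry telemetry) =>
        telemetry.ImpulsePoundForceSeconds * NewtonsPerPoundForce;
}

[TestFixture]
public class ImpulseAdapterTests
{
    // Fails if someone passes the raw pound-force value through without converting it.
    [Test]
    public void ToNewtonSeconds_OnePoundForceSecond_ConvertedToSi()
    {
        var telemetry = new ThrusterTelemetry(1.0);

        var result = ImpulseAdapter.ToNewtonSeconds(telemetry);

        Assert.That(result, Is.EqualTo(4.4482216152605).Within(1e-9));
    }
}
```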

Use a consistent structure for the test body

The AAA approach (arrange, act, assert) has proven itself well. Let's go back to the calculator example:

```csharp
public class CalculatorTests
{
    [Test]
    public void Sum_2Plus5_7Returned()
    {
        // arrange
        var calc = new Calculator();

        // act
        var res = calc.Sum(2, 5);

        // assert
        Assert.AreEqual(7, res);
    }
}
```

This form is much easier to read than

```csharp
public class CalculatorTests
{
    [Test]
    public void Sum_2Plus5_7Returned()
    {
        Assert.AreEqual(7, new Calculator().Sum(2, 5));
    }
}
```

And so the AAA form is also easier to maintain.

Test one thing at a time

Each test should check only one thing. If a process is too complex (for example, a purchase in an online store), split it into several parts and test them separately.

If you do not follow this rule, your tests will become unreadable, and soon they will be very hard to maintain.
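A minimal sketch of such a split, assuming NUnit and a hypothetical Cart class (not from the original article): summing the prices and applying a discount are checked by separate tests, so a failure points at exactly one step.

```csharp
using System.Collections.Generic;
using System.Linq;
using NUnit.Framework;

// Hypothetical shopping cart used only for this illustration.
public class Cart
{
    private readonly List<decimal> _prices = new List<decimal>();

    public void Add(decimal price) => _prices.Add(price);

    public decimal Total => _prices.Sum();

    // Hypothetical rule: 10% off orders above 100.
    public decimal TotalWithDiscount => Total > 100m ? Total * 0.9m : Total;
}

[TestFixture]
public class CartTests
{
    // Checks only the summing step.
    [Test]
    public void Total_TwoItems_SumOfPricesReturned()
    {
        var cart = new Cart();
        cart.Add(30m);
        cart.Add(20m);

        Assert.That(cart.Total, Is.EqualTo(50m));
    }

    // Checks only the discount step.
    [Test]
    public void TotalWithDiscount_TotalAbove100_TenPercentOff()
    {
        var cart = new Cart();
        cart.Add(200m);

        Assert.That(cart.TotalWithDiscount, Is.EqualTo(180m));
    }
}
```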

Dealing with dependencies

So far we have tested the calculator, which has no dependencies at all. In modern business applications, unfortunately, such classes are few.

Consider this example.

```csharp
public class AccountManagementController : BaseAdministrationController
{
    #region Vars
    private readonly IOrderManager _oms;
    private readonly IAccountData _accountData;
    private readonly IUserManager _userManager;
    private readonly FilterParam _disabledAccountsFilter;
    #endregion

    public AccountManagementController()
    {
        _oms = OrderManagerFactory.GetOrderManager();
        _accountData = _oms.GetComponent<IAccountData>();
        _userManager = UserManagerFactory.Get();
        _disabledAccountsFilter = new FilterParam("Enabled", Expression.Eq, true);
    }
}
```

The factory in this example takes information about which implementation of AccountData to use from the configuration file, and that does not suit us at all. We do not want to maintain a zoo of *.config files. Moreover, these implementations may depend on the database. If we carry on like this, we will stop testing only the controller's methods and start testing other system components along with them. As we remember, this is called integration testing.

To avoid testing everything together, we will slip in a fake implementation.

Let's rewrite our class like this:

```csharp
public class AccountManagementController : BaseAdministrationController
{
    #region Vars
    private readonly IOrderManager _oms;
    private readonly IAccountData _accountData;
    private readonly IUserManager _userManager;
    private readonly FilterParam _disabledAccountsFilter;
    #endregion

    public AccountManagementController()
    {
        _oms = OrderManagerFactory.GetOrderManager();
        _accountData = _oms.GetComponent<IAccountData>();
        _userManager = UserManagerFactory.Get();
        _disabledAccountsFilter = new FilterParam("Enabled", Expression.Eq, true);
    }

    /// <summary>
    /// For testability
    /// </summary>
    /// <param name="accountData"></param>
    /// <param name="userManager"></param>
    public AccountManagementController(
        IAccountData accountData,
        IUserManager userManager)
    {
        _accountData = accountData;
        _userManager = userManager;
        _disabledAccountsFilter = new FilterParam("Enabled", Expression.Eq, true);
    }
}
```

Now the controller has a new entry point, and we can pass other implementations of the interfaces into it.

Fakes: stubs & mocks

We rewrote the class, and now we can give the controller other implementations of its dependencies that will not go to the database, read configs, etc. In a word, they will do only what is required of them. Divide and conquer. The real implementations must be tested separately, in their own test classes. Right now we are testing only the controller.

There are two types of fakes: stubs and mocks.

These concepts are often confused. The difference is that a stub checks nothing; it only imitates a given state. A mock is an object that has expectations, for example, that a given method of a class must be called a certain number of times. In other words, your test will never fail because of a stub, but it can fail because of a mock.

Technically, this means that when we use stubs, the Assert checks the state of the class under test or the result of the method being tested. When we use a mock, we check whether the mock's expectations match the behavior of the class under test.

Stub

```csharp
[Test]
public void LogIn_ExistingUser_HashReturned()
{
    // Arrange
    // (UserManager and AccountData are assumed to be stub fields set up elsewhere, e.g. in [SetUp])
    OrderProcessor = Mock.Of<IOrderProcessor>();
    OrderData = Mock.Of<IOrderData>();
    LayoutManager = Mock.Of<ILayoutManager>();
    NewsProvider = Mock.Of<INewsProvider>();
    Service = new IosService(
        UserManager,
        AccountData,
        OrderProcessor,
        OrderData,
        LayoutManager,
        NewsProvider);

    // Act
    var hash = Service.LogIn("ValidUser", "Password");

    // Assert
    Assert.That(!string.IsNullOrEmpty(hash));
}
```

Mock

```csharp
[Test]
public void Create_AddAccountToSpecificUser_AccountCreatedAndAddedToUser()
{
    // Arrange
    var account = Mock.Of<AccountViewModel>();

    // Act
    _controller.Create(1, account);

    // Assert
    _accountData.Verify(m => m.CreateAccount(It.IsAny<IAccount>()), Times.Exactly(1));
    _accountData.Verify(m => m.AddAccountToUser(It.IsAny<int>(), It.IsAny<int>()), Times.Once());
}
```

State testing and interaction testing

Why is it important to understand the seemingly insignificant difference between mocks and stubs? Imagine that we need to test an automatic irrigation system. There are two ways to approach this task:

State testing

Run the cycle (12 hours). After 12 hours, check whether the plants are well watered, whether there is enough water, what condition the soil is in, etc.

Interaction testing

Install sensors that detect when watering starts and ends and how much water comes out of the system.

Stubs are used in state testing, and mocks in interaction testing. It is better to use no more than one mock per test. Otherwise, you are very likely violating the principle of “test only one thing”. A single test can, however, contain as many stubs as you like, or one mock plus stubs.
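To make the difference concrete, here is a sketch of the irrigation example with hand-written fakes, assuming NUnit for the assertions. All names (ISoilSensor, IWaterValve, IrrigationController and the fakes) are hypothetical, and the fakes are written by hand only to make the distinction visible; an isolation framework such as Moq would normally generate them. The stub supplies a canned soil reading; the mock records calls so the test can check the interaction.

```csharp
using NUnit.Framework;

public interface ISoilSensor { double Moisture { get; } }   // 0.0 = dry, 1.0 = soaked
public interface IWaterValve { void Open(); void Close(); }

// Hypothetical class under test: waters the plants when the soil is too dry.
public class IrrigationController
{
    private readonly ISoilSensor _sensor;
    private readonly IWaterValve _valve;

    public IrrigationController(ISoilSensor sensor, IWaterValve valve)
    {
        _sensor = sensor;
        _valve = valve;
    }

    public bool RunCycle()
    {
        if (_sensor.Moisture >= 0.3) return false;   // hypothetical threshold
        _valve.Open();
        _valve.Close();
        return true;
    }
}

// Stub: imitates a given state and checks nothing.
public class DrySoilStub : ISoilSensor
{
    public double Moisture => 0.1;
}

// Mock: records interactions so the test can verify expectations.
public class ValveMock : IWaterValve
{
    public int OpenCalls { get; private set; }
    public int CloseCalls { get; private set; }
    public void Open() => OpenCalls++;
    public void Close() => CloseCalls++;
}

[TestFixture]
public class IrrigationControllerTests
{
    // State test: the valve fake is never checked here, so it only plays a stub's role.
    [Test]
    public void RunCycle_DrySoil_ReturnsTrue()
    {
        var controller = new IrrigationController(new DrySoilStub(), new ValveMock());

        Assert.That(controller.RunCycle(), Is.True);
    }

    // Interaction test: one mock, and we assert on how it was used.
    [Test]
    public void RunCycle_DrySoil_ValveOpenedAndClosedOnce()
    {
        var valve = new ValveMock();
        var controller = new IrrigationController(new DrySoilStub(), valve);

        controller.RunCycle();

        Assert.That(valve.OpenCalls, Is.EqualTo(1));
        Assert.That(valve.CloseCalls, Is.EqualTo(1));
    }
}
```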

Isolation frameworks

We could implement mocks and stubs ourselves, but there are several reasons why I advise against it:

- The wheel has already been invented for us

- Many interfaces are not that easy to implement off the cuff

- Our handwritten fakes may contain errors

- This is additional code that will have to be maintained

In the example above, I used the Moq framework to create mocks and stubs. Rhino Mocks is also quite common. Both frameworks are free. In my opinion they are almost equivalent, but Moq is subjectively more convenient.

There are also two commercial frameworks on the market: TypeMock Isolator and Microsoft Moles. In my opinion, their ability to replace non-virtual and static methods is excessive. Although this may be useful when working with legacy code, I explain below why I do not advise doing such things.

A showcase of the listed isolation frameworks can be found here. Information on the technical aspects of working with them is easy to find on Habr.

Testable architecture

Let's go back to the controller example.

```csharp
public AccountManagementController(
    IAccountData accountData,
    IUserManager userManager)
{
    _accountData = accountData;
    _userManager = userManager;
    _disabledAccountsFilter = new FilterParam("Enabled", Expression.Eq, true);
}
```

Here we got off lightly. Unfortunately, things are not always this simple. Let's look at the main ways to inject dependencies:

Constructor injection

Add an additional constructor or replace the current one (depending on how objects are created in your application and whether you use an IoC container). We used this approach in the example above.

Injection into the factory

Add a setter to the factory through which a test can substitute the object the factory returns. The setter can additionally be “hidden” from the main application if you extract an IUserManagerFactory interface and work with the factory through that interface in production code.

```csharp
public class UserManagerFactory
{
    private IUserManager _instance;

    /// <summary>
    /// Get UserManager instance
    /// </summary>
    /// <returns>IUserManager with configuration from the configuration file</returns>
    public IUserManager Get()
    {
        return _instance ?? Get(UserConfigurationSection.GetSection());
    }

    private IUserManager Get(UserConfigurationSection config)
    {
        // Create(config) builds the real implementation; it is not shown here.
        return _instance ?? (_instance = Create(config));
    }

    /// <summary>
    /// For testing purposes only!
    /// </summary>
    /// <param name="userManager"></param>
    public void Set(IUserManager userManager)
    {
        _instance = userManager;
    }
}
```

Factory substitution

You can replace the whole factory. This requires extracting an interface or making the object-creating methods virtual. After that, you can override the factory methods so that they return your fakes.

Override local factory method

If dependencies are instantiated directly in the code, the easiest way is to extract a protected factory method CreateObjectName() and override it in a derived class. Then test the derived class instead of the original class under test.

For example, suppose we decided to write an extensible calculator (with complex operations) and started extracting a new layer of abstraction.

```csharp
public class Calculator
{
    public double Multiply(double a, double b)
    {
        var multiplier = new Multiplier();
        return multiplier.Execute(a, b);
    }
}

public interface IArithmetic
{
    double Execute(double a, double b);
}

public class Multiplier : IArithmetic
{
    public double Execute(double a, double b)
    {
        return a * b;
    }
}
```

We do not want to test the Multiplier class here; it will have its own separate test. Let's rewrite the code like this:

```csharp
public class Calculator
{
    public double Multiply(double a, double b)
    {
        var multiplier = CreateMultiplier();
        return multiplier.Execute(a, b);
    }

    // Protected virtual factory method: the seam that tests override.
    protected virtual IArithmetic CreateMultiplier()
    {
        var multiplier = new Multiplier();
        return multiplier;
    }
}

// Derived class used only in tests: returns a fake instead of the real Multiplier.
public class CalculatorUnderTest : Calculator
{
    protected override IArithmetic CreateMultiplier()
    {
        return new FakeMultiplier();
    }
}

public class FakeMultiplier : IArithmetic
{
    public double Execute(double a, double b)
    {
        return 5;
    }
}
```

The code is intentionally simplified to focus on illustrating the technique. In real life, instead of a calculator you will most likely have DataProviders, UserManagers and other entities with much more complex logic.

Testable architecture vs. OOP

Many developers start to complain that this “design for testability” breaks encapsulation and exposes too much. I think there are only two reasons for such concerns:

Strong security requirements

This means you have serious cryptography, the binaries are packed, and everything is covered with certificates.

Even so, you can most likely find a compromise. For example, in .NET you can use internal methods and the [InternalsVisibleTo] attribute to give your test assemblies access to them.
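A minimal sketch, assuming a hypothetical production assembly with an AccountService class and a test assembly named MyApp.Core.Tests (following the <PROJECT_NAME>.Core.Tests convention above). InternalsVisibleToAttribute lives in System.Runtime.CompilerServices; when the test assembly is strong-named, the attribute must also carry that assembly's full public key.

```csharp
using System.Runtime.CompilerServices;

// Lets the (hypothetical) test assembly MyApp.Core.Tests see internal members.
// If the test assembly is strong-named, give its full public key instead:
// [assembly: InternalsVisibleTo("MyApp.Core.Tests, PublicKey=<full public key>")]
[assembly: InternalsVisibleTo("MyApp.Core.Tests")]

public class AccountService
{
    public bool IsValidLogin(string login) => NormalizeLogin(login).Length >= 3;

    // internal: hidden from other assemblies, but callable from MyApp.Core.Tests.
    internal static string NormalizeLogin(string login) => login.Trim().ToLowerInvariant();
}
```

The public surface of the class stays unchanged; only the named test assembly gains access to NormalizeLogin.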

Performance

For some tasks, architecture has to be sacrificed for performance, and some people use this as a reason to abandon testing. In my practice, adding a server or upgrading hardware has always been cheaper than writing untestable code. If you have a critical section, it is probably worth rewriting it at a lower level. Is your application written in C#? Perhaps it makes sense to build a single unmanaged assembly in C++.

Here are some principles that help you write testable code:

- Think in interfaces, not classes: then you can always easily replace real implementations with fakes in test code.

- Avoid instantiating objects directly inside methods that contain logic. Use factories or dependency injection. An IoC container in the project can greatly simplify this work.

- Avoid calling static methods directly.

- Avoid constructors that contain logic: such classes are hard to test.
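For example, a direct call to a static member such as DateTime.Now makes a method's result depend on the moment the test runs. A minimal sketch of the principles above, assuming NUnit and hypothetical names (IClock, SystemClock, FixedClock, Greeter): the static call is hidden behind an interface, the dependency arrives through a constructor that contains no logic, and the test supplies a fixed time.

```csharp
using System;
using NUnit.Framework;

// Hypothetical abstraction over the static DateTime.Now.
public interface IClock { DateTime Now { get; } }

// Production implementation: the only place that touches the static member.
public class SystemClock : IClock
{
    public DateTime Now => DateTime.Now;
}

// Test double returning a fixed time.
public class FixedClock : IClock
{
    private readonly DateTime _now;
    public FixedClock(DateTime now) => _now = now;
    public DateTime Now => _now;
}

// The class under test asks the injected clock instead of calling DateTime.Now,
// and its constructor only stores the dependency (no logic).
public class Greeter
{
    private readonly IClock _clock;
    public Greeter(IClock clock) => _clock = clock;

    public string Greet() => _clock.Now.Hour < 12 ? "Good morning" : "Good afternoon";
}

[TestFixture]
public class GreeterTests
{
    [Test]
    public void Greet_NineInTheMorning_GoodMorningReturned()
    {
        var greeter = new Greeter(new FixedClock(new DateTime(2013, 2, 20, 9, 0, 0)));

        Assert.That(greeter.Greet(), Is.EqualTo("Good morning"));
    }
}
```

In production code, Greeter would be created with a SystemClock, typically by an IoC container.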

Working with legacy code

By “legacy” we mean code without tests. The quality of such code varies. Here are a few tips on how to cover it with tests.

Architecture is testable

We are lucky: there is no direct instantiation of classes and no tangled mess, and the SOLID principles are respected. Nothing could be simpler: we create test projects and, step by step, cover the application using the principles described in this article. At worst, we will have to add a couple of setters to the factories and extract a few interfaces.

Architecture is not testable

We have tight coupling, hacks and other joys of life. We have to refactor. How to carry out complex refactoring correctly is a topic well beyond the scope of this article.

One basic rule is worth highlighting. If you do not change the interfaces, everything is simple: the technique is the same. But if you are planning big changes, you should build a dependency graph and break your code into separate, smaller subsystems (I hope this is possible). Ideally, it should look like this: kernel, module #1, module #2, etc.

After that, choose a victim. Just do not start with the kernel. Take something smaller first, something you can refactor in a reasonable time. Cover this subsystem with integration and/or acceptance tests. When you're done, you can cover this part with unit tests. Sooner or later, step by step, you will succeed.

Be prepared: doing this quickly will most likely not work. You will have to show willpower.

Test maintenance

Do not treat your tests as second-rate code. Many novice developers mistakenly believe that DRY, KISS and everything else are for production code, and that anything goes in tests. This is not true. Tests are code too. The only difference is that tests have a different goal: to ensure the quality of your application. All the principles applied when developing production code can and should be applied when writing tests.

There are only three reasons why a test stops passing:

- An error in the production code: this is a bug; file it in the bug tracker and fix it.

- A bug in the test: the production code has probably changed, and the test was written incorrectly (for example, it tests too much or not what it should). It may have been passing by mistake before. Figure it out and fix the test.

- Changed requirements. If the requirements have changed significantly, the test should fail. This is correct and normal. You need to understand the new requirements and fix the test, or delete it if it is no longer relevant.

Pay attention to maintaining your tests: fix them promptly, remove duplication, extract base classes and develop test APIs. You can create template base test classes that require you to implement a test suite (for example, for CRUD). If you do this regularly, it will soon take little time.
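A minimal sketch of such a template base class, assuming NUnit (which also runs [Test] methods inherited from an abstract base class). IRepository<T>, InMemoryRepository<T> and the fixture names are hypothetical; a derived fixture only supplies a repository and a sample entity and inherits the CRUD tests.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical repository contract used for the illustration.
public interface IRepository<T>
{
    int Add(T item);      // returns the new id
    T Get(int id);        // returns default(T) if not found
    void Delete(int id);
}

// Template base class: derived fixtures inherit the whole CRUD test suite.
public abstract class CrudTestsBase<T>
{
    protected abstract IRepository<T> CreateRepository();
    protected abstract T CreateEntity();

    [Test]
    public void Add_NewEntity_CanBeReadBack()
    {
        var repository = CreateRepository();
        var entity = CreateEntity();

        var id = repository.Add(entity);

        Assert.That(repository.Get(id), Is.EqualTo(entity));
    }

    [Test]
    public void Delete_ExistingEntity_EntityRemoved()
    {
        var repository = CreateRepository();
        var id = repository.Add(CreateEntity());

        repository.Delete(id);

        Assert.That(repository.Get(id), Is.EqualTo(default(T)));
    }
}

// Hypothetical in-memory implementation under test.
public class InMemoryRepository<T> : IRepository<T>
{
    private readonly Dictionary<int, T> _items = new Dictionary<int, T>();
    private int _nextId = 1;

    public int Add(T item)
    {
        _items[_nextId] = item;
        return _nextId++;   // returns the id just used, then advances the counter
    }

    public T Get(int id) => _items.TryGetValue(id, out var found) ? found : default(T);

    public void Delete(int id) => _items.Remove(id);
}

// A concrete fixture: NUnit runs the inherited tests for it.
[TestFixture]
public class StringRepositoryTests : CrudTestsBase<string>
{
    protected override IRepository<string> CreateRepository() => new InMemoryRepository<string>();
    protected override string CreateEntity() => "Sample entity";
}
```

Covering another repository then takes one small derived fixture rather than a copy of every CRUD test.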

How to "measure" progress

To measure how successfully unit testing is being adopted in your project, use two metrics:

- Number of bugs in new releases (including regressions)

- Code coverage

The first shows whether our efforts pay off or whether we are wasting time that could be spent on features. The second shows how much work remains.

The most popular tools for measuring code coverage on the .NET platform are:

- NCover

- dotCover

- the code coverage tool built into Visual Studio

Test First?

I deliberately did not touch on this topic until the very end. From my point of view, Test First is a good practice with a number of undeniable advantages. However, for one reason or another, sometimes I step back from this rule and write tests after the code is ready.

In my opinion, “how to write tests” is much more important than “when to write them”. Do as you like, but do not forget: if you start with tests, you get a testable architecture into the bargain. If you write the code first, you may have to change it to make it testable.

Read on

An excellent selection of links and books on the subject can be found in this article on Habr. I especially recommend the book The Art of Unit Testing. I read the first edition; it turns out the second has already been published.

Source: https://habr.com/ru/post/169381/
