Wavelet compression on the fingers

Wavelets are now at the hearing. Even people who are inexperienced in mathematics have probably heard that they can be used to compress images and videos while maintaining acceptable quality. But what is a wavelet? Wikipedia answers this question with a whole heap of formulas for which it is not so easy to see the essence.

Let's try using simple examples to figure out where the wavelets come from and how they can be used in compression. It is assumed that the reader is familiar with the basics of linear algebra, vector and matrix are not afraid of words, and are able to multiply them. (And in the second part we will even try to program something.)

')

Image compression

Simply put, the image is a table, in the cells of which the colors of each pixel are stored. If we work with a black-and-white (or, more precisely, gray) image, then instead of the color, the brightness values from the [0, 1] segment are placed in the cells. At the same time, 0 corresponds to black, 1 - to white. But it’s inconvenient to work with fractions, so often the brightness values are taken as integers from the range from 0 to 255. Then each value will take exactly 1 byte.

Even small images require a lot of memory to store. So, if we encode the brightness of each pixel in one byte, the image of one frame of FullHD format (1920 × 1080) will take almost two megabytes. Imagine how much memory you need to store an hour and a half movie!

Therefore, images tend to compress. That is, encode so that less storage is required for storage. And while watching, we decode the data stored in the memory and get the original frame. But this is only ideally.

There are many data compression algorithms. Their number can be judged by the formats supported by modern archivers: ZIP, 7Z, RAR, ACE, GZIP, HA, BZ2 and so on. It is not surprising that due to the active work of scientists and programmers, at present, the degree of data compression has come close to the theoretical limit.

The bad news is that this theoretical limit is not so great for an image. Try saving a photo (especially with a lot of small details) in PNG format - the size of the resulting file can upset you.

This is due to the fact that in images from the real world (photos, for example) brightness values are rarely the same, even for neighboring pixels. There are always the smallest fluctuations that are elusive with the human eye, but which the compression algorithm honestly tries to take into account.

Compression algorithms "love" when there is a pattern in the data. Long sequences of zeros are best compressed (the pattern is obvious here). In fact, instead of storing 100 zeros in memory, you can simply write the number 100 (of course, with a note that this is exactly the number of zeros). The decoding program will “understand” that they meant zeros and will reproduce them.

However, if a unit suddenly appears in our sequence in the middle, then it will not be possible to limit it to one number 100.

But why encode absolutely all the details? After all, when we look at the photo, the overall picture is important to us, and we will not notice any minor fluctuations in brightness. So, when encoding, we can slightly change the image so that it is well encoded. At the same time, the compression ratio will immediately increase. True, the decoded image will differ slightly from the original, but who will notice?

Transform haar

So, our goal is to transform the image so that it is well compressed by classical algorithms. Let's think about how to change it to get long chains of zeros.

The "real" images, such as photos, have one feature - the brightness of neighboring pixels usually differs by a small amount. In fact, in the world one rarely sees dramatic, contrasting changes in brightness. And if they are, they occupy only a small part of the image.

Consider a fragment of the first row of brightness from the well-known image “Lenna” (in the figure).

154, 155, 156, 157, 157, 157, 158, 156

It can be seen that the neighboring numbers are very close. To get the desired zeros or at least something close to them, you can encode the first number separately, and then consider only the differences of each number from the previous one.

We get:

154, 1, 1, 1, 0, 0, 1, -2.

Already better! Such a method is in fact used and is called delta encoding. But he has a serious drawback - he is non-local. That is, you cannot take a piece of the sequence and find out exactly which brightness in it is encoded without decoding all the values in front of this piece.

Let's try to do otherwise. We will not try to immediately get a good consistency, we will try to improve it at least a little.

To do this, divide all the numbers into pairs and find the half-sum and half-difference of values in each of them.

(154, 155), (156, 157), (157, 157), (158, 156)

(154.5, 0.5), (156.5, 0.5), (157, 0.0), (157, -1.0)

Why precisely half sums and half differences? And everything is very simple! Half-sum is the average brightness value of a pair of pixels. And half the difference carries information about the differences between the values in the pair. Obviously, knowing the half-sum a and half-difference d one can find the values themselves:

the first value in the pair = a - d,

second value in pair = a + d.

This transformation was proposed in 1909 by Alfred Haar and bears his name.

And where is the compression?

The resulting numbers can be regrouped according to the principle “flies separately, cutlets separately,” by dividing half-sum and half-difference:

154.5, 156.5, 157, 157; 0.5, 0.5, 0.0, -1.0.

The numbers in the second half of the sequence will usually be small (the fact that they are not integers, let them not confuse them yet). Why is that?

As we have already found out, in real images, neighboring pixels rarely differ significantly from each other. If the value of one is great, then the other is great. In such cases, it is said that neighboring pixels are correlated.

In fact, consider the first 2000 pairs of neighboring pixels and each pair will be represented on the graph by a dot.

All points are lined up along one straight line. And so in almost all real images. The upper left and lower right corners of the image are almost always empty.

And now we will consider the schedule, points in which there will be half sums and half differences.

It can be seen that half-differences are in a much narrower range of values. This means that you can spend less than one byte on them. Any, and compression.

Let's do the math!

Let's try to write mathematical expressions describing the Haar transform.

So, we had a couple of pixels (vector)

, and we want to get a couple

, and we want to get a couple  .

.This transformation is described by the matrix.

.

.Indeed

what we needed.

what we needed.The attentive reader probably noticed that the figures from the points on the last two graphs are the same. The difference is only in the rotation angle of 45 °.

In mathematics, rotation and extension are called affine transformations and are described just by multiplying the matrix by the vector. What we got higher. That is, the Haar transform is simply turning the points so that they can be conveniently and compactly encoded.

True, there is one nuance. Under affine transformations, the area of the figure may vary. Not that it was bad, but somehow carelessly. As is known, the coefficient of change of area is equal to the determinant of the matrix. Let's see what it is for the Haar transform.

In order for the determinant to become equal to one, it is sufficient to multiply each element of the matrix by

. This will not affect the angle of rotation (and therefore the “compressive capacity” of the transformation).

. This will not affect the angle of rotation (and therefore the “compressive capacity” of the transformation).The resulting matrix

How to decode?

As it is known, if the determinant of a matrix is not equal to zero, then for it there is an inverse matrix that "cancels" its action. If we find the inverse matrix for H, then the decoding will simply consist in multiplying vectors with half sums and half differences on it.

Generally speaking, finding the inverse matrix is not such an easy task. But maybe it will be possible somehow to simplify this task?

Let's take a closer look at our matrix. It consists of two vector lines:

and

and  . Let's call them v 1 and v 2 .

. Let's call them v 1 and v 2 .They have interesting properties.

First, their lengths are 1, i.e.

. Here the letter T means transposition. Multiplying a row vector by a transposed row vector is a scalar product.

. Here the letter T means transposition. Multiplying a row vector by a transposed row vector is a scalar product.Secondly, they are orthogonal, i.e.

.

.A matrix whose rows possess the specified properties is called orthogonal. An extremely important property of such matrices is that the inverse matrix for them can be obtained by simple transposition.

The validity of this expression can be seen by multiplying the H inverse matrix. On the diagonal we get the scalar products of vector strings on themselves, that is, 1. And outside the diagonals there are scalar products of vector strings on each other, that is 0. As a result, the product will be equal to the identity matrix.

We love orthogonal matrices!

Increasing the number of points

All this works well for two points. But what if there are more points?

In this case, it is also possible to describe the transformation by a matrix, but larger in size. The diagonal of this matrix will consist of matrices H, so pairs will be selected in the vector of initial values, to which the Haar transform will be applied independently.

I.e. the source vector is simply processed independently in pairs.

Filters

So, when we know how to perform the Haar transformation, we will try to figure out what it gives us.

The resulting "half-sum" (due to the fact that we do not divide by 2, we have to use quotes) - this, as we have already found out, is the average values in pairs of pixels. That is, in fact, the half-sum values are a reduced copy of the original image! Reduced because half the amount is two times less than the original pixels.

But what is the difference?

Half-sum averages of the brightness values, that is, they “filter out” random bursts of values. We can assume that this is some kind of frequency filter.

Similarly, the differences "distinguish" among the values of inter-pixel "bursts" and eliminate the constant component. That is, they "filter out" low frequencies.

Thus, the Haar transform is a pair of filters that divide the signal into low-frequency and high-frequency components. To get the original signal, you just need to re-combine these components.

What does this give us? Suppose we have a portrait picture. The low-frequency component carries information about the general shape of the face, and smooth brightness variations. High-frequency - this is noise and small details.

Usually, when we look at a portrait, we are more interested in the low-frequency component, which means that when compressing, some of the high-frequency data can be discarded. Moreover, as we found out, it usually has smaller values, and therefore is more compactly encoded.

The compression ratio can be increased by applying the Haar transform multiple times. In fact, the high-frequency component is only half of the whole set of numbers. But what prevents to apply our procedure once again to low-frequency data? After repeated use, the high frequency information will occupy already 75%.

Although we have talked about one-dimensional chains of numbers so far, this approach is well applicable for two-dimensional data. To perform a two-dimensional Haar transformation (or similar), you only need to perform it for each row and for each column.

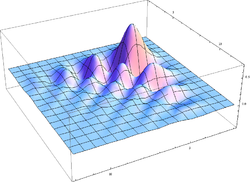

After repeated application to, for example, photos of the castle Lichtenstein, we obtain the following figure.

Black areas correspond to low brightness, that is, values close to zero. As practice shows, if the value is small enough, then it can be rounded off or even zeroed out without much damage to the decoded picture.

This process is called quantization. And it is at this stage that some of the information is lost. (By the way, the same approach is used in JPEG, only discrete cosine transform is used instead of the Haar transform.) By varying the number of nullable coefficients, you can adjust the compression ratio!

Of course, if you zero too much, the distortion will be visible to the eye. Everything needs measure!

After all these actions, we will have a matrix containing many zeros. You can write it line by line into a file and compress it with some kind of archiver. For example, the same 7Z. The result will be quite good.

Decoding is done in the reverse order: unpack the archive, apply the inverse Haar transform, and write the decoded image to a file. Voila!

Where is the Haar transform effective?

When will the Haar transform give the best result? Obviously, when we get a lot of zeros, that is, when the image contains long sections of the same brightness values. Then all differences will be reset. This may be, for example, an X-ray, a scanned document.

It is said that the Haar transform eliminates the constant component (it is also a moment of zero order), that is, converts constants to zeros.

But still in real photographs of areas with the same brightness, not so much. Let's try to improve the transformation, so that it also nullifies the linear component. In other words, if the brightness values increase linearly, they will also be reset.

This problem and more complex (elimination of moments of higher orders) was solved by Ingrid Dobeshi - one of the creators of the theory of wavelets.

Dobeshi's transformation

For our advanced transformation, there will already be few points. Therefore, we will take four values, shifting each time by two.

That is, if the original sequence is 1, 2, 3, 4, 5, 6, ..., N-1, N, then we will take quadruples (1, 2, 3, 4), (3, 4, 5, 6) etc. The last four "bites the sequence by the tail": (N-1, N, 1, 2).

Similarly, we will try to build two filters: high-frequency and low-frequency. Every four will be replaced by two numbers. Since the quadruples overlap, the number of values after the conversion will not change.

In order to make it convenient to consider the inverse matrix, we also require the orthogonality of the transformation. Then the search for the inverse matrix is reduced to transpose

Let the values of the brightness in the four are x, y, z, t. Then we write the first filter in the form

The four coefficients that make up the vector line of the transformation matrix are not yet known to us.

To make the vector line of the coefficients of the second filter be orthogonal to the first one, take the same coefficients but rearrange them and change the signs:

The transformation matrix will have the form.

The orthogonality requirement is satisfied for the first and second rows automatically. We require that lines 1 and 3 also be orthogonal:

Vectors must have unit length (otherwise the determinant will not be unit):

The conversion should reset the chain of identical values (for example, (1, 1, 1, 1)):

The transformation should reset the chain of linearly growing values (for example, (1, 2, 3, 4)):

By the way, if this four is reset, then any other linearly growing or linearly decreasing will be reset. It is easy to verify this by writing down the corresponding equation and dividing all the coefficients by the first factor.

Received 4 equations connecting coefficients. Solving them, we get:

Substituting them into the matrix, we obtain the desired transformation. After applying it to photos, we get more zeros and small coefficients, which will compress the image more strongly.

Another nice feature is that artifacts will not be noticeable after quantization.

This transformation was called the D4 wavelet (the reader is invited to independently solve the mystery of this alphanumeric name).

Other wavelets

Of course, we can not dwell on this, and demand the elimination of the parabolic component (the moment of the 2nd order), and so on. As a result, we obtain the D6, D8 wavelets and others.

In order not to count everything manually, the coefficients can be viewed in Wikipedia .

Dobeshi discovered a very interesting way of obtaining the coefficients of these transformations, but alas, this is already beyond the scope of our article.

Homework

In order to finally understand the basics, I suggest writing a program in your favorite language that opens an image, performs a Haar transformation (or even D4), quantizes the result, and then saves the result to a file. Try to compress this file with your favorite archiver. Good shrink?

Try the reverse transform. How do you explain the nature of the artifacts in the image?

Conclusion

So, we briefly reviewed the basic ideas of the discrete wavelet transform.

Of course, this article did not consider very many interesting mathematical details and practical applications of wavelet transforms. But you can not grasp the immensity. Yes, and much difficult to explain without raising the degree of matan. I hope that the writing turned out to be useful to someone.

Thanks for attention!

Continued: Wavelet compression "on the fingers": practice .

Literature

There are many pretty good books that give a deeper insight into wavelets. I recommend starting with the following:

- Welstead C. Fractals and wavelets for compressing images in action. - M .: Triumph, 2003.

- Stark G.-G. The use of wavelets for DSP. - M .: Technosphere, 2007.

Source: https://habr.com/ru/post/168517/

All Articles