NIC Teaming on Windows Server 2012

With the release of Windows Server 2012, NIC Teaming has become a built-in feature of the server operating system. For a long time, solutions for combining (teaming) network adapters on the Windows platform were available only from third parties, primarily hardware vendors. Windows Server 2012 now includes its own tools for teaming network adapters, including adapters from different manufacturers.

NIC Teaming, also referred to as Load Balancing / Failover (LBFO), is available in all editions of Windows Server 2012 and in all server operating modes (Core, MinShell, Full GUI). Combining (timing) several physical network adapters into a group leads to the emergence of a virtual network interface tNIC, which represents a group for higher levels of the operating system.

')

Combining adapters into a group provides two main advantages:

Windows Server 2012 allows you to group up to 32 Ethernet network adapters. Timing of non-Ethernet adapters (Bluetooth, Infiniband, etc.) is not supported. In principle, a group can contain only one adapter, for example, to separate traffic across VLANs, but, obviously, failover is not provided in this case.

The network adapter driver included in the group must be digitally signed with Windows Hardware Qualification and Logo (WHQL). In this case, adapters from different manufacturers can be grouped together, and this will be a supported Microsoft configuration.

Only adapters with the same speed connection can be included in one group.

It is not recommended to use third-party timing and timing on the same server. Configurations are not supported when the adapter included in the third-party timing is added to the group created by the standard OS facilities, and vice versa.

When creating a timing group, it is necessary to specify several parameters (discussed below), two of which are of fundamental importance: the mode of timing (teaming mode) and the mode of balancing traffic (load balancing mode).

Timing group can operate in two modes: switch dependent (switch dependent) and switch independent (switch independent).

As the name implies, the first option (switch dependent) will require setting up a switch to which all adapters of the group are connected. Two options are possible - static configuration of the switch (IEEE 802.3ad draft v1), or use of the Link Aggregation Control Protocol (LACP, IEEE 802.1ax).

In switch independent mode, group adapters can be connected to different switches. I emphasize it may be, but it is not necessary. If this is so, fault tolerance can be provided not only at the network adapter level, but also at the switch level.

In addition to specifying the mode of operation of timing, you must also specify the mode of distribution or balancing of traffic. There are essentially two such modes: Hyper-V Port and Address Hash.

Hyper-V Port . On a host with a raised Hyper-V role and the nth number of virtual machines (VM), this mode can be very effective. In this mode, the port of Hyper-V Extensible Switch , to which a certain VM is connected, is assigned to a network adapter of the timing group. All outgoing traffic of this VM is always transmitted through this network adapter.

Address Hash . In this mode, a hash is calculated for a network packet based on the source and destination addresses. The resulting hash is associated with any group adapter. All subsequent packets with the same hash value are sent through this adapter.

The hash can be calculated based on the following values:

Port based hash calculation allows traffic to be distributed more evenly. However, for non-TCP or UDP traffic, a hash is used based on an IP address; for non-IP traffic, a hash is based on MAC addresses.

The table below describes the logic of the distribution of incoming / outgoing traffic, depending on the mode of operation of the group and the selected traffic distribution algorithm. Based on this table, you can choose the most suitable option for your configuration.

It is necessary to note one more parameter. By default, all group adapters are active and used to transmit traffic. However, you can specify one of the adapters as Standby. This adapter will only be used as a hot-swap if one of the active adapters fails.

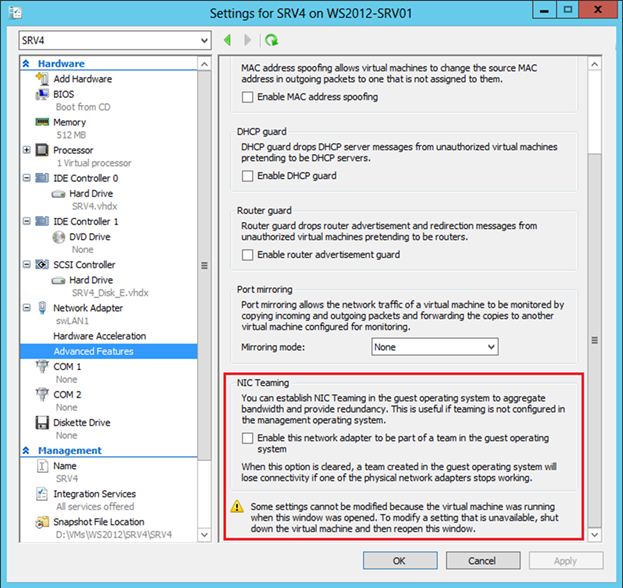

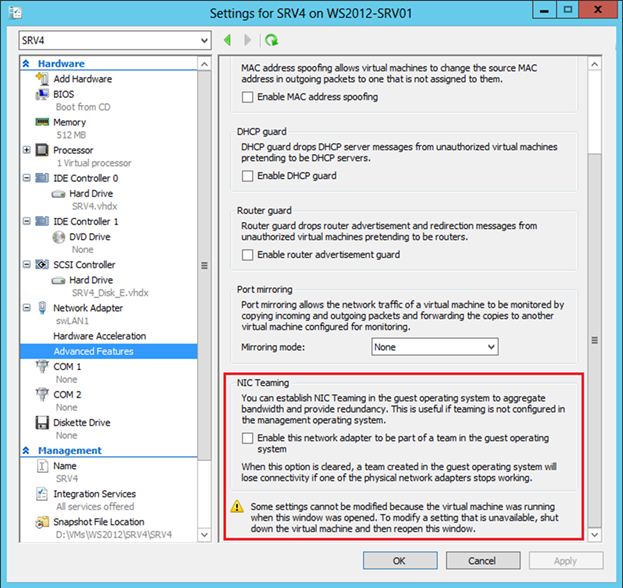

For various reasons, you may not want to enable timing on the host machine. Alternatively, installed adapters cannot be merged into the timing by regular OS tools. The latter is true for adapters with SR-IOV, RDMA or TCP Chimney support. However, if there is more than one such physical network adapter on the host, you can use NIC Teaming inside the guest OS. Imagine that the host has two network cards. If in some VM there are two virtual network adapters, these adapters are connected via two virtual external switches to two physical cards, respectively, and Windows Server 2012 is installed inside the VM, then you can configure NIC Teaming inside the guest OS. And such a VM can take advantage of timing, and fault tolerance, and increased throughput. But in order for Hyper-V to understand that if one physical adapter fails, the traffic for this VM needs to be transferred to another physical adapter, you need to set the checkbox in the properties of each virtual NIC included in the timing.

In PowerShell, a similar setting is made as follows:

I will add that in a guest OS only two adapters can be combined into a group, and for a group only switch independent + address hash mode is possible.

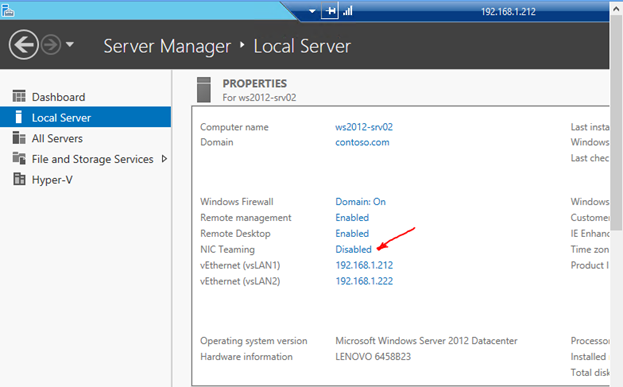

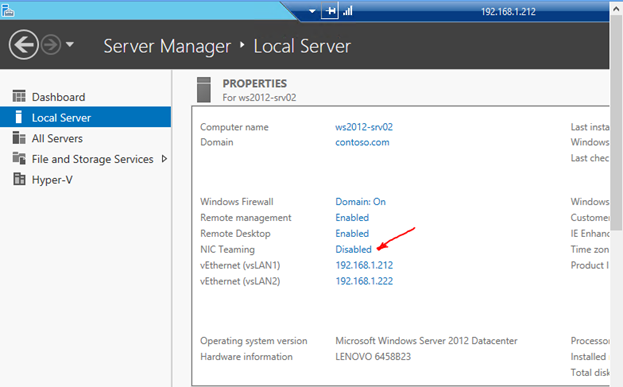

Timing setting is possible in the Server Manager GUI, or in PowerShell. Let's start with the Server Manager, in which you need to select Local Server and NIC Teaming.

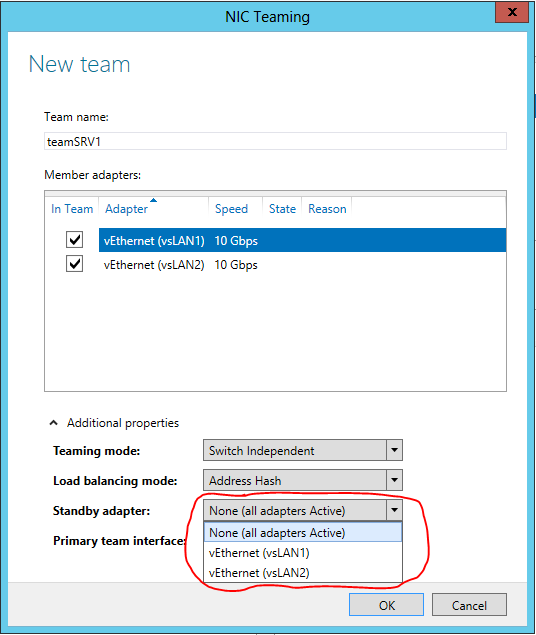

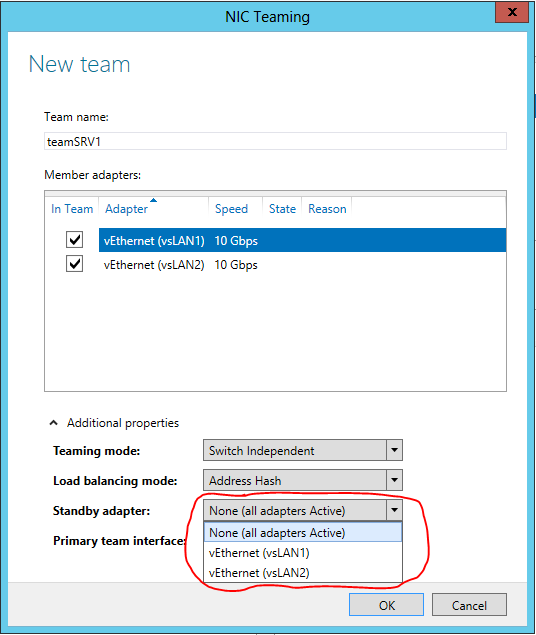

In the TEAMS section of the TASKS menu, select New Team.

We set the name of the group to be created, mark the adapters to be included in the group, and select the timing mode (Static, Switch Independent or LACP).

Choose a traffic balancing mode.

If necessary, specify the Standby-adapter.

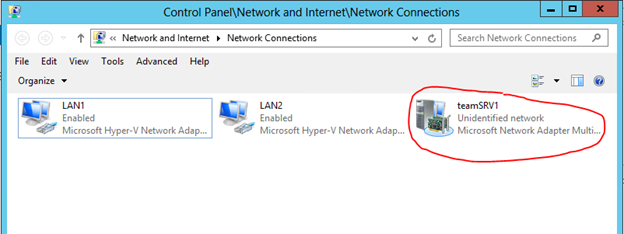

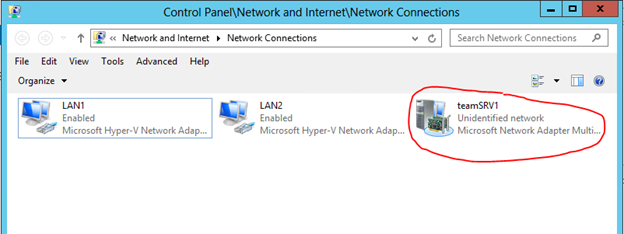

As a result, a new network interface appears in the list of adapters for which you need to specify the required network settings.

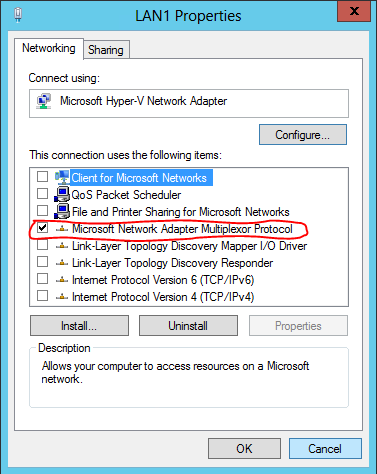

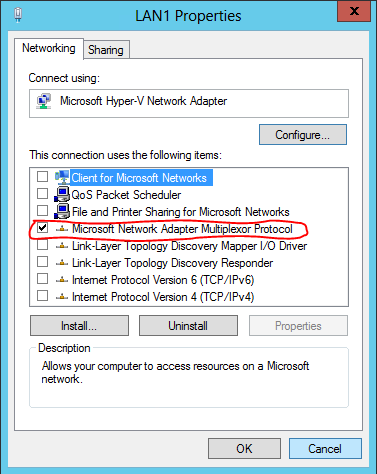

In the properties of real adapters, you can see the enabled multiplexing filter.

In PowerShell, manipulations with timing are implemented by a set of commands with the suffix Lbfo. For example, creating a group might look like this:

Here, TransportPorts means balancing using 4-tuple hash.

I note that the newly created network interface uses dynamic IP addressing by default. If you need to set fixed IP and DNS settings in the script, you can do it, for example, like this:

Thus, with the built-in tools of Windows Server 2012, you can now group network adapters of a host or virtual machine, providing fault tolerance for network traffic and aggregating the bandwidth of adapters.

You can see the technology in action, as well as get additional information on this and other network features of Windows Server 2012, by viewing free courses on the Microsoft Virtual Academy portal:

Hope the material was helpful.

Thank!

What does NIC Teaming provide?

NIC Teaming, also known as Load Balancing/Failover (LBFO), is available in all editions of Windows Server 2012 and in all server operating modes (Core, MinShell, Full GUI). Combining (teaming) several physical network adapters into a team creates a virtual network interface, the team interface (tNIC), which represents the team to the upper layers of the operating system.

Combining adapters into a team provides two main advantages:

- Fault tolerance at the network adapter level and, consequently, for network traffic. If an adapter in the team fails, the network connection is not lost: the server switches network traffic to the team's remaining healthy adapters.

- Aggregation of the bandwidth of the team's adapters. For network operations such as copying files from shared folders, the system can potentially use all adapters of the team, improving network performance.

NIC Teaming Features in Windows Server 2012

Windows Server 2012 allows up to 32 Ethernet network adapters in one team. Teaming of non-Ethernet adapters (Bluetooth, InfiniBand, etc.) is not supported. In principle, a team can contain a single adapter, for example, to separate traffic by VLAN, but obviously no failover is provided in that case.
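
A single-adapter team used only for VLAN separation could look roughly like this. It is a minimal sketch: the team name teamVlan, the adapter name LAN1 and VLAN ID 10 are illustrative assumptions.

```powershell
# One-member team: no failover, used only to split traffic by VLAN.
# teamVlan, LAN1 and VLAN 10 are example values; adjust them to your environment.
New-NetLbfoTeam -Name teamVlan -TeamMembers LAN1 -TeamingMode SwitchIndependent

# Add a team interface bound to VLAN 10; it shows up as a separate network adapter.
Add-NetLbfoTeamNic -Team teamVlan -VlanID 10
```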

The driver of every adapter in a team must be digitally signed under Windows Hardware Qualification and Logo (WHQL). Given that, adapters from different manufacturers can be combined in one team, and Microsoft supports such a configuration.

Only adapters operating at the same link speed can be included in one team.
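
Before creating a team, it can be worth checking that the candidate adapters really run at the same speed. A minimal sketch, assuming adapters named LAN1 and LAN2 (the names used in this article's PowerShell example); substitute your own:

```powershell
# Show the candidate adapters and their current link speeds.
# LAN1 and LAN2 are example names; replace them with the adapters on your server.
$members = Get-NetAdapter -Name 'LAN1', 'LAN2'
$members | Format-Table Name, InterfaceDescription, LinkSpeed, Status

# All members of one team should report the same link speed.
$speeds = @($members.LinkSpeed | Sort-Object -Unique)
if ($speeds.Count -gt 1) {
    Write-Warning ("Adapters report different link speeds: " + ($speeds -join ', '))
}
```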

Using third-party teaming and the built-in teaming on the same server is not recommended. Configurations in which an adapter that belongs to a third-party team is added to a team created with the built-in OS tools, or vice versa, are not supported.

NIC Teaming Options

When creating a team, you specify several parameters (discussed below), two of which are fundamental: the teaming mode and the load balancing mode.

Teaming mode

A team can operate in one of two modes: switch dependent and switch independent.

As the name implies, switch dependent mode requires configuring the switch to which all adapters of the team are connected. There are two options: static switch configuration (IEEE 802.3ad draft v1) or the Link Aggregation Control Protocol (LACP, IEEE 802.1ax).

In switch independent mode, the team's adapters can be connected to different switches. To emphasize: they can be, but they do not have to be. If they are, fault tolerance is provided not only at the network adapter level but also at the switch level.
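
In PowerShell, the teaming mode is the -TeamingMode parameter of New-NetLbfoTeam, with the values SwitchIndependent, Static and Lacp. A sketch, assuming adapters named LAN1 and LAN2 and hypothetical team names; because an adapter can belong to only one team, the alternatives are commented out:

```powershell
# Switch independent: no switch configuration needed; adapters may go to different switches.
New-NetLbfoTeam -Name teamSI -TeamMembers LAN1, LAN2 -TeamingMode SwitchIndependent

# Switch dependent, static: the switch ports must be configured as a static link aggregation group.
# New-NetLbfoTeam -Name teamStatic -TeamMembers LAN1, LAN2 -TeamingMode Static

# Switch dependent, LACP: the switch ports must be configured for LACP.
# New-NetLbfoTeam -Name teamLacp -TeamMembers LAN1, LAN2 -TeamingMode Lacp
```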

Balancing mode

In addition to the teaming mode, you must also specify the traffic distribution (load balancing) mode. There are essentially two such modes: Hyper-V Port and Address Hash.

Hyper-V Port. On a host with the Hyper-V role installed and a number of virtual machines (VMs), this mode can be very effective. In this mode, each Hyper-V Extensible Switch port to which a VM is connected is assigned to one of the team's network adapters, and all outgoing traffic from that VM is always sent through that adapter.
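
The idea behind Hyper-V Port mode can be illustrated with a small conceptual model. It is not the actual Windows algorithm; the function New-PortAssignment and the VM and adapter names are hypothetical. Each VM's switch port is pinned to one team member, spreading ports round-robin:

```powershell
# Conceptual model of Hyper-V Port balancing (illustration only, not the Windows implementation).
# Each Hyper-V switch port (one per VM here) is pinned to a single team member.
function New-PortAssignment {
    param([string[]]$VmPorts, [string[]]$Members)
    $map = [ordered]@{}
    for ($i = 0; $i -lt $VmPorts.Count; $i++) {
        # Round-robin: port i goes to member (i mod number of members).
        $map[$VmPorts[$i]] = $Members[$i % $Members.Count]
    }
    return $map
}

$assignment = New-PortAssignment -VmPorts 'vm1', 'vm2', 'vm3' -Members 'LAN1', 'LAN2'
$assignment   # expected mapping: vm1=LAN1, vm2=LAN2, vm3=LAN1
```

A consequence of pinning is that a single VM never sends faster than one adapter allows, while many VMs together are spread across the whole team.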

Address Hash. In this mode, a hash is calculated for each network packet from its source and destination addresses. The resulting hash value is associated with one of the team's adapters, and all subsequent packets with the same hash value are sent through that adapter.

The hash can be calculated based on the following values:

- MAC addresses of the sender and recipient;

- IP addresses of the sender and recipient (2-tuple hash);

- TCP/UDP ports and IP addresses of the sender and recipient (4-tuple hash).

Port-based hash calculation distributes traffic more evenly. However, for traffic that is neither TCP nor UDP, the IP address hash is used, and for non-IP traffic, the MAC address hash is used.
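
To make the choice of hash input concrete, here is a conceptual PowerShell model. Get-HashInput and Select-TeamMember are hypothetical helpers, and SHA-256 is only a stand-in for whatever hash Windows actually uses, which this article does not describe. The model shows the fallback order above and the fact that every packet of one flow maps to the same adapter:

```powershell
# Conceptual model of Address Hash balancing (illustration only).
# Chooses the hash input as described above: 4-tuple for TCP/UDP,
# IP addresses for other IP traffic, MAC addresses for non-IP traffic.
function Get-HashInput {
    param([hashtable]$Packet)
    if ($Packet.Protocol -in 'TCP', 'UDP') {
        return '{0}:{1}>{2}:{3}' -f $Packet.SrcIP, $Packet.SrcPort, $Packet.DstIP, $Packet.DstPort
    }
    if ($Packet.SrcIP) {
        return '{0}>{1}' -f $Packet.SrcIP, $Packet.DstIP
    }
    return '{0}>{1}' -f $Packet.SrcMac, $Packet.DstMac
}

# Maps a packet's hash to one team member. SHA-256 stands in for the real hash function.
function Select-TeamMember {
    param([hashtable]$Packet, [string[]]$Members)
    $bytes = [System.Text.Encoding]::UTF8.GetBytes((Get-HashInput -Packet $Packet))
    $sha = [System.Security.Cryptography.SHA256]::Create()
    $digest = $sha.ComputeHash($bytes)
    # Use the first 4 bytes of the digest as an unsigned integer, then reduce it modulo the member count.
    $value = [System.BitConverter]::ToUInt32($digest, 0)
    return $Members[[int]($value % [uint32]$Members.Count)]
}

$flow = @{ Protocol = 'TCP'; SrcIP = '10.0.0.1'; SrcPort = 50000; DstIP = '10.0.0.2'; DstPort = 445 }
Get-HashInput -Packet $flow                               # 10.0.0.1:50000>10.0.0.2:445
Select-TeamMember -Packet $flow -Members 'LAN1', 'LAN2'   # same adapter for every packet of this flow
```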

The table below describes how incoming and outgoing traffic is distributed depending on the teaming mode and the selected load balancing algorithm. Use it to choose the most suitable option for your configuration.

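| Teaming mode | Address Hash | Hyper-V Port |
|---|---|---|
| Switch independent | Outgoing: distributed across all active adapters. Incoming: arrives on a single adapter. | Outgoing: each VM's traffic leaves through the adapter its port is assigned to. Incoming: traffic for a VM arrives on that same adapter. |
| Static, LACP | Outgoing: distributed across all active adapters. Incoming: distributed across the adapters by the switch. | Outgoing: each VM's traffic leaves through the adapter its port is assigned to. Incoming: distributed across the adapters by the switch. |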

It is necessary to note one more parameter. By default, all group adapters are active and used to transmit traffic. However, you can specify one of the adapters as Standby. This adapter will only be used as a hot-swap if one of the active adapters fails.
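
In PowerShell, the standby role is set per team member with Set-NetLbfoTeamMember. A sketch, assuming the team teamSRV1 with members LAN1 and LAN2 from this article's PowerShell example:

```powershell
# Make LAN2 the standby adapter of teamSRV1; it carries traffic only if LAN1 fails.
Set-NetLbfoTeamMember -Name LAN2 -Team teamSRV1 -AdministrativeMode Standby

# Return LAN2 to active duty.
# Set-NetLbfoTeamMember -Name LAN2 -Team teamSRV1 -AdministrativeMode Active
```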

NIC Teaming in the guest OS

For various reasons, you may not want to enable teaming on the host. Or the installed adapters cannot be teamed with the built-in OS tools; this is the case for adapters that use SR-IOV, RDMA, or TCP Chimney. However, if the host has more than one such physical network adapter, you can use NIC Teaming inside the guest OS. Suppose the host has two network cards. If a VM has two virtual network adapters, these adapters are connected through two external virtual switches to the two physical cards, respectively, and the VM runs Windows Server 2012, then you can configure NIC Teaming inside the guest OS. Such a VM gets the benefits of teaming: both fault tolerance and increased throughput. However, for Hyper-V to know that this VM's traffic must be moved to the other physical adapter when one physical adapter fails, you need to select the "Enable this network adapter to be part of a team in the guest operating system" checkbox (under Advanced Features) in the settings of each virtual NIC included in the team.

In PowerShell, the same setting is made on the host as follows:

Set-VMNetworkAdapter -VMName srv4 -AllowTeaming On I will add that in a guest OS only two adapters can be combined into a group, and for a group only switch independent + address hash mode is possible.
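
Inside the guest OS, the team itself is created with the same New-NetLbfoTeam cmdlet. A sketch, assuming the VM's two virtual adapters are named 'Ethernet' and 'Ethernet 2' (hypothetical names; check yours with Get-NetAdapter) and a hypothetical team name guestTeam:

```powershell
# Run inside the Windows Server 2012 guest after AllowTeaming has been enabled on the host.
# Only switch independent mode with an address hash algorithm is available in a guest.
New-NetLbfoTeam -Name guestTeam -TeamMembers 'Ethernet', 'Ethernet 2' -TeamingMode SwitchIndependent -LoadBalancingAlgorithm TransportPorts
```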

Configure NIC Teaming

Timing setting is possible in the Server Manager GUI, or in PowerShell. Let's start with the Server Manager, in which you need to select Local Server and NIC Teaming.

In the TEAMS section of the TASKS menu, select New Team.

We set the name of the group to be created, mark the adapters to be included in the group, and select the timing mode (Static, Switch Independent or LACP).

Choose a traffic balancing mode.

If necessary, specify the Standby-adapter.

As a result, a new network interface appears in the list of adapters for which you need to specify the required network settings.
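
The result can also be checked from PowerShell. A sketch, assuming a team named teamSRV1 (the name used in this article's PowerShell example):

```powershell
# Team-level view: teaming mode, load balancing algorithm and overall status.
Get-NetLbfoTeam -Name teamSRV1 | Format-List Name, Members, TeamingMode, LoadBalancingAlgorithm, Status

# Per-member view: which adapters are active or standby and whether they are up.
Get-NetLbfoTeamMember -Team teamSRV1 | Format-Table Name, AdministrativeMode, OperationalStatus

# The team interface appears as a regular network adapter named after the team.
Get-NetAdapter -Name teamSRV1
```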

In the properties of the physical member adapters, you can see that the multiplexor protocol (Microsoft Network Adapter Multiplexor Protocol) is enabled.
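
The same binding can be inspected without opening adapter properties. A minimal sketch, assuming member adapters LAN1 and LAN2; it filters bindings by display name rather than relying on a specific component ID:

```powershell
# Show the multiplexor binding on the member adapters; it should be enabled on team members.
Get-NetAdapterBinding -Name LAN1, LAN2 |
    Where-Object DisplayName -like '*Multiplexor*' |
    Format-Table Name, DisplayName, Enabled
```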

In PowerShell, teaming is managed with a set of cmdlets whose names contain Lbfo. For example, a team can be created like this:

```powershell
# Switch independent team of LAN1 and LAN2 balanced by 4-tuple hash.
New-NetLbfoTeam -Name teamSRV1 -TeamMembers LAN1,LAN2 -TeamingMode SwitchIndependent -LoadBalancingAlgorithm TransportPorts
```

Here, TransportPorts means balancing based on the 4-tuple hash.
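
A few other cmdlets of the same family cover the rest of a team's life cycle. A sketch for teamSRV1; LAN3 is a hypothetical third adapter:

```powershell
# List all cmdlets of the NetLbfo family.
Get-Command -Noun NetLbfo*

# Add and remove a member.
Add-NetLbfoTeamMember -Name LAN3 -Team teamSRV1
Remove-NetLbfoTeamMember -Name LAN3 -Team teamSRV1

# Change the balancing algorithm: TransportPorts (4-tuple), IPAddresses (2-tuple),
# MacAddresses, or HyperVPort.
Set-NetLbfoTeam -Name teamSRV1 -LoadBalancingAlgorithm IPAddresses

# Delete the team; its members become standalone adapters again.
Remove-NetLbfoTeam -Name teamSRV1
```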

Note that the newly created network interface uses dynamic IP addressing (DHCP) by default. If you need to set a static IP address and DNS settings in a script, you can do it, for example, like this:

```powershell
# Static IPv4 address, prefix length and default gateway for the team interface.
New-NetIPAddress -InterfaceAlias teamSRV1 -IPAddress 192.168.1.163 -PrefixLength 24 -DefaultGateway 192.168.1.1
# DNS server for the team interface.
Set-DnsClientServerAddress -InterfaceAlias teamSRV1 -ServerAddresses 192.168.1.200
```

Thus, with the built-in tools of Windows Server 2012, you can now team the network adapters of a host or a virtual machine, providing fault tolerance for network traffic and aggregating adapter bandwidth.

You can see the technology in action and find more information about this and other networking features of Windows Server 2012 in the free courses on the Microsoft Virtual Academy portal:

- New features of Windows Server 2012. Part 1. Virtualization, networks, storage

- Windows Server 2012: Network Infrastructure

I hope this material was helpful.

Thanks!

Source: https://habr.com/ru/post/162509/
