Forwarding NVIDIA Quadro 4000 to a virtual machine using the Xen hypervisor

After reading a post [1] about successfully forwarding a video card to a virtual machine, I thought it would be nice to have such a workstation myself.

When developing cross-platform software, there are often problems with testing it. I do all my work exclusively under Linux, with the end user working exclusively on the Windows operating system (OS). It would be possible to use VirtualBox, for example, but when you need to test the operation of modules using OpenGL or CUDA, serious problems arise. Dual Boot as an option I do not even consider. It turns out that, one way or another, I have to use a second computer, which is simply not where to put it. In this case, most of the time he is idle without work. It turns out extremely not effective, in terms of the use of resources, the scheme.

One day my dream has become a necessity. It was necessary to build a graphic station with the following characteristics:

Many may think: "And where is virtualization?". The problem is that Windows, in my personal opinion, is not very reliable. Often, the end users of the system are not very qualified people, as a result of which malicious software gets onto the computer, which can destroy all data on all drives. In this case, it is necessary that the backup was stored locally, but it was not possible to destroy it. Organizing large and fast data storage is also not a trivial task. One way or another, it was decided to run Windows in a virtual machine (VM) environment.

I read a lot of articles and documentation (including on Habré [ 1 - 3 ]) about forwarding video cards to the VM environment. The conclusion to which I came was not comforting for me. Most successful probros were carried out with ATI graphics cards. Throwing NVIDIA graphics card is possible, but not every. This requires a patch hypervisor [ 4 , 5 ].

')

Because of the need to use CUDA technology, I had to forward the NVIDIA graphics card. There is NVIDIA Multi-OS technology [ 6 ], which allows forwarding video cards to VMs. This technology is supported only by accelerators from the Quadro series [ 7 ].

Another disadvantage of most video cards is their minimum two slots. This is often unacceptable and that is why the single-slot NVIDIA Quadro 4000 graphics card was chosen. It is the most powerful member of the Quadro family among single-slot options.

Key elements of the system:

Initially, I wanted to implement my plan using the Xen hypervisor. I chose DebianGNU / Linux wheezy (hereinafter Debian) as the OS for dom0. The Linux kernel has an excellent implementation of software RAIDs.

During the experiments I tried KVM. He coped well with any device other than a video card. Unfortunately, withXen 4.1 , which is part of common distributions, I also didn’t do anything. In the virtual machine environment, the video card stubbornly refused to start.

Fortunately, not long before I started working on this issue,Xen 4.2.0 was released. It is available, of course, only in the form of source codes.

In order to avoid problems with disabling the necessary for forwarding devices from the dom0, it is necessary that thexen-pciback driver be included in the kernel. In Debian distributions, it is shipped as a module.

Thus, it was necessary to assemble the hypervisor and the kernel.

When installing Debian, I didn’t do anything unusual. Install only the base system. The graphical environment should not be installed, because along with it a lot of software is put, part of which will conflict with the compiled Xen.

At the end of the basic installation, I installed the minimum set of necessary software.

In order not to litter the combat system, I performed the assembly in a clean operating system specially installed under VirtualBox.

To build Xen you need to run a series of simple commands [ 8 ].

At the exit, I received the xen-upstream-4.2.0.deb package.

The kernel was built because of the need to include thexen-pciback driver in the kernel. The build process is well described in the official Debian documentation [ 9 ].

I got linux-image-3.2.32_1.0.0_amd64.deb on output.

I installed the received packages on the target system. Installing Xen creates unnecessary symbolic links in the / boot directory that need to be removed. Since the assembledxen-upstream-4.2.0.deb package does not contain dependencies, the packages required for Xen need to be installed manually.

Making Xen bootable by default.

We register Xen services in the system.

To hide the devices necessary for forwarding from dom0, thexen-pciback driver needs to submit a list of them. To disable Memory Ballooning, you must pre-specify the amount of memory required for dom0. To do this, you need to add 2 lines to / etc / default / grub .

In addition to the video card, 4 USB controllers, network and sound cards, and a number of peripherals were thrown.

After that, you need to update the GRUB bootloader configuration file.

Since the ASUS P9X79 WS motherboard has two network interfaces, it was decided not to make any network bridges. dom0 and domU will have independent network connections.

The following sequence of commands sets the minimum amount of memory for dom0 (thereby finally turning off Memory Ballooning) and turns off unnecessary network scripts. I also made VNC available at any IP address.

I will not dwell on the issue of installing Windows on a VM environment. Let me just say that the installation must be performed remotely using VNC. NVIDIA Quadro starts working only after installing the drivers. It starts only when the OS is fully loaded. Also, paravirtual drivers were successfully installed [ 10 ].

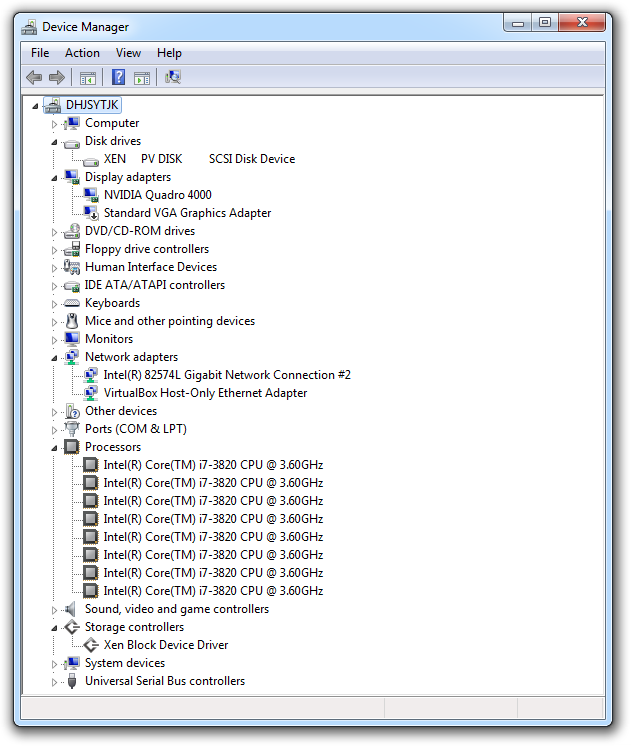

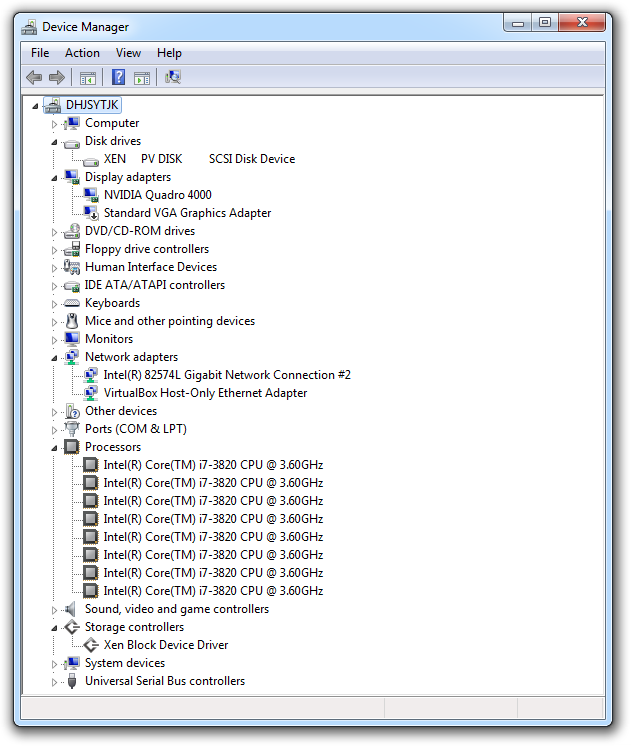

Among the network devices is the VirtualBox Host-Only Ethernet Adapter. The fact is that I installed VirtualBox in the VM environment (inside the domU). Inside was installed Windows XP. Even with double nesting inside the VM OS, Windows XP worked with acceptable speed.

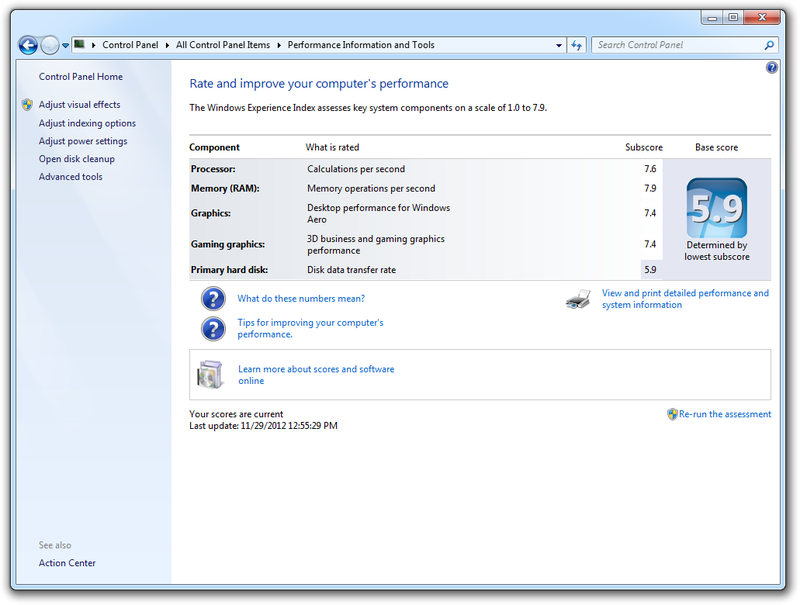

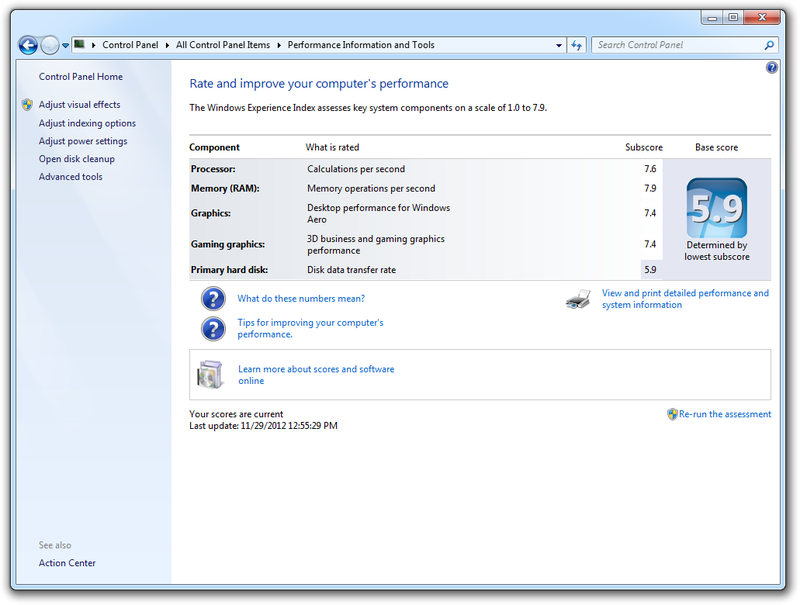

I’ll just say that I didn’t even try to install Windows on bare hardware, so I don’t have anything to compare objectively with. The entire assessment was reduced to viewing the Windows Experience Index (WEI) and installing 3DMark 2011. That is, to be honest, I didn’t carry out any performance testing.

The WEI is determined by the test result with the worst result. In my case, the bottleneck was “hard disk”. The fact is that all the experiments I conducted using a single old hard drive. Otherwise, the results are not very bad.

The NVIDIA Quadro 4000 video card is not gaming, but Crysis worked smartly.

Since version4.1 , the xl toolkit has come to Xen. It does not need xend and in the future it should replace xm.

When working in manual xl did not cause any complaints. However, the xendomains script is clearly not designed to work with it. When you run/etc/init.d/xendomains start , domU domains started up without problems. However, when calling /etc/init.d/xendomains stop did not occur, absolutely nothing. The domU operation did not automatically terminate as a result of which the entire system was hanging. During a small investigation, it turned out that some of the functions inside xendomains are simply not adapted to work with xl. In principle, I was ready to cope with these problems.

The worst thing was that when you run domU xl it gave an error saying that it could not reset the PCI device, namely the video card, via sysfs. For a video card, the reset file in sysfs is simply missing. I'm not completely sure, but it seems to me that it was because of this that after reloading the domU video card, it often refused to start. Only restarting the entire system helped.

In xm, resetting PCI devices seems to be implemented differently. At least, when working with it, there were no problems with the video card. That is why I stopped at xm.

It took me 5 days to implement everything described. In general, the system worked very stably. No BSODs and freezes were observed. I hope that in the near future, without exception, all video cards will be suitable for forwarding to a VM, since NVIDIA Quadro cannot be called a budget option.

When developing cross-platform software, testing is often a problem. I do all my work under Linux, while the end users work exclusively on the Windows operating system (OS). VirtualBox would be an option, but serious problems arise when you need to test modules that use OpenGL or CUDA. I do not even consider dual boot. One way or another I end up needing a second computer, which I simply have nowhere to put and which would sit idle most of the time. In terms of resource use, that is an extremely inefficient arrangement.

One day the dream became a necessity. I had to build a graphics workstation with the following characteristics:

- Operating system Windows 7 (hereinafter Windows);

- A set of software using DirectX, OpenGL and CUDA;

- High-speed, local, fault-tolerant storage of about 10 TB;

- Backup and recovery mechanism for the entire system;

- Periodic automatic backup of user data.

Many may ask: "And where does virtualization come in?" The problem is that Windows, in my personal opinion, is not very reliable. The end users of the system are often not very skilled, so malicious software gets onto the computer and can destroy all data on all drives. The backup therefore has to be stored locally, yet in a way that makes it impossible to destroy. Organizing large and fast data storage is not a trivial task either. One way or another, I decided to run Windows in a virtual machine (VM).

I read a lot of articles and documentation (including on Habr [1-3]) about forwarding video cards to a VM. The conclusion I came to was not comforting. Most successful attempts were made with ATI graphics cards. Forwarding an NVIDIA graphics card is possible, but not with every model, and it requires patching the hypervisor [4, 5].

Because I needed CUDA, I had to forward an NVIDIA graphics card. NVIDIA's Multi-OS technology [6] allows forwarding video cards to VMs, but it is supported only by accelerators from the Quadro series [7].

Another drawback of most video cards is that they occupy at least two slots. This is often unacceptable, which is why I chose the single-slot NVIDIA Quadro 4000. It is the most powerful single-slot member of the Quadro family.

Key elements of the system:

- Motherboard ASUS P9X79 WS;

- Core i7-3820 processor;

- 32 GB of memory (4 modules of 8 GiB);

- Video card for dom0 (any will do);

- Video card for domU NVIDIA Quadro 4000.

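Initially, I wanted to implement my plan using the Xen hypervisor. I chose Debian GNU/Linux wheezy (hereinafter Debian) as the OS for dom0. The Linux kernel has an excellent implementation of software RAID.

During the experiments I also tried KVM. It coped well with every device except the video card. Unfortunately, I had no success with Xen 4.1, which ships with common distributions, either: inside the VM the video card stubbornly refused to start.

Fortunately, Xen 4.2.0 was released shortly before I started working on this. Naturally, it was available only as source code.

To avoid problems detaching the forwarded devices from dom0, the xen-pciback driver must be built into the kernel. In Debian it is shipped as a module.

Thus, both the hypervisor and the kernel had to be built.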

Install Debian

When installing Debian I did nothing unusual: I installed only the base system. The graphical environment should not be installed at this stage, because it pulls in a lot of software, some of which will conflict with the self-built Xen.

After the base installation, I installed the minimum set of necessary software.

    $ sudo apt-get install gnome-core gvncviewer mc

Build the hypervisor and kernel

To avoid cluttering the production system, I did the build in a clean OS installed specifically for that purpose under VirtualBox.

To build Xen you need to run a series of simple commands [ 8 ].

    $ sudo apt-get build-dep xen
    $ sudo apt-get install libglib2.0-dev libyajl-dev fakeroot bison flex libbz2-dev liblzo2-dev
    $ wget http://bits.xensource.com/oss-xen/release/4.2.0/xen-4.2.0.tar.gz
    $ tar xf xen-4.2.0.tar.gz
    $ cd xen-4.2.0
    $ ./configure --enable-githttp
    $ echo "PYTHON_PREFIX_ARG=--install-layout=deb" > .config
    $ make deb

The result was the xen-upstream-4.2.0.deb package.

The kernel had to be rebuilt in order to build the xen-pciback driver into it. The build process is well described in the official Debian documentation [9].

    $ sudo apt-get install linux-source
    $ sudo apt-get build-dep linux-latest
    $ sudo apt-get install kernel-package fakeroot
    $ tar xf /usr/src/linux-source-3.2.tar.bz2
    $ cd linux-source-3.2
    $ cp /boot/config-`uname -r` .config
    $ sed -i 's/CONFIG_XEN_PCIDEV_BACKEND=.*$/CONFIG_XEN_PCIDEV_BACKEND=y/g' .config
    $ make-kpkg clean
    $ fakeroot make-kpkg --initrd --revision=1.0.0 kernel_image

The output was linux-image-3.2.32_1.0.0_amd64.deb.
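Before starting the long kernel build, it may be worth confirming that the `sed` step really switched the driver from module to built-in. The following is a minimal sketch that assumes the usual `OPTION=value` layout of a kernel `.config`; the helper name `pciback_mode` is hypothetical and not part of any Debian tool.

```shell
#!/bin/sh
# Report how xen-pciback is configured in a kernel .config file.
# Prints "builtin", "module", or "missing".
# $1: path to the kernel configuration file
pciback_mode() {
    config_file=$1
    if grep -q '^CONFIG_XEN_PCIDEV_BACKEND=y$' "$config_file"; then
        echo builtin
    elif grep -q '^CONFIG_XEN_PCIDEV_BACKEND=m$' "$config_file"; then
        echo module
    else
        echo missing
    fi
}

# Example usage, run inside the kernel source tree after the sed step:
#   pciback_mode .config
```

If the helper reports `module` or `missing`, the option was not changed and the resulting kernel would behave like the stock Debian one in this respect.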

Install the kernel and hypervisor

I installed the resulting packages on the target system. Installing Xen creates unnecessary symbolic links in the /boot directory, which need to be removed. Since the xen-upstream-4.2.0.deb package declares no dependencies, the packages Xen requires have to be installed manually.

    $ sudo dpkg -i linux-image-3.2.32_1.0.0_amd64.deb
    $ sudo dpkg -i xen-upstream-4.2.0.deb
    $ sudo su -c "cd /boot; rm xen.gz xen-4.gz xen-4.2.gz xen-syms-4.2.0"
    $ sudo apt-get install libyajl2 liblzo2-2 libaio1

Make Xen the default boot entry.

    $ sudo dpkg-divert --divert /etc/grub.d/08_linux_xen --rename /etc/grub.d/20_linux_xen

Register the Xen services in the system.

    $ sudo update-rc.d xend start 99 2 3 5 stop 99 0 1 6
    $ sudo update-rc.d xencommons start 99 2 3 5 stop 99 0 1 6
    $ sudo update-rc.d xendomains start 99 2 3 5 stop 99 0 1 6
    $ sudo update-rc.d xen-watchdog start 99 2 3 5 stop 99 0 1 6

To hide the forwarded devices from dom0, the xen-pciback driver must be given a list of them. To disable memory ballooning, the amount of memory for dom0 must be specified in advance. To do this, add two lines to /etc/default/grub.

    GRUB_CMDLINE_LINUX_XEN_REPLACE_DEFAULT="xen-pciback.hide=(02:00.0)(02:00.1)(00:1a.0)(00:1d.0)(09:00.0)(0a:00.0)(0b:00.0)(03:00.0)(00:1b.0)"
    GRUB_CMDLINE_XEN_DEFAULT="dom0_mem=15360M"

In addition to the video card, four USB controllers, the network and sound cards, and a number of peripherals were forwarded.
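The `xen-pciback.hide` value is simply the device addresses wrapped in parentheses and concatenated. As an illustration, a small helper could assemble it from a list of addresses such as those in the first column of `lspci` output. The name `pciback_hide_arg` is hypothetical and not part of Xen or GRUB, and the addresses are the ones from this article; on other hardware they will differ.

```shell
#!/bin/sh
# Build the xen-pciback.hide kernel argument from PCI addresses
# given as bus:device.function, one per argument.
pciback_hide_arg() {
    arg="xen-pciback.hide="
    for bdf in "$@"; do
        arg="${arg}(${bdf})"
    done
    printf '%s\n' "$arg"
}

# Addresses taken from this article's system.
pciback_hide_arg 02:00.0 02:00.1 00:1a.0 00:1d.0 09:00.0 0a:00.0 0b:00.0 03:00.0 00:1b.0
```

The printed string can then be pasted into the `GRUB_CMDLINE_LINUX_XEN_REPLACE_DEFAULT` line.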

After that, you need to update the GRUB bootloader configuration file.

    $ sudo update-grub

Since the ASUS P9X79 WS motherboard has two network interfaces, I decided not to set up any network bridges: dom0 and domU have independent network connections.

The following sequence of commands sets the minimum amount of memory for dom0 (thereby finally turning off memory ballooning) and turns off the unneeded network scripts. I also made VNC listen on all IP addresses.

    $ sudo sed -i 's/^XENDOMAINS_SAVE=.*$/XENDOMAINS_SAVE=""/g' /etc/default/xendomains
    $ cd /etc/xen
    $ sudo sed -i 's/^.*.(dom0-min-mem.*)$/(dom0-min-mem 15360)/g' xend-config.sxp
    $ sudo sed -i 's/^.*.(enable-dom0-ballooning.*)$/(enable-dom0-ballooning no)/g' xend-config.sxp
    $ sudo sed -i 's/^(network-script.*)$/#&/g' xend-config.sxp
    $ sudo sed -i 's/^(vif-script.*)$/#&/g' xend-config.sxp
    $ sudo sed -i "s/^.*.(vnc-listen.*)$/(vnc-listen '0.0.0.0')/g" xend-config.sxp

Install Windows

I will not dwell on the issue of installing Windows on a VM environment. Let me just say that the installation must be performed remotely using VNC. NVIDIA Quadro starts working only after installing the drivers. It starts only when the OS is fully loaded. Also, paravirtual drivers were successfully installed [ 10 ].

Among the network devices is the VirtualBox Host-Only Ethernet Adapter. The fact is that I installed VirtualBox in the VM environment (inside the domU). Inside was installed Windows XP. Even with double nesting inside the VM OS, Windows XP worked with acceptable speed.

Domain configuration file

    builder = "hvm"
    memory = 16384
    vcpus = 8
    name = "windows-7"
    uuid = "830460b8-3541-11e2-8560-5404a63ce590"
    disk = ['phy:/dev/storage/windows-7,hda,w']
    boot = 'c'
    pci = ['02:00.0', '02:00.1', '00:1a.0', '00:1d.0',
           '09:00.0', '0a:00.0', '0b:00.0', '03:00.0', '00:1b.0']
    gfx_passthru = 0
    pae = 1
    nx = 1
    videoram = 16
    stdvga = 1
    vnc = 1
    usb = 1
    usbdevice = "tablet"
    localtime = 1
    xen_platform_pci = 1
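Before starting the domU it can help to confirm that every device in the `pci` list is really held by the backend driver rather than by a dom0 driver. The sketch below assumes that a hidden device's `driver` link in sysfs points at a driver named `pciback`; both function names are hypothetical, and the sysfs root is a parameter only so the check can be tried against a test directory.

```shell
#!/bin/sh
# Print the name of the driver a PCI device is bound to, or "none".
# $1: full PCI address with domain, e.g. 0000:02:00.0
# $2: sysfs root (defaults to /sys)
pci_driver_of() {
    link="${2:-/sys}/bus/pci/devices/$1/driver"
    if [ -L "$link" ]; then
        basename "$(readlink "$link")"
    else
        echo none
    fi
}

# Return 0 only if every listed device is bound to pciback.
# $1: sysfs root; remaining arguments: addresses as bus:device.function
check_passthrough_ready() {
    sysfs_root=$1
    shift
    rc=0
    for bdf in "$@"; do
        drv=$(pci_driver_of "0000:$bdf" "$sysfs_root")
        if [ "$drv" != "pciback" ]; then
            echo "$bdf is bound to '$drv', not pciback" >&2
            rc=1
        fi
    done
    return $rc
}

# Example usage with the addresses from the configuration above:
#   check_passthrough_ready /sys 02:00.0 02:00.1 00:1a.0 00:1d.0 \
#       09:00.0 0a:00.0 0b:00.0 03:00.0 00:1b.0
```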

Test results

I should say right away that I did not even try to install Windows on bare hardware, so I have nothing objective to compare against. The whole assessment came down to looking at the Windows Experience Index (WEI) and installing 3DMark 2011. In other words, to be honest, I did no real performance testing.

The WEI is determined by the lowest of the subscores. In my case the bottleneck was the hard disk, because I ran all the experiments on a single old hard drive. Otherwise the results are not bad at all.

The NVIDIA Quadro 4000 is not a gaming card, but Crysis ran briskly.

Comments on Xen

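Since version 4.1, Xen has included the xl toolstack. It does not need xend and is meant to replace xm in the future.

When used manually, xl gave no cause for complaint. However, the xendomains script is clearly not designed to work with it. Running /etc/init.d/xendomains start brought the domU domains up without problems, but calling /etc/init.d/xendomains stop did absolutely nothing. The domU was not shut down automatically, and as a result the whole system hung. A short investigation showed that some of the functions inside xendomains are simply not adapted to xl. In principle, I was prepared to deal with these problems.

The worst thing was that when starting a domU, xl reported that it could not reset the PCI device, namely the video card, via sysfs. For the video card, the reset file in sysfs is simply missing. I am not completely sure, but I suspect this is why the video card often refused to start after the domU was rebooted. Only restarting the entire system helped.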

    libxl: error: libxl_pci.c:1001:libxl__device_pci_reset: The kernel doesn't support reset from sysfs for PCI device 0000:02:00.0
    libxl: error: libxl_pci.c:1001:libxl__device_pci_reset: The kernel doesn't support reset from sysfs for PCI device 0000:02:00.1
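Whether the kernel offers a sysfs reset for a given device can be checked by looking for its `reset` file. A minimal sketch, using a hypothetical helper name and the two device addresses from the messages above:

```shell
#!/bin/sh
# Succeed if the kernel exposes a sysfs reset file for the PCI device.
# $1: full PCI address with domain, e.g. 0000:02:00.0
# $2: sysfs root (defaults to /sys)
has_sysfs_reset() {
    [ -e "${2:-/sys}/bus/pci/devices/$1/reset" ]
}

# Report the state of both functions of the video card.
for dev in 0000:02:00.0 0000:02:00.1; do
    if has_sysfs_reset "$dev"; then
        echo "$dev: reset via sysfs is available"
    else
        echo "$dev: no sysfs reset file"
    fi
done
```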

In xm, resetting PCI devices seems to be implemented differently. At least, I had no problems with the video card when using it. That is why I settled on xm.

Conclusion

It took me five days to implement everything described here. Overall, the system ran very stably: I observed no BSODs or freezes. I hope that in the near future every video card, without exception, will be suitable for forwarding to a VM, since an NVIDIA Quadro can hardly be called a budget option.

List of used sources

1. Forwarding a video card to a virtual machine

2. Forwarding a video card to a guest OS from the Xen hypervisor

3. Forwarding a video card in Xen, from under Ubuntu

4. Xen VGA Passthrough

5. VGA device assignment - KVM

6. NVIDIA Multi-OS

7. Graphics and Virtualization - NVIDIA

8. Compiling Xen From Source

9. Compiling a New Kernel

10. Xen Windows GplPv / Installing

Source: https://habr.com/ru/post/161747/
